- Joined: Apr 14, 2022
- Messages: 790 (0.71/day)
- Location: London, UK

| Processor | AMD Ryzen 7 5800X3D |
|---|---|
| Motherboard | ASUS B550M-Plus WiFi II |
| Cooling | Noctua U12A chromax.black |
| Memory | Corsair Vengeance 32GB 3600MHz |
| Video Card(s) | Palit RTX 4080 GameRock OC |
| Storage | Samsung 970 Evo Plus 1TB + 980 Pro 2TB |
| Display(s) | Acer Nitro XV271UM3B IPS 180Hz |
| Case | Asus Prime AP201 |
| Audio Device(s) | Creative Gigaworks - Razer Blackshark V2 Pro |
| Power Supply | Corsair SF750 |
| Mouse | Razer Viper |
| Keyboard | Asus ROG Falchion |
| Software | Windows 11 64-bit |

The same here.

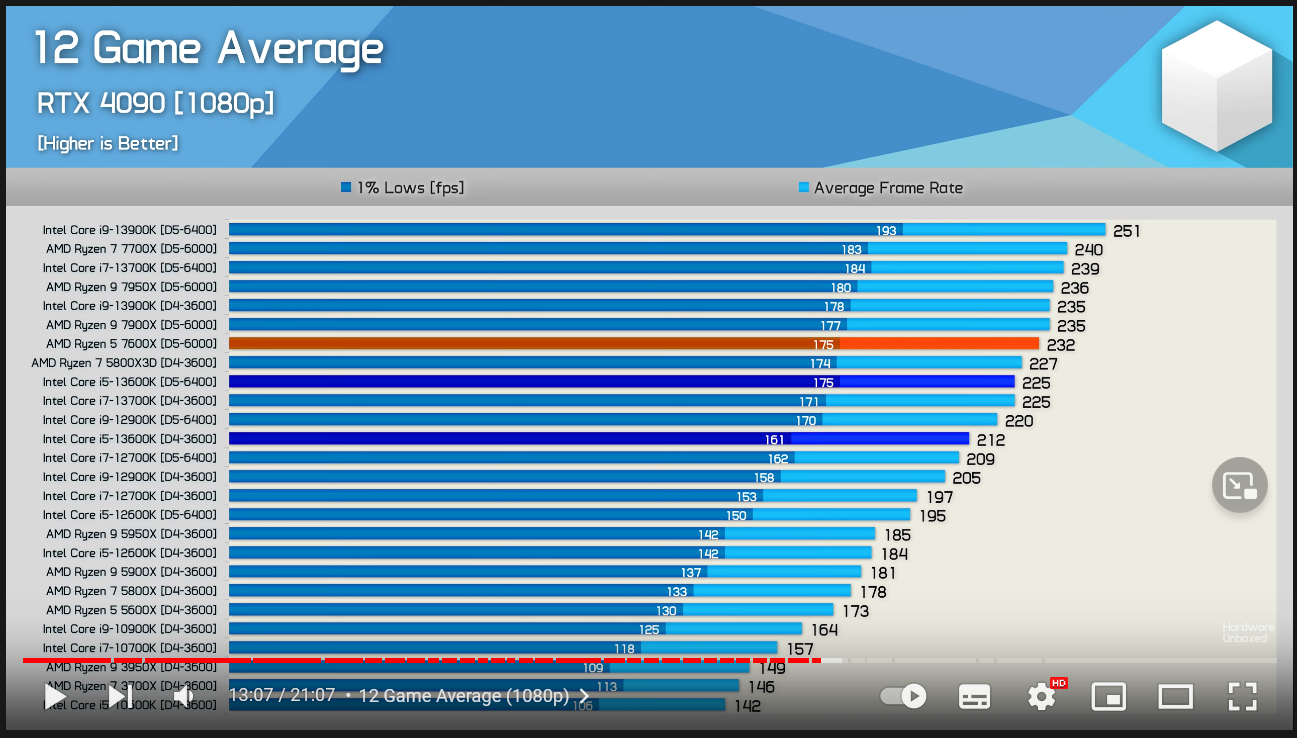

With a 4090, the 7600X is faster.
