- Joined: Nov 11, 2016
- Messages: 3,700 (1.17/day)

| System Name | The de-ploughminator Mk-III |
|---|---|
| Processor | 9800X3D |
| Motherboard | Gigabyte X870E Aorus Master |
| Cooling | DeepCool AK620 |
| Memory | 2x32GB G.Skill 6400 MT/s CL32 |
| Video Card(s) | Asus Astral 5090 LC OC |
| Storage | 4TB Samsung 990 Pro |
| Display(s) | 48" LG OLED C4 |
| Case | Corsair 5000D Air |
| Audio Device(s) | KEF LSX II LT speakers + KEF KC62 Subwoofer |
| Power Supply | Corsair HX1200 |
| Mouse | Razer DeathAdder V3 |
| Keyboard | Razer Huntsman V3 Pro TKL |
| Software | Windows 11 |

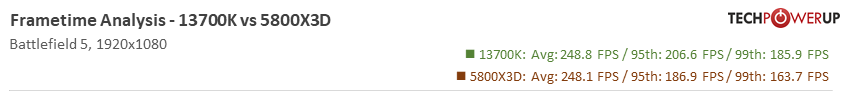

Wow, the frametime consistency of the 13700K is certainly amazing: its 95th and 99th percentile results beat the 5800X3D by 10%, while its average FPS is not much faster.
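For anyone wondering how percentile numbers can differ that much when the averages are close, here is a rough sketch of how these figures can be derived from a frametime log. The function name `fps_summary` and the nearest-rank percentile method are my own assumptions for illustration; the review's capture tool may compute percentiles differently.

```python
def fps_summary(frame_times_ms):
    """Summarize a list of frame times (milliseconds) as FPS figures.

    Hypothetical helper: uses the nearest-rank percentile method, which may
    not match what a given benchmarking tool does.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    ordered = sorted(frame_times_ms)  # fastest frames first, slowest last
    n = len(ordered)

    def percentile_frame_time(p):
        # Nearest rank with integer math: ceil(p * n / 100), at least 1.
        rank = max(1, (p * n + 99) // 100)
        return ordered[rank - 1]

    return {
        # Average FPS = total frames / total time in seconds.
        "avg_fps": 1000.0 * n / sum(ordered),
        # Percentile FPS = FPS equivalent of the p-th percentile frame time,
        # so it reflects the slower frames rather than the typical one.
        "p95_fps": 1000.0 / percentile_frame_time(95),
        "p99_fps": 1000.0 / percentile_frame_time(99),
    }


if __name__ == "__main__":
    # Made-up data: mostly 10 ms frames with a couple of 20 ms spikes.
    sample = [10.0] * 98 + [20.0] * 2
    for name, value in fps_summary(sample).items():
        print(f"{name}: {value:.1f}")
```

A handful of slow frames barely moves the average but pulls the high-percentile figure down a lot, which is why two CPUs can be close on average FPS and still be clearly apart at the 95th and 99th percentiles.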