
| System Name | The Sparing-No-Expense Build |
|---|---|
| Processor | Ryzen 5 5600X |
| Motherboard | Asus ROG Strix X570-E Gaming Wifi II |
| Cooling | Noctua NH-U12S chromax.black |
| Memory | 32GB: 2x16GB Patriot Viper Steel 3600MHz C18 |
| Video Card(s) | NVIDIA RTX 3060 Ti Founders Edition |
| Storage | 500GB 970 Evo Plus NVMe, 2TB Crucial MX500 |
| Display(s) | AOC C24G1 144Hz 24" 1080p Monitor |
| Case | Lian Li O11 Dynamic EVO White |
| Power Supply | Seasonic X-650 Gold PSU (SS-650KM3) |
| Software | Windows 11 Home 64-bit |

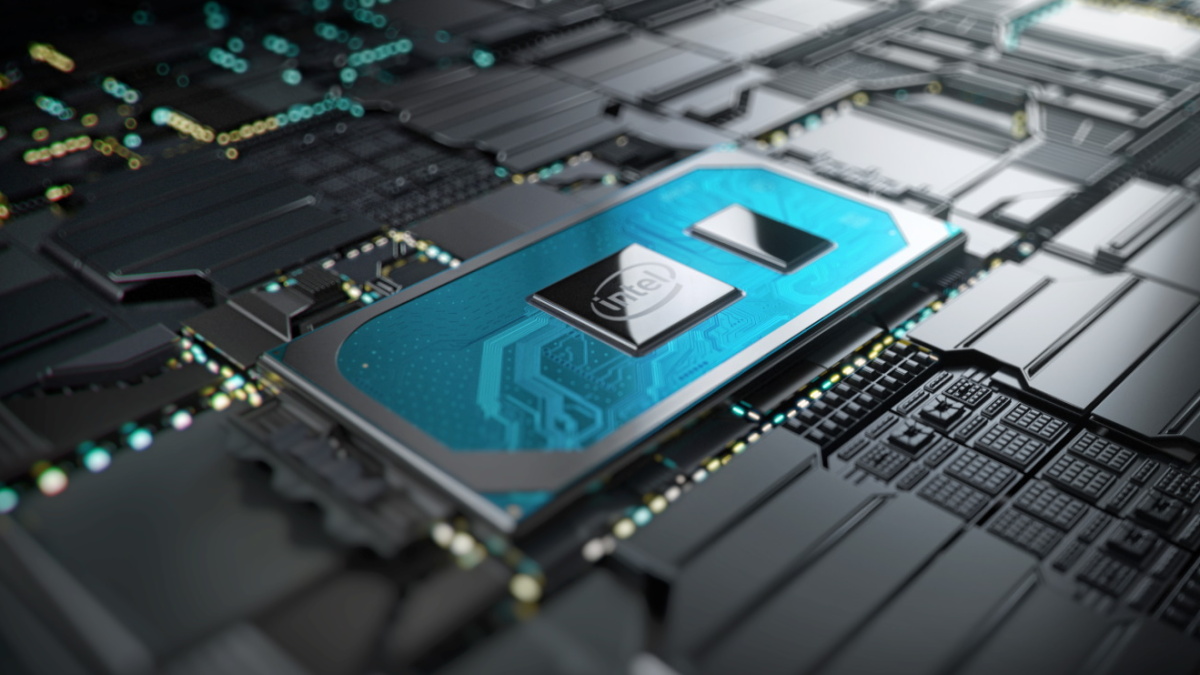

Intel's Desktop TDPs No Longer Useful to Predict CPU Power Consumption

Intel's higher-end desktop CPU TDPs no longer communicate anything useful about the CPUs' power consumption under load.

Lying about power consumption numbers to make your products look good is just despicable.

Thank God I have a 4690K, which means I don't have to deal with this mess.