
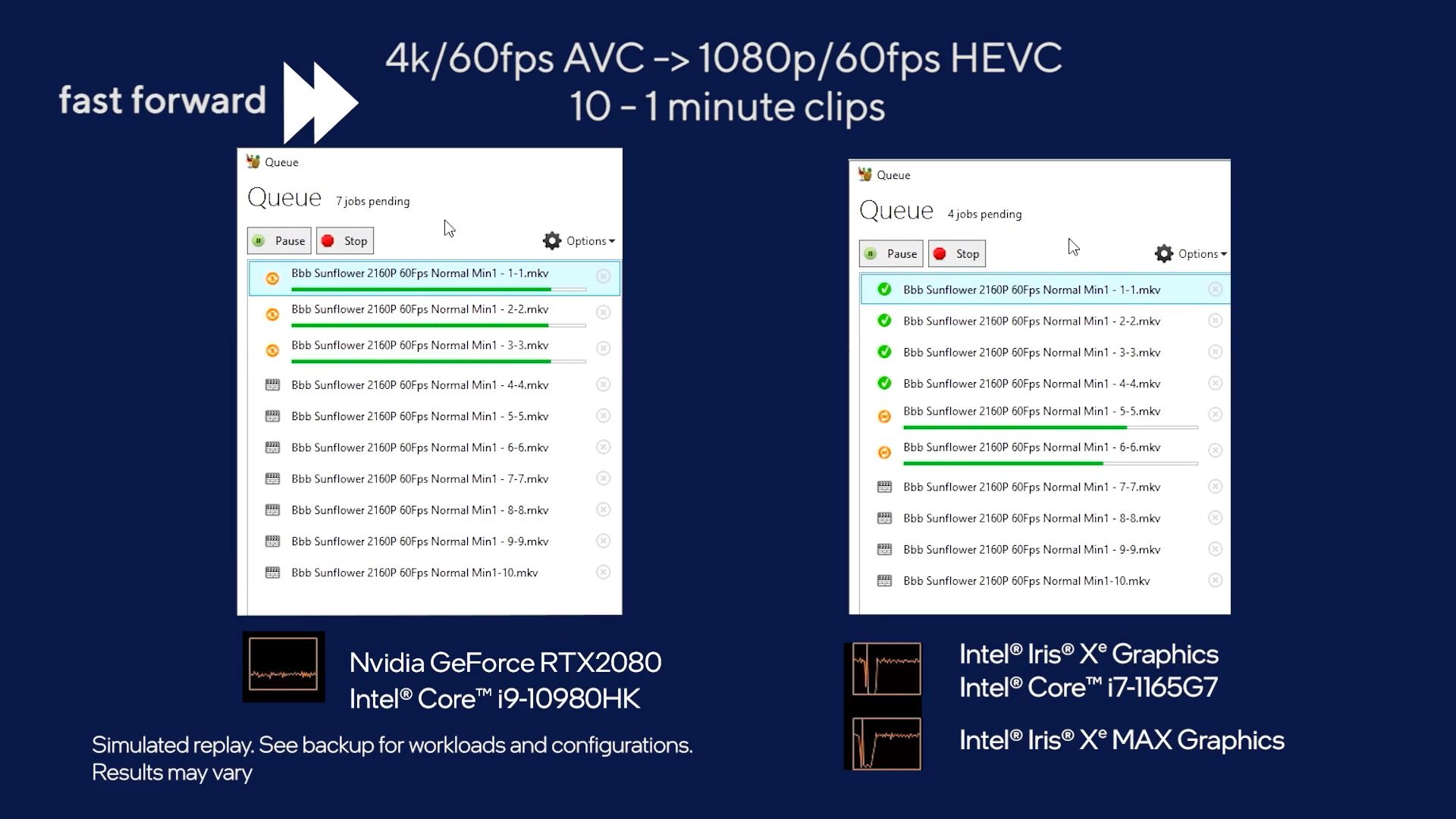

The only novel thing here is their ability to have an iGPU and dGPU link up and work together, which isn't something AMD is really doing currently, although I recall AMD did have a more rudimentary version of it with Crossfire and was revisiting the idea for future MCM GPUs and for heterogeneous computing via Infinity Architecture in general.

Still, it is a small edge (feature-wise) Intel has for now, and if they're able to go further with a SAM-like equivalent, they could potentially squeeze out a bit more performance that way. That said, it'll be a while until they can sufficiently catch up in actual performance, unless AMD or NVIDIA trips up hard.
