That was never the point of ROCm. It facilitates CUDA code translation.

I think most people are too focused on the gaming aspects of this chip. Yes, you can game on it, and its gaming performance is comparable to an MX350/GTX1050, but that's not the main selling point of DG1. You don't even buy a laptop with an MX350 for gaming, do you? You mainly want it to improve content creation. Case in point: the three laptops it launches in. They're not meant for gaming whatsoever.

The combined power of the Iris Xe and Iris Xe Max is nothing to be scoffed at considering the power envelope. FP16 => 8TFLOPS. That's GTX 1650m level.

I don't know if anybody here uses their computer for work, but Gigapixel AI acceleration, video encoding, ... these things really matter for content creators.

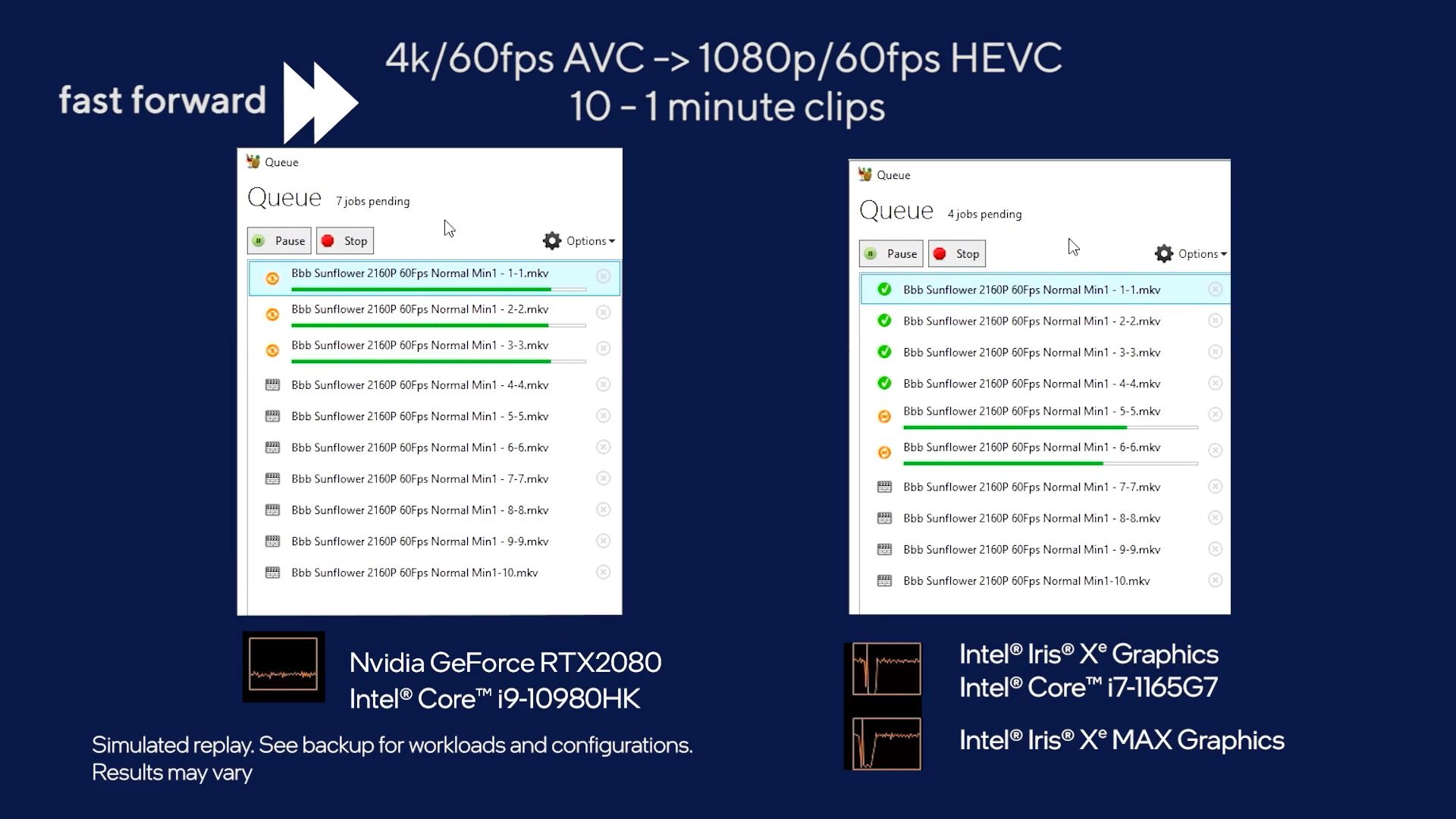

In this multi-stream video encoding test, it beat an RTX2080 & i9-10980HK combination. Can you imagine a puny lightweight Acer Swift 3 laptop beating a >€2000 heavy gaming laptop in any task? I would say mission accomplished.

We're going to have to wait another year before Intel launches their actual gaming product, DG2.

Some slides give a very childish vibe ...
