cdawall

where the hell are my stars

- Joined

- Jul 23, 2006

- Messages

- 27,683 (4.03/day)

- Location

- Houston

| System Name | Moving into the mobile space |
|---|---|
| Processor | 7940HS |
| Motherboard | HP trash |
| Cooling | HP trash |
| Memory | 2x8GB |
| Video Card(s) | 4070 mobile |
| Storage | 512GB+2TB NVMe |
| Display(s) | some 165Hz thing that isn't as nice as it sounded |

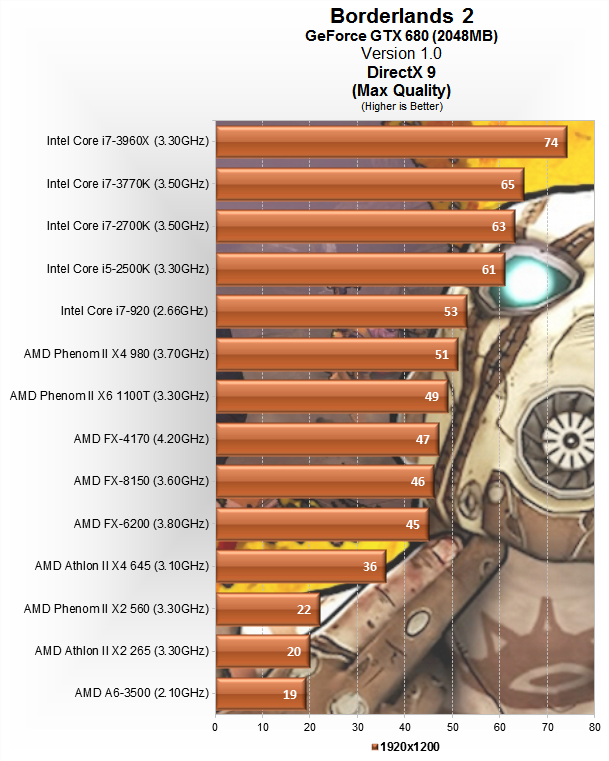

That's the issue: to get the best performance out of it, you need to use this and use that.

Why do you have to compromise when you can get a product that performs well everywhere?

Same reason those CPUs are taking over the server market pretty quickly: they do perform well. I don't use any single application where the performance of an FX CPU is so awful that I cannot happily/quickly complete my task, but there are applications I use where it works exceedingly well (3D CAD with proper rendering tools, and the games I play).