
NVIDIA Announces GeForce Ampere RTX 3000 Series Graphics Cards: Over 10000 CUDA Cores

- Thread starter btarunr

- Start date


Deleted member 185088

Guest

> So I have a PSU 750W bronze, I know I need to upgrade but to which wattage

Why? Gamers Nexus made a piece on how much power a rig actually uses.

Be serious. You need a 1 kW Titanium-class power supply, loaded to 50-60%, where it runs at its highest efficiency (97%) and is whisper quiet or fanless. That covers a 400 W GPU and a 200 W CPU. That is all; everything else in the system is irrelevant unless you have 10 HDDs or 10 fans or something preposterous.
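For anyone who wants to rerun that sizing rule with their own parts, here is a minimal Python sketch. It only encodes the arithmetic from the post above (a 400 W GPU plus a 200 W CPU, loaded to 50-60% of the PSU rating). The helper name `psu_rating_for_load` is hypothetical, and this is not a vendor formula.

```python
def psu_rating_for_load(component_watts, target_load):
    """Return the PSU rating (W) at which the summed draw equals target_load of capacity.

    Hypothetical helper for illustration; target_load is a fraction such as 0.5.
    """
    if not 0 < target_load <= 1:
        raise ValueError("target_load must be in (0, 1]")
    return sum(component_watts) / target_load


# Figures taken from the post: 400 W GPU and 200 W CPU, everything else ignored.
draw_watts = [400, 200]

rating_at_60 = psu_rating_for_load(draw_watts, 0.60)  # smallest PSU in the suggested band
rating_at_50 = psu_rating_for_load(draw_watts, 0.50)  # largest PSU in the suggested band

print(f"600 W draw at 60% load -> {rating_at_60:.0f} W PSU")
print(f"600 W draw at 50% load -> {rating_at_50:.0f} W PSU")
```

Under those assumptions the band works out to roughly 1000 to 1200 W, which is where the 1 kW figure comes from. Plug in measured draw instead of the 400 W and 200 W guesses and the answer shrinks accordingly.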

- Joined

- Sep 15, 2011

- Messages

- 6,474 (1.40/day)

| Processor | Intel® Core™ i7-13700K |

|---|---|

| Motherboard | Gigabyte Z790 Aorus Elite AX |

| Cooling | Noctua NH-D15 |

| Memory | 32GB(2x16) DDR5@6600MHz G-Skill Trident Z5 |

| Video Card(s) | ZOTAC GAMING GeForce RTX 3080 AMP Holo |

| Storage | 2TB SK Platinum P41 SSD + 4TB SanDisk Ultra SSD + 500GB Samsung 840 EVO SSD |

| Display(s) | Acer Predator X34 3440x1440@100Hz G-Sync |

| Case | NZXT PHANTOM410-BK |

| Audio Device(s) | Creative X-Fi Titanium PCIe |

| Power Supply | Corsair 850W |

| Mouse | Logitech Hero G502 SE |

| Software | Windows 11 Pro - 64bit |

| Benchmark Scores | 30FPS in NFS:Rivals |

I love how they spelled "Hotel" with both Latin and Katakana characters

- Joined

- Nov 11, 2016

- Messages

- 3,067 (1.13/day)

| System Name | The de-ploughminator Mk-II |

|---|---|

| Processor | i7 13700KF |

| Motherboard | MSI Z790 Carbon |

| Cooling | ID-Cooling SE-226-XT + Phanteks T30 |

| Memory | 2x16GB G.Skill DDR5 7200Cas34 |

| Video Card(s) | Asus RTX4090 TUF |

| Storage | Kingston KC3000 2TB NVME |

| Display(s) | LG OLED CX48" |

| Case | Corsair 5000D Air |

| Power Supply | Corsair HX850 |

| Mouse | Razor Viper Ultimate |

| Keyboard | Corsair K75 |

| Software | win11 |

> You know...I'll be super pissed if I buy an RTX 3070 and then a few months later Nvidia announces a 3070 with 16GB of VRAM. So I'll just wait for AMD to release their cards as well. They said RDNA 2 cards will be on the market before the new consoles.

Might as well buy the RTX 3080 then. 1GB of GDDR6 used to cost around 12 USD, so adding another 8GB to the 3070 would push it too close to the 3080. Furthermore, AMD won't be able to undercut Ampere pricing without hurting their finances, like when they released the Radeon VII.

Why everyone is so obsessed with VRAM is beyond me. With DLSS 2.0, you effectively only use as much VRAM as 1080p or 1440p requires when playing at 4K. Nvidia is already touting 8K upscaled from 1440p with DLSS 2.0.

Yeah, DLSS just makes your GPU last much longer; even the 2060 6GB can survive AAA games for quite a while thanks to DLSS.
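The memory-cost argument above is simple enough to check in a few lines of Python. The 12 USD per GB figure is the rough number from the post. The 499 and 699 USD launch prices are not stated in the post and are brought in here as assumptions, and the "3070 16GB" is purely hypothetical.

```python
GDDR6_USD_PER_GB = 12   # rough figure quoted in the post
EXTRA_VRAM_GB = 8       # going from 8 GB to 16 GB on a hypothetical RTX 3070 16GB

# Announced launch prices; assumptions for illustration, not from the post.
RTX_3070_USD = 499
RTX_3080_USD = 699

extra_memory_cost = GDDR6_USD_PER_GB * EXTRA_VRAM_GB
hypothetical_3070_16gb = RTX_3070_USD + extra_memory_cost
gap_to_3080 = RTX_3080_USD - hypothetical_3070_16gb

print(f"Extra memory cost:        {extra_memory_cost} USD")
print(f"Hypothetical 3070 16GB:   {hypothetical_3070_16gb} USD")
print(f"Remaining gap to a 3080:  {gap_to_3080} USD")
```

This counts component cost only. Any markup on top of the memory cost would, if anything, narrow the gap further.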


- Joined

- May 2, 2017

- Messages

- 7,762 (3.04/day)

- Location

- Back in Norway

| System Name | Hotbox |

|---|---|

| Processor | AMD Ryzen 7 5800X, 110/95/110, PBO +150Mhz, CO -7,-7,-20(x6), |

| Motherboard | ASRock Phantom Gaming B550 ITX/ax |

| Cooling | LOBO + Laing DDC 1T Plus PWM + Corsair XR5 280mm + 2x Arctic P14 |

| Memory | 32GB G.Skill FlareX 3200c14 @3800c15 |

| Video Card(s) | PowerColor Radeon 6900XT Liquid Devil Ultimate, UC@2250MHz max @~200W |

| Storage | 2TB Adata SX8200 Pro |

| Display(s) | Dell U2711 main, AOC 24P2C secondary |

| Case | SSUPD Meshlicious |

| Audio Device(s) | Optoma Nuforce μDAC 3 |

| Power Supply | Corsair SF750 Platinum |

| Mouse | Logitech G603 |

| Keyboard | Keychron K3/Cooler Master MasterKeys Pro M w/DSA profile caps |

| Software | Windows 10 Pro |

> It's a paid marketing deal.

You keep saying that, as if repeating it makes it true. Do you have any basis on which to back up that claim? Any proof that DF is being paid by Nvidia? Any proof that this is an "advertorial" rather than strictly limited exclusive-access hands-on content? So far all you've served to demonstrate is that you seem unable to differentiate between similar but fundamentally different things.

> Might as well buy the RTX 3080 then. 1GB of GDDR6 used to cost around 12 USD, so adding another 8GB to the 3070 would push it too close to the 3080. Furthermore, AMD won't be able to undercut Ampere pricing without hurting their finances, like when they released the Radeon VII.
>
> Why everyone is so obsessed with VRAM is beyond me. With DLSS 2.0, you effectively only use as much VRAM as 1080p or 1440p requires when playing at 4K. Nvidia is already touting 8K upscaled from 1440p with DLSS 2.0.
>
> Yeah, DLSS just makes your GPU last much longer; even the 2060 6GB can survive AAA games for quite a while thanks to DLSS.

And how many games use DLSS? A handful. Also ray tracing. The more I think about Ampere, the more it looks like a garbage architecture. Nvidia had to increase the number of CUDA cores to ridiculous amounts, TDP is 320W for the 3080, and if you do the math, the card is only 30-35% faster than the 2080 Ti. RDNA 2 in the Xbox Series X is 12 TFLOPS and, according to Digital Foundry, it performs on the level of an RTX 2080 Super. So if AMD managed to achieve that level of performance in a console, a high-end, high-power graphics card could easily beat the RTX 2080 Ti by 30-40%. AMD surely won't cheap out on the amount of VRAM as Nvidia does. Worst case scenario, I expect AMD to release their top card for $599, with performance between the RTX 3070 and 3080, 12GB of VRAM and TDP < 300W.

- Joined

- Nov 11, 2016

- Messages

- 3,067 (1.13/day)

| System Name | The de-ploughminator Mk-II |

|---|---|

| Processor | i7 13700KF |

| Motherboard | MSI Z790 Carbon |

| Cooling | ID-Cooling SE-226-XT + Phanteks T30 |

| Memory | 2x16GB G.Skill DDR5 7200Cas34 |

| Video Card(s) | Asus RTX4090 TUF |

| Storage | Kingston KC3000 2TB NVME |

| Display(s) | LG OLED CX48" |

| Case | Corsair 5000D Air |

| Power Supply | Corsair HX850 |

| Mouse | Razor Viper Ultimate |

| Keyboard | Corsair K75 |

| Software | win11 |

> And how many games use DLSS? A handful. Also ray tracing. The more I think about Ampere, the more it looks like a garbage architecture. Nvidia had to increase the number of CUDA cores to ridiculous amounts, TDP is 320W for the 3080, and if you do the math, the card is only 30-35% faster than the 2080 Ti. RDNA 2 in the Xbox Series X is 12 TFLOPS and, according to Digital Foundry, it performs on the level of an RTX 2080 Super. So if AMD managed to achieve that level of performance in a console, a high-end, high-power graphics card could easily beat the RTX 2080 Ti by 30-40%. AMD surely won't cheap out on the amount of VRAM as Nvidia does. Worst case scenario, I expect AMD to release their top card for $599, with performance between the RTX 3070 and 3080, 12GB of VRAM and TDP < 300W.

So you are looking into future proofing with extra VRAM, but disregard DLSS as future-proof because it "currently" isn't supported by many games? What kinda red-tinted logic is that?

How many games currently need more than 8GB of VRAM at 4K? 1 or 2: one is a flight sim and the other is a poorly ported game.

How many DLSS 2.0 games are there atm? More than 7, with more to come in the very near future, and all of them are AAA games.

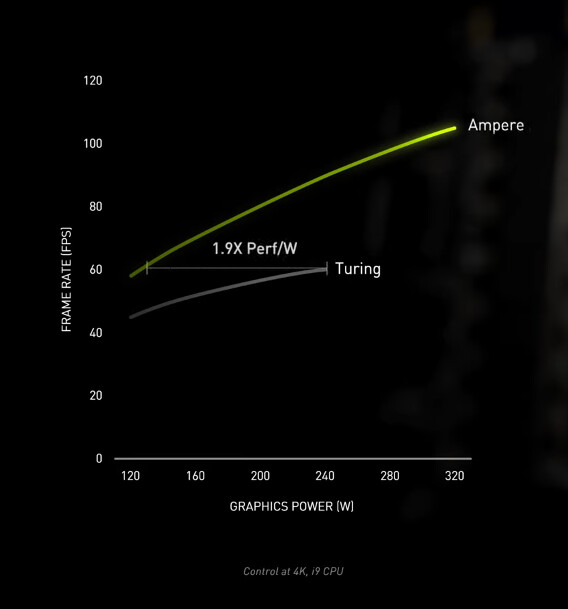

Yeah, Ampere doesn't impress me from an efficiency-gain standpoint, but the Samsung 8N node just scales so well with power that capping the TDP would waste its potential. I'm sure many 5700XT owners would understand this argument.

From the look of this, I bet pushing even more power would net a meaningful performance gain, but it would just be ridiculous. But hey, at least you can have a free heater in the winter.

And if you cap the FPS, which I think would give a better gaming experience than letting the FPS run wild, the 3080 would match the 2080 Ti's performance at 1/2 the TGP, which is 160W.
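To put a number on the capped-FPS point, here is a small Python sketch of the performance-per-watt comparison at equal frame rate. It assumes the 3080 really does match the 2080 Ti at 160 W, as the post claims, and uses the 250 W TDP figure quoted for the 2080 Ti elsewhere in this thread. The function name is hypothetical.

```python
def perf_per_watt_gain(old_watts, new_watts, perf_ratio=1.0):
    """Perf/W of the new card relative to the old one.

    perf_ratio is new performance divided by old performance (1.0 = same FPS).
    """
    return perf_ratio * old_watts / new_watts


RTX_2080_TI_WATTS = 250          # TDP figure used elsewhere in this thread
RTX_3080_CAPPED_WATTS = 320 / 2  # "1/2 the TGP" from the post

gain = perf_per_watt_gain(RTX_2080_TI_WATTS, RTX_3080_CAPPED_WATTS)
print(f"Same FPS at {RTX_3080_CAPPED_WATTS:.0f} W vs {RTX_2080_TI_WATTS} W "
      f"-> {gain:.2f}x perf/W")
```

Efficiency figures depend heavily on where along the power curve you measure, so this one only describes the capped scenario, not the card at its stock power limit.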

- Joined

- Jun 10, 2014

- Messages

- 2,902 (0.80/day)

| Processor | AMD Ryzen 9 5900X ||| Intel Core i7-3930K |

|---|---|

| Motherboard | ASUS ProArt B550-CREATOR ||| Asus P9X79 WS |

| Cooling | Noctua NH-U14S ||| Be Quiet Pure Rock |

| Memory | Crucial 2 x 16 GB 3200 MHz ||| Corsair 8 x 8 GB 1333 MHz |

| Video Card(s) | MSI GTX 1060 3GB ||| MSI GTX 680 4GB |

| Storage | Samsung 970 PRO 512 GB + 1 TB ||| Intel 545s 512 GB + 256 GB |

| Display(s) | Asus ROG Swift PG278QR 27" ||| Eizo EV2416W 24" |

| Case | Fractal Design Define 7 XL x 2 |

| Audio Device(s) | Cambridge Audio DacMagic Plus |

| Power Supply | Seasonic Focus PX-850 x 2 |

| Mouse | Razer Abyssus |

| Keyboard | CM Storm QuickFire XT |

| Software | Ubuntu |

> The more I think about Ampere, the more it looks like a garbage architecture.

So Nvidia just announced their largest architectural improvement in ages, and you think it looks like garbage. You're trolling, right? By your standards, what is not a garbage architecture then?

> Nvidia had to increase the number of CUDA cores to ridiculous amounts, TDP is 320W for the 3080, and if you do the math, the card is only 30-35% faster than the 2080 Ti.

I would like to see that math.

Is it the same kind of math that predicted Turing to perform at most 10% better than Pascal?

> AMD surely won't cheap out on the amount of VRAM as Nvidia does. Worst case scenario, I expect AMD to release their top card for $599, with performance between the RTX 3070 and 3080, 12GB of VRAM and TDP < 300W.

It's funny that you would know that. I honestly don't know precisely where it will end up, and I'm not sure AMD even knows yet.

Historically, AMD has added extra VRAM to lure buyers into thinking a product is more future proof, especially when the competition is more attractive.

- Joined

- May 15, 2020

- Messages

- 697 (0.48/day)

- Location

- France

| System Name | Home |

|---|---|

| Processor | Ryzen 3600X |

| Motherboard | MSI Tomahawk 450 MAX |

| Cooling | Noctua NH-U14S |

| Memory | 16GB Crucial Ballistix 3600 MHz DDR4 CAS 16 |

| Video Card(s) | MSI RX 5700XT EVOKE OC |

| Storage | Samsung 970 PRO 512 GB |

| Display(s) | ASUS VA326HR + MSI Optix G24C4 |

| Case | MSI - MAG Forge 100M |

| Power Supply | Aerocool Lux RGB M 650W |

> It might very well be garbage if they're selling a busted chip (3090) for 1500 euros.

Disabling parts of most chips is a standard industry way of improving yields, and all manufacturers have been using it for a long time.

All that matters is that the GPU is giving you the amount of shaders, memory, etc. that is written on the box, the rest is irrelevant.

- Joined

- Nov 11, 2016

- Messages

- 3,067 (1.13/day)

| System Name | The de-ploughminator Mk-II |

|---|---|

| Processor | i7 13700KF |

| Motherboard | MSI Z790 Carbon |

| Cooling | ID-Cooling SE-226-XT + Phanteks T30 |

| Memory | 2x16GB G.Skill DDR5 7200Cas34 |

| Video Card(s) | Asus RTX4090 TUF |

| Storage | Kingston KC3000 2TB NVME |

| Display(s) | LG OLED CX48" |

| Case | Corsair 5000D Air |

| Power Supply | Corsair HX850 |

| Mouse | Razor Viper Ultimate |

| Keyboard | Corsair K75 |

| Software | win11 |

More VRAM /= more Future proof, otherwise Radeon VII should have beaten every GPU here

- Joined

- May 2, 2017

- Messages

- 7,762 (3.04/day)

- Location

- Back in Norway

| System Name | Hotbox |

|---|---|

| Processor | AMD Ryzen 7 5800X, 110/95/110, PBO +150Mhz, CO -7,-7,-20(x6), |

| Motherboard | ASRock Phantom Gaming B550 ITX/ax |

| Cooling | LOBO + Laing DDC 1T Plus PWM + Corsair XR5 280mm + 2x Arctic P14 |

| Memory | 32GB G.Skill FlareX 3200c14 @3800c15 |

| Video Card(s) | PowerColor Radeon 6900XT Liquid Devil Ultimate, UC@2250MHz max @~200W |

| Storage | 2TB Adata SX8200 Pro |

| Display(s) | Dell U2711 main, AOC 24P2C secondary |

| Case | SSUPD Meshlicious |

| Audio Device(s) | Optoma Nuforce μDAC 3 |

| Power Supply | Corsair SF750 Platinum |

| Mouse | Logitech G603 |

| Keyboard | Keychron K3/Cooler Master MasterKeys Pro M w/DSA profile caps |

| Software | Windows 10 Pro |

> Is the RTX 3090 a full chip, or does it have some parts/shaders disabled?
>
> If it does, basically Nvidia is selling a broken chip for 1500 EUR.

What on earth are you going on about? This is the silliest thing I've seen in all of these Ampere threads, and that is saying something. Cut-down chips are in no way whatsoever broken. The 2080 Ti was a cut-down chip. Did you raise the same point about that? Is the Radeon 5700 "broken", or the 5600 XT? Disabling defective parts of chips to improve yields is entirely unproblematic, has been the standard mode of operations for chipmakers for many years, and is a godsend for those of us who value reasonable component prices, as without it they would likely double.

- Joined

- Mar 10, 2010

- Messages

- 11,878 (2.30/day)

- Location

- Manchester uk

| System Name | RyzenGtEvo/ Asus strix scar II |

|---|---|

| Processor | Amd R5 5900X/ Intel 8750H |

| Motherboard | Crosshair hero8 impact/Asus |

| Cooling | 360EK extreme rad+ 360$EK slim all push, cpu ek suprim Gpu full cover all EK |

| Memory | Corsair Vengeance Rgb pro 3600cas14 16Gb in four sticks./16Gb/16GB |

| Video Card(s) | Powercolour RX7900XT Reference/Rtx 2060 |

| Storage | Silicon power 2TB nvme/8Tb external/1Tb samsung Evo nvme 2Tb sata ssd/1Tb nvme |

| Display(s) | Samsung UAE28"850R 4k freesync.dell shiter |

| Case | Lianli 011 dynamic/strix scar2 |

| Audio Device(s) | Xfi creative 7.1 on board ,Yamaha dts av setup, corsair void pro headset |

| Power Supply | corsair 1200Hxi/Asus stock |

| Mouse | Roccat Kova/ Logitech G wireless |

| Keyboard | Roccat Aimo 120 |

| VR HMD | Oculus rift |

| Software | Win 10 Pro |

| Benchmark Scores | 8726 vega 3dmark timespy/ laptop Timespy 6506 |

> More VRAM /= more Future proof, otherwise Radeon VII should have beaten every GPU here

Your answer to a misconception is no more true than his misconception.

More VRAM could extend a card's useful life; not performance per se, though marginally that can be the case.

The RVII will remain pliable for quite a while yet.

You are missing my point here. I understand your point of view about disabling chips.

I would have raised the same complaint about the 2080 Ti, if I were interested in it.

Nvidia designed a chip the way they did, and when they built it, parts of it didn't work out. So basically it's busted silicon, an imperfect chip they are selling for 1500 euros. If I pay 1500 euros, at least give me the full working chip as it was designed.

I paid 1300 euros for a liquid-cooled Vega Frontier Edition, but I got a full Vega chip as it was intended, with fully working shaders, paired with 16GB of HBM memory, and watercooling.

The 3090 is a "garbage chip" they sell to get some money back, while they keep the fully working chips and sell them for 5000 euros in a Quadro or a Titan card.

Compared to that, the full-chip Vega I paid 1300 euros for is a good deal.

My point is I want the full chip they designed, not an imperfect version sold for lots of money.

While you and/or others might see a good deal in buying a "busted" 3090 chip for 1500 euros, I on the other hand do not.

And you are mistaken, my friend. Cut-down chips are indeed broken. A part of them couldn't be built as intended, hence broken, so they scrapped that part and disabled it. It's wasted chip space that could have held more transistors, making the whole chip better overall.

I don't find it funny to pay 1500 EUR for a "broken chip".

And the fact is, we don't even know if the 3090 is a full chip or not. I have asked about it, but haven't gotten any answer until now. You seem to believe that the 3090 is indeed not a full chip?


- Joined

- Dec 22, 2011

- Messages

- 3,890 (0.86/day)

| Processor | AMD Ryzen 7 3700X |

|---|---|

| Motherboard | MSI MAG B550 TOMAHAWK |

| Cooling | AMD Wraith Prism |

| Memory | Team Group Dark Pro 8Pack Edition 3600Mhz CL16 |

| Video Card(s) | NVIDIA GeForce RTX 3080 FE |

| Storage | Kingston A2000 1TB + Seagate HDD workhorse |

| Display(s) | Samsung 50" QN94A Neo QLED |

| Case | Antec 1200 |

| Power Supply | Seasonic Focus GX-850 |

| Mouse | Razer Deathadder Chroma |

| Keyboard | Logitech UltraX |

| Software | Windows 11 |

> More VRAM /= more Future proof, otherwise Radeon VII should have beaten every GPU here

Yeah I mean does 8GB on a RX 570 make it magically "future proof"? I guess for some it does

- Joined

- Jun 10, 2014

- Messages

- 2,902 (0.80/day)

| Processor | AMD Ryzen 9 5900X ||| Intel Core i7-3930K |

|---|---|

| Motherboard | ASUS ProArt B550-CREATOR ||| Asus P9X79 WS |

| Cooling | Noctua NH-U14S ||| Be Quiet Pure Rock |

| Memory | Crucial 2 x 16 GB 3200 MHz ||| Corsair 8 x 8 GB 1333 MHz |

| Video Card(s) | MSI GTX 1060 3GB ||| MSI GTX 680 4GB |

| Storage | Samsung 970 PRO 512 GB + 1 TB ||| Intel 545s 512 GB + 256 GB |

| Display(s) | Asus ROG Swift PG278QR 27" ||| Eizo EV2416W 24" |

| Case | Fractal Design Define 7 XL x 2 |

| Audio Device(s) | Cambridge Audio DacMagic Plus |

| Power Supply | Seasonic Focus PX-850 x 2 |

| Mouse | Razer Abyssus |

| Keyboard | CM Storm QuickFire XT |

| Software | Ubuntu |

> Your answer to a misconception is no more true than his misconception.
>
> More VRAM could extend a card's useful life; not performance per se, though marginally that can be the case.
>
> The RVII will remain pliable for quite a while yet.

If anything, it shows that VRAM is not a bottleneck.

Your claim that more VRAM can extend a card's useful life has been used as an argument for years, yet it has still to happen. The reality is that unless the usage pattern in games changes significantly, you're not going to get any future proofing out of it, because in order to use more VRAM for higher details, you also need more bandwidth to deliver that data and more computational power to utilize it.

More VRAM mostly makes sense for various pro/semi-pro uses, which is why I've said I prefer extra VRAM to be an option for AIBs instead of mandated.

- Joined

- Mar 10, 2010

- Messages

- 11,878 (2.30/day)

- Location

- Manchester uk

| System Name | RyzenGtEvo/ Asus strix scar II |

|---|---|

| Processor | Amd R5 5900X/ Intel 8750H |

| Motherboard | Crosshair hero8 impact/Asus |

| Cooling | 360EK extreme rad+ 360$EK slim all push, cpu ek suprim Gpu full cover all EK |

| Memory | Corsair Vengeance Rgb pro 3600cas14 16Gb in four sticks./16Gb/16GB |

| Video Card(s) | Powercolour RX7900XT Reference/Rtx 2060 |

| Storage | Silicon power 2TB nvme/8Tb external/1Tb samsung Evo nvme 2Tb sata ssd/1Tb nvme |

| Display(s) | Samsung UAE28"850R 4k freesync.dell shiter |

| Case | Lianli 011 dynamic/strix scar2 |

| Audio Device(s) | Xfi creative 7.1 on board ,Yamaha dts av setup, corsair void pro headset |

| Power Supply | corsair 1200Hxi/Asus stock |

| Mouse | Roccat Kova/ Logitech G wireless |

| Keyboard | Roccat Aimo 120 |

| VR HMD | Oculus rift |

| Software | Win 10 Pro |

| Benchmark Scores | 8726 vega 3dmark timespy/ laptop Timespy 6506 |

> Yeah I mean does 8GB on a RX 570 make it magically "future proof"? I guess for some it does

So five years++ of acceptable 1080p mainstream gaming is not proof of that to you? No point debating it here; it's not on topic, but I disagree with you.

@effikan see Polaris, you're wrong, pal.

We'll have to just disagree. You can retort, but this is off topic, so I won't be continuing on this line.

- Joined

- Dec 22, 2011

- Messages

- 3,890 (0.86/day)

| Processor | AMD Ryzen 7 3700X |

|---|---|

| Motherboard | MSI MAG B550 TOMAHAWK |

| Cooling | AMD Wraith Prism |

| Memory | Team Group Dark Pro 8Pack Edition 3600Mhz CL16 |

| Video Card(s) | NVIDIA GeForce RTX 3080 FE |

| Storage | Kingston A2000 1TB + Seagate HDD workhorse |

| Display(s) | Samsung 50" QN94A Neo QLED |

| Case | Antec 1200 |

| Power Supply | Seasonic Focus GX-850 |

| Mouse | Razer Deathadder Chroma |

| Keyboard | Logitech UltraX |

| Software | Windows 11 |

> What on earth are you going on about? This is the silliest thing I've seen in all of these Ampere threads, and that is saying something. Cut-down chips are in no way whatsoever broken. The 2080 Ti was a cut-down chip. Did you raise the same point about that? Is the Radeon 5700 "broken", or the 5600 XT? Disabling defective parts of chips to improve yields is entirely unproblematic, has been the standard mode of operations for chipmakers for many years, and is a godsend for those of us who value reasonable component prices, as without it they would likely double.

I for one wouldn't mind if my cut down garbage GPU performed like a 3090.

- Joined

- Mar 18, 2008

- Messages

- 5,400 (0.92/day)

- Location

- Australia

| System Name | Night Rider | Mini LAN PC | Workhorse |

|---|---|

| Processor | AMD R7 5800X3D | Ryzen 1600X | i7 970 |

| Motherboard | MSi AM4 Pro Carbon | GA- | Gigabyte EX58-UD5 |

| Cooling | Noctua U9S Twin Fan| Stock Cooler, Copper Core)| Big shairkan B |

| Memory | 2x8GB DDR4 G.Skill Ripjaws 3600MHz| 2x8GB Corsair 3000 | 6x2GB DDR3 1300 Corsair |

| Video Card(s) | MSI AMD 6750XT | 6500XT | MSI RX 580 8GB |

| Storage | 1TB WD Black NVME / 250GB SSD /2TB WD Black | 500GB SSD WD, 2x1TB, 1x750 | WD 500 SSD/Seagate 320 |

| Display(s) | LG 27" 1440P| Samsung 20" S20C300L/DELL 15" | 22" DELL/19"DELL |

| Case | LIAN LI PC-18 | Mini ATX Case (custom) | Atrix C4 9001 |

| Audio Device(s) | Onboard | Onbaord | Onboard |

| Power Supply | Silverstone 850 | Silverstone Mini 450W | Corsair CX-750 |

| Mouse | Coolermaster Pro | Rapoo V900 | Gigabyte 6850X |

| Keyboard | MAX Keyboard Nighthawk X8 | Creative Fatal1ty eluminx | Some POS Logitech |

| Software | Windows 10 Pro 64 | Windows 10 Pro 64 | Windows 7 Pro 64/Windows 10 Home |

If the performance is true then I'm more excited about the 2660 Ti, if that's what they would call it?

- Joined

- May 2, 2017

- Messages

- 7,762 (3.04/day)

- Location

- Back in Norway

| System Name | Hotbox |

|---|---|

| Processor | AMD Ryzen 7 5800X, 110/95/110, PBO +150Mhz, CO -7,-7,-20(x6), |

| Motherboard | ASRock Phantom Gaming B550 ITX/ax |

| Cooling | LOBO + Laing DDC 1T Plus PWM + Corsair XR5 280mm + 2x Arctic P14 |

| Memory | 32GB G.Skill FlareX 3200c14 @3800c15 |

| Video Card(s) | PowerColor Radeon 6900XT Liquid Devil Ultimate, UC@2250MHz max @~200W |

| Storage | 2TB Adata SX8200 Pro |

| Display(s) | Dell U2711 main, AOC 24P2C secondary |

| Case | SSUPD Meshlicious |

| Audio Device(s) | Optoma Nuforce μDAC 3 |

| Power Supply | Corsair SF750 Platinum |

| Mouse | Logitech G603 |

| Keyboard | Keychron K3/Cooler Master MasterKeys Pro M w/DSA profile caps |

| Software | Windows 10 Pro |

> You are missing my point here. I understand your point of view about disabling chips.
>
> I would have raised the same complaint about the 2080 Ti, if I were interested in it.
>
> Nvidia designed a chip the way they did, and when they built it, parts of it didn't work out. So basically it's busted silicon, an imperfect chip they are selling for 1500 euros. If I pay 1500 euros, at least give me the full working chip as it was designed.
>
> I paid 1300 euros for a liquid-cooled Vega Frontier Edition, but I got a full Vega chip as it was intended, with fully working shaders, paired with 16GB of HBM memory, and watercooling.
>
> The 3090 is a "garbage chip" they sell to get some money back, while they keep the fully working chips and sell them for 5000 euros in a Quadro or a Titan card.
>
> Compared to that, the full-chip Vega I paid 1300 euros for is a good deal.
>
> My point is I want the full chip they designed, not an imperfect version sold for lots of money.
>
> While you and/or others might see a good deal in buying a "busted" 3090 chip for 1500 euros, I on the other hand do not.
>
> And you are mistaken, my friend. Cut-down chips are indeed broken. A part of them couldn't be built as intended, hence broken, so they scrapped that part and disabled it. It's wasted chip space that could have held more transistors, making the whole chip better overall.
>
> I don't find it funny to pay 1500 EUR for a "broken chip".
>
> And the fact is, we don't even know if the 3090 is a full chip or not. I have asked about it, but haven't gotten any answer until now. You seem to believe that the 3090 is indeed not a full chip?

This screed is beyond ridiculous.

Firstly, it clearly demonstrates that you don't understand how defects in silicon happen. They are an unavoidable consequence of manufacturing - there will always be defects. There is nothing Nvidia can do to alleviate this. Sure, designing bigger chips increases the likelihood of them having some defect or other, but calling them "broken" is ridiculous. Are the i7-10700 or the Ryzen 7 3700X "broken" just because they couldn't reach the power/frequency bin required to be designated as 10700K or 3800X? Obviously not. And just like defects, differing clock and power behaviours of different chips across the same wafer is ultimately random. It can be controlled for somewhat and mitigated, but never removed entirely.

Secondly, unless having the full chip delivers noticeably more performance, what value does it have to you if your chip is fully enabled? The Vega 64 Water Cooled edition barely outperformed the V64 or the V56, yet cost 2x more. That is just silly. I mean, you're welcome to your delusions, but don't go pushing them on others here. Cut-down chips are a fantastic way of getting better yields out of intrinsically imperfect manufacturing methods, allowing for far better products to reach end users.

Thirdly, many (if not most!) cut-down chips aren't even defective, they are cut down to provide silicon for faster-selling lower-tier SKUs. On a mature process even with big dice the defect rate is tiny, meaning that after a while, most cut-down chips will be made from fully working silicon simply because there is no more defective silicon to use. Sure, some will always be defective, as I said above, but mostly this comes down to product segmentation.
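The link between die size and defects can be illustrated with the simple Poisson yield model, Y = exp(-A * D). To be clear, this is a textbook approximation brought in here for illustration, not something from the post or from Nvidia, and the die areas and defect density below are made-up numbers.

```python
import math


def expected_defects(die_area_mm2, defects_per_cm2):
    """Mean number of defects landing on one die (area converted from mm^2 to cm^2)."""
    return (die_area_mm2 / 100.0) * defects_per_cm2


def defect_free_fraction(die_area_mm2, defects_per_cm2):
    """Poisson yield: probability that a die has zero defects."""
    return math.exp(-expected_defects(die_area_mm2, defects_per_cm2))


def single_defect_fraction(die_area_mm2, defects_per_cm2):
    """Probability of exactly one defect. Such dies are candidates for a cut-down
    part, but only if the defect sits in a unit that can be disabled."""
    lam = expected_defects(die_area_mm2, defects_per_cm2)
    return lam * math.exp(-lam)


DEFECTS_PER_CM2 = 0.1  # hypothetical defect density, for illustration only

for area_mm2 in (200, 600):  # hypothetical small and large dies
    clean = defect_free_fraction(area_mm2, DEFECTS_PER_CM2)
    one = single_defect_fraction(area_mm2, DEFECTS_PER_CM2)
    print(f"{area_mm2} mm^2: {clean:.1%} defect-free, {one:.1%} with exactly one defect")
```

With the same defect density, the larger die has a noticeably lower defect-free fraction and a larger share of single-defect dies, which is the pool that cut-down SKUs can draw from. Lowering `DEFECTS_PER_CM2` to mimic a maturing process shrinks that pool, in line with the third point above.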

> So Nvidia just announced their largest architectural improvement in ages, and you think it looks like garbage. You're trolling, right? By your standards, what is not a garbage architecture then?
>
> I would like to see that math.
>
> Is it the same kind of math that predicted Turing to perform at most 10% better than Pascal?
>
> It's funny that you would know that. I honestly don't know precisely where it will end up, and I'm not sure AMD even knows yet.
>
> Historically, AMD has added extra VRAM to lure buyers into thinking a product is more future proof, especially when the competition is more attractive.

LOL. And you actually believe what Jensen said in that reveal? He also said 2 years ago that the RTX 2080 Ti gives 50% better performance than the 1080 Ti. In reality, it turned out to be more like 10-15%. So it's not hard to beat your previous (Turing) architecture when that brought minimal improvements to performance, RTX excluded ofc. The RTX 2080 achieves around 45fps in Shadow of the Tomb Raider at 4K with the same settings Digital Foundry used in their video. They say the RTX 3080 is 80% faster, so simple math says that is around 80fps. The RTX 2080 Ti achieves around 60fps (source: Guru3D), so the performance difference between the RTX 2080 Ti and the 3080 is 30-35% based on Tomb Raider. RTX 2080 Ti: 12nm manufacturing process, 250W TDP, 13.5 TFLOPS. RTX 3080: 8nm manufacturing process, 320W TDP, 30 TFLOPS. And only a 30-35% performance difference from this power-hungry GPU with double the number of CUDA cores. Yes, I would call Ampere catastrophically ineffective garbage.
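Since the math was asked for, the Shadow of the Tomb Raider estimate in this post can be written out as a short Python sketch. All three inputs are the approximate figures quoted in the post (about 45 fps for the RTX 2080, an 80% uplift for the 3080, about 60 fps for the 2080 Ti). Nothing here is measured, and the variable names are my own.

```python
# Approximate inputs as quoted in the post (4K, Shadow of the Tomb Raider).
RTX_2080_FPS = 45.0            # "around 45fps"
RTX_3080_UPLIFT_VS_2080 = 0.80 # "80% faster" per the Digital Foundry video
RTX_2080_TI_FPS = 60.0         # "around 60fps", attributed to Guru3D

# Scale the 2080 result by the claimed uplift to estimate the 3080.
rtx_3080_fps = RTX_2080_FPS * (1 + RTX_3080_UPLIFT_VS_2080)

# Relative lead of the estimated 3080 over the 2080 Ti.
lead_over_2080_ti = rtx_3080_fps / RTX_2080_TI_FPS - 1

print(f"Estimated RTX 3080: {rtx_3080_fps:.0f} fps")
print(f"Lead over RTX 2080 Ti: {lead_over_2080_ti:.0%}")
```

The result lands at the top of the quoted 30-35% range. It rests on a single game and on mixing numbers from two different test setups, so small changes in either fps input move the answer by several points.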

Also, where the F is Jensen pulling that data about Ampere having 1.9 times the performance per watt of Turing? It would mean that an RTX 3080 at 250W TDP should have 90% higher performance than the RTX 2080 Ti. But no. It has a 320W TDP and 30-35% higher performance. So the power efficiency of Ampere is only minimally better watt for watt. The claim about 1.9x efficiency is a straight-out LIE.

I wonder if Jensen believes that bullshit coming out of his mouth?
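The watt-for-watt comparison above can be reproduced with a few lines of Python. It uses only the figures from the post (250 W and 320 W TDP, a 30-35% performance lead) and compares both cards at their full rated power. The function name is hypothetical.

```python
TURING_WATTS = 250  # RTX 2080 Ti TDP, as quoted in the post
AMPERE_WATTS = 320  # RTX 3080 TDP, as quoted in the post


def efficiency_ratio(perf_ratio, new_watts=AMPERE_WATTS, old_watts=TURING_WATTS):
    """Perf/W of the new card relative to the old one, both at rated power."""
    return perf_ratio / (new_watts / old_watts)


# The poster's estimated lead of 30-35% at stock power limits.
for lead in (0.30, 0.35):
    print(f"{lead:.0%} faster at {AMPERE_WATTS} W -> "
          f"{efficiency_ratio(1 + lead):.2f}x perf/W")

# Speed-up a 1.9x perf/W gain would imply if it held at the full 320 W.
implied_speedup = 1.9 * AMPERE_WATTS / TURING_WATTS
print(f"1.9x perf/W at {AMPERE_WATTS} W would imply {implied_speedup:.2f}x performance")
```

This only covers the full-power operating point used in the post. A comparison at matched frame rate, like the capped-FPS scenario raised earlier in the thread, is a different operating point and can give a very different ratio.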
