From my limited understanding, moving to a smaller process node can make transistors switch faster (it doesn't have to, and it can even get worse). That can result, and has resulted, in a microcode instruction that used to take, say, 2 cycles to complete taking only 1 cycle.
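As a rough illustration of that point, here is a minimal sketch. It assumes an operation's latency in cycles is simply its logic delay divided by the clock period, rounded up, which is a big simplification of how real pipelines work. The delay and clock numbers are made up for the example, not taken from any real chip.

```python
import math

def cycles_needed(logic_delay_ns: float, clock_period_ns: float) -> int:
    """Whole clock cycles needed for the logic to settle (simplified model)."""
    return math.ceil(logic_delay_ns / clock_period_ns)

# Hypothetical numbers, chosen only for illustration.
CLOCK_PERIOD_NS = 0.5     # a 2 GHz clock
OLD_NODE_DELAY_NS = 0.75  # logic delay on the older, larger node
NEW_NODE_DELAY_NS = 0.5   # same logic with faster-switching transistors

old_cycles = cycles_needed(OLD_NODE_DELAY_NS, CLOCK_PERIOD_NS)
new_cycles = cycles_needed(NEW_NODE_DELAY_NS, CLOCK_PERIOD_NS)

print(f"old node: {old_cycles} cycle(s), new node: {new_cycles} cycle(s)")
```

With these assumed numbers, the same operation at the same clock speed drops from 2 cycles to 1, because the logic now settles within a single clock period.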

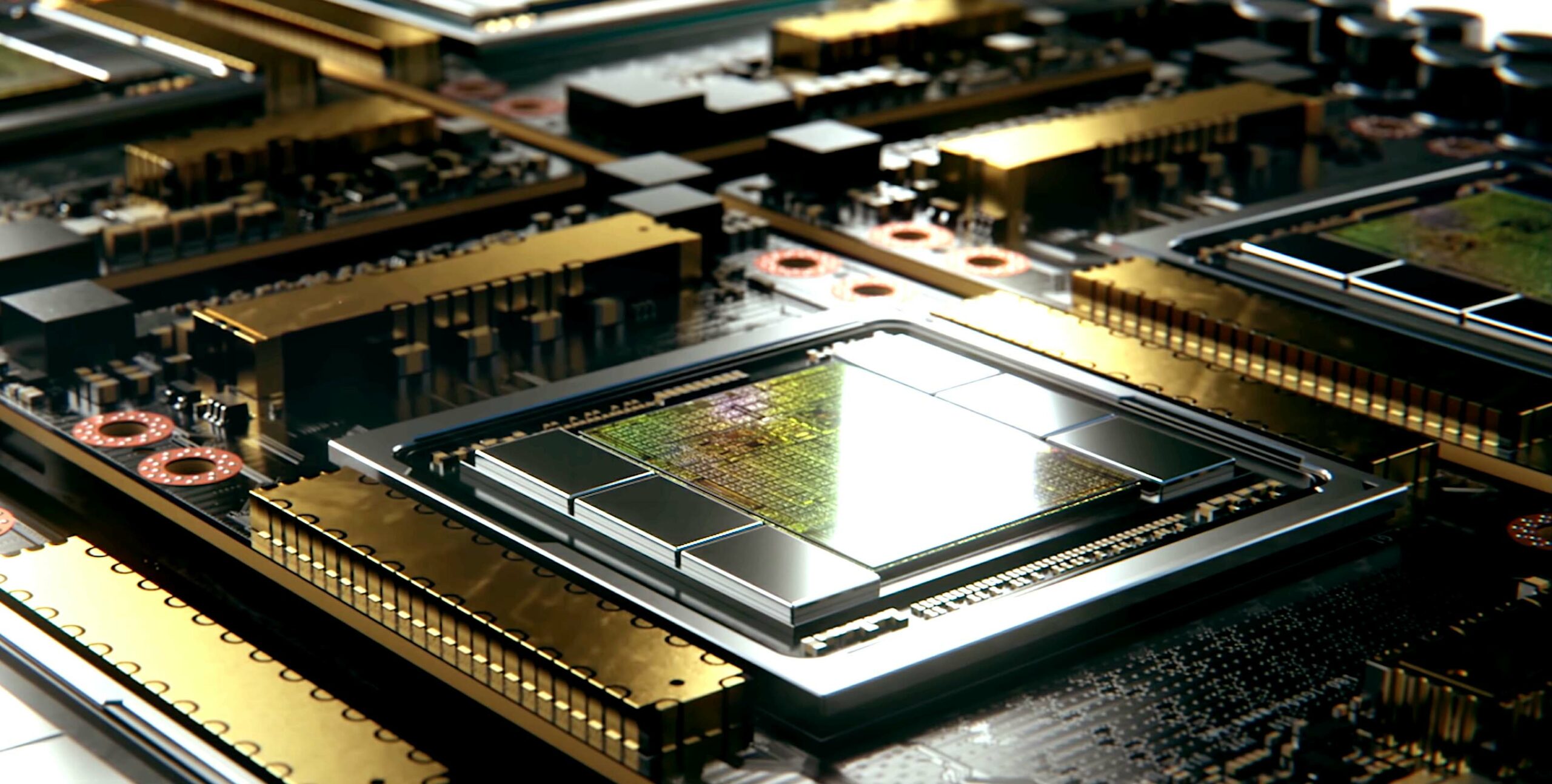

The processor's process size is always something that's frequently discussed in the chip's specifications. But what is that, and why does it matter?

www.maketecheasier.com

"Smaller processes also have a lower capacitance, allowing transistors to turn on and off more quickly while using less energy. And if you’re trying to make a better chip, that’s perfect. The faster a transistor can toggle on and off, the faster it can do work. "
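To put rough numbers on the quote, here is a sketch using the usual first-order textbook approximations: switching delay scales with the RC time constant, and the energy drawn from the supply per charge/discharge cycle is about C·V². The resistance, voltage, and capacitance values are hypothetical, and real process shrinks change resistance and voltage too, so treat this as a toy model only.

```python
def rc_delay_s(resistance_ohm: float, capacitance_f: float) -> float:
    """First-order switching delay: the RC time constant of the node."""
    return resistance_ohm * capacitance_f

def switching_energy_j(capacitance_f: float, supply_v: float) -> float:
    """Approximate energy drawn from the supply per charge/discharge cycle."""
    return capacitance_f * supply_v ** 2

# Hypothetical values, held constant except for the capacitance.
DRIVE_RESISTANCE_OHM = 10_000.0
SUPPLY_V = 1.0
OLD_CAP_F = 2e-15  # assumed load capacitance on the larger node
NEW_CAP_F = 1e-15  # assumed load capacitance after the shrink

delay_ratio = (rc_delay_s(DRIVE_RESISTANCE_OHM, NEW_CAP_F)
               / rc_delay_s(DRIVE_RESISTANCE_OHM, OLD_CAP_F))
energy_ratio = (switching_energy_j(NEW_CAP_F, SUPPLY_V)
                / switching_energy_j(OLD_CAP_F, SUPPLY_V))

print(f"delay ratio (new/old):  {delay_ratio:.2f}")
print(f"energy ratio (new/old): {energy_ratio:.2f}")
```

In this model, halving the capacitance halves both the switching delay and the energy per toggle, which is the "faster while using less energy" effect the article describes.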