
Post your CrystalDiskMark speeds

- Thread starter T4C Fantasy

- Start date

- Joined

- Jul 30, 2019

- Messages

- 3,756 (1.77/day)

| System Name | Still not a thread ripper but pretty good. |

|---|---|

| Processor | Ryzen 9 7950x, Thermal Grizzly AM5 Offset Mounting Kit, Thermal Grizzly Extreme Paste |

| Motherboard | ASRock B650 LiveMixer (BIOS/UEFI version P3.08, AGESA 1.2.0.2) |

| Cooling | EK-Quantum Velocity, EK-Quantum Reflection PC-O11, D5 PWM, EK-CoolStream PE 360, XSPC TX360 |

| Memory | Micron DDR5-5600 ECC Unbuffered Memory (2 sticks, 64GB, MTC20C2085S1EC56BD1) + JONSBO NF-1 |

| Video Card(s) | XFX Radeon RX 5700 & EK-Quantum Vector Radeon RX 5700 +XT & Backplate |

| Storage | Samsung 4TB 980 PRO, 2 x Optane 905p 1.5TB (striped), AMD Radeon RAMDisk |

| Display(s) | 2 x 4K LG 27UL600-W (and HUANUO Dual Monitor Mount) |

| Case | Lian Li PC-O11 Dynamic Black (original model) |

| Audio Device(s) | Corsair Commander Pro for Fans, RGB, & Temp Sensors (x4) |

| Power Supply | Corsair RM750x |

| Mouse | Logitech M575 |

| Keyboard | Corsair Strafe RGB MK.2 |

| Software | Windows 10 Professional (64bit) |

| Benchmark Scores | RIP Ryzen 9 5950x, ASRock X570 Taichi (v1.06), 128GB Micron DDR4-3200 ECC UDIMM (18ASF4G72AZ-3G2F1) |

- Joined

- Mar 13, 2021

- Messages

- 6 (0.00/day)

The peak of performance.

Conner 210MB (386 era)

Wowwwws. I distinctly remember my Conner (120MB?) HD getting 700KB/s on a DX2-66 in (I think...) HD Tach. My mate then got a Quantum Fireball and it did like 1200KB/s on a DX2. I was well jealous. You, however, have surpassed this.

edit -- okay, can't find any pics of hdtach in dos, so maybe it wasn't that...

- Joined

- Jul 19, 2015

- Messages

- 1,031 (0.29/day)

- Location

- Nova Scotia, Canada

| Processor | Ryzen 5 5600 @ 4.65GHz CO -30 |

|---|---|

| Motherboard | AsRock X370 Taichi |

| Cooling | Cooler Master Hyper 212 Plus |

| Memory | 32GB 4x8 G.SKILL Trident Z 3200 CL14 1.35V |

| Video Card(s) | PCWINMAX RTX 3060 6GB Laptop GPU (80W) |

| Storage | 1TB Kingston NV2 |

| Display(s) | LG 25UM57-P @ 75Hz OC |

| Case | Fractal Design Arc XL |

| Audio Device(s) | ATH-M20x |

| Power Supply | Evga SuperNova 1300 G2 |

| Mouse | Evga Torq X3 |

| Keyboard | Thermaltake Challenger |

| Software | Win 11 Pro 64-Bit |

In ATTO it gets a more reasonable 700/600KB/s R/W.

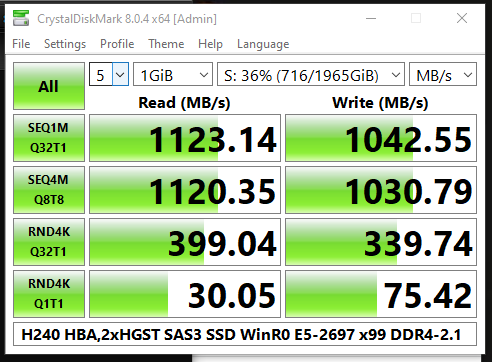

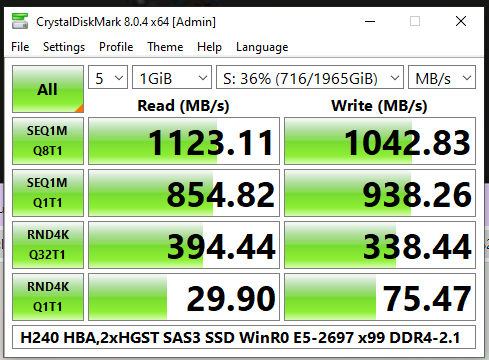

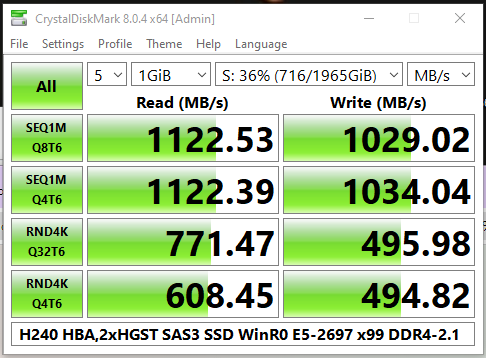

x99 Xeons soldier on.

2x 1.5TB HGST HUSMM series SAS-3 12 Gb/s SSDs on an HP 240 HBA (on an X99-based Dell workstation mobo). CPU is a 14-core Xeon E5-2697 2.6/3.0 ("Haswell") with 40GB of DDR4-2133 (registered ECC). This is dedicated storage; the system runs on its own SATA SSD. Half of each disk is mirrored and half striped, using Windows LVM/Storage Spaces. The test shown is of the striped logical volume.

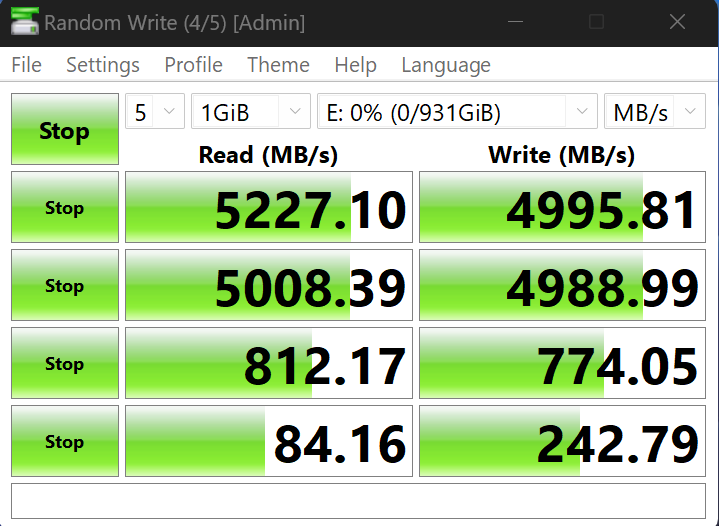

According to the thread instructions:

Defaults:

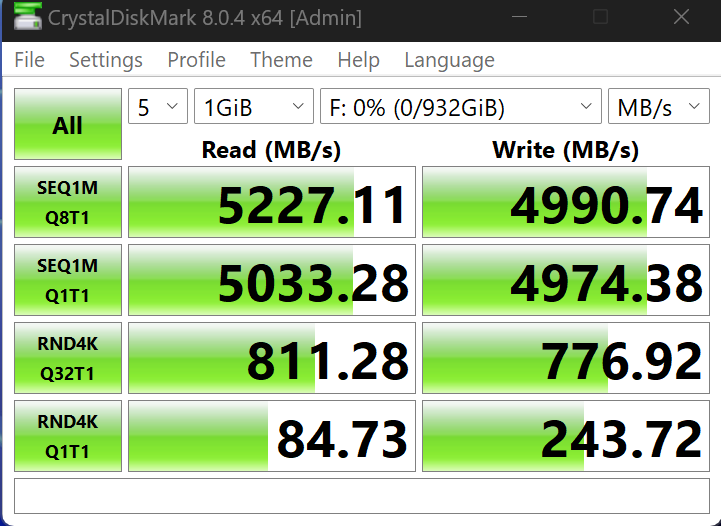

The NVME suite, for comparison:

These disks show up reliably on the 'bay as SAN pulls. I've never had any issues reformatting them with sg3_utils when necessary.

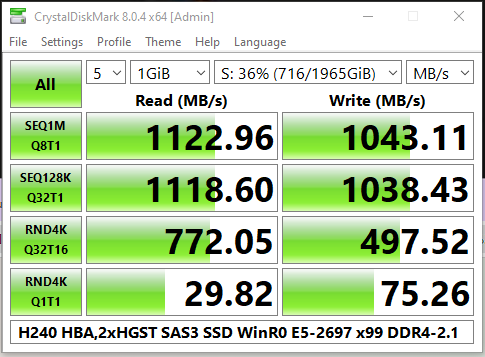

Performance with more queue depth and six threads (for more parallelism, to exercise the cores):

Significant boost to RND4K, but probably not meaningful for anything but development, virtual machine hosting, keeping up w/streaming I/O, etc.

E5 Xeons and x99 boards aren't quite as old as a 386, but still not bad for a platform that's nearing a decade.

Though I'd still like to figure out why I'm not exceeding 12 Gb/s in sequential reads, despite the striped volume having drives on different SAS-3 ports and the card being PCIe 3.0 x8. Maybe an issue with the benchmark? It seems too coincidental to be limited right at the SAS-3 bandwidth, allowing for a little overhead.
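For anyone wanting to sanity-check the ceilings involved, here is a rough back-of-the-envelope sketch. It assumes 8b/10b line encoding for SAS-3 and 128b/130b for PCIe 3.0, and it ignores protocol and filesystem overhead, so real numbers will land somewhat lower. The function name is made up for illustration.

```python
def link_bandwidth_mb_s(gbit_per_s, payload_bits, total_bits, lanes=1):
    """Theoretical usable bandwidth in MB/s (decimal) after line-encoding overhead."""
    raw_mb_s = gbit_per_s * 1000 / 8          # raw line rate, Gbit/s -> MB/s
    return raw_mb_s * payload_bits / total_bits * lanes

# Assumption: SAS-3 runs at 12 Gbit/s per lane with 8b/10b encoding.
sas3_lane = link_bandwidth_mb_s(12, 8, 10)
two_sas3_lanes = link_bandwidth_mb_s(12, 8, 10, lanes=2)

# Assumption: PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding.
pcie3_x8 = link_bandwidth_mb_s(8, 128, 130, lanes=8)

print(f"One SAS-3 lane:  {sas3_lane:.0f} MB/s")
print(f"Two SAS-3 lanes: {two_sas3_lanes:.0f} MB/s")
print(f"PCIe 3.0 x8:     {pcie3_x8:.0f} MB/s")
```

If the sequential result sits near the one-lane figure rather than the two-lane one, the stripe is probably not reading from both drives in parallel, and the PCIe link is unlikely to be the limit.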


- Joined

- Aug 16, 2004

- Messages

- 3,288 (0.43/day)

- Location

- Sunny California

| Processor | AMD Ryzen 7 9800X3D |

|---|---|

| Motherboard | Gigabyte Aorus X870E Elite |

| Cooling | Asus Ryujin II 360 EVA Edition |

| Memory | 4x16GBs DDR5 6000MHz Corsair Vengeance |

| Video Card(s) | Zotac RTX 4090 AMP Extreme Airo |

| Storage | 2TB Samsung 990 Pro OS - 4TB Nextorage G Series Games - 8TBs WD Black Storage |

| Display(s) | LG C2 OLED 42" 4K 120Hz HDR G-Sync enabled TV |

| Case | Asus ROG Helios EVA Edition |

| Audio Device(s) | Denon AVR-S910W - 7.1 Klipsch Dolby ATMOS Speaker Setup - Audeze Maxwell |

| Power Supply | beQuiet Straight Power 12 1500W |

| Mouse | Asus ROG Keris EVA Edition - Asus ROG Scabbard II EVA Edition |

| Keyboard | Asus ROG Strix Scope EVA Edition |

| VR HMD | Pimax Crystal Light/DMAS |

| Software | Windows 11 Pro 64bit |

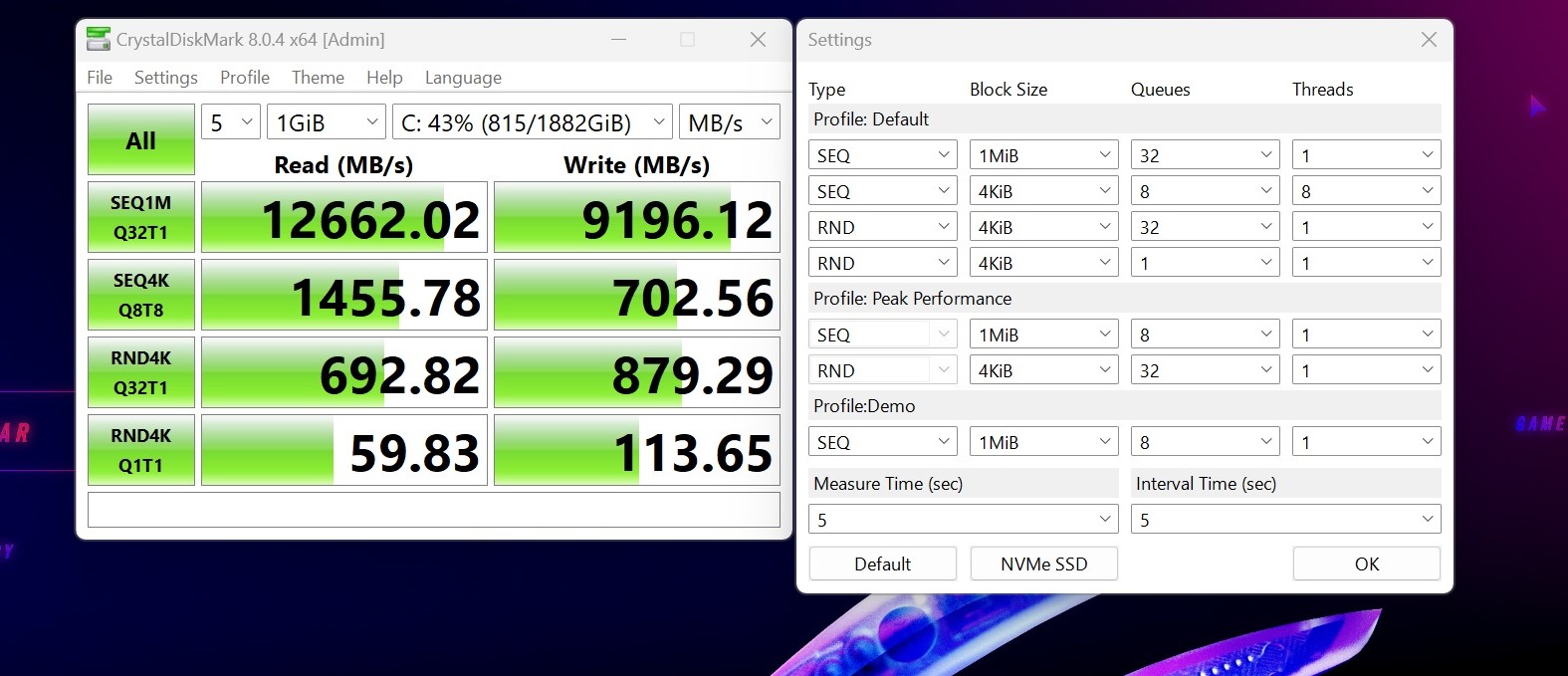

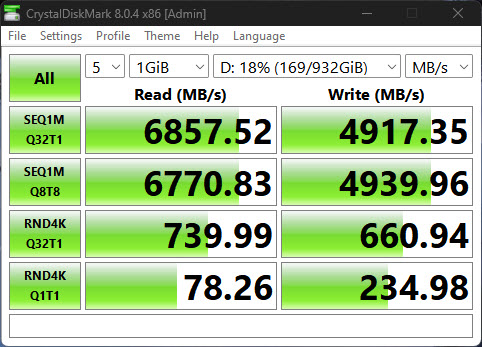

Asus ROG Strix Scar 16, 2 Samsung 1TB in RAID 0

- Joined

- Jul 30, 2019

- Messages

- 3,756 (1.77/day)

| System Name | Still not a thread ripper but pretty good. |

|---|---|

| Processor | Ryzen 9 7950x, Thermal Grizzly AM5 Offset Mounting Kit, Thermal Grizzly Extreme Paste |

| Motherboard | ASRock B650 LiveMixer (BIOS/UEFI version P3.08, AGESA 1.2.0.2) |

| Cooling | EK-Quantum Velocity, EK-Quantum Reflection PC-O11, D5 PWM, EK-CoolStream PE 360, XSPC TX360 |

| Memory | Micron DDR5-5600 ECC Unbuffered Memory (2 sticks, 64GB, MTC20C2085S1EC56BD1) + JONSBO NF-1 |

| Video Card(s) | XFX Radeon RX 5700 & EK-Quantum Vector Radeon RX 5700 +XT & Backplate |

| Storage | Samsung 4TB 980 PRO, 2 x Optane 905p 1.5TB (striped), AMD Radeon RAMDisk |

| Display(s) | 2 x 4K LG 27UL600-W (and HUANUO Dual Monitor Mount) |

| Case | Lian Li PC-O11 Dynamic Black (original model) |

| Audio Device(s) | Corsair Commander Pro for Fans, RGB, & Temp Sensors (x4) |

| Power Supply | Corsair RM750x |

| Mouse | Logitech M575 |

| Keyboard | Corsair Strafe RGB MK.2 |

| Software | Windows 10 Professional (64bit) |

| Benchmark Scores | RIP Ryzen 9 5950x, ASRock X570 Taichi (v1.06), 128GB Micron DDR4-3200 ECC UDIMM (18ASF4G72AZ-3G2F1) |

Which Samsung? NVMe PCIe 3 or 4? Hardware, firmware, or software RAID?

- Joined

- Aug 16, 2004

- Messages

- 3,288 (0.43/day)

- Location

- Sunny California

| Processor | AMD Ryzen 7 9800X3D |

|---|---|

| Motherboard | Gigabyte Aorus X870E Elite |

| Cooling | Asus Ryujin II 360 EVA Edition |

| Memory | 4x16GBs DDR5 6000MHz Corsair Vengeance |

| Video Card(s) | Zotac RTX 4090 AMP Extreme Airo |

| Storage | 2TB Samsung 990 Pro OS - 4TB Nextorage G Series Games - 8TBs WD Black Storage |

| Display(s) | LG C2 OLED 42" 4K 120Hz HDR G-Sync enabled TV |

| Case | Asus ROG Helios EVA Edition |

| Audio Device(s) | Denon AVR-S910W - 7.1 Klipsch Dolby ATMOS Speaker Setup - Audeze Maxwell |

| Power Supply | beQuiet Straight Power 12 1500W |

| Mouse | Asus ROG Keris EVA Edition - Asus ROG Scabbard II EVA Edition |

| Keyboard | Asus ROG Strix Scope EVA Edition |

| VR HMD | Pimax Crystal Light/DMAS |

| Software | Windows 11 Pro 64bit |

Drives are 2x 1TB Samsung PM9A1 NVMe (MZVL21T0HCLR-00$00/07), PCIe Gen 4 x4. They came configured in RAID 0 in the firmware out of the box.

- Joined

- Jan 3, 2015

- Messages

- 3,078 (0.81/day)

| System Name | The beast and the little runt. |

|---|---|

| Processor | Ryzen 5 5600X - Ryzen 9 5950X |

| Motherboard | ASUS ROG STRIX B550-I GAMING - ASUS ROG Crosshair VIII Dark Hero X570 |

| Cooling | Noctua NH-L9x65 SE-AM4a - NH-D15 chromax.black with IPPC Industrial 3000 RPM 120/140 MM fans. |

| Memory | G.SKILL TRIDENT Z ROYAL GOLD/SILVER 32 GB (2 x 16 GB and 4 x 8 GB) 3600 MHz CL14-15-15-35 1.45 volts |

| Video Card(s) | GIGABYTE RTX 4060 OC LOW PROFILE - GIGABYTE RTX 4090 GAMING OC |

| Storage | Samsung 980 PRO 1 TB + 2 TB - Samsung 870 EVO 4 TB - 2 x WD RED PRO 16 GB + WD ULTRASTAR 22 TB |

| Display(s) | Asus 27" TUF VG27AQL1A and a Dell 24" for dual setup |

| Case | Phanteks Enthoo 719/LUXE 2 BLACK |

| Audio Device(s) | Onboard on both boards |

| Power Supply | Phanteks Revolt X 1200W |

| Mouse | Logitech G903 Lightspeed Wireless Gaming Mouse |

| Keyboard | Logitech G910 Orion Spectrum |

| Software | WINDOWS 10 PRO 64 BITS on both systems |

| Benchmark Scores | See more about my 2 in 1 system here: kortlink.dk/2ca4x |

Two new drives added to my system. Other drives in my system can be seen in my older post here: https://www.techpowerup.com/forums/threads/post-your-crystaldiskmark-speeds.250319/post-4566865

Samsung 980 PRO 1 TB NVMe SSD added for comparison. The 980 PRO has been in use for 2 years, which might have affected its maximum speed.

Otherwise, the two new drives are a Samsung 870 EVO SATA SSD, tested with and without RAPID mode engaged, and a WD Red Pro 16 TB HDD.

Jean Bon Deparme

New Member

- Joined

- May 9, 2023

- Messages

- 1 (0.00/day)

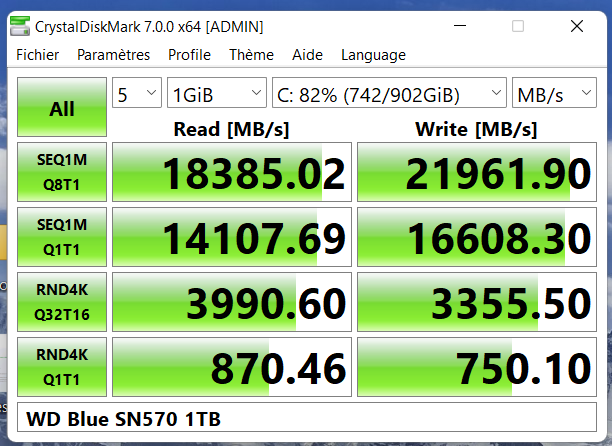

WD Blue SN570 1TB:

80CHF well spent.

How can I join the ranking?


Mistakes_were_made

New Member

- Joined

- May 9, 2023

- Messages

- 1 (0.00/day)

[...]

"Seq Q32T1 (R/W)" Speeds are clickable, leading to the original post.

| Name | Drive | Size | Type | RPM | Connector | Seq Q32T1 (R/W) | Raid |
|---|---|---|---|---|---|---|---|
| mama | SK hynix Platinum P41 | 1TB | NVMe | | M.2 | 7372.7 / 6739.8 | |
| Det0x | SK hynix Platinum P41 | 1TB | NVMe | | M.2 | 7366.3 / 6519.1 | |
| mama | Kingston 3000 | 1TB | NVMe | | M.2 | 7355.7 / 6074.5 | |

[...]

You should check (and delete) the 1st and 3rd place benchmarks from mama, because the linked screenshots do not show SEQ1M Q32T1 (R/W) results.

They are Q8T1, so the scores do not belong in this SEQ1M Q32T1 high-score list.
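A check like this could be automated from the benchmark's text export rather than eyeballing screenshots. The sketch below assumes result lines shaped like `SEQ 1MiB (Q= 8, T= 1): 1234.567 MB/s ...`, which is how recent CrystalDiskMark versions appear to write their text output; the exact layout is an assumption, the function names are hypothetical, and the sample numbers are made up for illustration.

```python
import re

# Assumed line layout: "  SEQ    1MiB (Q=  8, T= 1):  1234.567 MB/s [ ... ]"
LINE_RE = re.compile(
    r"^\s*(SEQ|RND)\s+(\S+)\s+\(Q=\s*(\d+),\s*T=\s*(\d+)\):\s+([\d.]+)\s+MB/s",
    re.MULTILINE,
)

def parse_results(text):
    """Return (pattern, block_size, queue_depth, threads, mb_per_s) for each result line."""
    return [
        (m.group(1), m.group(2), int(m.group(3)), int(m.group(4)), float(m.group(5)))
        for m in LINE_RE.finditer(text)
    ]

def qualifies(text, pattern="SEQ", block="1MiB", queue=32, threads=1):
    """True if the export contains a result run with the required test settings."""
    return any(
        (p, b, q, t) == (pattern, block, queue, threads)
        for p, b, q, t, _ in parse_results(text)
    )

# Made-up sample: a sequential test run at Q8T1 instead of Q32T1.
SAMPLE = """\
[Read]
  SEQ    1MiB (Q=  8, T= 1):  1234.567 MB/s [   1177.4 IOPS] <  6790.12 us>
  RND    4KiB (Q= 32, T= 1):   456.789 MB/s [ 111520.8 IOPS] <   286.50 us>
"""

print(qualifies(SAMPLE))           # Q32T1 sequential result present?
print(qualifies(SAMPLE, queue=8))  # Q8T1 sequential result present?
```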


- Joined

- Jan 22, 2007

- Messages

- 952 (0.14/day)

- Location

- Round Rock, TX

| Processor | 9800x3d |

|---|---|

| Motherboard | Asus Strix X870E-E |

| Cooling | Kraken Elite 280 |

| Memory | 64GB G.skill 6000mhz CL30 |

| Video Card(s) | Sapphire 9070XT Nitro+ |

| Storage | 1X 4TB MP700 Pro - 1 X 4TB SN850X |

| Display(s) | LG 32" 4K OLED + LG 38" IPS |

| Case | Corsair Frame 4000D |

| Power Supply | Corsair RM1000x |

| Software | WIndows 11 Pro |

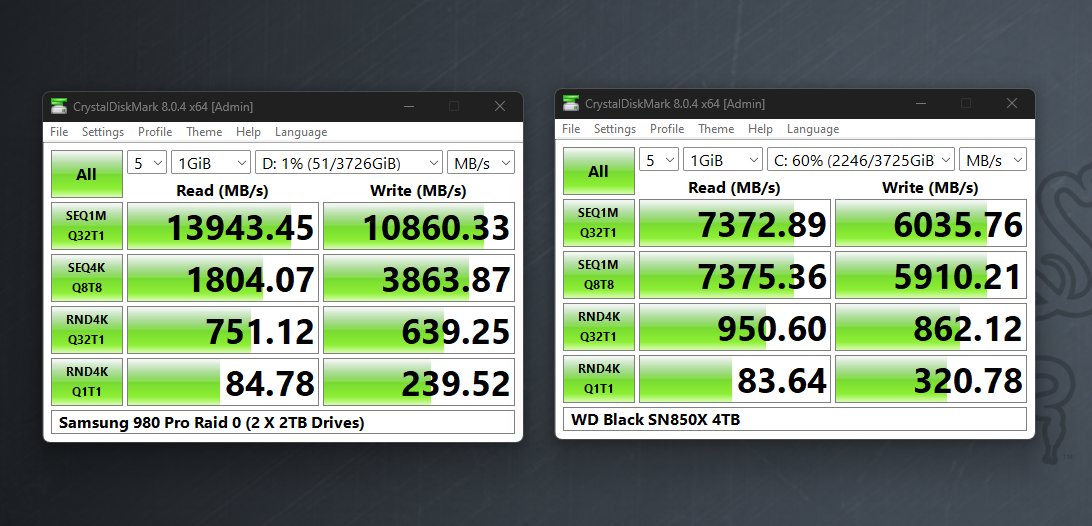

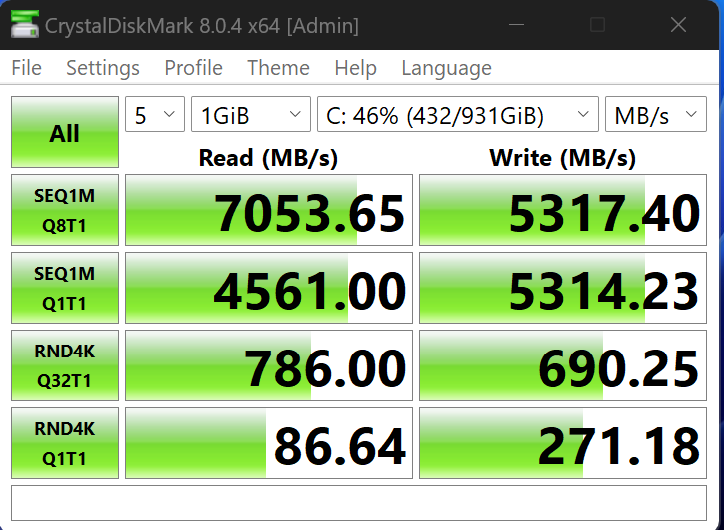

Left side: 980 Pro RAID 0 (2 X 2TB) -- D: drive

Right side : 4TB WD Black SN850X Single drive -- C: drive

- Joined

- Jul 30, 2019

- Messages

- 3,756 (1.77/day)

| System Name | Still not a thread ripper but pretty good. |

|---|---|

| Processor | Ryzen 9 7950x, Thermal Grizzly AM5 Offset Mounting Kit, Thermal Grizzly Extreme Paste |

| Motherboard | ASRock B650 LiveMixer (BIOS/UEFI version P3.08, AGESA 1.2.0.2) |

| Cooling | EK-Quantum Velocity, EK-Quantum Reflection PC-O11, D5 PWM, EK-CoolStream PE 360, XSPC TX360 |

| Memory | Micron DDR5-5600 ECC Unbuffered Memory (2 sticks, 64GB, MTC20C2085S1EC56BD1) + JONSBO NF-1 |

| Video Card(s) | XFX Radeon RX 5700 & EK-Quantum Vector Radeon RX 5700 +XT & Backplate |

| Storage | Samsung 4TB 980 PRO, 2 x Optane 905p 1.5TB (striped), AMD Radeon RAMDisk |

| Display(s) | 2 x 4K LG 27UL600-W (and HUANUO Dual Monitor Mount) |

| Case | Lian Li PC-O11 Dynamic Black (original model) |

| Audio Device(s) | Corsair Commander Pro for Fans, RGB, & Temp Sensors (x4) |

| Power Supply | Corsair RM750x |

| Mouse | Logitech M575 |

| Keyboard | Corsair Strafe RGB MK.2 |

| Software | Windows 10 Professional (64bit) |

| Benchmark Scores | RIP Ryzen 9 5950x, ASRock X570 Taichi (v1.06), 128GB Micron DDR4-3200 ECC UDIMM (18ASF4G72AZ-3G2F1) |

RAID 0 via HBA, motherboard firmware, or software (like Windows Storage Spaces)?

- Joined

- Jan 22, 2007

- Messages

- 952 (0.14/day)

- Location

- Round Rock, TX

| Processor | 9800x3d |

|---|---|

| Motherboard | Asus Strix X870E-E |

| Cooling | Kraken Elite 280 |

| Memory | 64GB G.skill 6000mhz CL30 |

| Video Card(s) | Sapphire 9070XT Nitro+ |

| Storage | 1X 4TB MP700 Pro - 1 X 4TB SN850X |

| Display(s) | LG 32" 4K OLED + LG 38" IPS |

| Case | Corsair Frame 4000D |

| Power Supply | Corsair RM1000x |

| Software | WIndows 11 Pro |

Striped in Windows via software.

How did you manage to get 22GB/s from a drive with a max rating of 3GB/s?

- Joined

- Jul 8, 2022

- Messages

- 282 (0.27/day)

- Location

- USA

| Processor | i9-11900K |

|---|---|

| Motherboard | Asus ROG Maximus XIII Hero |

| Cooling | Arctic Liquid Freezer II 360 |

| Memory | 4x8GB DDR4 |

| Video Card(s) | Alienware RTX 3090 OEM |

| Storage | OEM Kioxia 2tb NVMe (OS), 4TB WD Blue HDD (games) |

| Display(s) | LG 27GN950-B |

| Case | Lian Li Lancool II Mesh Performance (black) |

| Audio Device(s) | Logitech Pro X Wireless |

| Power Supply | Corsair RM1000x |

| Keyboard | HyperX Alloy Elite 2 |

And it only has a PCIe Gen 3 interface. Is RAM caching software being used? Is it an error? I am confused.
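A quick plausibility check along these lines: if a result is higher than the drive's interface can physically carry, the benchmark is almost certainly measuring a RAM cache rather than the drive. The sketch below assumes a x4 link (typical for M.2, but not stated in the thread) and 128b/130b encoding for PCIe 3.0 and later; the helper names are made up.

```python
# Raw per-lane rate in GT/s for each PCIe generation (3.0 and later use 128b/130b).
PCIE_GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}

def pcie_ceiling_mb_s(gen, lanes=4):
    """Theoretical link ceiling in MB/s (decimal), before protocol overhead."""
    return PCIE_GT_PER_LANE[gen] * 1000 / 8 * 128 / 130 * lanes

def exceeds_interface(measured_mb_s, gen, lanes=4):
    """True if a result is faster than the link could deliver, which hints at caching."""
    return measured_mb_s > pcie_ceiling_mb_s(gen, lanes)

print(f"PCIe Gen 3 x4 ceiling: {pcie_ceiling_mb_s(3):.0f} MB/s")
print("22 GB/s on Gen 3 x4 exceeds the link:", exceeds_interface(22000, gen=3))
```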

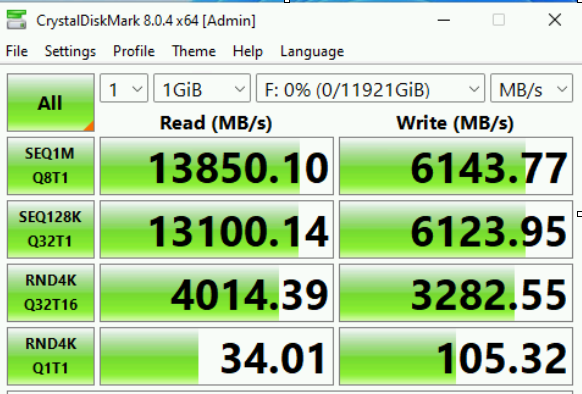

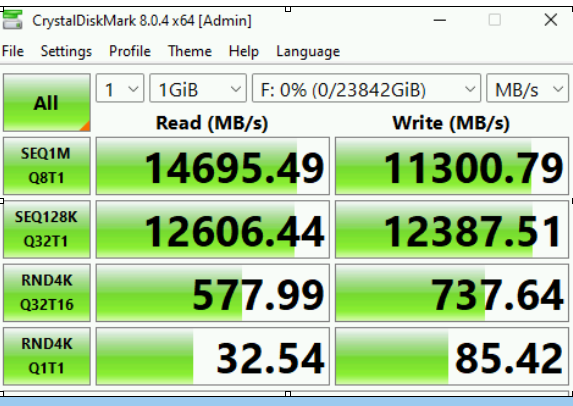

8 x Dell P5600 3.2TB NVMe drives in RAID 10:

This is the same 8 drives in RAID 0:

I am not sure why the speeds appear to be so slow, given that these drives cost $10k each. With 8 drives in RAID 10 I would expect an 8x read and 4x write boost over a standard NVMe drive (RAID 0 would be 8x for both). Does anyone here have advice on getting my numbers up?
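To put numbers on that expectation, here is a small sketch of the ideal multipliers. It assumes perfect scaling, with RAID 10 reads served from every drive and writes limited to one per mirror pair; real arrays fall short because of controller, CPU, and PCIe limits. The function names are hypothetical.

```python
def ideal_raid_speedup(level, drives):
    """Best-case (read, write) multipliers relative to a single drive."""
    if level == 0:
        return drives, drives           # every drive serves reads and writes
    if level == 10:
        if drives % 2:
            raise ValueError("RAID 10 needs an even number of drives")
        return drives, drives // 2      # each write lands on both halves of a mirror
    raise ValueError(f"unsupported RAID level: {level}")

def scaling_efficiency(measured_mb_s, single_drive_mb_s, multiplier):
    """Fraction of the ideal speedup that a measured result actually achieves."""
    return measured_mb_s / (single_drive_mb_s * multiplier)

print("RAID 10, 8 drives:", ideal_raid_speedup(10, 8))
print("RAID 0, 8 drives: ", ideal_raid_speedup(0, 8))
```

Feeding a measured array result and a single-drive result from the same machine into `scaling_efficiency` shows how far off the ideal the array really is.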


- Joined

- Sep 16, 2018

- Messages

- 10,562 (4.33/day)

- Location

- Winnipeg, Canada

| Processor | AMD R9 9900X @ booost |

|---|---|

| Motherboard | Asus Strix X670E-F |

| Cooling | Thermalright Phantom Spirit 120 EVO, 2x T30 |

| Memory | 2x 16GB Lexar Ares @ 6400 28-36-36-68 1.55v |

| Video Card(s) | Zotac 4070 Ti Trinity OC @ 3045/1500 |

| Storage | WD SN850 1TB, SN850X 2TB, 2x SN770 1TB |

| Display(s) | LG 50UP7100 |

| Case | Asus ProArt PA602 |

| Audio Device(s) | JBL Bar 700 |

| Power Supply | Seasonic Vertex GX-1000, Monster HDP1800 |

| Mouse | Logitech G502 Hero |

| Keyboard | Logitech G213 |

| VR HMD | Oculus 3 |

| Software | Yes |

| Benchmark Scores | Yes |

My dirty and unkempt SN850 boot drive:

New SN770 on a Gen 3 connection:

SN770 on Gen 4:

And the other SN770 on Gen 4:

I couldn't get the system to see all 3 SN770 on the Asus M.2 card. I am guessing I am bumping into a lane shortage :/

Edit:

I just noticed the test hadn't finished running in one of the screenshots.


- Joined

- Oct 16, 2018

- Messages

- 987 (0.41/day)

- Location

- Uttar Pradesh, India

| Processor | AMD R7 1700X @ 4100Mhz |

|---|---|

| Motherboard | MSI B450M MORTAR MAX (MS-7B89) |

| Cooling | Phanteks PH-TC14PE |

| Memory | Crucial Technology 16GB DR (DDR4-3600) - C9BLM:045M:E BL16G36C16U4W.M16FE1 X2 @ CL14 |

| Video Card(s) | XFX RX480 GTR 8GB @ 1408Mhz (AMD Auto OC) |

| Storage | Samsung SSD 850 EVO 250GB |

| Display(s) | Acer KG271 1080p @ 81Hz |

| Power Supply | SuperFlower Leadex II 750W 80+ Gold |

| Keyboard | Redragon Devarajas RGB |

| Software | Microsoft Windows 10 (10.0) Professional 64-bit |

| Benchmark Scores | https://valid.x86.fr/mvvj3a |

Your test settings are also incorrect, like those of numerous others who post benchmarks in this thread.

Correct test settings are in this post.

Post your CrystalDiskMark speeds

Kingston Q500 SSD / SATA III 2.5" Called a HDD replacement on the packaging (10x faster!)

- Joined

- Sep 16, 2018

- Messages

- 10,562 (4.33/day)

- Location

- Winnipeg, Canada

| Processor | AMD R9 9900X @ booost |

|---|---|

| Motherboard | Asus Strix X670E-F |

| Cooling | Thermalright Phantom Spirit 120 EVO, 2x T30 |

| Memory | 2x 16GB Lexar Ares @ 6400 28-36-36-68 1.55v |

| Video Card(s) | Zotac 4070 Ti Trinity OC @ 3045/1500 |

| Storage | WD SN850 1TB, SN850X 2TB, 2x SN770 1TB |

| Display(s) | LG 50UP7100 |

| Case | Asus ProArt PA602 |

| Audio Device(s) | JBL Bar 700 |

| Power Supply | Seasonic Vertex GX-1000, Monster HDP1800 |

| Mouse | Logitech G502 Hero |

| Keyboard | Logitech G213 |

| VR HMD | Oculus 3 |

| Software | Yes |

| Benchmark Scores | Yes |

Whoops, just box stock settings all around.

- Joined

- Oct 16, 2018

- Messages

- 987 (0.41/day)

- Location

- Uttar Pradesh, India

| Processor | AMD R7 1700X @ 4100Mhz |

|---|---|

| Motherboard | MSI B450M MORTAR MAX (MS-7B89) |

| Cooling | Phanteks PH-TC14PE |

| Memory | Crucial Technology 16GB DR (DDR4-3600) - C9BLM:045M:E BL16G36C16U4W.M16FE1 X2 @ CL14 |

| Video Card(s) | XFX RX480 GTR 8GB @ 1408Mhz (AMD Auto OC) |

| Storage | Samsung SSD 850 EVO 250GB |

| Display(s) | Acer KG271 1080p @ 81Hz |

| Power Supply | SuperFlower Leadex II 750W 80+ Gold |

| Keyboard | Redragon Devarajas RGB |

| Software | Microsoft Windows 10 (10.0) Professional 64-bit |

| Benchmark Scores | https://valid.x86.fr/mvvj3a |

- Joined

- Feb 21, 2008

- Messages

- 106 (0.02/day)

| System Name | i9-13900k |

|---|---|

| Processor | Intel Core i9 13900K |

| Motherboard | Asus ProArt Z790 Creator WiFi |

| Cooling | Corsair H150i Elite Capellix XT |

| Memory | Corsair Dominator 64gb 5600MT/s DDR5 Dual Channel |

| Video Card(s) | Sapphire Nitro+ RX 7900 XTX |

| Storage | Samsung 980 PRO 1 & 2 TB |

| Display(s) | LG 50" QNED TV 120hz |

| Case | Corsair RGB Smart Case 5000x (white) |

| Audio Device(s) | Fiio K5PRO USB DAC |

| Power Supply | Corsair RM1200x Shift |

| Mouse | Logitech MX Ergo Trackball |

| Keyboard | Logitech K860 |

| Software | Windows 11 Pro |

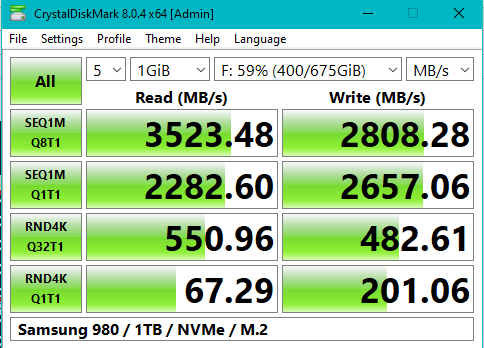

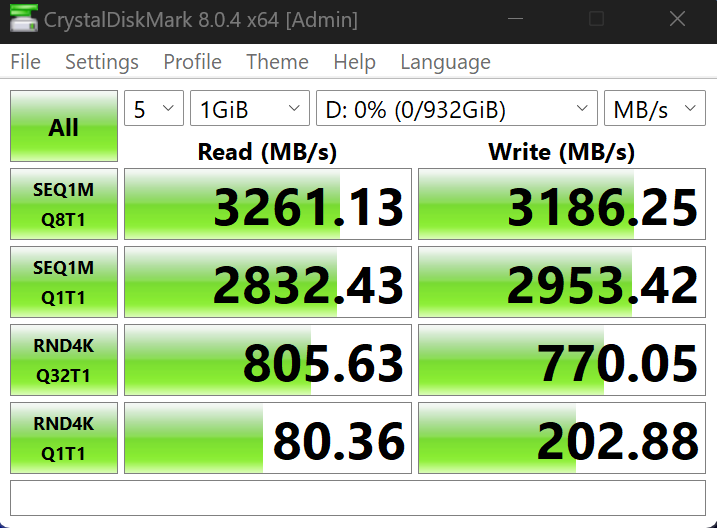

My D: drive - Samsung 980 PRO 1TB

- Joined

- Jun 2, 2017

- Messages

- 9,828 (3.38/day)

| System Name | Best AMD Computer |

|---|---|

| Processor | AMD 7900X3D |

| Motherboard | Asus X670E E Strix |

| Cooling | In Win SR36 |

| Memory | GSKILL DDR5 32GB 5200 30 |

| Video Card(s) | Sapphire Pulse 7900XT (Watercooled) |

| Storage | Corsair MP 700, Seagate 530 2Tb, Adata SX8200 2TBx2, Kingston 2 TBx2, Micron 8 TB, WD AN 1500 |

| Display(s) | GIGABYTE FV43U |

| Case | Corsair 7000D Airflow |

| Audio Device(s) | Corsair Void Pro, Logitch Z523 5.1 |

| Power Supply | Deepcool 1000M |

| Mouse | Logitech g7 gaming mouse |

| Keyboard | Logitech G510 |

| Software | Windows 11 Pro 64 Steam. GOG, Uplay, Origin |

| Benchmark Scores | Firestrike: 46183 Time Spy: 25121 |

Seagate FireCuda 530 2TB.

- Joined

- Nov 4, 2015

- Messages

- 196 (0.06/day)

- Location

- Dutchland

| Processor | Ryzen 3600x |

|---|---|

| Motherboard | MPG B550 GAMING PLUS |

| Cooling | Gamdias AIO 3x |

| Memory | 32768 MB Corsair 8192 MB (DDR4-3200) |

| Video Card(s) | RX 6750 XT (RTX 2060) |

| Storage | Samsung SSD 990 PRO 1TB/Samsung SSD 970 EVO 500GB |

| Display(s) | 2x AOC 27G2G8 (AOC2702) 27.2 inches (69.1 cm) / 1920 x 1080 pixels @ 48-240 (curved) |

| Mouse | No idea brand |

| Keyboard | Same as above |

| Software | Win11 Pro |

de.das.dude

Pro Indian Modder

- Joined

- Jun 13, 2010

- Messages

- 9,254 (1.70/day)

- Location

- Internet is borked, please help.

| System Name | Monke | Work Thinkpad | Old Monke |

|---|---|

| Processor | Ryzen 5600X | Ryzen 5500U | FX8320 |

| Motherboard | ASRock B550 Extreme4 | ? | Asrock 990FX Extreme 4 |

| Cooling | 240mm Rad | Not needed | hyper 212 EVO |

| Memory | 2x16GB DDR4 3600 Corsair RGB | 16 GB DDR4 3600 | 16GB DDR3 1600 |

| Video Card(s) | Sapphire Pulse RX6700XT 12GB | Vega 8 | Sapphire Pulse RX580 8GB |

| Storage | Samsung 980 nvme (Primary) | some samsung SSD |

| Display(s) | Dell 2723DS | Some 14" 1080p 98%sRGB IPS | Dell 2240L |

| Case | Ant Esports Tempered case | Thinkpad | NZXT Guardian 921RB |

| Audio Device(s) | Logitech Z333 | Jabra corpo stuff | Some USB speakers |

| Power Supply | Corsair RM750e | not needed | Corsair GS 600 |

| Mouse | Logitech G400 | nipple | Dell |

| Keyboard | Logitech G213 | stock kb is awesome | Logitech K230 |

| VR HMD | ;_; |

| Software | Windows 10 Professional x3 |

| Benchmark Scores | There are no marks on my bench |

Samsung 980 1TB NVMe M.2