
Welcome to TechPowerUp Forums, Guest! Please check out our forum guidelines for info related to our community.


Relative performance - what does it mean?

- Thread starter DUS


- Joined

- May 14, 2004

- Messages

- 28,770 (3.74/day)

| Processor | Ryzen 7 5700X |
|---|---|
| Memory | 48 GB |
| Video Card(s) | RTX 4080 |
| Storage | 2x HDD RAID 1, 3x M.2 NVMe |
| Display(s) | 30" 2560x1600 + 19" 1280x1024 |
| Software | Windows 10 64-bit |


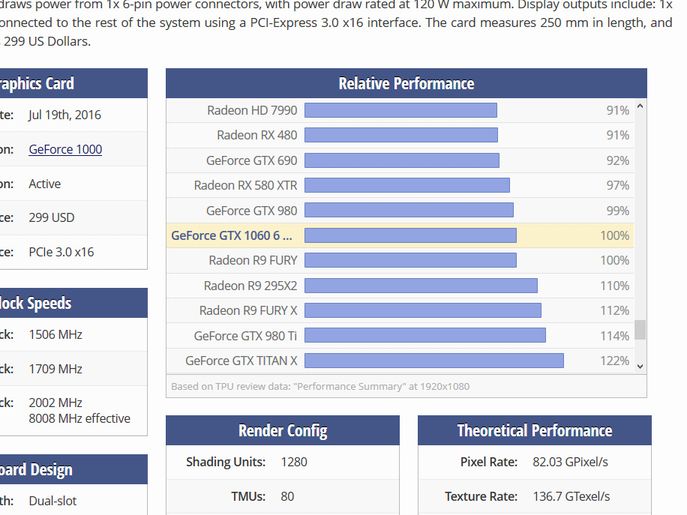

It's in percent (%). Averaging FPS without normalizing gives high-FPS titles too much weight over other titles.
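To make the weighting point concrete, here is a minimal sketch with made-up numbers (the games and FPS values are hypothetical, not TechPowerUp data). It assumes relative performance is the plain average of per-game FPS ratios against another card; the thread doesn't say exactly how the averaging is done.

```python
# Hypothetical FPS results: game -> (card_a_fps, card_b_fps).
results = {
    "esports_title": (400.0, 300.0),  # high-FPS title: A is 33% faster
    "heavy_title_1": (40.0, 50.0),    # B is 25% faster
    "heavy_title_2": (30.0, 36.0),    # B is 20% faster
}

def raw_fps_ratio(results):
    """Ratio of average FPS (A / B): dominated by the highest-FPS title."""
    avg_a = sum(a for a, _ in results.values()) / len(results)
    avg_b = sum(b for _, b in results.values()) / len(results)
    return avg_a / avg_b

def normalized_ratio(results):
    """Mean of per-game ratios (A / B): every title counts equally."""
    ratios = [a / b for a, b in results.values()]
    return sum(ratios) / len(ratios)

print(f"raw FPS average: A is {raw_fps_ratio(results):.0%} of B")
print(f"normalized:      A is {normalized_ratio(results):.0%} of B")
```

With these numbers the raw average makes card A look about 22% faster purely because of the one high-FPS title, while the normalized figure puts it slightly behind B (about 99%), since it wins one game and loses two.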

Test system and driver info is included in all reviews.



- Joined

- Mar 18, 2015

- Messages

- 2,970 (0.80/day)

- Location

- Long Island

Relative means "in relation to one another". So if the 1080 Ti is 100% and the 1070 is 70%, the 1070 is 70% as fast as the 1080 Ti ... and the 1080 Ti is 1.43 times (100/70) as fast as the 1070.
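The same conversion as a small helper: given two cards' scores from the same relative-performance chart, it returns how many times as fast one is compared to the other. The function name is just for illustration.

```python
def times_as_fast(score_a: float, score_b: float) -> float:
    """How many times as fast card A is compared to card B,
    given both cards' percentages from the same relative-performance chart."""
    return score_a / score_b

# 1080 Ti at 100%, 1070 at 70% (the example from the post above)
print(round(times_as_fast(100, 70), 2))  # 1.43
print(round(times_as_fast(70, 100), 2))  # 0.7
```

Note that the percentages are not symmetric: the 1070 is 30% slower than the 1080 Ti, but the 1080 Ti is about 43% faster than the 1070.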

My question concerns test parameters rather than how percentages are calculated.

Just so it's clear: Relative Performance does not reflect benchmarks that are driver-, patch- and settings-agnostic, right?

That is to say that, excluding GPUs that were benchmarked together, there is no data from which to infer, from, say, a 160% RP increase between a GTX 960 and a 970 (supposing the tests were done a significant time apart), how much of it is due to optimized patches, updated drivers or even different game settings (however slight). Is this correct?

In other words, do these test parameters change between benchmarks run at different times, specifically between different GPU generations, and if so, how are these fluctuations accounted for?



- Joined

- Nov 15, 2005

- Messages

- 1,014 (0.14/day)

| Processor | 2500K @ 4.5GHz 1.28V |
|---|---|
| Motherboard | ASUS P8P67 Deluxe |
| Cooling | Corsair A70 |
| Memory | 8GB (2x4GB) Corsair Vengeance 1600 9-9-9-24 1T |
| Video Card(s) | eVGA GTX 470 |
| Storage | Crucial m4 128GB + Seagate RAID 1 (1TB x 2) |
| Display(s) | Dell 22" 1680x1050 nothing special |
| Case | Antec 300 |
| Audio Device(s) | Onboard |
| Power Supply | PC Power & Cooling 750W |
| Software | Windows 7 64bit Pro |

If you check the "Test Setup" page, the driver version used for all compared cards as well as the CPU clocks, Windows version and applied updates, etc. are all given. Here is an example: https://www.techpowerup.com/reviews/MSI/RX_580_Mech_2/6.html


And how are those differences translated into raw numbers, such as the ones shown in the RP charts? The RP chart is there, with the numbers, neatly showing precise percentages, not ~10% estimates, so how is it done in this regard?

- Joined

- Nov 15, 2005

- Messages

- 1,014 (0.14/day)


Differences? The point of that setup page is to show the standard test configuration that all the compared cards were tested in. All of those cards you see in the chart were run using driver version xxx.xx and whichever Windows update is mentioned, etc. If you see a GTX 970 and a GTX 1070 in the same chart and the test setup page says they were using the same driver, then it would seem to be a pretty direct comparison, so no estimation is required for the performance results. The only gray area I can see in this regard is whether any weighting is done in the overall percentage results due to particular games favoring AMD or NVIDIA architectures. If that doesn't answer your inquiry, then I'm not really sure what the question is.

- Joined

- May 14, 2004

- Messages

- 28,770 (3.74/day)


Relative performance is specific to the test setup, obviously, because it's based on real data, not estimates.

The relative performance values do change between rebenches, due to drivers, patches, test scene changes, test duration changes and non-GPU hardware changes.

Not 100% sure what exactly you are asking.

@Beertintedgoggles, the RP chart compares ALL GPUs from ALL benchmarks.

If the test platform itself changes from test to test, then some numeric compensation needs to take place in order to list all of the GPUs the way they are currently listed in the RP chart. If the site compared apples to apples and the platforms were static, then it would simply be a matter of listing raw FPS results and the comparison would be clear. However, that isn't the case, so the strength of the RP chart would lie in its ability to quantify and compensate for changes in the testing platform.

If no such formula is in place (something along the lines of Score = Game FPS at release date x platform hardware multiplier x platform OS version multiplier x GPU driver multiplier x game patch multiplier x game settings multiplier), then it would seem that the Relative Performance chart is not only superfluous but downright misleading.
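Written out, the kind of correction the post asks for would look like the sketch below. Every multiplier here is hypothetical: the thread does not describe any such formula being used, and nothing in it says how these factors could be measured.

```python
from math import prod

def adjusted_score(fps_at_release: float, multipliers: dict[str, float]) -> float:
    """Hypothetical normalized score: release-date FPS scaled by one correction
    factor per changed test condition (1.0 means 'no change')."""
    return fps_at_release * prod(multipliers.values())

# Placeholder factors, purely illustrative (not measured values).
factors = {
    "platform_hardware": 1.00,
    "os_version": 1.00,
    "gpu_driver": 1.00,
    "game_patch": 1.00,
    "game_settings": 1.00,
}
print(adjusted_score(60.0, factors))  # 60.0 when nothing changed
```

The multiplication is the easy part; each factor would presumably have to be measured by testing the same card under both the old and the new condition.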


- Joined

- May 14, 2004

- Messages

- 28,770 (3.74/day)


The platform is static for all results in a given review.

What you are looking for isn't possible, not even in theory. Game performance is a function of many factors, none of which can be removed entirely.

Or are you looking for a theoretical FLOPS rating? That doesn't represent real-life performance at all.
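For reference, a theoretical FP32 FLOPS rating is usually computed as shader count x clock x 2, counting a fused multiply-add as two operations. A minimal sketch, using the GTX 1080's published 2560 CUDA cores and 1733 MHz boost clock as the example input:

```python
def theoretical_fp32_tflops(shader_count: int, boost_clock_mhz: float) -> float:
    """Peak FP32 throughput in TFLOPS: each shader is assumed to retire one
    fused multiply-add (2 floating-point operations) per clock."""
    flops = shader_count * boost_clock_mhz * 1e6 * 2
    return flops / 1e12

print(f"{theoretical_fp32_tflops(2560, 1733):.2f} TFLOPS")  # 8.87 TFLOPS
```

The number ignores memory bandwidth, caches, drivers and how a given game actually uses the hardware, which is why it says little about in-game FPS.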


I was merely looking for an explanation of what is already in place, mind. It's still in no way clearer to me how that baffling chart is made in the first place, but I'm clear on the fact that it represents something other than what one might assume, and I would suggest, very very humbly, that a big fat asterisk be added to that chart.

- Joined

- Nov 15, 2005

- Messages

- 1,014 (0.14/day)


Oh... the GPU Database. Sorry, I had assumed you were talking about the reviews (which I guess the database results are based on, but I can see how those might be based on different driver versions and updates). Yeah, although it's an attempt to compare multiple cards and generations, it's maybe not the best or most accurate chart due to those limitations.

- Joined

- Mar 18, 2015

- Messages

- 2,970 (0.80/day)

- Location

- Long Island


Yes, the test parameters will change ... but if you're careful, it shouldn't matter much. Let's say that in any given test the Whoopdedoo 2000 is 20% faster than the Whoopdedoo 1800, but the review you are looking at is for the MSI Whoopdedoo 2000 Gaming X. So in the new review for the MSI card, you see:

MSI Whoopdedoo 2000 Gaming X gets a 100% score.

Reference Whoopdedoo 2000 gets a 95% score.

Reference Whoopdedoo 1800 gets a 79% score.

So from that data, we can easily note that "outta the box":

a) The reference Whoopdedoo 2000 averaged 20.3% faster (95/79) than the Whoopdedoo 1800

b) The MSI Whoopdedoo 2000 Gaming X averaged 5.3% faster (100/95) than the reference Whoopdedoo 2000
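Both ratios come straight from dividing one chart score by another. As a quick check, using the hypothetical Whoopdedoo scores from the post:

```python
# Scores from the hypothetical review chart in the post (percent of the MSI card).
scores = {
    "MSI Whoopdedoo 2000 Gaming X": 100.0,
    "Reference Whoopdedoo 2000": 95.0,
    "Reference Whoopdedoo 1800": 79.0,
}

def percent_faster(a: str, b: str) -> float:
    """How much faster card a is than card b, in percent, from chart scores."""
    return (scores[a] / scores[b] - 1) * 100

print(f"{percent_faster('Reference Whoopdedoo 2000', 'Reference Whoopdedoo 1800'):.1f}%")  # 20.3%
print(f"{percent_faster('MSI Whoopdedoo 2000 Gaming X', 'Reference Whoopdedoo 2000'):.1f}%")  # 5.3%
```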

But you don't know how much faster the MSI Whoopdedoo 2000 Gaming X is than the MSI Whoopdedoo 1800 Gaming X, out of the box or with both overclocked. To find that out...

The MSI Whoopdedoo 2000 Gaming X article will show, some pages later, that the reference card got 60 fps, while the MSI card got 64 fps (+6.7%) "outta the box" and 68.4 fps (+14%) manually overclocked in the overclocking test game.

Now we go back to the MSI Whoopdedoo 1800 Gaming X article from 2 years ago and see that the reference card got 70.0 fps, while the MSI card got 75.6 fps (+8.0%) "outta the box" and 88.9 fps (+27%) manually overclocked in a different overclocking test game.

We can still equate the two cards:

Reference Whoopdedoo 2000 score of 95% x 1.14 = 108.3 for the MSI Gaming version manually overclocked

Reference Whoopdedoo 1800 score of 79% x 1.27 = 100.3 for the MSI Gaming version manually overclocked

Reference Whoopdedoo 2000 score of 95% x 1.067 = 101.4 for the MSI Gaming version outta the box

Reference Whoopdedoo 1800 score of 79% x 1.080 = 85.3 for the MSI Gaming version outta the box
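The scaling step can be written out the same way. This sketch takes each MSI card's uplift over its reference card from the FPS figures in the two (hypothetical) reviews and applies it to the reference card's score on the current chart. It uses the unrounded uplift, so the out-of-the-box Whoopdedoo 2000 result comes out at 101.3 rather than the 101.4 obtained with the rounded 1.067 factor.

```python
def scaled_score(ref_score: float, ref_fps: float, card_fps: float) -> float:
    """Estimate a card's score on the current chart by scaling its reference
    card's score with the uplift measured in the card's own review."""
    return ref_score * (card_fps / ref_fps)

# (reference score on current chart, reference fps, MSI stock fps, MSI OC fps)
cards = {
    "Whoopdedoo 2000": (95.0, 60.0, 64.0, 68.4),
    "Whoopdedoo 1800": (79.0, 70.0, 75.6, 88.9),
}

estimates = {}
for name, (ref_score, ref_fps, stock_fps, oc_fps) in cards.items():
    estimates[name] = (
        scaled_score(ref_score, ref_fps, stock_fps),
        scaled_score(ref_score, ref_fps, oc_fps),
    )
    print(f"MSI {name}: stock {estimates[name][0]:.1f}, OC {estimates[name][1]:.1f}")

new, old = estimates["Whoopdedoo 2000"], estimates["Whoopdedoo 1800"]
print(f"2000 vs 1800 out of the box: +{(new[0] / old[0] - 1) * 100:.0f}%")
print(f"2000 vs 1800 both overclocked: +{(new[1] / old[1] - 1) * 100:.0f}%")
```

With these inputs the newer card leads by about 19% out of the box but only about 8% with both cards overclocked.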

Looking at the above numbers, an upgrade would be pretty easy to justify outta the box... but the extra OC ability of the older generation compared to the current one makes an upgrade significantly less attractive.

I hope I explained the process simply enough. The logic is sound, but there are always variables; I wouldn't expect them to be significant though.

- Joined

- Nov 15, 2005

- Messages

- 1,014 (0.14/day)


Maybe I'm reading more into their inquiry than the OP meant, but I think the point they are making is: what if the Whoopdedoo 2000 gained performance with a driver update? Now the percentage difference no longer aligns with the previous findings, and the MSI Whoopdedoo 1800 Gaming X article from 2 years ago is no longer a straight comparison. How would one go about deciding which relative performance numbers to use in the comparison now? That's why I came to the conclusion that the GPU Database relative performance graphs are nice to look at but are a rather poor excuse for an actual in-depth review where all the cards are on an equal footing (drivers, updates, patches, etc.).

To state the obvious, let me just say that as a simple consumer, and not some hardcore gamer who follows daily GPU-related news, I'd gravitate towards the place that can easily show me the value of a purchase or upgrade and help me make the best-informed choice with less digging around in old articles. At least not until my preference is down to 2-3 options from the list, whereupon further details may be instructive. Plus, it saves me the ridiculous mental guesswork of comparing apples to oranges to penguins and trying to put that in percentages.

That's the value of a good relative performance chart for a guy like me.

Even so, as it is, latest-gen benchmark results only tell me 'these cards are all within a performance range of 200% of each other, and there's one additional penguin between each of them and your GPU from two gens ago, which is absent from this benchmark'.

At best it's indicative; at worst, useless.

That's it.

Excluding OC, there are practically zero unknowns when GPUs are benchmarked purely on performance values. Everything can be accounted for (no build quality, no warranty, no aesthetics, no ergonomics or convenience, not even human-factor parameters, just pure, cold, raw numbers), so it's hardly a holy grail for any site that wants to claim authority in its field.

- Joined

- Jul 2, 2008

- Messages

- 8,104 (1.31/day)

- Location

- Hillsboro, OR

| System Name | Main/DC |
|---|---|
| Processor | i7-3770K/i7-2600K |
| Motherboard | MSI Z77A-GD55/GA-P67A-UD4-B3 |
| Cooling | Phanteks PH-TC14CS/H80 |
| Memory | Crucial Ballistix Sport 16GB (2 x 8GB) LP /4GB Kingston DDR3 1600 |
| Video Card(s) | Asus GTX 660 Ti/MSI HD7770 |
| Storage | Crucial MX100 256GB/120GB Samsung 830 & Seagate 2TB(died) |
| Display(s) | Asus 24' LED/Samsung SyncMaster B1940 |
| Case | P100/Antec P280 It's huge! |
| Audio Device(s) | on board |
| Power Supply | SeaSonic SS-660XP2/Seasonic SS-760XP2 |
| Software | Win 7 Home Premium 64 Bit |

You want buying a GPU made easy? Okay. Buy an MSI "Gaming" or Asus "Strix". Both run cool and quiet, both are known for their build quality, both have a "no questions asked" 3-year warranty, and both look good without RGB.

I don't think you have a clue as to how much work goes into a review.

One of the things we have to learn in life is to take the advice given when we ask for it. If the "hardcore gamer that follows daily GPU related news" tells you to go read, it's because there are so many subjective aspects to GPUs that they want you to figure out what matters to you.

- Joined

- Mar 25, 2009

- Messages

- 9,842 (1.67/day)

- Location

- 04578

| System Name | Old reliable |
|---|---|
| Processor | Intel 8700K @ 4.8 GHz |
| Motherboard | MSI Z370 Gaming Pro Carbon AC |
| Cooling | Custom Water |
| Memory | 32 GB Crucial Ballistix 3666 MHz |
| Video Card(s) | MSI RTX 3080 10GB Suprim X |
| Storage | 3x SSDs 2x HDDs |
| Display(s) | ASUS VG27AQL1A x2 2560x1440 8bit IPS |
| Case | Thermaltake Core P3 TG |
| Audio Device(s) | Samson Meteor Mic / Generic 2.1 / KRK KNS 6400 headset |
| Power Supply | Zalman EBT-1000 |
| Mouse | Mionix NAOS 7000 |
| Keyboard | Mionix |

I don't get what people are bitching about. W1zz generally has multiple generations of GPUs in the article, and they are regularly rebenched with newer drivers so that the data remains consistent. No one else reviews as many GPUs, or rebenches as many, as TechPowerUp does. Considering rebenching takes place periodically with new drivers, the performance delta seldom changes by more than 1-3% overall from what I have seen. Even then, since some games improve and others fall back performance-wise between drivers, it ends up being a wash.
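A toy example of that "wash": the per-game changes below are invented for illustration, not taken from any rebench. It assumes the overall figure is a plain average of the per-game percentage changes, which is a simplification.

```python
# Hypothetical per-game FPS change after a driver update, in percent.
per_game_change = {
    "game_a": +3.0,
    "game_b": -2.0,
    "game_c": +1.5,
    "game_d": -2.5,
    "game_e": +0.5,
}

def overall_change(changes: dict[str, float]) -> float:
    """Overall change in percent: the plain average of the per-game changes."""
    return sum(changes.values()) / len(changes)

largest = max(per_game_change.values(), key=abs)
print(f"largest single-game change: {largest:+.1f}%")
print(f"overall change: {overall_change(per_game_change):+.2f}%")
```

Individual games move by up to 3% here, yet the overall figure shifts by only 0.1%, because the gains and losses largely cancel.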

- Joined

- Sep 17, 2014

- Messages

- 24,004 (6.14/day)

- Location

- The Washing Machine

| System Name | Tiny the White Yeti |
|---|---|
| Processor | 7800X3D |
| Motherboard | MSI MAG Mortar b650m wifi |
| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |
| Memory | 32GB Corsair Vengeance 30CL6000 |
| Video Card(s) | ASRock RX7900XT Phantom Gaming |
| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |
| Display(s) | Gigabyte G34QWC (3440x1440) |
| Case | Lian Li A3 mATX White |
| Audio Device(s) | Harman Kardon AVR137 + 2.1 |
| Power Supply | EVGA Supernova G2 750W |
| Mouse | Steelseries Aerox 5 |
| Keyboard | Lenovo Thinkpad Trackpoint II |
| VR HMD | HD 420 - Green Edition ;) |
| Software | W11 IoT Enterprise LTSC |
| Benchmark Scores | Over 9000 |


Relative performance charts are for establishing a hierarchy of GPUs, not for being some sort of exact science. Like you've said: indicative. And not 'at best', but just that: indicative. Each entry in the list is an indicator of its performance relative to the rest.

Apart from theoretically possible changes in performance, the reality is that most cards get released and re-benched, but I seriously cannot recall ONE card that has actually switched places in a GPU hierarchy chart. And when they do, we're not talking about stock vs. stock but stock vs. OC, which is irrelevant to this discussion altogether. Very recently, a TPU member tested how much performance changed across a range of Nvidia drivers on Kepler. Conclusion: nearly within the margin of error for most individual games, and negligible when averaged across all of them.
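Whether a rebench actually reshuffles the hierarchy can be checked mechanically: sort both sets of scores and look for pairs whose order flipped. The card names and scores below are placeholders, not TechPowerUp results.

```python
def hierarchy(scores: dict[str, float]) -> list[str]:
    """Cards ordered from fastest to slowest."""
    return sorted(scores, key=scores.get, reverse=True)

def swapped_pairs(before: dict[str, float], after: dict[str, float]) -> list[tuple[str, str]]:
    """Pairs of cards whose relative order differs between two rebenches."""
    cards = hierarchy(before)
    return [
        (a, b)
        for i, a in enumerate(cards)
        for b in cards[i + 1:]
        if after[a] < after[b]  # a was ahead of b before, now behind
    ]

# Placeholder relative-performance scores from two hypothetical rebenches.
before = {"card_x": 100.0, "card_y": 87.0, "card_z": 71.0}
after = {"card_x": 100.0, "card_y": 89.0, "card_z": 70.0}

print(hierarchy(after))
print(swapped_pairs(before, after))
```

Scores shifting by a couple of points, as here, leave the order untouched; only a change larger than the gap between neighbouring cards would show up as a swapped pair.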

You're making far too much of that RP chart; it was never intended to be a tool that allows you to avoid any sort of reading. If you want info, you'll need to get into it; it's that simple. If you want a ballpark idea, the RP chart is there for you and does a perfect job at that. Your example of a simple consumer who wants something is an example of a lazy consumer who wants everything handed to them on a silver platter. And if I'm honest, we need LESS of those people, not to accommodate and encourage more of them.

TL;DR: stop obsessing over it.
