- Joined: Aug 13, 2010
- Messages: 5,521 (1.02/day)

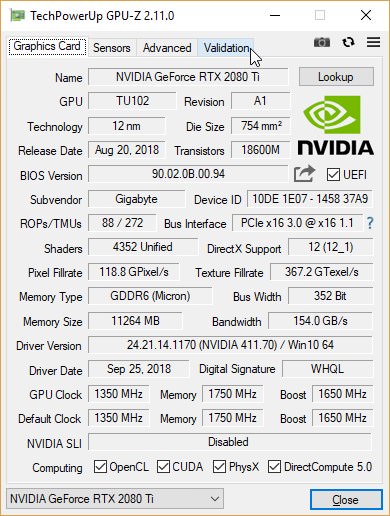

Hi w1z, seems like this RTX 2080 turned into an 8800GT

| Processor | Intel i5-12600k |
|---|---|
| Motherboard | Asus H670 TUF |
| Cooling | Arctic Freezer 34 |
| Memory | 2x16GB DDR4 3600 G.Skill Ripjaws V |
| Video Card(s) | EVGA GTX 1060 SC |
| Storage | 500GB Samsung 970 EVO, 500GB Samsung 850 EVO, 1TB Crucial MX300 and 2TB Crucial MX500 |
| Display(s) | Dell U3219Q + HP ZR24w |
| Case | Raijintek Thetis |
| Audio Device(s) | Audioquest Dragonfly Red :D |
| Power Supply | Seasonic 620W M12 |
| Mouse | Logitech G502 Proteus Core |
| Keyboard | G.Skill KM780R |
| Software | Arch Linux + Win10 |

| System Name | Cabal |
|---|---|
| Processor | intel i9 9900k @ 5.0ghz 1.33v - cache @ 4.7ghz |
| Motherboard | Asrock z370 fatal1ty gaming k6 |
| Cooling | Corsair H115i with 2x Corsair LL140mm rgb fans |
| Memory | Corsair Dominator Platinum 32GB(4x8kit) ddr4 4000mhz@4100mhz |
| Video Card(s) | Msi rtx 5070ti Gaming OC 16gb |
| Storage | samsung evo 860 500gbx2, sandisk 3d ultra 500gbx2, kingston hyperX ssd 480gb, Seagate Barracuda3TB |
| Display(s) | Asus ROG Swift pg278q G-Sync |
| Case | Corsair 760t Graphite Series with 3x Corsair LL140mm fans |
| Audio Device(s) | Sound Blaster X ae-5 |
| Power Supply | Corsair RM850i |
| Mouse | Roccat Tyon |
| Keyboard | Corsair RGB Strafe mechanical keyboard |
| Software | win10pro 64bit |

Yeah, I noticed it too, very curious... Maybe GPU-Z needs an update, or maybe it is like bug said...

No way it's like I said; I was just poking fun.

| System Name | Gaming PC / I7 XEON |
|---|---|
| Processor | I7 4790K @stock / XEON W3680 @ stock |
| Motherboard | Asus Z97 MAXIMUS VII FORMULA / GIGABYTE X58 UD7 |
| Cooling | X61 Kraken / X61 Kraken |
| Memory | 32gb Vengeance 2133 Mhz / 24gb Corsair XMS3 1600 Mhz |
| Video Card(s) | Gainward GLH 1080 / MSI Gaming X Radeon RX480 8 GB |
| Storage | Samsung EVO 850 500gb, 3 tb seagate, 2 samsung 1tb in raid 0 / Kingdian 240 gb, megaraid SAS 9341-8 |
| Display(s) | 2 BENQ 27" GL2706PQ / Dell UP2716D LCD Monitor 27" |
| Case | Corsair Graphite Series 780T / Corsair Obsidian 750 D |
| Audio Device(s) | ON BOARD / ON BOARD |
| Power Supply | Sapphire Pure 950w / Corsair RMI 750w |
| Mouse | Steelseries Sensei / Steelseries Sensei raw |
| Keyboard | Razer BlackWidow Chroma / Razer BlackWidow Chroma |
| Software | Windows 10 64bit PRO / Windows 10 64bit PRO |

| System Name | 4K-gaming / console |
|---|---|
| Processor | 5800X @ PBO +200 / i5-8600K @ 4.6GHz |
| Motherboard | ROG Crosshair VII Hero / ROG Strix Z370-F |
| Cooling | Eisbaer 360 + EK Vector TUF + Acool ST30 240mm + Swiftech res + EK-XTOP SPC-60 / Eisbaer 240 + DCC |
| Memory | 32GB DDR4-3466 / 16GB DDR4-3600 |
| Video Card(s) | Asus RTX 3080 TUF / Powercolor RX 6700 XT |
| Storage | 3TB of SSDs / several small SSDs |
| Display(s) | 4K120 IPS + 4K60 IPS / 1080p projector @ 90" |
| Case | Corsair 4000D AF White / DeepCool CC560 WH |
| Audio Device(s) | Sony WH-CH720N / Hecate G1500 |
| Power Supply | EVGA G2 750W / Seasonic FX-750 |
| Mouse | Razer Basilisk / Ajazz i303 Pro |
| Keyboard | Roccat Vulcan 121 AIMO / Obinslab Anne 2 Pro |
| VR HMD | Oculus Rift CV1 |
| Software | Windows 11 Pro / Windows 11 Pro |
| Benchmark Scores | They run Crysis |

| System Name | Firelance. |
|---|---|
| Processor | Threadripper 3960X |
| Motherboard | ROG Strix TRX40-E Gaming |
| Cooling | IceGem 360 + 6x Arctic Cooling P12 |
| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |
| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |
| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |
| Display(s) | Dell S3221QS(A) (32" 38x21 60Hz) + 2x AOC Q32E2N (32" 25x14 75Hz) |
| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |
| Power Supply | Fractal Design Ion+ 2 Platinum 760W |
| Mouse | Logitech G604 |
| Keyboard | Razer Pro Type Ultra |
| Software | Windows 10 Professional x64 |

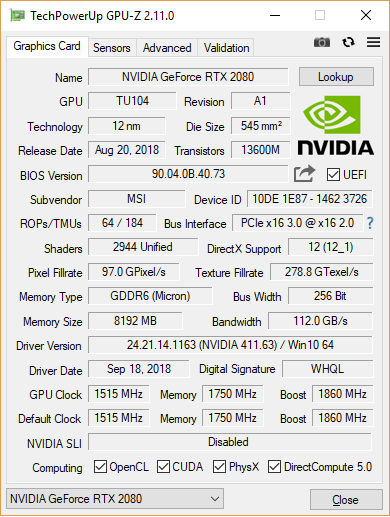

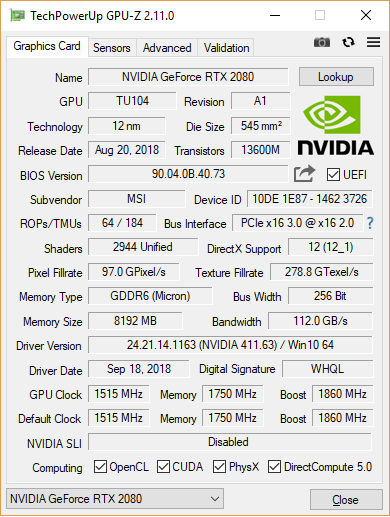

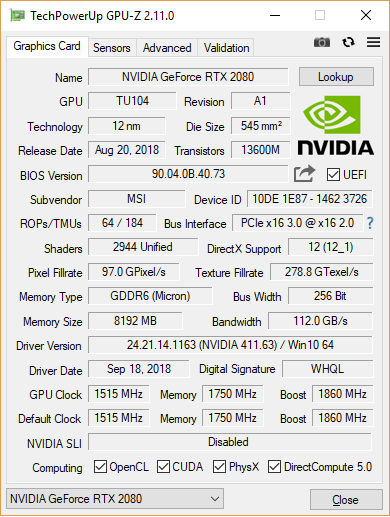

The formula for calculating memory bandwidth is (bus width in bits / 8) * (memory clock speed) * (memory type bits per cycle). So for a stock RTX 2080 that's (256 / 8) * 1750 * 8 = 448 000 MB/s = 448 GB/s. My guess is that @W1zzard's calc for memory doesn't know about GDDR6 (or isn't detecting it correctly) and is thus defaulting to standard DDR, which has only 2 bits/cycle versus GDDR6, which has 8 bits/cycle.

All the GPU-Z screenshots in the RTX 2080/2080 Ti reviews have the same issue... Wiz is gonna have to redo all of those once he fixes this bug!
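For anyone who wants to check the arithmetic, here is a minimal Python sketch of the formula quoted above. The function name and the lookup table are made up for illustration, and the bits-per-cycle values are simply the ones mentioned in this thread; none of this is taken from GPU-Z itself. The last line shows what the number would look like if the guess about falling back to plain DDR were right.

```python
# Bits transferred per memory clock per pin, as quoted in this thread.
# Illustrative table only; not taken from GPU-Z's source.
BITS_PER_CYCLE = {"DDR": 2, "GDDR5": 4, "GDDR5X": 8, "GDDR6": 8}


def memory_bandwidth_mb_s(bus_width_bits, memory_clock_mhz, memory_type):
    """Return memory bandwidth in MB/s using the formula from the post."""
    bytes_per_transfer = bus_width_bits / 8  # bus width in bytes
    return bytes_per_transfer * memory_clock_mhz * BITS_PER_CYCLE[memory_type]


# Stock RTX 2080: 256-bit bus, 1750 MHz memory clock, GDDR6.
rtx_2080 = memory_bandwidth_mb_s(256, 1750, "GDDR6")

# Same card if the calculation wrongly treated the memory as plain DDR
# (this is only the poster's guess about the cause of the bug).
ddr_fallback = memory_bandwidth_mb_s(256, 1750, "DDR")

print(rtx_2080 / 1000)      # 448.0 GB/s
print(ddr_fallback / 1000)  # 112.0 GB/s
```

Under that assumption the reported figure would be off by exactly a factor of four, since 8 / 2 = 4.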

So in a nutshell, this means that it's quadruple like GDDR5?

| System Name | My PC |
|---|---|
| Processor | 4670K@4.4GHz |
| Motherboard | Gryphon Z87 |
| Cooling | CM 212 |
| Memory | 2x8GB+2x4GB @2400MHz |
| Video Card(s) | XFX Radeon RX 580 GTS Black Edition 1425MHz OC+, 8GB |
| Storage | Intel 530 SSD 480GB + Intel 510 SSD 120GB + 2x500GB hdd raid 1 |
| Display(s) | HP envy 32 1440p |
| Case | CM Mastercase 5 |
| Audio Device(s) | Sbz ZXR |
| Power Supply | Antec 620W |
| Mouse | G502 |
| Keyboard | G910 |
| Software | Win 10 pro |

Nope, octuple. GDDR5 is 4 bits/cycle, which is why the GTX 1070 needs to run its 256-bit GDDR5 at 2 GHz to reach "only" 256 GB/s of bandwidth, while the RTX 2080's 256-bit GDDR6 purrs along at a much lower 1.75 GHz yet reaches 448 GB/s, almost double the 1070's bandwidth.

On the other hand, GDDR5X (which GDDR6 is essentially the successor of) is also 8 bits/cycle, which is why the GTX 1080 can get 320 GB/s of bandwidth (far higher than the GTX 1070) at only a 1250 MHz memory clock (far lower).

Ah, ok. It's always nice to be a little wiser.
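To see why the bits-per-cycle figure matters so much, the same formula can be turned around to ask what memory clock a 256-bit card would need for a given bandwidth. This is only a sketch: the function name is invented here, and the card figures are the ones quoted in this thread.

```python
def required_clock_mhz(target_gb_s, bus_width_bits, bits_per_cycle):
    """Memory clock (MHz) needed to hit a target bandwidth in GB/s."""
    bytes_per_transfer = bus_width_bits / 8
    return target_gb_s * 1000 / (bytes_per_transfer * bits_per_cycle)


# Clocks quoted in the thread fall straight out of the formula:
print(required_clock_mhz(256, 256, 4))  # 2000.0 (GTX 1070, GDDR5)
print(required_clock_mhz(320, 256, 8))  # 1250.0 (GTX 1080, GDDR5X)
print(required_clock_mhz(448, 256, 8))  # 1750.0 (RTX 2080, GDDR6)

# Hypothetical: 448 GB/s on a 256-bit bus with 4 bits/cycle memory.
print(required_clock_mhz(448, 256, 4))  # 3500.0
```

In other words, by this formula a 4 bits/cycle memory type would need double the memory clock to match the RTX 2080's bandwidth on the same bus width.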

| Processor | Ryzen 7 5700X |
|---|---|
| Memory | 48 GB |
| Video Card(s) | RTX 4080 |
| Storage | 2x HDD RAID 1, 3x M.2 NVMe |
| Display(s) | 30" 2560x1600 + 19" 1280x1024 |
| Software | Windows 10 64-bit |

Just a calculation bug, will be fixed in the next release.

*next build multiplies all bandwidths 4 times*

| System Name | Tiny the White Yeti |
|---|---|
| Processor | 7800X3D |
| Motherboard | MSI MAG Mortar b650m wifi |
| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |
| Memory | 32GB Corsair Vengeance 30CL6000 |
| Video Card(s) | ASRock RX7900XT Phantom Gaming |
| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |
| Display(s) | Gigabyte G34QWC (3440x1440) |
| Case | Lian Li A3 mATX White |
| Audio Device(s) | Harman Kardon AVR137 + 2.1 |
| Power Supply | EVGA Supernova G2 750W |
| Mouse | Steelseries Aerox 5 |
| Keyboard | Lenovo Thinkpad Trackpoint II |
| VR HMD | HD 420 - Green Edition ;) |
| Software | W11 IoT Enterprise LTSC |
| Benchmark Scores | Over 9000 |

Oh man, I feel sorry for you. Wasted money, eh?

Not a dime (media), but thanks for the care.

| Benchmark Scores | Faster than yours... I'd bet on it. :) |
|---|---|

| System Name | The beast and the little runt. |
|---|---|
| Processor | Ryzen 5 5600X - Ryzen 9 5950X |
| Motherboard | ASUS ROG STRIX B550-I GAMING - ASUS ROG Crosshair VIII Dark Hero X570 |
| Cooling | Noctua NH-L9x65 SE-AM4a - NH-D15 chromax.black with IPPC Industrial 3000 RPM 120/140 MM fans. |
| Memory | G.SKILL TRIDENT Z ROYAL GOLD/SILVER 32 GB (2 x 16 GB and 4 x 8 GB) 3600 MHz CL14-15-15-35 1.45 volts |
| Video Card(s) | GIGABYTE RTX 4060 OC LOW PROFILE - GIGABYTE RTX 4090 GAMING OC |
| Storage | Samsung 980 PRO 1 TB + 2 TB - Samsung 870 EVO 4 TB - 2 x WD RED PRO 16 GB + WD ULTRASTAR 22 TB |
| Display(s) | Asus 27" TUF VG27AQL1A and a Dell 24" for dual setup |
| Case | Phanteks Enthoo 719/LUXE 2 BLACK |
| Audio Device(s) | Onboard on both boards |
| Power Supply | Phanteks Revolt X 1200W |
| Mouse | Logitech G903 Lightspeed Wireless Gaming Mouse |
| Keyboard | Logitech G910 Orion Spectrum |
| Software | WINDOWS 10 PRO 64 BITS on both systems |
| Benchmark Scores | See more about my 2 in 1 system here: kortlink.dk/2ca4x |

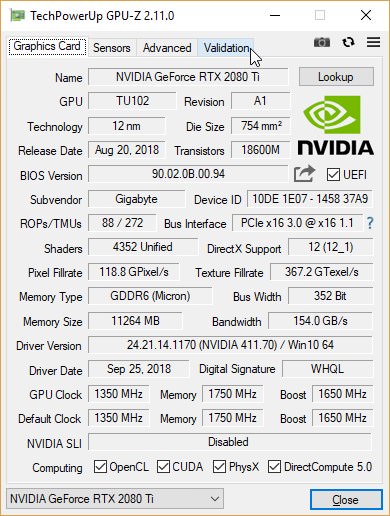

Also here's one from an RTX 2080 Ti.

That's the same texture fill rate I see with a 2080.

W1zz, GPU-Z has to be fixed to suit RTX cards.

The base clock slot became extremely irrelevant this time around.

Having default and current is fine when it is accurate. It isn't, as of 2.11.0.

No mention of RT or Tensor cores in any way.

Boost is just the official figure, and while it is in the same realm as Intel's CPU boost clocks, the numbers have not been relevant since the GTX 900 series four years ago.

Maybe it's time to move those slots to real-time readings? Or at least show real-time vs. official in the box below.

No RTX 2080 Ti is going to operate at a 1350 MHz core clock under 3D load.

Stuff has to change.

Oh, good. For a moment there I was worried we ran out of people that can tell others what they need to do.