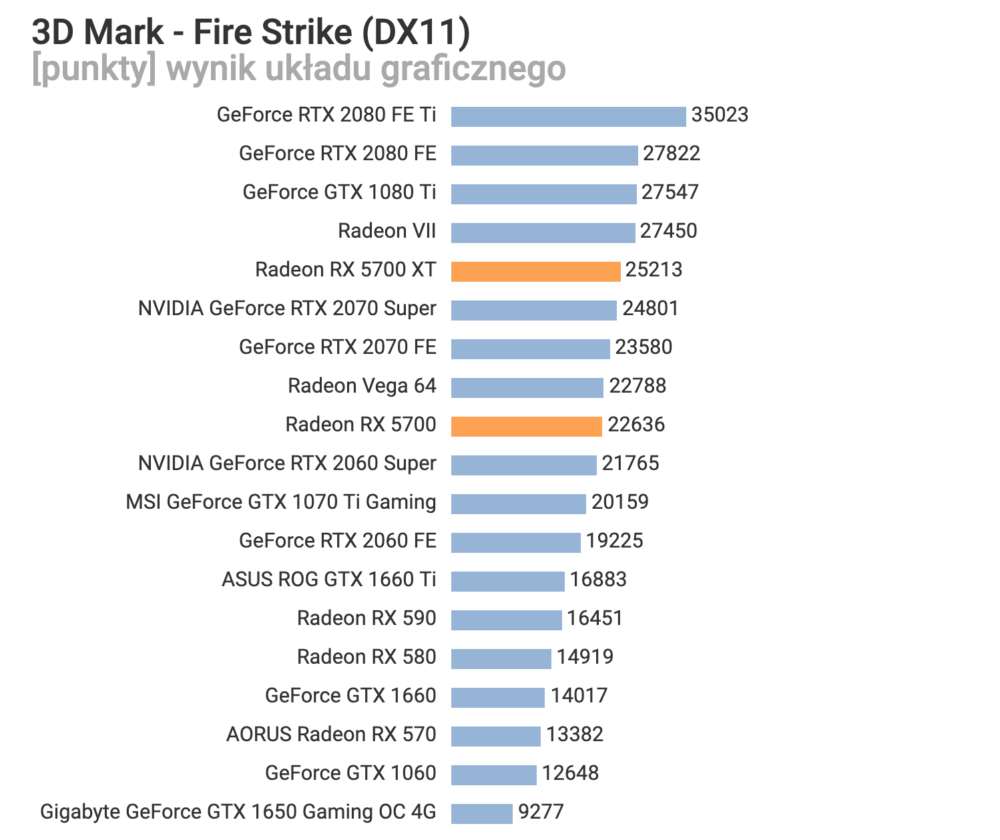

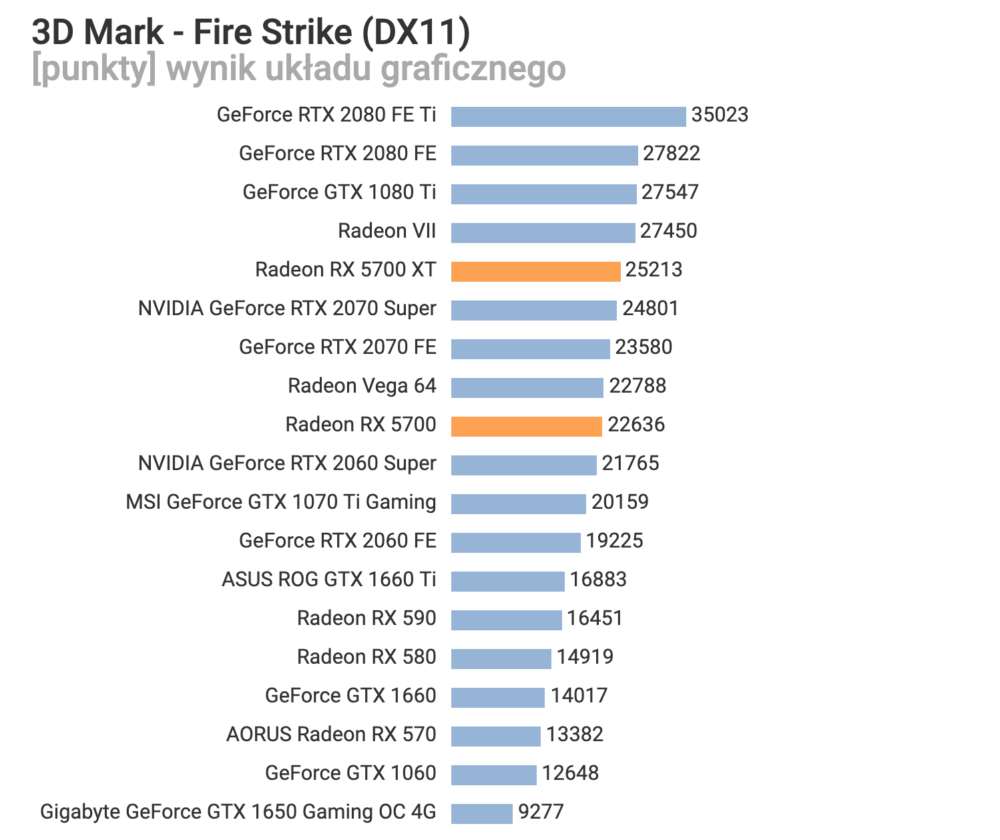

You see how unfair your table is? I mean, it's not really your table, it's NVIDIA's trick.

The GTX1080Ti is slower than the RTX2080 FE. Why? Because with Turing, NVIDIA raised the Founders Edition clock by 90MHz, and those results get used in hundreds of comparisons, while other people get the much slower reference models from other brands. And they didn't forget to charge extra for it compared to the reference models from all the other companies.

Without exactly that trick, NVIDIA would not have been able to present the RTX2080 as faster than the GTX1080Ti.

They need this trick to present the RTX2080 as faster than the GTX1080Ti, and they need the same trick to hide the tragically low improvement between the GTX1080Ti and its successor, the RTX2080 Ti.

Without the trick the difference would be less than 20%, and they asked $300-350 more.

If NVIDIA raised the Founders Edition clock by 90MHz, why can't people with a Pascal GTX1080Ti overclock their GPU by 90MHz too and cancel out NVIDIA's trick?

Because if you show any factory-overclocked GTX1080Ti model, the results are different.

My GTX1080Ti gets a Graphics score of 10,300 at factory frequency.

NVIDIA raised the Founders Edition frequency to show better results, and that causes a higher failure rate and problems for significantly more people than in previous years.

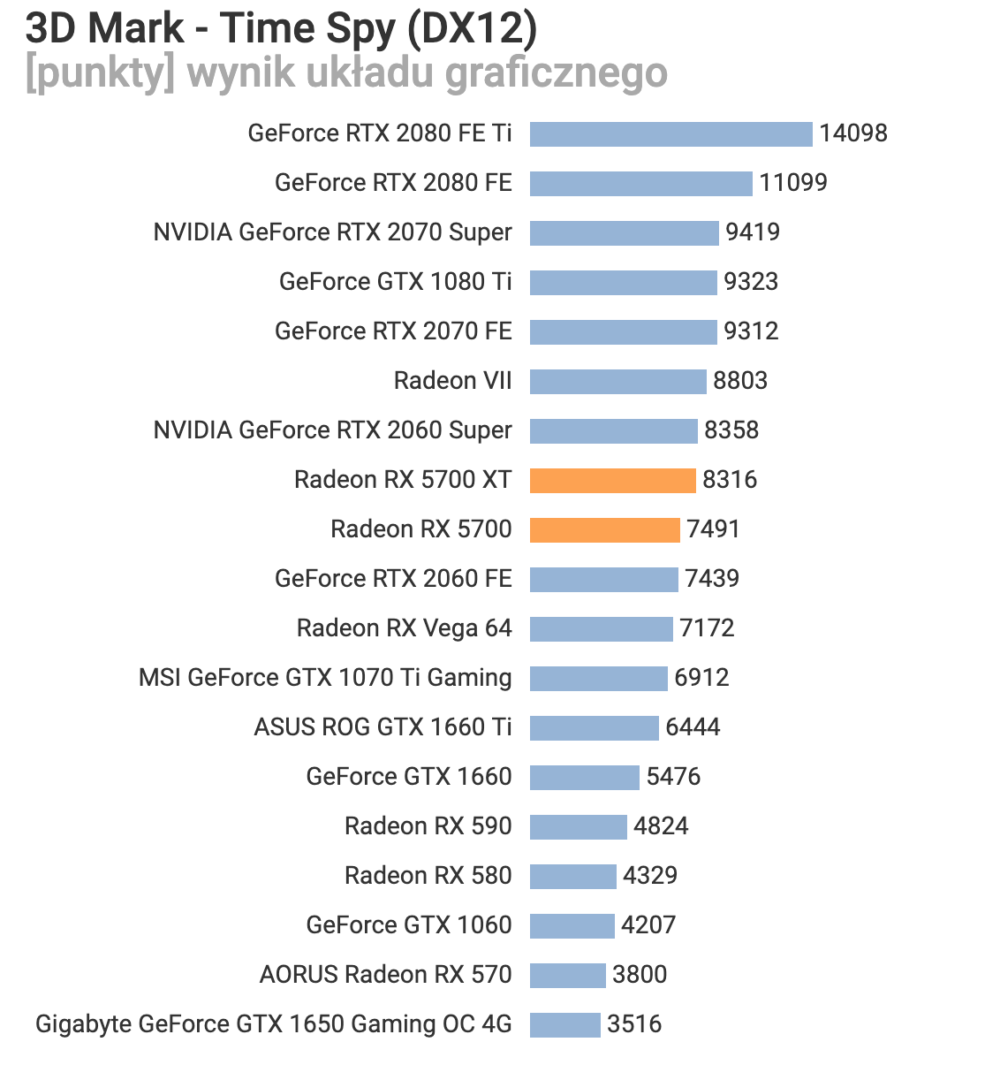

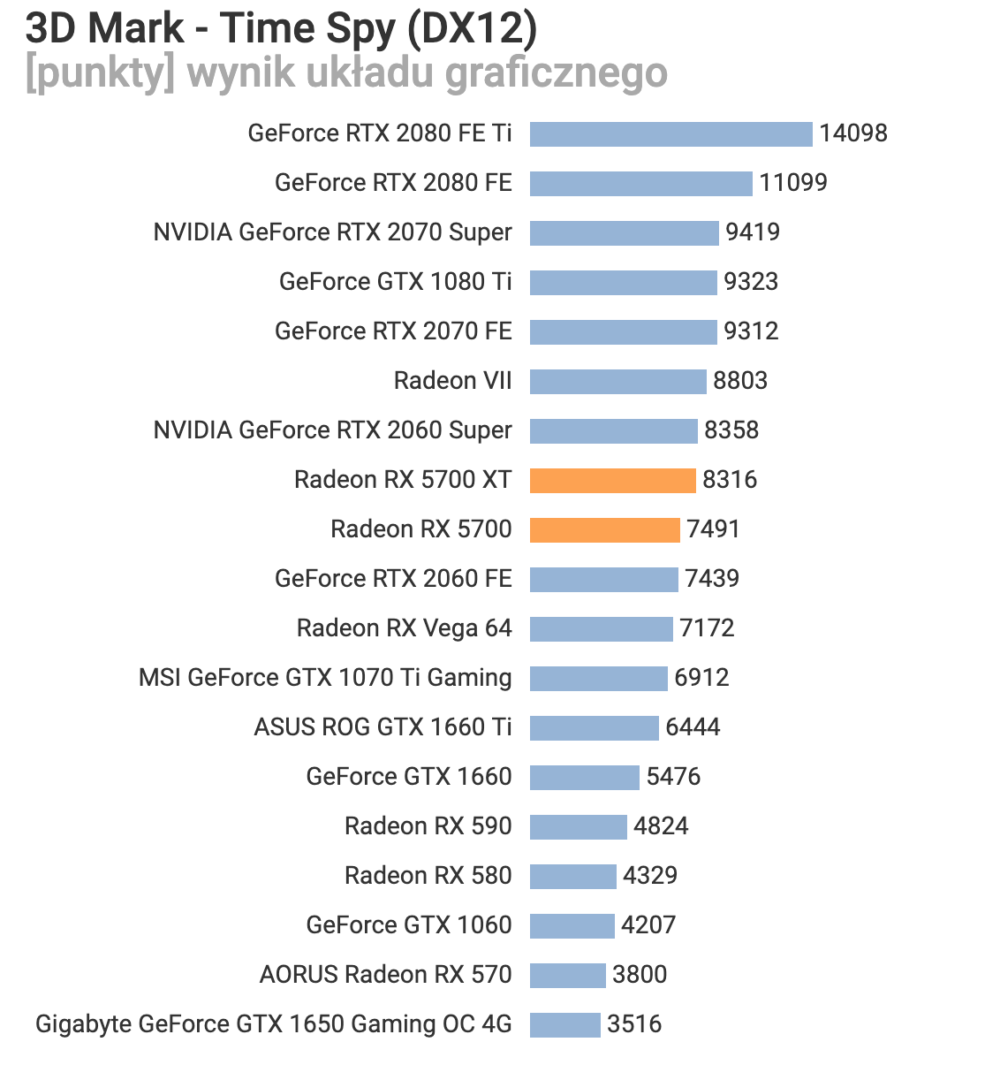

Manipulation of 3DMark results and playing with numbers between similar cards has reached epic proportions.

There is no official reference score for a new generation and no average results. The numbers differ from source to source, and companies use them to show that a new GPU is a little better than the previous one even when the two are the same. And in the end customers are always disappointed with the improvement. When you play for 2 years on one GPU and replace it with one that is 15% better, you feel nothing if you play at 4K resolution and high details, where every GPU struggles to give 50-60fps in the most graphically demanding games. Only above 30-40% do you start to feel a little improvement.
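To put rough numbers on that, here is a quick Python sketch. It assumes frame rate scales linearly with the percentage uplift, which real games won't follow exactly, and the function name `fps_after_upgrade` is just made up for illustration.

```python
def fps_after_upgrade(baseline_fps, uplift_percent):
    """Estimated frame rate after an upgrade.

    Assumption: fps scales linearly with the stated uplift.
    """
    return baseline_fps * (1 + uplift_percent / 100)


# Baselines and uplifts taken from the post: 50-60fps at 4K, 15% vs 30-40%.
for baseline in (50, 60):
    for uplift in (15, 30, 40):
        new_fps = fps_after_upgrade(baseline, uplift)
        gain = new_fps - baseline
        print(f"{baseline} fps + {uplift}% -> {new_fps:.1f} fps (+{gain:.1f})")
```

Under that assumption a 15% uplift on 50fps is only about 7-8 extra frames per second, while 30-40% is roughly 15-20.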

videocardz.com
