Deleted member 50521

Guest

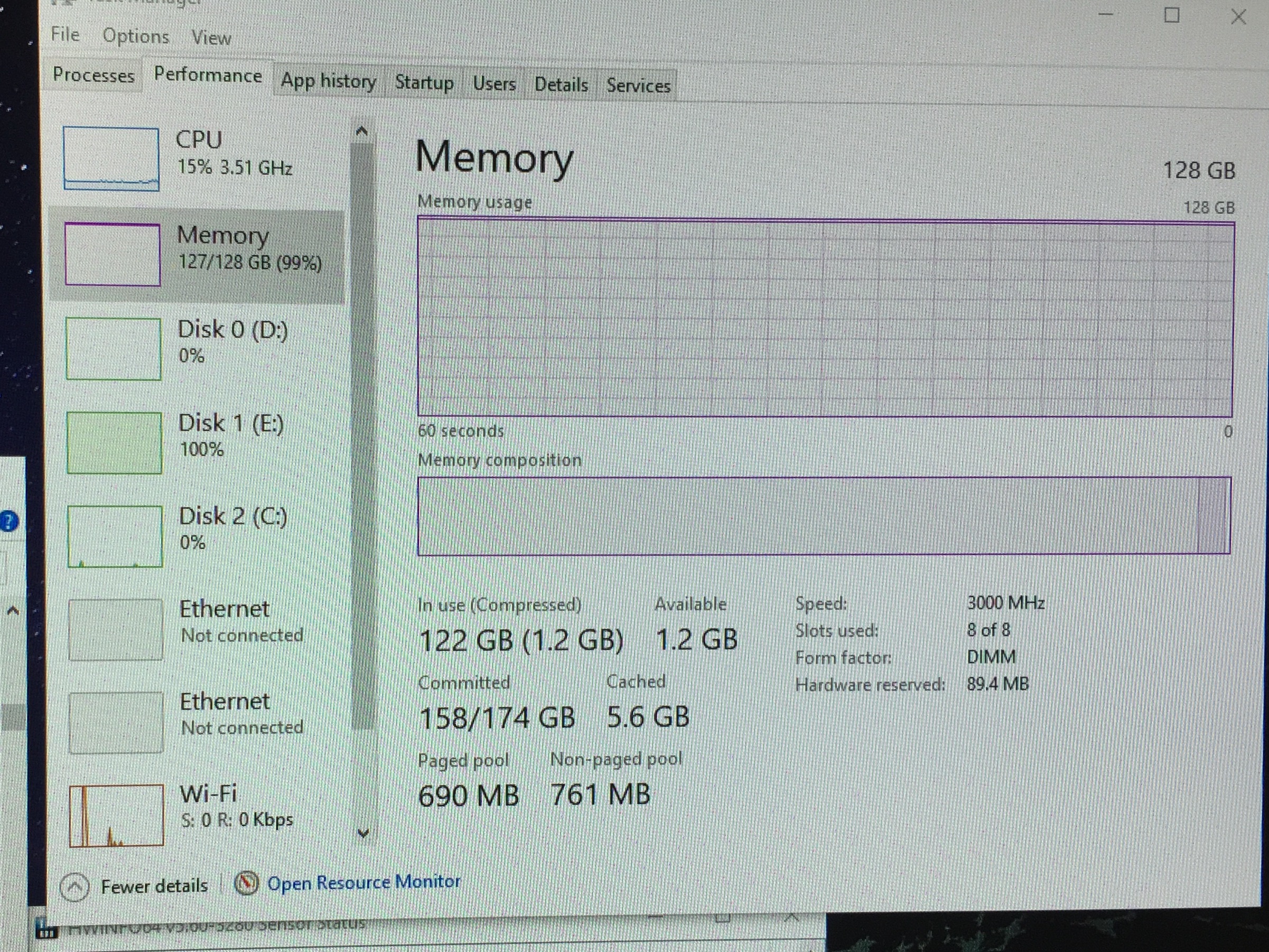

I'm running some of my work on my PC, and RAM usage is very close to the limit. The system has now become unresponsive. My data is stored on an HDD, and I set up a 256 GB page file on my NVMe SSD, but somehow the page file drive is not seeing any activity. The system has not hit a BSOD, and I can hear the disk operating, but no mouse movement or any other input registers on screen. This is a fairly important piece of work that has been running for 3 days. Is this kind of unresponsive behavior normal once RAM fills up?

The C drive is where I put my page file. You can see there is 0 activity.
