
Temperature Spikes Reported on Intel's Core i7-7700, i7-7700K Processors

- Thread starter Raevenlord

- Start date

- Joined

- Sep 24, 2014

- Messages

- 1,269 (0.33/day)

- Location

- West Bromwich UK

| System Name | El Calpulator |

|---|---|

| Processor | AMD Ryzen R7 7800X3D |

| Motherboard | AORUS X870E Elite WiFi 7 |

| Cooling | ArcticCooling Freezer 3 360ARGB AIO |

| Memory | 32GB Corsair Vengance 6000Mhz C30 |

| Video Card(s) | MSI RTX 4080 Gaming Trio X @ 2925 / 23500 mhz |

| Storage | 5TB nvme SSD + Synology DS115j NAS with 4TB HDD |

| Display(s) | Samsung G8 34" QD-OLED + Samsung 28" 4K 60hz UR550 |

| Case | Antec Flux+ 6 ARCTIC P14 PWM PST A-RGB |

| Audio Device(s) | Marantz PM4001+Fosi P4+2xPolk XT60 |

| Power Supply | be quiet! Pure Power 12 M 1000W ATX 3.0 80+ Gold |

| Mouse | Logitech G502X Plus LightSpeed Hero Wireless plus Logitech G POWERPLAY Wireless Charging Mouse Pad |

| Keyboard | Logitech G915 LightSpeed Wireless |

| Software | Win 11 Pro |

| Benchmark Scores | Just enough |

My post was referring to the graphs posted.

Also please understand that heat output increases exponentially when you increase clocks and voltages, so of course a chip running slower, at lower voltage and without hyperthreading will probably run cooler.

Bear in mind that I have a better cooler now. I know that this is the i7 and has more voltage and higher speed, but still, I had a worse cooler.

- Joined

- Apr 30, 2006

- Messages

- 1,181 (0.17/day)

| Processor | 7900 |

|---|---|

| Motherboard | Rampage Apex |

| Cooling | H115i |

| Memory | 64GB TridentZ 3200 14-14-14-34-1T |

| Video Card(s) | Fury X |

| Case | Corsair 740 |

| Audio Device(s) | 8ch LPCM via HDMI to Yamaha Z7 Receiver |

| Power Supply | Corsair AX860 |

| Mouse | G903 |

| Keyboard | G810 |

| Software | 8.1 x64 |

I looked up the vcore voltage specs on the white paper and it says 1.52 volts. Am I reading this right??

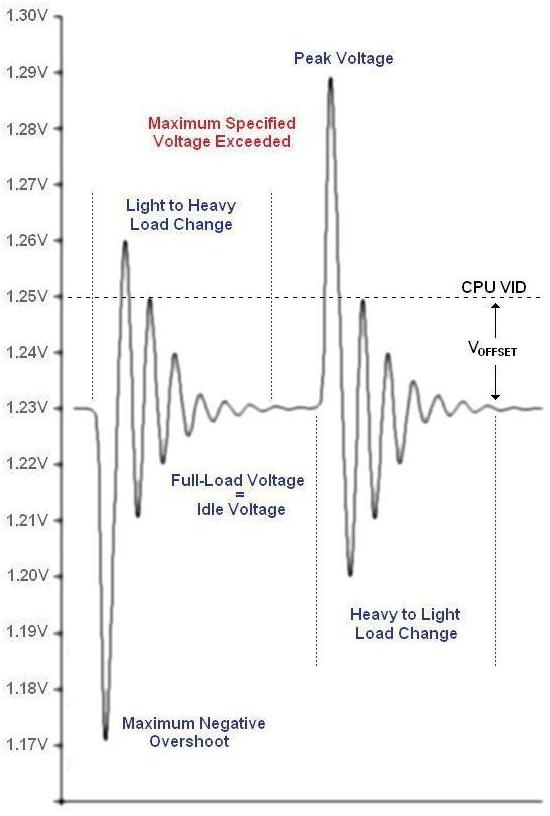

That's the absolute maximum voltage, which includes spikes. Switching from high load to idle can cause the voltage to spike well above the VID.

- Joined

- Nov 4, 2005

- Messages

- 12,184 (1.71/day)

| System Name | Compy 386 |

|---|---|

| Processor | 7800X3D |

| Motherboard | Asus |

| Cooling | Air for now..... |

| Memory | 64 GB DDR5 6400Mhz |

| Video Card(s) | 7900XTX 310 Merc |

| Storage | Samsung 990 2TB, 2 SP 2TB SSDs, 24TB Enterprise drives |

| Display(s) | 55" Samsung 4K HDR |

| Audio Device(s) | ATI HDMI |

| Mouse | Logitech MX518 |

| Keyboard | Razer |

| Software | A lot. |

| Benchmark Scores | Its fast. Enough. |

CIV and many other games use a frame cap, as they use the finishing of one frame to start the physics or AI calculations of the next frame.

Any game supports 240 Hz, and it is miles better than 60 Hz or 144 Hz, especially for e-sports, period. You really want to know the percentage of gamers that use such high resolutions? Go to the Steam stats and check for yourself. Oh yeah, I know that in 3 years many gamers will be using 4K, but in 3 years you will also have much better CPUs. And there isn't such a thing as "Ryzen being better than Intel at high resolutions"; it is all within the margin of error, because the GPU is strained under those conditions, not the CPU. You would probably get the same results in 90% of games with an i3 + GTX 1070 at 4K.

You didn't get it. I'm not saying I or anyone else plays at 640x480, but if a CPU can provide 30% more frames, that can mean the difference between 240 fps and 180 fps at 1080p and medium graphics settings with a good GPU. That's the whole point of these Hardocp benchmarks: they give you an idea of the real percentage difference between the CPUs in game engines.
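To put the fps figures above into frame-time terms, here is a small sketch. The helper names are hypothetical, and the only inputs are the 180 fps and 240 fps numbers from the post.

```python
def frame_time_ms(fps):
    """Time budget per frame, in milliseconds."""
    return 1000.0 / fps


def percent_more_frames(fps_a, fps_b):
    """How much higher fps_a is than fps_b, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


for fps in (180, 240):
    print(f"{fps} fps -> {frame_time_ms(fps):.2f} ms per frame")

print(f"{percent_more_frames(240, 180):.1f}% more frames")
```

Going from 180 to 240 fps works out to roughly a third more frames, or a bit under 1.4 ms saved per frame.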

As for Ryzen and CS GO, you are wrong again. A 7700K at 4.6 GHz provides 350-400 fps in any condition, while on Ryzen you are in the 200s, which is low for CS GO e-sports. An overclocked 7600K is better for CS than Ryzen. Now talk about "the future of multi-threaded games" that no one cares about. CS GO is the 2nd most played game on PC and in e-sports, and will remain like that.

You need to open your minds and understand that each case is different. Ryzen might be fine for you, but Intel is better at 240 Hz competitive gaming, period. Whether you despise that kind of experience is a different story. So just don't come and say that everyone should get a Ryzen because Intel is not worth it, etc. We all want different things from our PCs.

If someone wants e-sports, why would this person buy a CPU that is better for Adobe suites, Blender and streaming? If someone wants productivity, why would he buy a higher-priced quad-core, 8-thread CPU? Simple maths. Keep being ignorant.

Lastly, there are numerous reports that Ryzen is better at preventing lag and hiccups noticed on Intel setups.

- Joined

- Mar 22, 2011

- Messages

- 214 (0.04/day)

- Location

- USA

| System Name | Liquid 2022 |

|---|---|

| Processor | Intel i7-12700k |

| Motherboard | Asus Strix Z690-A GAMING WIFI D4 |

| Cooling | Custom loop with 9x120mm radiator area |

| Memory | Team 16GB (2x8GB) DDR4@4133 C18-18-18 |

| Video Card(s) | Nvidia GeForce RTX 4090 on Heatkiller block |

| Storage | 10TB SSD: Samsung 970 PRO 512GB (OS), Samsung 980 PRO 2TB, ADATA SX8200 PRO 2TB/500GB, 4TB/1TB MX500 |

| Display(s) | Samsung 34" G85SB OLED, Samsung Odyssey 21:9 |

| Case | Phanteks ENTHOO 719 (grey) |

| Audio Device(s) | Creative Sound BlasterX AE-5, Logitech Z906 5.1 speaker system |

| Power Supply | Cooler Master V1200, custom sleeved white cables |

| Mouse | Logitech G502 |

| Keyboard | Corsair K70 Lux RGB |

| Software | Windows 10 Pro 64-bit (maybe 11 soon?) |

I think what's going on is that some of these motherboard BIOSes overvolt the CPU. For example, my board at load feeds 1.35 V to the 7600K at stock, and while encoding videos temps were in the mid 80s. If you're concerned about temps, the steps to take are: upgrade the BIOS, manually dial in vcore at 1.2 V ...

This is what I did with my MB and it greatly reduced my CPU temperature and eliminated spikes.

The motherboard manufacturers are trying to squeeze extra performance out of their boards in order to differentiate themselves from their competition and it's causing some erratic CPU behavior.
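A rough sanity check on why dropping vcore helps so much: to a first approximation, dynamic CPU power scales with voltage squared times frequency. The sketch below applies that textbook approximation to the 1.35 V and 1.2 V figures from the post above. The function name is made up for illustration, the clock is assumed to stay the same, and leakage is ignored, so treat the result as a ballpark only.

```python
def relative_dynamic_power(v_old, v_new, f_old=1.0, f_new=1.0):
    """Ratio of new to old dynamic power using P ~ C * V^2 * f.

    First-order approximation: the capacitance C cancels out, and
    leakage (which also depends on voltage and temperature) is ignored.
    """
    return (v_new / v_old) ** 2 * (f_new / f_old)


# Voltages from the post: 1.35 V on auto versus 1.2 V set manually.
# Assumption: the clock speed is the same in both cases.
ratio = relative_dynamic_power(v_old=1.35, v_new=1.20)
print(f"Dynamic power at 1.2 V is about {ratio:.0%} of the 1.35 V figure")
```

Under those assumptions the manual setting cuts dynamic power by roughly a fifth, which fits the lower temperatures reported.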

- Joined

- Dec 31, 2009

- Messages

- 19,396 (3.45/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

Didn't read one post... are these AVX/FMA instructions doing it? I don't get what the story is from the OCCT graphs and lack of direct links...

@fullinfusion

@newtekie1

@Raevenlord


- Joined

- Jul 13, 2005

- Messages

- 369 (0.05/day)

| Processor | 7700 Kaby "baeby" @ 4.5 Ghz |

|---|---|

| Motherboard | ASUS MAXIMUS FORMULA IX |

| Cooling | Corsair H100i |

| Memory | 16 GB |

| Video Card(s) | EVGA 980 Ti SC+ |

| Storage | EVO 512 gb |

| Display(s) | 240 Hz |

| Case | ANTEC Twelve Hundred |

| Power Supply | EVGA 1300 Supernova |

| Mouse | Coolermaster Master Mouse MM710 |

| Keyboard | Steelseries 6gv2 |

| Software | Windows 10 |

| Benchmark Scores | Higher than the Light of Revanchist |

Completely normal for this small chip - I suspect the small die will, in fact, produce significant heat within milliseconds, especially in an overclocked state.

Coming from an i7 920, to a 950 D0, and now to this is almost like night and day. The difference in gaming smoothness and performance is across the board, in all aspects, including but not limited to microstutters (is that even a thing anymore with the Skylake/Kaby Lake architecture?). Not with my EVGA 980 Ti SC reference card at +160 on the core with an extra little +36 (or 38 to 42, but no higher, because you don't need to red-line her all the time). Fans at a constant 90% are also fine, as long as you don't get throttling and don't mind the fan noise, or use headphones. The 1515-20's are the absolute lottery cards (80 ASIC score). This Maxwell card doesn't like the voltage being pushed to the limit; it does nothing but give false hope, along with stuttering, unstable core clocks. Also, the top core clock does not necessarily mean the highest FPS, and overclocking the memory does nothing in terms of performance; it could actually hinder your max core clock and produce unnecessary heat.

I go to my cousin's house and watch his 5820K rig with 980 SLI destroy games like BF1, Doom, GTA and Overwatch, but in single-card games like PLAYERUNKNOWN'S BATTLEGROUNDS I find this system way smoother than his 5820K @ 4.5 GHz. Don't get me wrong, his is an incredible system for many years to come, but Kaby Lake certainly is an incredible specimen, and I love the architecture and what it brings for what I want (I'm an ex-pro gamer, haha). Get this: 1.26 volts and I'm at 4.52 GHz. How incredible is that? It goes up to 1.29 V automatically sometimes when it is working hard, but she doesn't get very hot under my Corsair H100i and Antec Twelve Hundred. Delidding? Why would I even need to do that? Sure, I can auto-tune this baby on my Maximus Formula and it will hit 5 GHz at around 1.3 volts, but that's just a novelty and a number at this point, in my opinion, and unnecessary.


This chip is a beast. I wouldn't sweat the random temp spikes; I stressed mine in AIDA64 for hours and it never went above 79°C average, and that was at 4.5 GHz and not even 1.3 volts. This Kaby Lake is a baby, in my honest opinion. I love it, even though I paid a lot for the colourful motherboard, haha, although I plan to try out Optane and some NVMe SSDs.


I want to be that guy who says Intel is milking their consumers, yadda yadda, but I don't see beast quad cores becoming extinct any time soon (within 5 years at least). This thing plows through games, which were hugely bottlenecked by my old Nehalem (I was lowering settings thinking it was the GPU; now I run everything maxed).

I wouldn't worry about the temperature reading, though. Run it through AIDA64 for a couple of hours to make sure your average is 80 or under, preferably. I am sure you could keep your system running at under 90°C, but I wouldn't do it personally.

tl;dr - I might delid it, but it doesn't even hit 60°C in hard gaming. It is an incredible small chip; hats off to the Intel team for this quad-core beast. Any chip will rise in temperature incrementally under hard stress up to a certain point, but I don't see any flaw with Kaby Lake thus far. I love it.


- Joined

- Dec 27, 2012

- Messages

- 226 (0.05/day)

- Location

- Athens,Greece

To be honest I have not read all of the comments but I will speak based on personal experience.

I have tested several motherboards with several 7700Ks, and I found that my GB mobo was overvolting the CPU at default settings (VID would reach close to 1.38) compared to other vendors (+0.15 V).

Of course it might be a BIOS version issue, but it would be cool if we had the full picture (I will have to check more of the comments to see if I missed something).

Personally, I had no problems when proper voltages were applied.


- Joined

- Jun 30, 2008

- Messages

- 337 (0.05/day)

Read this one! Intel tells Core i7-7700K owners to stop overclocking!

http://www.pcgamer.com/intels-tells...m_source=facebook&utm_campaign=buffer-maxpcfb


- Joined

- Mar 27, 2005

- Messages

- 1,009 (0.14/day)

- Location

- South Africa

| Processor | Intel i7-8700k @ stock |

|---|---|

| Motherboard | MSI Z370 Gaming Pro Carbon |

| Cooling | Corsair H115i Pro iirc |

| Memory | 16GB Corsair DDR4-3466 |

| Video Card(s) | Gigabyte GTX 1070 FE |

| Storage | Samsung 960 Evo 500G NVMe |

| Display(s) | 34" ASUS ROG PG348Q + 28" ASUS TUF Gaming VG289 |

| Case | NZXT |

| Power Supply | Corsair 850W |

| Mouse | Logitech G502 |

| Keyboard | CoolerMaster Storm XT Stealth |

| VR HMD | Oculus Quest 2 |

Well, no one here actually KNOWS. We're all just speculating based on some seriously limited data.

Sure, I wasn't trying to say we KNOW, but my last sentence did make it sound that way. I should've phrased it a bit differently.

Right because you obviously missed the (two) recent Atom blowups. Intel have tons of money & influence in place to cover up such (bad PR) pieces & they control quite a few tech sites' narrative even today.

@Bandalo this has happened since the Skylake generation on non-K CPUs, and on overclocked K CPUs with voltage set to AUTO or without an offset. This is not news; it is just an article to cause drama, because TechPowerUp is like that now. You just need to pay attention to their latest articles and reviews to conclude that, where a GPU that draws almost double the power for a 5% increase in performance is rated above 9.0.

If this were a real problem, it wouldn't appear only a year and a half after the release.

There was a time when this website was totally unbiased and my favourite one for reading about hardware. It isn't like that anymore, and it isn't only me saying it. Maybe I'm one of the few that bothers coming here to write it. Just pay attention to other hardware forums and you will see what's happening with TechPowerUp.

Right now GamersNexus and Hardocp are 2 good unbiased websites that put the facts and the scientific graphs in your face, and you draw your own conclusions.

Just for instance

FYI: You can blow Intel-powered broadband modems off the 'net with a 'trivial' packet stream

UPDATE2 Low Bandwidth DoS Attack Can Hammer Virgin Media SuperHub 3

As for this particular issue, there's not much to go by atm but then again no one looked for the missing 0.5GB either until someone blew the lid off Nvidia's lie


- Joined

- Mar 23, 2016

- Messages

- 4,919 (1.47/day)

| Processor | Intel Core i7-13700 PL2 150W |

|---|---|

| Motherboard | MSI Z790 Gaming Plus WiFi |

| Cooling | Cooler Master RGB Tower cooler |

| Memory | Crucial Pro DDR5-5600 32GB Kit OC 6600 |

| Video Card(s) | Gigabyte Radeon RX 9070 GAMING OC 16G |

| Storage | 970 EVO NVMe 500GB, WD850N 2TB |

| Display(s) | Samsung 28” 4K monitor |

| Case | Corsair iCUE 4000D RGB AIRFLOW |

| Audio Device(s) | EVGA NU Audio, Edifier Bookshelf Speakers R1280 |

| Power Supply | TT TOUGHPOWER GF A3 Gold 1050W |

| Mouse | Logitech G502 Hero |

| Keyboard | Logitech G G413 Silver |

| Software | Windows 11 Professional v24H2 |

So Intel is telling everyone that bought a 7700K not to overclock in their statement, and besides that, removing the heatspreader is a no-no. So much for that unlocked multiplier.

- Joined

- Jan 9, 2011

- Messages

- 117 (0.02/day)

- Location

- Itasca IL

| System Name | Widow Maker |

|---|---|

| Processor | Ryzen 2700X |

| Motherboard | Asus Crosshair Hero VII |

| Cooling | AIO |

| Memory | 32GB @ 3200mhz |

| Video Card(s) | Evga 2080 XC gaming |

| Storage | WD 5tb Sata, WD 1tb WD 2.5tb Samsung 250gb SSD |

| Display(s) | 3 27 inch benq monitors 1is 144hz 1440p |

| Case | Corsair 1000D |

| Audio Device(s) | Supreme FX XFI |

| Power Supply | EVGA G2 1000W |

| Mouse | Corsair Glaive RGB aluminium |

| Keyboard | Corsair K95 Plat |

| Software | Windows 10 64bit |

The problem I have with this is that I have a 4790K, and I have never seen it just randomly spike up to 90°C, let alone to any temp higher than the idle temps I get on liquid cooling. Of course a company is going to tell you that you shouldn't overclock your processor and that doing so could void your warranty. That is something we all know going into liquid cooling. Intel's response to the sensor issue was simply stating that a CPU can see a spike of maybe 5-10°C while doing simple tasks. But for the processor to stay at 90+ for as long as it does in the graph, that CPU must be running a benchmark or stress test. It's that simple. And if someone is having issues with temps spiking, why does everyone think it's the processor? Check your motherboard as well.

- Joined

- Oct 2, 2004

- Messages

- 13,882 (1.84/day)

| System Name | Dark Monolith |

|---|---|

| Processor | AMD Ryzen 7 5800X3D |

| Motherboard | ASUS Strix X570-E |

| Cooling | Arctic Cooling Freezer II 240mm + 2x SilentWings 3 120mm |

| Memory | 64 GB G.Skill Ripjaws V Black 3600 MHz |

| Video Card(s) | XFX Radeon RX 9070 XT Mercury OC Magnetic Air |

| Storage | Seagate Firecuda 530 4 TB SSD + Samsung 850 Pro 2 TB SSD + Seagate Barracuda 8 TB HDD |

| Display(s) | ASUS ROG Swift PG27AQDM 240Hz OLED |

| Case | Silverstone Kublai KL-07 |

| Audio Device(s) | Sound Blaster AE-9 MUSES Edition + Altec Lansing MX5021 2.1 Nichicon Gold |

| Power Supply | BeQuiet DarkPower 11 Pro 750W |

| Mouse | Logitech G502 Proteus Spectrum |

| Keyboard | UVI Pride MechaOptical |

| Software | Windows 11 Pro |

I guess this is somewhat the end of the line for Intel, and they'll have to pull another Core microarchitecture out of their sleeve. Just ramping up clocks is reaching its limits.

- Joined

- Jul 16, 2014

- Messages

- 8,252 (2.08/day)

- Location

- SE Michigan

| System Name | Dumbass |

|---|---|

| Processor | AMD Ryzen 7800X3D |

| Motherboard | ASUS TUF gaming B650 |

| Cooling | Artic Liquid Freezer 2 - 420mm |

| Memory | G.Skill Sniper 32gb DDR5 6000 |

| Video Card(s) | GreenTeam 4070 ti super 16gb |

| Storage | Samsung EVO 500gb & 1Tb, 2tb HDD, 500gb WD Black |

| Display(s) | 1x Nixeus NX_EDG27, 2x Dell S2440L (16:9) |

| Case | Phanteks Enthoo Primo w/8 140mm SP Fans |

| Audio Device(s) | onboard (realtek?) - SPKRS:Logitech Z623 200w 2.1 |

| Power Supply | Corsair HX1000i |

| Mouse | Steeseries Esports Wireless |

| Keyboard | Corsair K100 |

| Software | windows 10 H |

| Benchmark Scores | https://i.imgur.com/aoz3vWY.jpg?2 |

I love all the self-proclaimed experts in this thread!

- Joined

- Sep 17, 2014

- Messages

- 23,989 (6.15/day)

- Location

- The Washing Machine

| System Name | Tiny the White Yeti |

|---|---|

| Processor | 7800X3D |

| Motherboard | MSI MAG Mortar b650m wifi |

| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |

| Memory | 32GB Corsair Vengeance 30CL6000 |

| Video Card(s) | ASRock RX7900XT Phantom Gaming |

| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |

| Display(s) | Gigabyte G34QWC (3440x1440) |

| Case | Lian Li A3 mATX White |

| Audio Device(s) | Harman Kardon AVR137 + 2.1 |

| Power Supply | EVGA Supernova G2 750W |

| Mouse | Steelseries Aerox 5 |

| Keyboard | Lenovo Thinkpad Trackpoint II |

| VR HMD | HD 420 - Green Edition ;) |

| Software | W11 IoT Enterprise LTSC |

| Benchmark Scores | Over 9000 |

The graphs look fine... the spikes are not random but adjust to the load from OCCT.

No they aren't fine. That graph looks scary as fuck. CPU usage is steady at 100% while the temps spike. Could be a sensor error, but it still triggers everything related to those temps including Intel's throttling. Essentially if your ambient temps go up, these spikes can easily hit the 100C ceiling.
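For anyone who wants to check their own logs for this pattern, here is a minimal sketch of flagging spikes in a temperature series recorded at steady load, and of estimating how much headroom is left below the 100°C ceiling if ambient rises. The sample values are invented, the median-plus-threshold rule is just one crude heuristic (not what OCCT does), and the one-for-one ambient shift is a simplifying assumption.

```python
from statistics import median

TJ_MAX_C = 100  # throttle ceiling mentioned in the post above


def find_spikes(temps_c, threshold_c=10):
    """Return (index, temp) pairs more than threshold_c above the median."""
    baseline = median(temps_c)
    return [(i, t) for i, t in enumerate(temps_c) if t - baseline > threshold_c]


def headroom_after_ambient_rise(peak_c, ambient_rise_c):
    """Degrees left below TJ_MAX_C, assuming the peak shifts up
    one-for-one with ambient temperature (simplifying assumption)."""
    return TJ_MAX_C - (peak_c + ambient_rise_c)


# Hypothetical samples logged at a steady 100% load.
samples = [72, 73, 72, 91, 73, 72, 93, 72]

print(find_spikes(samples))
print(headroom_after_ambient_rise(max(samples), ambient_rise_c=8))
```

A negative headroom value means the peak would cross the ceiling under that assumed ambient increase.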

If it is NOT a sensor, and I do see others also say this temp spiking also shows a vCore spike, this behaviour will rapidly decrease the lifespan of this CPU. I'd be very interested in seeing the vCore graph of these runs.

FWIW I have never seen this temperature spiking on my Intel CPU, even when running Linpack/AVX, and also using OCCT graphs to monitor it.

Could this be a bad batch?


- Joined

- Dec 31, 2009

- Messages

- 19,396 (3.45/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|

Why can't it be different instruction sets or activities within the stress test? Does it do that with P95? Linpack? Etc.?

All we have is a half-assed article which doesn't really explain a damn thing. Sensationalist without a proper explanation..

- Joined

- Sep 17, 2014

- Messages

- 23,989 (6.15/day)

- Location

- The Washing Machine

| System Name | Tiny the White Yeti |

|---|---|

| Processor | 7800X3D |

| Motherboard | MSI MAG Mortar b650m wifi |

| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |

| Memory | 32GB Corsair Vengeance 30CL6000 |

| Video Card(s) | ASRock RX7900XT Phantom Gaming |

| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |

| Display(s) | Gigabyte G34QWC (3440x1440) |

| Case | Lian Li A3 mATX White |

| Audio Device(s) | Harman Kardon AVR137 + 2.1 |

| Power Supply | EVGA Supernova G2 750W |

| Mouse | Steelseries Aerox 5 |

| Keyboard | Lenovo Thinkpad Trackpoint II |

| VR HMD | HD 420 - Green Edition ;) |

| Software | W11 IoT Enterprise LTSC |

| Benchmark Scores | Over 9000 |

Sure, cherry picking, there you go:

https://www.hardocp.com/article/2017/04/11/amd_ryzen_5_1600_1400_cpu_review/4

That's how you effectively test a CPU. Before you jump in saying "no one plays at such conditions", let me remind you of 144 Hz and 240 Hz gaming, where all the fps are important.

Hate to break it to ya, but there is only one game engine in use right now that benefits from running at 240 Hz, and that is CS GO. Even then, the benefit versus 120/160 fps is extremely minimal, and definitely NOT worth buying a different processor for, unless all you do is play CS GO - and in that case you have other problems to worry about IMO. Also, you may or may not have noticed, but CS:GO is just one of the most played games on Steam. In the whole PC gaming market it's just a tiny sliver of everything.

If you're actually convinced that 240hz gaming has any use whatsoever for any other game, you're completely deluded. 240hz is marketing for the full 100 %.

- Joined

- Feb 20, 2017

- Messages

- 92 (0.03/day)

- Location

- Wild Coast, South Africa

Quite a while actually. Haswell chips ran hot and K-series were able to hit TJMAX 100C on stock cooling under stress testing. I've seen chips run for years near 90C with no issues. IIRC Haswell was actually started as a mobile CPU, which are generally built to withstand constant heat cycles at or near thermal throttle limits. To me that part of it would be a non-issue. The real issue is these temp spikes, when and how they occur and if they're legitimate or a bug/issue with sensors. Surely wouldn't be the first time (looks at RMA'd Core2 E8600 from 8 years ago...).

Still doesn't make the issue any less annoying though for those folks going through this.

I'm pretty damn content with my 4790K atm, it stays cool under air even with a decent OC to 4.8GHz.

Devil's Canyon is a beautiful thing...

- Joined

- Oct 19, 2007

- Messages

- 8,314 (1.29/day)

| Processor | Intel i9 9900K @5GHz w/ Corsair H150i Pro CPU AiO w/Corsair HD120 RBG fan |

|---|---|

| Motherboard | Asus Z390 Maximus XI Code |

| Cooling | 6x120mm Corsair HD120 RBG fans |

| Memory | Corsair Vengeance RGB 2x8GB 3600MHz |

| Video Card(s) | Asus RTX 3080Ti STRIX OC |

| Storage | Samsung 970 EVO Plus 500GB , 970 EVO 1TB, Samsung 850 EVO 1TB SSD, 10TB Synology DS1621+ RAID5 |

| Display(s) | Corsair Xeneon 32" 32UHD144 4K |

| Case | Corsair 570x RBG Tempered Glass |

| Audio Device(s) | Onboard / Corsair Virtuoso XT Wireless RGB |

| Power Supply | Corsair HX850w Platinum Series |

| Mouse | Logitech G604s |

| Keyboard | Corsair K70 Rapidfire |

| Software | Windows 11 x64 Professional |

| Benchmark Scores | Firestrike - 23520 Heaven - 3670 |

Ever since I first bought a 2500K I haven't been able to run Prime95 even at stock, due to temps going ridiculously high. I basically use AIDA64 these days.

MxPhenom 216

ASIC Engineer

- Joined

- Aug 31, 2010

- Messages

- 13,179 (2.45/day)

- Location

- Loveland, CO

| System Name | Main Stack (in progress) |

|---|---|

| Processor | AMD Ryzen 7 9800X3D |

| Motherboard | Asus X870 ROG Strix-A - White |

| Cooling | Air (temporary until 9070xt blocks are available) |

| Memory | G. Skill Royal 2x24GB 6000Mhz C26 |

| Video Card(s) | Powercolor Red Devil Radeon 9070XT 16G |

| Storage | Samsung 9100 Gen5 1TB | Samsung 980 Pro 1TB (Games_1) | Lexar NM790 2TB (Games_2) |

| Display(s) | Asus XG27ACDNG 360Hz QD-OLED | Gigabyte M27Q-P 165Hz 1440P IPS | LG 24" 1440 IPS 1440p |

| Case | HAVN HS420 - White |

| Audio Device(s) | FiiO K7 | Sennheiser HD650 + Beyerdynamic FOX Mic |

| Power Supply | Corsair RM1000x ATX 3.1 |

| Mouse | Razer Viper v3 Pro |

| Keyboard | Corsair K65 Plus 75% Wireless - USB Mode |

| Software | Windows 11 Pro 64-Bit |

And Intel's response to all this was "stop overclocking".

- Joined

- Jun 30, 2008

- Messages

- 337 (0.05/day)

Didn't read one post... are these AVX/FMA instructions doing it? I don't get what the story is from the OCCT graphs and lack of direct links...

@fullinfusion

@newtekie1

@Raevenlord

Just updated the BIOS on my MSI Mortar Z270M and noticed there is a new feature called 'Reduce cpu ratio while running AVX'. There's a list of negative offset values, and the description states it's to keep temperatures low while running programs that use the AVX instruction set. So I set it to -4 and ran the latest version of Prime95, which uses AVX: temps were about ten degrees lower than before, in the 70s, and the CPU ratio was 4 points lower, at a clock frequency of 3800 MHz vs 4200 MHz. Then I ran the older non-AVX version of Prime95 (ver 26.x), and this time temps were a little higher, in the mid 70s, at the higher frequency of 4200 MHz with all cores loaded at 100 percent. So this tells me that the motherboard manufacturer is aware of the high temps. I also noticed lower voltages being used since the update. I'm using a Thermalright muscle-AXP100R heatsink. Not the best heatsink, but I intended this system to be an HTPC/Plex server.
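The ratio arithmetic in the post above can be sketched as follows. This assumes a 100 MHz base clock and a core ratio of 42, both inferred from the 4200 MHz figure and not stated outright, and the function name is only illustrative.

```python
BCLK_MHZ = 100  # assumed base clock; not stated in the post


def effective_clock_mhz(core_ratio, avx_offset=0, bclk_mhz=BCLK_MHZ):
    """Core clock after dropping the ratio by avx_offset steps.

    avx_offset is the number of ratio steps removed while AVX code runs
    (the BIOS lists it as a negative value, e.g. -4 means 4 steps).
    """
    return (core_ratio - avx_offset) * bclk_mhz


print(effective_clock_mhz(42))                 # non-AVX load
print(effective_clock_mhz(42, avx_offset=4))   # AVX load with the -4 setting
```

With those assumptions the two calls give 4200 MHz and 3800 MHz, matching the clocks reported for the non-AVX and AVX runs.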

- Joined

- Mar 6, 2017

- Messages

- 3,385 (1.13/day)

- Location

- North East Ohio, USA

| System Name | My Ryzen 7 7700X Super Computer |

|---|---|

| Processor | AMD Ryzen 7 7700X |

| Motherboard | Gigabyte B650 Aorus Elite AX |

| Cooling | DeepCool AK620 with Arctic Silver 5 |

| Memory | 2x16GB G.Skill Trident Z5 NEO DDR5 EXPO (CL30) |

| Video Card(s) | XFX AMD Radeon RX 7900 GRE |

| Storage | Samsung 980 EVO 1 TB NVMe SSD (System Drive), Samsung 970 EVO 500 GB NVMe SSD (Game Drive) |

| Display(s) | Acer Nitro XV272U (DisplayPort) and Acer Nitro XV270U (DisplayPort) |

| Case | Lian Li LANCOOL II MESH C |

| Audio Device(s) | On-Board Sound / Sony WH-XB910N Bluetooth Headphones |

| Power Supply | MSI A850GF |

| Mouse | Logitech M705 |

| Keyboard | Steelseries |

| Software | Windows 11 Pro 64-bit |

| Benchmark Scores | https://valid.x86.fr/liwjs3 |

What the f**k, what the actual f**k?! Really Intel? That's your response?

Shit man, I was on the fence of whether or not I would go Ryzen and this just made my decision easier. F**k off Intel.
