The Reason Why NVIDIA's GeForce RTX 3080 GPU Uses 19 Gbps GDDR6X Memory and not Faster Variants

- Thread starter AleksandarK

- Joined

- Sep 19, 2017

- Messages

- 203 (0.07/day)

- Location

- Germany

| Processor | 7800X3D |

|---|---|

| Motherboard | Asus Strix X670E-F |

| Cooling | MO-RA3 420 LT \| 4x NF-A20 |

| Memory | F5-6400J3239G16GX2-TZ5NR |

| Video Card(s) | Asus 4090 TUF |

| Storage | 1TB NM790 \| 2TB SN850X \| 1TB SN750 |

| Display(s) | AW3423DW \| S2721DGFA |

| Case | Hyte Y70 |

| Audio Device(s) | FiiO K11 \| DT1990 Pro \| Rode NT-USB |

| Power Supply | FSP Hydro Ti Pro 1000W |

| Mouse | G502 X Plus \| GPX Superlight 2 |

| Keyboard | Keychron Q6 Max |

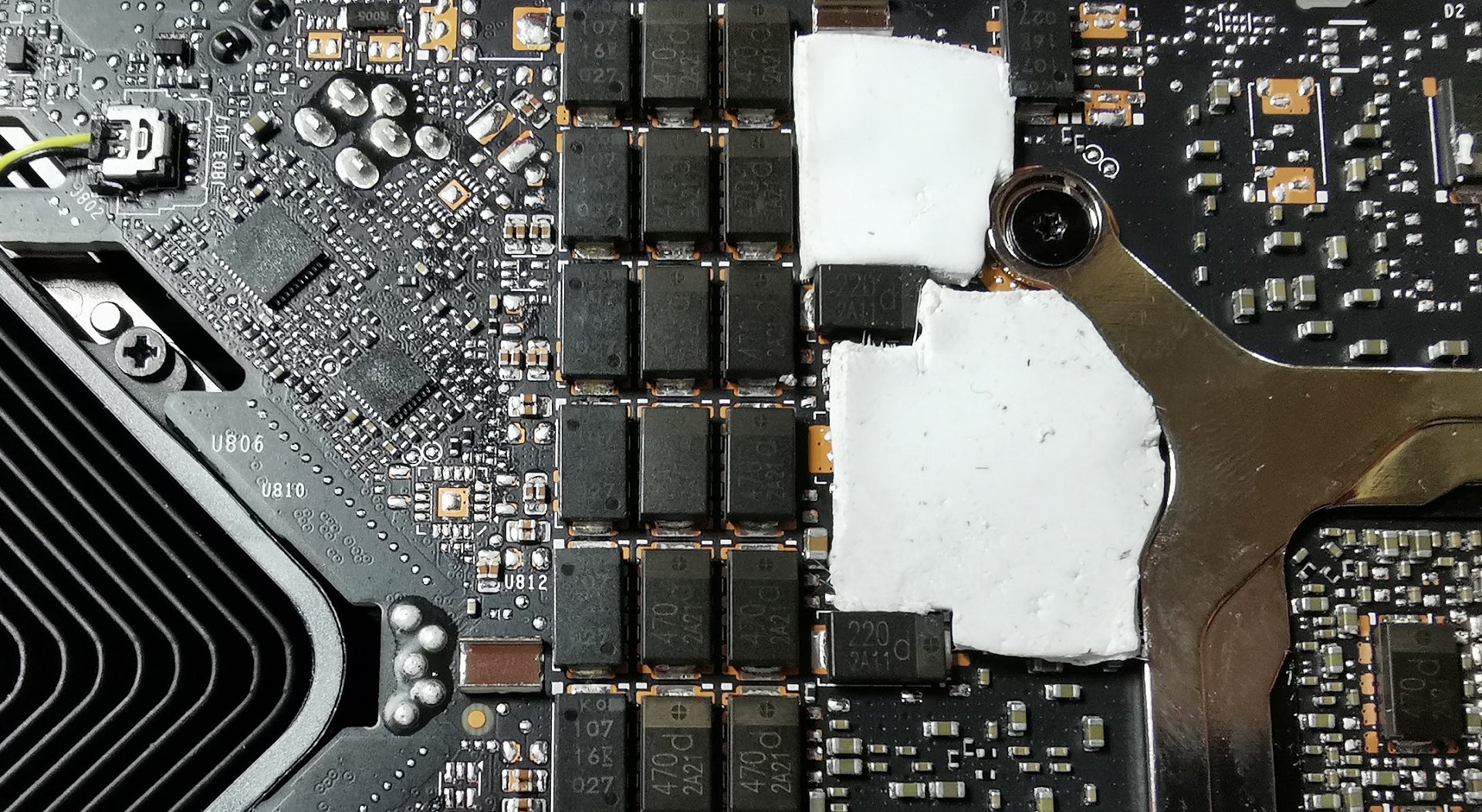

It was measured externally, which means the chips run even hotter in reality now that I think about it.

Externally and internally. Igor somehow has access to Nvidia engineering software which can display G6X temperatures.

Simple pad mod for the GeForce RTX 3080 Founders Edition lowers the GDDR6X temperature by 8 degrees | igor´sLAB

I had recently already measured it in the article “GDDR6X at the limit? Over 100 degrees measured inside of the chip with the GeForce RTX 3080 FE!” and noted, that in extreme cases temperatures of…

- Joined

- Apr 16, 2013

- Messages

- 584 (0.13/day)

- Location

- Bulgaria

| System Name | Black Knight | White Queen |

|---|---|---|

| Processor | Intel Core i9-10940X (28 cores) | Intel Core i7-5775C (8 cores) |

| Motherboard | ASUS ROG Rampage VI Extreme Encore X299G | ASUS Sabertooth Z97 Mark S (White) |

| Cooling | Noctua NH-D15 chromax.black | Xigmatek Dark Knight SD-1283 Night Hawk (White) |

| Memory | G.SKILL Trident Z RGB 4x8GB DDR4 3600MHz CL16 | Corsair Vengeance LP 4x4GB DDR3L 1600MHz CL9 (White) |

| Video Card(s) | ASUS ROG Strix GeForce RTX 4090 OC | KFA2/Galax GeForce GTX 1080 Ti Hall of Fame Edition |

| Storage | Samsung 990 Pro 2TB, 980 Pro 1TB, 850 Pro 256GB, 840 Pro 256GB, WD 10TB+ (incl. VelociRaptors) |

| Display(s) | Dell Alienware AW2721D 240Hz| LG OLED evo C4 48" 144Hz |

| Case | Corsair 7000D AIRFLOW (Black) | NZXT ??? w/ ASUS DRW-24B1ST |

| Audio Device(s) | ASUS Xonar Essence STX | Realtek ALC1150 |

| Power Supply | Enermax Revolution 1250W 85+ | Super Flower Leadex Gold 650W (White) |

| Mouse | Razer Basilisk Ultimate, Razer Naga Trinity | Razer Mamba 16000 |

| Keyboard | Razer Blackwidow Chroma V2 (Orange switch) | Razer Ornata Chroma |

| Software | Windows 10 Pro 64bit |

Watch, the 3080 Ti/Super will get announced after AMD's keynote, and it will be equipped with those faster chips.

- Joined

- Jul 10, 2015

- Messages

- 755 (0.21/day)

- Location

- Sokovia

| System Name | Alienation from family |

|---|---|

| Processor | i7 7700k |

| Motherboard | Hero VIII |

| Cooling | Macho revB |

| Memory | 16gb Hyperx |

| Video Card(s) | Asus 1080ti Strix OC |

| Storage | 960evo 500gb |

| Display(s) | AOC 4k |

| Case | Define R2 XL |

| Power Supply | Be f*ing Quiet 600W M Gold |

| Mouse | NoName |

| Keyboard | NoNameless HP |

| Software | You have nothing on me |

| Benchmark Scores | Personal record 100m sprint: 60m |

At 320W+ TDP I'm a bit surprised that nvidia hasn't released a water cooled version of the FE. I believe AMD did that for Vega (or was it Fury?).

The Asus TUF is a good solution to that; the thermal readings are the proof.

- Joined

- Feb 18, 2005

- Messages

- 6,396 (0.86/day)

- Location

- Ikenai borderline!

| System Name | Firelance. |

|---|---|

| Processor | Threadripper 3960X |

| Motherboard | ROG Strix TRX40-E Gaming |

| Cooling | IceGem 360 + 6x Arctic Cooling P12 |

| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |

| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |

| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |

| Display(s) | Dell S3221QS(A) (32" 38x21 60Hz) + 2x AOC Q32E2N (32" 25x14 75Hz) |

| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |

| Power Supply | Fractal Design Ion+ 2 Platinum 760W |

| Mouse | Logitech G604 |

| Keyboard | Razer Pro Type Ultra |

| Software | Windows 10 Professional x64 |

Once again people are making a mountain out of what may not even be a molehill.

Firstly, nobody knows what safe temperatures are for GDDR6X, since that information isn't publicly available. 110 °C is the maximum temp for GDDR6 non-X, for all we know G6X could be rated to 125 °C.

Secondly, even if G6X is only rated to 110 °C, the modules have thermal throttling built in, so they shouldn't be damaged.

Thirdly, Igor himself states:

But even such a high value is no reason for hasty panic when you understand the interrelationships of all temperatures.

Finally, if you really have a problem with this, do what everyone sane does: buy an AIB version with a proper cooler.

- Joined

- Apr 23, 2020

- Messages

- 12 (0.01/day)

They could go with 21Gbps if they went with 7nm TSMC. The chip would have had lower TDP, consequently allowing less robust VR and cooler PCB around the VR, allowing the use of faster memory. I guess that is going to happen with 3080 Super.
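For a sense of scale, the difference between 19 Gbps and 21 Gbps memory can be worked out from the per-pin data rate and the bus width. The sketch below is a rough illustration only; it assumes the RTX 3080's 320-bit memory bus, a figure that is not stated anywhere in this thread, and the function name is made up for the example.

```python
def bandwidth_gb_per_s(per_pin_gbps: float, bus_width_bits: int) -> float:
    """Peak theoretical bandwidth in GB/s.

    Per-pin rate (Gbit/s) times bus width (bits), divided by 8 bits per byte.
    """
    return per_pin_gbps * bus_width_bits / 8


# Assumption: 320-bit bus for the RTX 3080 (not given in the thread).
BUS_WIDTH_BITS = 320

shipped = bandwidth_gb_per_s(19, BUS_WIDTH_BITS)  # memory speed the card ships with
faster = bandwidth_gb_per_s(21, BUS_WIDTH_BITS)   # the faster variant under discussion
uplift_pct = (faster / shipped - 1) * 100

print(f"19 Gbps: {shipped:.0f} GB/s")
print(f"21 Gbps: {faster:.0f} GB/s")
print(f"Uplift:  {uplift_pct:.1f} %")
```

Under that assumption the faster chips would add roughly a tenth more peak bandwidth, which is the gain being weighed against the extra heat discussed here.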

- Joined

- May 15, 2020

- Messages

- 697 (0.38/day)

- Location

- France

| System Name | Home |

|---|---|

| Processor | Ryzen 3600X |

| Motherboard | MSI Tomahawk 450 MAX |

| Cooling | Noctua NH-U14S |

| Memory | 16GB Crucial Ballistix 3600 MHz DDR4 CAS 16 |

| Video Card(s) | MSI RX 5700XT EVOKE OC |

| Storage | Samsung 970 PRO 512 GB |

| Display(s) | ASUS VA326HR + MSI Optix G24C4 |

| Case | MSI - MAG Forge 100M |

| Power Supply | Aerocool Lux RGB M 650W |

They could go with 21Gbps if they went with 7nm TSMC. The chip would have had lower TDP, consequently allowing less robust VR and cooler PCB around the VR, allowing the use of faster memory. I guess that is going to happen with 3080 Super.

It doesn't work like this, if you change the node you must do a complete redesign of the die. The Supers will be on Samsung, too.

- Joined

- Jul 16, 2014

- Messages

- 8,253 (2.08/day)

- Location

- SE Michigan

| System Name | Dumbass |

|---|---|

| Processor | AMD Ryzen 7800X3D |

| Motherboard | ASUS TUF gaming B650 |

| Cooling | Artic Liquid Freezer 2 - 420mm |

| Memory | G.Skill Sniper 32gb DDR5 6000 |

| Video Card(s) | GreenTeam 4070 ti super 16gb |

| Storage | Samsung EVO 500gb & 1Tb, 2tb HDD, 500gb WD Black |

| Display(s) | 1x Nixeus NX_EDG27, 2x Dell S2440L (16:9) |

| Case | Phanteks Enthoo Primo w/8 140mm SP Fans |

| Audio Device(s) | onboard (realtek?) - SPKRS:Logitech Z623 200w 2.1 |

| Power Supply | Corsair HX1000i |

| Mouse | Steeseries Esports Wireless |

| Keyboard | Corsair K100 |

| Software | windows 10 H |

| Benchmark Scores | https://i.imgur.com/aoz3vWY.jpg?2 |

do you know there's a BOM budget..

Big OLD Mammaries?

On topic/

When cards get designed right side up heat will actually travel away from the chips naturally.

- Joined

- Sep 17, 2014

- Messages

- 24,004 (6.14/day)

- Location

- The Washing Machine

| System Name | Tiny the White Yeti |

|---|---|

| Processor | 7800X3D |

| Motherboard | MSI MAG Mortar b650m wifi |

| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |

| Memory | 32GB Corsair Vengeance 30CL6000 |

| Video Card(s) | ASRock RX7900XT Phantom Gaming |

| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |

| Display(s) | Gigabyte G34QWC (3440x1440) |

| Case | Lian Li A3 mATX White |

| Audio Device(s) | Harman Kardon AVR137 + 2.1 |

| Power Supply | EVGA Supernova G2 750W |

| Mouse | Steelseries Aerox 5 |

| Keyboard | Lenovo Thinkpad Trackpoint II |

| VR HMD | HD 420 - Green Edition ;) |

| Software | W11 IoT Enterprise LTSC |

| Benchmark Scores | Over 9000 |

Once again people are making a mountain out of what may not even be a molehill.

Firstly, nobody knows what safe temperatures are for GDDR6X, since that information isn't publicly available. 110 °C is the maximum temp for GDDR6 non-X, for all we know G6X could be rated to 125 °C.

Secondly, even if G6X is only rated to 110 °C, the modules have thermal throttling built in, so they shouldn't be damaged.

Thirdly, Igor himself states:

Finally, if you really have a problem with this, do what everyone sane does: buy an AIB version with a proper cooler.

No mountains in sight, but I did nearly break my ankle a few times now with all those molehills on my path. Definitely not a problem free gen, this, and hot memory on an FE is a new thing now. So the core doesn't throttle anymore, yay, now the memory does.

- Joined

- Dec 31, 2009

- Messages

- 19,399 (3.45/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |

|---|---|

When cards get designed right side up heat will actually travel away from the chips naturally.

Perhaps... but that requires a complete retooling of PCIe spacing. The space is available below the slot, not above it. At most you have room for a 1.5 slot card above the top PCIe slot as it stands.

- Joined

- Jul 16, 2014

- Messages

- 8,253 (2.08/day)

- Location

- SE Michigan

| System Name | Dumbass |

|---|---|

| Processor | AMD Ryzen 7800X3D |

| Motherboard | ASUS TUF gaming B650 |

| Cooling | Artic Liquid Freezer 2 - 420mm |

| Memory | G.Skill Sniper 32gb DDR5 6000 |

| Video Card(s) | GreenTeam 4070 ti super 16gb |

| Storage | Samsung EVO 500gb & 1Tb, 2tb HDD, 500gb WD Black |

| Display(s) | 1x Nixeus NX_EDG27, 2x Dell S2440L (16:9) |

| Case | Phanteks Enthoo Primo w/8 140mm SP Fans |

| Audio Device(s) | onboard (realtek?) - SPKRS:Logitech Z623 200w 2.1 |

| Power Supply | Corsair HX1000i |

| Mouse | Steeseries Esports Wireless |

| Keyboard | Corsair K100 |

| Software | windows 10 H |

| Benchmark Scores | https://i.imgur.com/aoz3vWY.jpg?2 |

Perhaps... but that requires a complete retooling of PCIe spacing. The space is available below the slot, not above it. At most you have room for a 1.5 slot card above the top PCIe slot as it stands.

yea, yea, likely excuses....

- Joined

- Feb 18, 2005

- Messages

- 6,396 (0.86/day)

- Location

- Ikenai borderline!

| System Name | Firelance. |

|---|---|

| Processor | Threadripper 3960X |

| Motherboard | ROG Strix TRX40-E Gaming |

| Cooling | IceGem 360 + 6x Arctic Cooling P12 |

| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |

| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |

| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |

| Display(s) | Dell S3221QS(A) (32" 38x21 60Hz) + 2x AOC Q32E2N (32" 25x14 75Hz) |

| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |

| Power Supply | Fractal Design Ion+ 2 Platinum 760W |

| Mouse | Logitech G604 |

| Keyboard | Razer Pro Type Ultra |

| Software | Windows 10 Professional x64 |

It doesn't work like this, if you change the node you must do a complete redesign of the die. The Supers will be on Samsung, too.

Yup, NVIDIA has really split Ampere this gen - the lower-volume compute chips (GA100) are on TSMC 7nm, the consumer chips are Samsung.

No mountains in sight, but I did nearly break my ankle a few times now with all those molehills on my path. Definitely not a problem free gen, this, and hot memory on an FE is a new thing now. So the core doesn't throttle anymore, yay, now the memory does.

Again, there is no way to know if these temperatures are problematic because we don't yet know what safe G6X operating temperatures are. So making a fuss about said temperatures is premature at best and FUD at worst.

Should evidence emerge showing that these temps are a problem, I will join in rightly criticising NVIDIA for putting form over function. But not before. There's far too much fanboyism and idiot brigades on these forums, I reject such nonsense wholeheartedly.

- Joined

- Sep 17, 2014

- Messages

- 24,004 (6.14/day)

- Location

- The Washing Machine

| System Name | Tiny the White Yeti |

|---|---|

| Processor | 7800X3D |

| Motherboard | MSI MAG Mortar b650m wifi |

| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |

| Memory | 32GB Corsair Vengeance 30CL6000 |

| Video Card(s) | ASRock RX7900XT Phantom Gaming |

| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |

| Display(s) | Gigabyte G34QWC (3440x1440) |

| Case | Lian Li A3 mATX White |

| Audio Device(s) | Harman Kardon AVR137 + 2.1 |

| Power Supply | EVGA Supernova G2 750W |

| Mouse | Steelseries Aerox 5 |

| Keyboard | Lenovo Thinkpad Trackpoint II |

| VR HMD | HD 420 - Green Edition ;) |

| Software | W11 IoT Enterprise LTSC |

| Benchmark Scores | Over 9000 |

Again, there is no way to know if these temperatures are problematic because we don't yet know what safe G6X operating temperatures are. So making a fuss about said temperatures is premature at best and FUD at worst.

Should evidence emerge showing that these temps are a problem, I will join in rightly criticising NVIDIA for putting form over function. But not before.

Mhm in the same way, Intel's current CPU operating temps are also not problematic, but they still urge them to limit all sorts of stuff, come up with 2810 ways to boost, and throttle like nobody's business. Come on, smoke > fire, it's not hard. Even if they spec them for 120 C it's a horrible temp figure to look at. There are lots of parts that will suffer around this temperature and those boards are cramped as hell. And let's not forget that even if they spec them for a very high 125C, you're still looking at major degradation risk for anything over 100C.

Why do you think these specs aren't public? Coincidence? Materials don't magically suddenly take more heat. They're just stretching up the limits of what's safe and what's not. As long as it makes the warranty period, right?

Time to put two and two together.

- Joined

- Feb 18, 2005

- Messages

- 6,396 (0.86/day)

- Location

- Ikenai borderline!

| System Name | Firelance. |

|---|---|

| Processor | Threadripper 3960X |

| Motherboard | ROG Strix TRX40-E Gaming |

| Cooling | IceGem 360 + 6x Arctic Cooling P12 |

| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |

| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |

| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |

| Display(s) | Dell S3221QS(A) (32" 38x21 60Hz) + 2x AOC Q32E2N (32" 25x14 75Hz) |

| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |

| Power Supply | Fractal Design Ion+ 2 Platinum 760W |

| Mouse | Logitech G604 |

| Keyboard | Razer Pro Type Ultra |

| Software | Windows 10 Professional x64 |

Mhm in the same way, Intel's current CPU operating temps are also not problematic, but they still urge them to limit all sorts of stuff, come up with 2810 ways to boost, and throttle like nobody's business. Come on, smoke > fire, it's not hard. Even if they spec them for 120 C it's a horrible temp figure to look at. There are lots of parts that will suffer around this temperature and those boards are cramped as hell. And let's not forget that even if they spec them for a very high 125C, you're still looking at major degradation risk for anything over 100C.

Why do you think these specs aren't public? Coincidence? Materials don't magically suddenly take more heat. They're just stretching up the limits of what's safe and what's not. As long as it makes the warranty period, right?

Time to put two and two together.

Now you are getting into conspiracy theory land, which is even worse than FUD. Please, use your brain to explain to me how it benefits NVIDIA to tarnish their reputation by purposefully shipping defective products that they know will get them into trouble down the road.

- Joined

- Aug 5, 2020

- Messages

- 201 (0.11/day)

| System Name | BUBSTER |

|---|---|

| Processor | I7 13700K (6.1 GHZ XTU OC) |

| Motherboard | Z690 Gigabyte Aorus Elite Pro |

| Cooling | Arctic Freezer II 360 RGB |

| Memory | 32GB G.Skill Trident Z RGB DDR4 4800MHz 2x16GB |

| Video Card(s) | Asus GeForce RTX 3070 Super Dual OC |

| Storage | Kingston KC 3000 PCIE4 1Tb + 2 Kingston KC 3000 1TB PCIE4 RAID 0 + 4 TB Crucial gen 4 +12 TB HDD |

| Display(s) | Sony Bravia A85 j OLED |

| Case | Corsair Carbide Air 540 |

| Audio Device(s) | Asus Xonar Essence STX II |

| Power Supply | Corsair AX 850 Titanium |

| Mouse | Corsair Gaming M65 Pro RGB + Razr Taipan |

| Keyboard | Asus ROG Strix Flare Cherry MX Red + Corsair Gaming K65 lux RGB |

| Software | Windows 11 Pro x64 |

Engineering Compromises...still fast enough

- Joined

- Jan 8, 2017

- Messages

- 9,831 (3.21/day)

| System Name | Good enough |

|---|---|

| Processor | AMD Ryzen R9 7900 - Alphacool Eisblock XPX Aurora Edge |

| Motherboard | ASRock B650 Pro RS |

| Cooling | 2x 360mm NexXxoS ST30 X-Flow, 1x 360mm NexXxoS ST30, 1x 240mm NexXxoS ST30 |

| Memory | 32GB - FURY Beast RGB 5600 Mhz |

| Video Card(s) | Sapphire RX 7900 XT - Alphacool Eisblock Aurora |

| Storage | 1x Kingston KC3000 1TB 1x Kingston A2000 1TB, 1x Samsung 850 EVO 250GB , 1x Samsung 860 EVO 500GB |

| Display(s) | LG UltraGear 32GN650-B + 4K Samsung TV |

| Case | Phanteks NV7 |

| Power Supply | GPS-750C |

explain to me how it benefits NVIDIA to tarnish their reputation by purposefully shipping defective products

No one said anything is defective but it might be bordering on becoming defective.

- Joined

- Feb 18, 2005

- Messages

- 6,396 (0.86/day)

- Location

- Ikenai borderline!

| System Name | Firelance. |

|---|---|

| Processor | Threadripper 3960X |

| Motherboard | ROG Strix TRX40-E Gaming |

| Cooling | IceGem 360 + 6x Arctic Cooling P12 |

| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |

| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |

| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |

| Display(s) | Dell S3221QS(A) (32" 38x21 60Hz) + 2x AOC Q32E2N (32" 25x14 75Hz) |

| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |

| Power Supply | Fractal Design Ion+ 2 Platinum 760W |

| Mouse | Logitech G604 |

| Keyboard | Razer Pro Type Ultra |

| Software | Windows 10 Professional x64 |

I really don't understand why they crammed all of that stuff on such a small PCB. It's not like the PCB prices skyrocketed or something, and they needed to cut expenses. It just seems stupid.

Form over function. NVIDIA's FE designs are sadly copying the iPhone trend.

No one said anything is defective but it might be bordering on becoming defective.

Do you waste time worrying that your phone or monitor or car or toaster might become defective? If not, why is the RTX 3080 FE an exception?

- Joined

- Jan 8, 2017

- Messages

- 9,831 (3.21/day)

| System Name | Good enough |

|---|---|

| Processor | AMD Ryzen R9 7900 - Alphacool Eisblock XPX Aurora Edge |

| Motherboard | ASRock B650 Pro RS |

| Cooling | 2x 360mm NexXxoS ST30 X-Flow, 1x 360mm NexXxoS ST30, 1x 240mm NexXxoS ST30 |

| Memory | 32GB - FURY Beast RGB 5600 Mhz |

| Video Card(s) | Sapphire RX 7900 XT - Alphacool Eisblock Aurora |

| Storage | 1x Kingston KC3000 1TB 1x Kingston A2000 1TB, 1x Samsung 850 EVO 250GB , 1x Samsung 860 EVO 500GB |

| Display(s) | LG UltraGear 32GN650-B + 4K Samsung TV |

| Case | Phanteks NV7 |

| Power Supply | GPS-750C |

Do you waste time worrying that your phone or monitor or car or toaster might become defective?

I do if there is a known issue; obviously I can only worry about things that I know of.

- Joined

- Oct 22, 2014

- Messages

- 14,636 (3.78/day)

- Location

- Sunshine Coast Australia

| System Name | H7 Flow 2024 |

|---|---|

| Processor | AMD 5800X3D |

| Motherboard | Asus X570 Tough Gaming |

| Cooling | Custom liquid |

| Memory | 32 GB DDR4 |

| Video Card(s) | Intel ARC A750 |

| Storage | Crucial P5 Plus 2TB. |

| Display(s) | AOC 24" Freesync 1m.s. 75Hz |

| Mouse | Lenovo |

| Keyboard | Eweadn Mechanical |

| Software | W11 Pro 64 bit |

Again, there is no way to know if these temperatures are problematic because we don't yet know what safe G6X operating temperatures are. So making a fuss about said temperatures is premature at best and FUD at worst.

Should evidence emerge showing that these temps are a problem, I will join in rightly criticising NVIDIA for putting form over function. But not before. There's far too much fanboyism and idiot brigades on these forums, I reject such nonsense wholeheartedly.

Operating temperature range is 0 - 95C.

Absolute maximum ratings, storage temperature: -55C min., +125C max.

Both can be found in the data sheet under this link:

MT61K256M32JE-19

- Joined

- Aug 27, 2011

- Messages

- 296 (0.06/day)

| System Name | Gaming PC/ EDU PC/ HFS PC |

|---|---|

| Processor | Intel i9-9900KF/ Dual Ryzen 7 2700X |

| Motherboard | Asrock Z390 Taichi Ultimate/ Dual Asrock X370 Proffesional Gaming |

| Cooling | Noctua NH-C14S/ Arctic Xtreme Freezer/ Ryzen Wraith Prysm RGB |

| Memory | 64GB Corsair Vengeance PRO RGB 3200/ 32GB Corsair Dominator 3000/ 16GB Corsair Vengeance Pro 3200 |

| Video Card(s) | EVGA RTX 3080 FTW3 Ultra / MSI RTX 2080Ti Ventus / EVGA GTX 1060 SC Gaming |

| Storage | Dual 970 EVO Plus 1TB + 6Tb 860 EVO/ 960 EVO 500GB + 18Tb R0/ 840EVO 250Gb + 16Tb R0 |

| Display(s) | Samsung 32" U32R590 Curved 3480x2160 / Samsung 32" LC32H711 Curved 2560x1440 Freesync |

| Case | Cooler Master Stacker 830 NV Edition/ Dual Cooler Master 690 Advance II |

| Audio Device(s) | Creative X-Fi Surround 5.1 SBX/ Creative X-Fi Titanium Pci-E/ On-board Realtek |

| Power Supply | Triple Corsair Platinum HX850i |

| Mouse | Logitech G7 WL / Logitech G903 Lightspeed / MS BT 8000 |

| Keyboard | Dual Logitech G19s |

| Software | Win10 Pro |

Uhh, I can see the same problem with overheated Micron chips again, like what already happened in some 20xx series cards...

- Joined

- Sep 17, 2014

- Messages

- 24,004 (6.14/day)

- Location

- The Washing Machine

| System Name | Tiny the White Yeti |

|---|---|

| Processor | 7800X3D |

| Motherboard | MSI MAG Mortar b650m wifi |

| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |

| Memory | 32GB Corsair Vengeance 30CL6000 |

| Video Card(s) | ASRock RX7900XT Phantom Gaming |

| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |

| Display(s) | Gigabyte G34QWC (3440x1440) |

| Case | Lian Li A3 mATX White |

| Audio Device(s) | Harman Kardon AVR137 + 2.1 |

| Power Supply | EVGA Supernova G2 750W |

| Mouse | Steelseries Aerox 5 |

| Keyboard | Lenovo Thinkpad Trackpoint II |

| VR HMD | HD 420 - Green Edition ;) |

| Software | W11 IoT Enterprise LTSC |

| Benchmark Scores | Over 9000 |

Now you are getting into conspiracy theory land, which is even worse than FUD. Please, use your brain to explain to me how it benefits NVIDIA to tarnish their reputation by purposefully shipping defective products that they know will get them into trouble down the road.

Planned obsolescence is a conspiracy theory now? I think you need to get real.

Nvidia's cards generally aged just fine.

The hot ones however really didn't. Also on the AMD side. I don't see why this would be an exception to that rule. But you are welcome to provide examples of VRAM running close to 100C doing just fine after 4-5 years. I do have some hands full of examples showing the opposite.

And ehh tarnish reputation? The card made it past warranty right?

- Joined

- Jun 2, 2017

- Messages

- 284 (0.10/day)

- Location

- Iran

| Processor | Intel Core i5-8600K @4.9GHz |

|---|---|

| Motherboard | MSI Z370 Gaming Pro Carbon |

| Cooling | Cooler Master MasterLiquid ML240L RGB |

| Memory | XPG 8GBx2 - 3200MHz CL16 |

| Video Card(s) | Asus Strix GTX 1080 OC Edition 8G 11Gbps |

| Storage | 2x Samsung 850 EVO 1TB |

| Display(s) | BenQ PD3200U |

| Case | Thermaltake View 71 Tempered Glass RGB Edition |

| Power Supply | EVGA 650 P2 |

Planned obsolescence is a conspiracy theory now? I think you need to get real.

Nvidia's cards generally aged just fine.

The hot ones however really didn't. Also on the AMD side. I don't see why this would be an exception to that rule. But you are welcome to provide examples of VRAM running close to 100C doing just fine after 4-5 years. I do have some hands full of examples showing the opposite.

And ehh tarnish reputation? The card made it past warranty right?

If you care about your card aging a million years just get a decent AIB card and everything will be fine. It's just one card out of tens available.

I'm definitely disappointed that Nvidia has designed such a good looking card that cools the GPU itself just fine but somehow fails to keep the memory chips cool enough, I'd avoid the FE and look somewhere else.

- Joined

- Sep 17, 2014

- Messages

- 24,004 (6.14/day)

- Location

- The Washing Machine

| System Name | Tiny the White Yeti |

|---|---|

| Processor | 7800X3D |

| Motherboard | MSI MAG Mortar b650m wifi |

| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |

| Memory | 32GB Corsair Vengeance 30CL6000 |

| Video Card(s) | ASRock RX7900XT Phantom Gaming |

| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |

| Display(s) | Gigabyte G34QWC (3440x1440) |

| Case | Lian Li A3 mATX White |

| Audio Device(s) | Harman Kardon AVR137 + 2.1 |

| Power Supply | EVGA Supernova G2 750W |

| Mouse | Steelseries Aerox 5 |

| Keyboard | Lenovo Thinkpad Trackpoint II |

| VR HMD | HD 420 - Green Edition ;) |

| Software | W11 IoT Enterprise LTSC |

| Benchmark Scores | Over 9000 |

If you care about your card aging a million years just get a decent AIB card and everything will be fine. It's just one card out of tens available.

Obviously but that is not what this topic is about, is it... Nobody ever said 'buy an FE'. The article here is specifically talking about temps on the FE.

And we both know expecting 5-6 years of life out of a GPU is not a strange idea at all. Obviously it won't run everything beautifully, but it certainly should not be defective before then. Broken or crappy fan over time? Sure. Chip and memory issues? Bad design.

Now, when it comes to those AIB cards... the limitations of the FE do translate to those as well, since they're also 19 Gbps cards because 'the FE has it'.

![GTC 2018 Live Keynote | Page 2 | [H]ard|Forum](/forums/proxy.php?image=https%3A%2F%2Fhardforum.com%2Fdata%2Fattachment-files%2F2018%2F03%2F113065_2018-03-27.jpg&hash=57594254f3018a2e1e47329c7594470c)