NVIDIA Announces OptiX 5.0 SDK - AI-Enhanced Ray Tracing

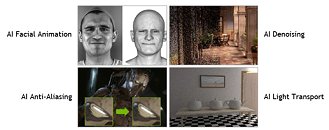

At SIGGRAPH 2017, NVIDIA introduced the latest version of OptiX, its AI-based, GPU-enabled ray-tracing API. The company has been at the forefront of GPU-powered AI endeavors in a number of areas, including facial animation, anti-aliasing, denoising, and light transport. OptiX 5.0 brings a renewed focus on AI-based denoising.

AI training is still a brute-force affair with finesse applied at the end: NVIDIA took tens of thousands of image pairs, each consisting of a render with one sample per pixel and a companion image of the same render with 4,000 rays per pixel, and used them to train the AI to predict what a denoised image looks like. Going by the numbers NVIDIA used for its training, this means that, in theory, users deploying OptiX 5.0 only need to render one sample per pixel of a given image instead of the 4,000 rays per pixel that its final presentation would otherwise require. Based on what it has learned, the AI then fills in the blanks to finalize the image, saving the need to render all that extra data.

NVIDIA quotes a 157x improvement in render time using a DGX Station with OptiX 5.0 deployed against the same render on a CPU-based platform (2 x E5-2699 v4 @ 2.20GHz). The OptiX 5.0 release also includes provisions for GPU-accelerated motion blur, which should do away with the need to render a frame multiple times and then apply a blur filter through a collage of the different frames. NVIDIA said OptiX 5.0 will be available in November. Check the press release after the break.
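For a rough feel of why the training pairs described above are built from one-sample and 4,000-sample renders, here is a minimal Python sketch. It does not use OptiX or NVIDIA's actual training pipeline: the 64-pixel gradient "scene", the uniform noise model, and the helper names `render_pixel`, `render_image`, and `rmse` are all assumptions made up for illustration. It only shows that averaging more samples per pixel brings a Monte Carlo estimate much closer to the true value, which is the gap a denoiser is trained to bridge.

```python
import random

def render_pixel(true_value, samples, rng):
    """Toy Monte Carlo pixel: the average of `samples` noisy estimates."""
    total = 0.0
    for _ in range(samples):
        # Hypothetical noise model: each "ray" returns the true value
        # plus zero-mean uniform noise.
        total += true_value + rng.uniform(-0.5, 0.5)
    return total / samples

def render_image(truth, samples, rng):
    """Render every pixel of the toy scene with the same sample count."""
    return [render_pixel(value, samples, rng) for value in truth]

def rmse(image, truth):
    """Root-mean-square error between a render and the true image."""
    squared = sum((a - b) ** 2 for a, b in zip(image, truth))
    return (squared / len(truth)) ** 0.5

rng = random.Random(0)                      # fixed seed for repeatability
truth = [i / 63 for i in range(64)]         # assumed 64-pixel gradient scene

noisy = render_image(truth, 1, rng)         # network input: 1 sample per pixel
reference = render_image(truth, 4000, rng)  # training target: 4,000 samples
training_pair = (noisy, reference)

noisy_error = rmse(noisy, truth)
reference_error = rmse(reference, truth)
print(f"1 spp RMSE:    {noisy_error:.4f}")
print(f"4000 spp RMSE: {reference_error:.4f}")
```

In this toy setup the error shrinks roughly with the square root of the sample count, so the 4,000-sample image is far cleaner than the one-sample image. A denoiser trained on many such pairs learns to map the cheap, noisy input toward the expensive target.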
