Compal Optimizes AI Workloads with AMD Instinct MI355X at AMD Advancing AI 2025 and International Supercomputing Conference 2025

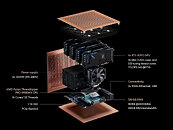

As AI computing accelerates toward higher density and greater energy efficiency, Compal Electronics (Compal; Stock Ticker: 2324.TW), a global leader in IT and computing solutions, unveiled its latest high-performance server platform, the SG720-2A/OG720-2A, at both AMD Advancing AI 2025 in the U.S. and the International Supercomputing Conference (ISC) 2025 in Europe. The platform features AMD Instinct MI355X GPUs and offers both single-phase and two-phase liquid cooling configurations, showcasing Compal's leadership in thermal innovation and system integration. Tailored for next-generation generative AI and large language model (LLM) training, the SG720-2A/OG720-2A delivers exceptional flexibility and scalability for modern data center operations, drawing significant attention across the industry.

With generative AI and LLMs driving increasingly intensive compute demands, enterprises are placing greater emphasis on infrastructure that offers both performance and adaptability. The SG720-2A/OG720-2A emerges as a robust solution, combining high-density GPU integration and flexible liquid cooling options, positioning itself as an ideal platform for next-generation AI training and inference workloads.
