Saturday, November 12th 2011

Sandy Bridge-E Benchmarks Leaked: Disappointing Gaming Performance?

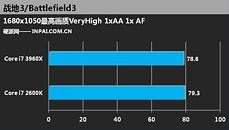

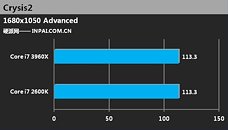

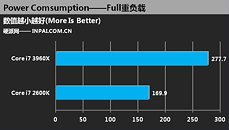

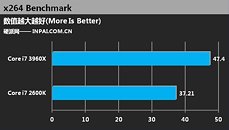

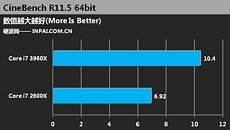

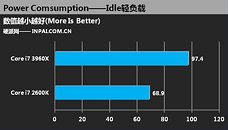

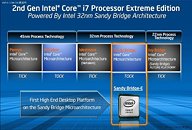

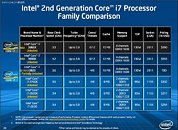

Just a handful of days ahead of Sandy Bridge-E's launch, a Chinese tech website, www.inpai.com.cn (Google translation), has done what Chinese tech websites do best: leak benchmarks and slides, Intel's NDA be damned. They pit the current i7-2600K quad-core CPU against the upcoming i7-3960X hexa-core CPU and compare them in several ways. The take-home message appears to be that gaming performance in BF3 and Crysis 2 is identical, while the i7-3960X uses considerably more power, as one might expect from an extra two cores. The only advantage appears to come in the x264 and Cinebench tests. If these benchmarks prove accurate, then gamers might as well stick with the current-generation Sandy Bridge CPUs, especially as they will drop in price before reaching end of life. While this is all rather disappointing, it's best to take leaked benchmarks like this with a (big) grain of salt and wait for the usual gang of reputable websites to publish their reviews on launch day, November 14th. Softpedia reckons that these results are the real deal, however. There are more benchmarks and pictures after the jump.

Source:

wccftech.com

171 Comments on Sandy Bridge-E Benchmarks Leaked: Disappointing Gaming Performance?

www.hardwarecanucks.com/charts/index.php?pid=70,76&tid=3

Then this;

I would also have to agree with Wile E that gaming performance does not represent a CPU's true potential, and IMO the blame needs to go to the lazy game developers.

What I said already is that the people who buy this CPU will already have a top GPU, if not a multi-card configuration. So they won't get any performance boost from SB-E, even compared with an i3. But that is the story we already know.

The people who buy this CPU will only buy it for bragging rights (having the world's fastest CPU) or for heavily threaded work like Photoshop, encoding, encryption, etc. (like they did with the i7 980X/990X). But one thing is for sure, this thing is not for me. :D

And from a strictly gaming perspective, I'm not disappointed either. Should be able to get four users running at a decent performance on a quad GPU, 32GB RAM, 6-core SB-E box. ;)

These are chips for those who want to set new benchmark records, video editing/encoding, run distributed computing projects, run Virtual Machines... Which these benchmarks don't really show much of.

For gamers, the only realistic criterion is how well it runs multi-GPU setups. Which, again, these benchmarks aren't showing.

The one thing this does show is that per-core performance is as expected. So when the NDA is lifted, we should start seeing more meaningful results. And those who have money to spend for fun, won't care either way. If anyone thinks this is disappointing they really need to reconsider their point of view and vent their frustration at game devs ;)

And it doesn't matter if one CPU achieves 200fps and the other 1200fps (6 times faster) you're measuring performance differences between them. This difference will become plenty obvious as time goes by and games become more demanding, giving the faster one a longer useful life. So for example, when the slower one achieves only a useless 10fps, the faster one will still be achieving 60fps and be highly useable.
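To put numbers on the argument above, here is a minimal sketch of the arithmetic. It assumes the performance ratio between the two CPUs stays constant as games get heavier, which is a simplification, and the function name is made up for illustration.

```python
def projected_fps(slow_later: float, slow_now: float, fast_now: float) -> float:
    """Project the faster CPU's future frame rate.

    Assumption: the fast/slow performance ratio measured today
    stays the same as games become more demanding.
    """
    ratio = fast_now / slow_now  # 1200 / 200 = 6x in the example from the post
    return slow_later * ratio


# Figures taken from the post: 200fps vs 1200fps today,
# and a future game where the slower CPU only manages 10fps.
fast_later = projected_fps(slow_later=10.0, slow_now=200.0, fast_now=1200.0)
print(f"Faster CPU in the future game: {fast_later:.0f} fps")
```

Under that constant-ratio assumption, the faster chip still lands at a playable frame rate when the slower one no longer does.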

Of course, it's also a good idea to supplement these tests with real-world resolutions too, as there can be unexpected results sometimes.

Thanks to John Doe for replying with some excellent answers to this misunderstanding. :toast:

Will do. :)

It isn't, as I've explained above in this post.

Yes, of course, lol. Benchmark a bunch of older games with vsync on and they'll all peg at a solid 60fps.

Um guys....en·thu·si·ast. CHIP. ENTHUSIAST, as in SR-2'ed quad-SLI 580s or tri-fired 6990s in a water-cooled Mountain Mods case...you get the idea. We have more money/credit than common sense and will spend an extra $300 for a 3% increase... we want the best, period. Not price/performance... just the highest high end. Yeah, we know the speed limit is 65 and the Mustang is an adequate sports car, but no...give me that Bugatti. That's the market for this thing. People who run six-screen Eyefinity. Our 3-screened 6870 CrossFired logic doesn't apply.

An 8-core version would cost me a house mortgage.

www.cpu-world.com/news_2011/2011102701_Prices_of_Xeon_E5-2600-series_CPUs.html

I'm waiting for the SB-E reviews tomorrow to decide. If it really doesn't provide a significant gaming boost and/or prices are sky high (they likely will) then I'll just get a 2700K and be done with it.

Note that while Ivy Bridge is an improvement over SB and has those high res integrated graphics, it's not considered an enthusiast platform by Intel.

Gaming is almost always GPU limited anyway, especially when you compare Sandy Bridge to Sandy Bridge-E.

Hey Chinese people, leak something that actually matters.

Sandy Bridge was already an awesome architecture performance wise, 6 core Sandy Bridge-E will be more than awesome :)

I'm not talking gaming wise though, can't really expect much improvement on them unless games start using 6+ threads :laugh:

Again, I don't care how many people here buy chips specifically for gaming. That has absolutely no bearing on the fact that gaming is still a TERRIBLE CPU benchmark. SB-E isn't the only thing I mention this about.

All current chips will allow you to have essentially the same gaming experience, because they are not the main bottleneck. What I want to know is, who actually expected the 2 extra cores of SB-E to make a difference in gaming?

These results are not disappointing at all, they are expected. Games do not take advantage of this much CPU power. Anybody that expected a significant difference obviously hasn't been paying attention to the gaming industry.

My negativity towards BD had nothing to do with gaming. I am disappointed in its per-core IPC.

Westmere vs Bloomfield/Lynnfield.

Unless you are doing something that requires the extra cores there usually isn't much of a benefit.

Why this is a surprise to anyone is beyond me.

Nothing to see here move along folks!

Triangle and setup data is sent from the CPU to the GPU for every single frame, so really, by lowering resolution and increasing framerate, you are not exactly getting the effect reviewers claim. You need to lower the resolution and NOT use a high-powered VGA, unless you are just testing CPU-GPU communication. It is NOT 100% just testing CPU performance, and this way of testing is SERIOUSLY flawed.

Falsely increasing the workload turns a real-world benchmark into a synthetic benchmark. Except this synthetic benchmarking practice has no correlation to real-world workloads, at all.

This is why I don't do CPU reviews. I WILL NOT review CPU performance the way it is currently done by nearly every reviewer out there.

The 3960X, for all intents and purposes, is a slightly slower version of the 2600K if the app uses up to 4C/8T (3.3GHz vs 3.4GHz).
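For a rough sense of how small that gap is, here is a quick sketch using only the two clock speeds quoted above. It assumes performance scales linearly with clock speed and ignores Turbo Boost, cache size and memory channels, so treat it as a back-of-the-envelope figure only.

```python
def clock_deficit_percent(slower_ghz: float, faster_ghz: float) -> float:
    """Percentage by which the slower clock trails the faster one.

    Assumption: per-core throughput scales linearly with clock speed.
    """
    return (faster_ghz - slower_ghz) / faster_ghz * 100.0


# Clock speeds as quoted in the post: 3960X at 3.3GHz, 2600K at 3.4GHz.
deficit = clock_deficit_percent(slower_ghz=3.3, faster_ghz=3.4)
print(f"3960X trails the 2600K by about {deficit:.1f}% on clock speed alone")
```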

Gaming would depend heavily on how much of the load is handled by the CPU. Typically, compute-heavy games (e.g. RTS, especially once the maps start filling up) would likely show a small benefit for the hexcore.

Of the three commonly used methods to test CPU gaming performance, all could be said to be in some way flawed:

Testing a low res and/or 0xAA/0xAF takes the GPU out of the equation but isn't indicative of "real world" use, and not likely a scenario that anyone would encounter in actual gaming.

Testing at common resolution and default game i.q. more often than not presents a GPU-bound result...in which case a Core i3 makes as much sense as BD, i7 or Xeon.

Testing at common res and standard game i.q. with CFX/SLI removes some of the GPU limiting factor...but can also introduce new factors - drivers and PCI-E bandwidth constraints- the latter probably minor, but would be an influence if you were testing quad GPU (say dual HD6990/GTX590) in a heads-up comparison ( X79 PCI-E @ 2 x 16 versus Z68 PCI-E @ 2 x 8)...adding a lane multiplier such as the NF200 would possibly open the system to increased latency also from what I understand.
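The trade-off between the first two methods can be sketched with a toy model in which each frame takes as long as the slower of the CPU and the GPU. That max() model is a common simplification, not how any particular game engine works, and every timing below is a hypothetical number picked for illustration, not a measurement.

```python
def frame_rate(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when the slower component sets the frame time.

    Assumption: CPU and GPU work overlap perfectly, so the frame time
    is simply max(cpu_ms, gpu_ms).
    """
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame CPU cost for two CPUs (milliseconds).
cpus = {"CPU A": 5.0, "CPU B": 4.0}

# Hypothetical per-frame GPU cost at low and at common resolution.
scenarios = {"low res, 0xAA/0xAF": 2.0, "common res, default i.q.": 16.0}

for scenario, gpu_ms in scenarios.items():
    for name, cpu_ms in cpus.items():
        print(f"{scenario:26s} {name}: {frame_rate(cpu_ms, gpu_ms):6.1f} fps")
```

With these made-up numbers the two CPUs differ at low resolution (CPU-bound) and tie at common resolution (GPU-bound), which is the pattern described above.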

From a personal PoV, I would use my system as much for content creation (Handbrake, Photoshop etc.) and productivity (Excel, PowerPoint etc.) as I do gaming. If the performance metric is in favour of all the apps I use, then I would definitely consider the platform. I would like a better understanding of where the launch stepping/revision stands before I'd commit. My C0 stepping for Bloomfield was noticeably inferior to the D0 I upgraded to.

You all forget that there is no Z68 motherboard that will support 4-way SLI!

So for those of you who want to do only 3-way, this processor is a waste.

Remember that this processor can handle 40 PCIe lanes.

It's funny, everyone's all positive praise when Intel releases early benchies, and when AMD does it EVERYONE is critical. Typical human population though, unintelligent.

All I saw is everyone discussing the validity of how the benchmarks are presented, according to their own uses and needs. Nothing here is disappointing, as those that tend to know how hardware works realize that CPUs mean very little in day-to-day tasks, and most of us here game @ 1920x1080, not 1680x1050 or lower.