Monday, November 12th 2012

AMD Introduces the FirePro S10000 Server Graphics Card

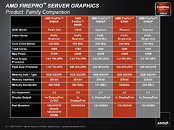

AMD today launched the AMD FirePro S10000, the industry's most powerful server graphics card, designed for high-performance computing (HPC) workloads and graphics intensive applications.

The AMD FirePro S10000 is the first professional-grade card to exceed one teraFLOPS (TFLOPS) of double-precision floating-point performance, helping to ensure optimal efficiency for HPC calculations. It is also the first ultra high-end card that brings an unprecedented 5.91 TFLOPS of peak single-precision and 1.48 TFLOPS of double-precision floating-point calculations. This performance ensures the fastest possible data processing speeds for professionals working with large amounts of information. In addition to HPC, the FirePro S10000 is also ideal for virtual desktop infrastructure (VDI) and workstation graphics deployments.

"The demands placed on servers by compute and graphics-intensive workloads continues to grow exponentially as professionals work with larger data sets to design and engineer new products and services," said David Cummings, senior director and general manager, Professional Graphics, AMD. "The AMD FirePro S10000, equipped with our Graphics Core Next Architecture, enables server graphics to play a dual role in providing both compute and graphics horsepower simultaneously. This is executed without compromising performance for users while helping reduce the total cost of ownership for IT managers."

Equipped with AMD's next-generation Graphics Core Next Architecture, the FirePro S10000 brings high-performance computing and visualization to a variety of disciplines such as finance, oil exploration, aeronautics, automotive design and engineering, geophysics, life sciences, medicine and defense. With dual GPUs at work, professionals can experience high-throughput, low-latency transfers, allowing for quick computation of complex calculations requiring high accuracy.

Responding to IT Manager Needs

With two powerful GPUs in one dual-slot card, the FirePro S10000 enables high GPU density in the data center for VDI and helps increase overall processing performance. This makes it ideal for IT managers considering GPUs to sustain compute and facilitate graphics-intensive workloads. Two on-board GPUs can help IT managers reap significant cost savings, replacing the need to purchase two single ultra-high-end graphics cards, and can help reduce total cost of ownership (TCO) due to lower power and cooling expenses.

Key Features of AMD FirePro S10000 Server Graphics

● Compute Performance: The AMD FirePro S10000 is the most powerful dual-GPU server graphics card ever created, delivering up to 1.3 times the peak single-precision and up to 7.8 times the peak double-precision floating-point performance of the competition's comparable dual-GPU product. It also boasts an unprecedented 1.48 TFLOPS of peak double-precision floating-point performance;

● Increased Performance-Per-Watt: The AMD FirePro S10000 delivers the highest peak double-precision performance-per-watt -- 3.94 gigaFLOPS per watt -- up to 4.7 times more than the competition's comparable dual-GPU product;

● High Memory Bandwidth: Equipped with a 6GB GDDR5 frame buffer and a 384-bit interface, the AMD FirePro S10000 delivers up to 1.5 times the memory bandwidth of the comparable competing dual-GPU solution;

● DirectGMA Support: This feature removes CPU bandwidth and latency bottlenecks, optimizing communication between both GPUs. It also enables P2P data transfers between devices on the bus and the GPU, completely bypassing any need to traverse the host's main memory, utilize the CPU, or incur additional redundant transfers over PCI Express. The result is high-throughput, low-latency transfers, which allow for quick computation of complex calculations requiring high accuracy;

● OpenCL Support: OpenCL has become the compute programming language of choice among developers looking to take full advantage of the combined parallel processing capabilities of the FirePro S10000. This has accelerated computer-aided design (CAD), computer-aided engineering (CAE), and media and entertainment (M&E) software, changing the way professionals work thanks to performance and functionality improvements.
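The peak figures in the feature list can be cross-checked with simple arithmetic. The sketch below is a back-of-envelope illustration only: it uses the three numbers quoted in this release (5.91 TFLOPS single-precision, 1.48 TFLOPS double-precision, 3.94 gigaFLOPS per watt), and the function names are hypothetical. The board power it derives is an inference from those figures, not a specification stated in the release.

```python
# Peak figures as quoted in the press release text.
PEAK_SP_TFLOPS = 5.91        # peak single-precision, TFLOPS
PEAK_DP_TFLOPS = 1.48        # peak double-precision, TFLOPS
DP_GFLOPS_PER_WATT = 3.94    # peak double-precision performance-per-watt


def implied_board_power_watts(peak_tflops: float, gflops_per_watt: float) -> float:
    """Board power implied by a peak rate and a performance-per-watt figure.

    This is an inferred value (assumption), not a published specification.
    """
    return peak_tflops * 1000.0 / gflops_per_watt


def sp_to_dp_ratio(sp_tflops: float, dp_tflops: float) -> float:
    """Ratio of peak single-precision to peak double-precision throughput."""
    return sp_tflops / dp_tflops


if __name__ == "__main__":
    power = implied_board_power_watts(PEAK_DP_TFLOPS, DP_GFLOPS_PER_WATT)
    ratio = sp_to_dp_ratio(PEAK_SP_TFLOPS, PEAK_DP_TFLOPS)
    print(f"Implied board power: {power:.1f} W")
    print(f"SP:DP throughput ratio: {ratio:.2f}")
```

Under these assumptions the quoted figures imply a board power of roughly 375 W and a single-to-double-precision ratio close to 4:1.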

Please visit AMD at SC12, booth #2019, to see the AMD FirePro S10000 power the latest in graphics technology.

69 Comments on AMD Introduces the FirePro S10000 Server Graphics Card

What's one of the differences between PCIe Gen 2.0 and 2.1/3.0? More power flexibility. So yes, if you get more recent parts you get more options. I'm sure you'll see them in the G8 series of that HP server you linked. The lower numerical versions already have updated MBs with Gen 3 slots added. So there is one possibility.

Only ones that can currently take advantage of it are Intel and AMD cards, since they are PCIe gen 3.0 spec. All of Nvidia's K20X & K20 are PCIe gen 2.0 spec.

I see plural and specifications. I'd like to see the information you're referring to for myself, that's all.

Obviously something taken into consideration when these machines were built.

So how about that specification link ? ;)

You have custom cooling, custom power delivery, etc. You can see that if you look at Cray's HPCs...

Refitting in general would be a considerable initial expenditure. Titan, for instance, retained the bulk of the hardware from Jaguar, but the upgrade still took a year (Oct 2011-Nov 2012) and cost $96 million. The principal difference seems to be an upgrading of power delivery and swapping out Fermi 225W TDP boards for K20X (235W); the CPU side of the compute node remains untouched.

And IIRC Jaguar didn't have any GPUs before.

*IIRC, The Intel Xeon + Xeon Phi Stampede also uses Tesla K20X for the same reason

Have you ever heard of people who overclock a math co-processor? Kind of defeats the purpose of using ECC RAM and placing an emphasis on FP64, don't ya think?

Taking your lead?.... :shadedshu

I find it funny you keep on arguing, but anyway, it was in relation to how those parts can't reach the maximum voltage level because of precautions. I know certain models of Quadro and FirePro are for mission-critical use, just as much as Itanium/SPARC etc. are. I do realize that OC can cause ECC to corrupt the data. But anyway, I'm just saying be respectful of the users here, dude.

HPCWire is of the same opinion- that is to say that Nvidia's GK110 has superior efficiency to that of the S10000 and Xeon Phi when judged on their own performance. Moreover, they believe that Beacon (Xeon Phi) and SANAM (S10000) only sit at the top of the Green500 list because of their asymmetrical configuration (very low CPU to GPU ratio)- something I also noted earlier.

(Source: HPCWire podcast link)

225W through a PCI-E slot? Whatever. :roll: (150W is max for a PCI-E slot. Join up and learn something)

Incorrect. K20/K20X are at present limited to PCI-E 2.0 because of the AMD Opteron CPUs they are paired with (which of course are PCIe 2.0 limited). Validation for Xeon E5 (which is PCIe 3.0 capable) means GK110 is a PCIe 3.0 board... in much the same way that all the other Kepler parts are (K5000 and K10 for example). In much the same vein, you can't validate a HD 7970 or GTX 680 for PCI-E 3.0 operation on an AMD motherboard/CPU - all validation for AMD's HD 7000 series and Kepler was accomplished on Intel hardware.

Nvidia GPU Accelerator Board Specifications

Tesla K20X

Tesla K20

How many times is it now?

It seems you'll do and make up anything to cheerlead on Nvidia's side, even when it's on their own website proving you wrong. I hope they are paying you, because if they aren't it's sad.

:shadedshu

Who's the troll now? :D :laugh:

P.S.

-Still waiting on that 225W server specification link. ;)

Now if you don't think that server racks largely cater for a 225W TDP specced board, I suggest you furnish some proof to the contrary (Hey, you could find all the vendors who spec their blades for 375W TDP boards for extra credit)... c'mon, make a name for yourself, prove Ryan Smith at Anandtech wrong. :rolleyes: While you're at it, try to find where I made any reference to 225W being a server specification for add-in boards. The only mention I made was regarding boards with a 225W specification being generally standardized for server racks.

Y'know, never mind. You made my ignore list.

Do you only read what you want? You just can't own up to the fact that there is no specification, and you implied that there is one.

I was just asking you to provide a link to such a specification, since if there were one it would be available to reference from various credible sources.

No link. No such thing.

Really? Still? Even after you included this in the same post?

Let me remind you of a previous post I have made in this thread, just to enlighten you, since it seems you only read what you want :D

Hmm... I reference PCIe Gen 2 power output + 6-pin power and mention there is a power difference from PCIe 2.0 to 2.1 & 3.0. Oh yeah, I'm also linking to Nvidia's own website with specifications of 2 cards, with diagrams of aux connectors and how they should be used.

And your conclusion is that I thought the PCIe slot was the sole source of power :laugh:

Like I said several times before: follow your own advice, 'cause you're something else.

Speculation is fine, but if I have to choose between your speculation and what Nvidia has posted on their specification sheets, I'll believe Nvidia :laugh:

Classic troll move :toast: "I can't provide proof of what I say, so why don't you disprove it." :laugh:

There is more than just one company. It's a shame you spent all your time just trolling for Nvidia.

You shouldn't get mad when you're wrong. When you're wrong, you're wrong. Move on; don't make up stuff or lash out at people who pointed out something you didn't like. Provide credible links to back up your views.

Being hostile towards others with a different view than yours is no way to enhance the community in this forum. No reason to jump into non-Nvidia threads and start disparaging them or their posters because you didn't like the content or someone doesn't like the same company as much as you do.

Think I'll go have me some hot cocoa. :toast:

Not that old and completely wrong argument repeating again. :banghead: