Wednesday, April 2nd 2014

Radeon R9 295X2 Press Deck Leaked

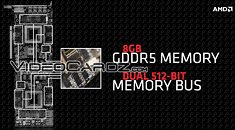

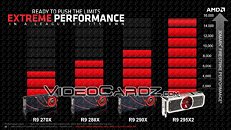

Here are some of the key slides from AMD's press-deck (presentation) for reviewers, for the Radeon R9 295X2 dual-GPU graphics card, ahead of its April 8 launch. The slides confirm specifications that surfaced earlier this week, which describe the card as bearing the codename "Vesuvius" and carrying two 28 nm "Hawaii" GPUs, each with all 2,816 stream processors enabled, alongside 176 TMUs, 64 ROPs, and a 512-bit wide GDDR5 memory interface. The two chips are wired to a PLX PEX8747 48-lane PCI-Express 3.0 bridge chip. There's a total of 8 GB of memory on board, 4 GB per GPU. Lastly, clock speeds are revealed. The GPUs are clocked as high as 1018 MHz, and memory at 5.00 GHz (GDDR5-effective). The total memory bandwidth of the card is hence 640 GB/s, or 320 GB/s per GPU.
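The bandwidth figure follows directly from the bus width and the effective memory clock quoted in the slides. A minimal sketch of that arithmetic is below; the function and variable names are ours for illustration, not anything from AMD's deck.

```python
def gddr5_bandwidth_gbs(bus_width_bits: int, effective_rate_ghz: float) -> float:
    """Peak memory bandwidth in GB/s.

    Each transfer moves bus_width_bits / 8 bytes, and the effective rate
    gives billions of transfers per second.
    """
    return bus_width_bits / 8 * effective_rate_ghz


GPUS = 2                      # two "Hawaii" chips on one board
per_gpu = gddr5_bandwidth_gbs(512, 5.00)
total = per_gpu * GPUS

print(f"per GPU:    {per_gpu:.0f} GB/s")
print(f"card total: {total:.0f} GB/s")
print(f"stream processors: {2816 * GPUS}, memory: {4 * GPUS} GB")
```

Note that the 640 GB/s total is the sum of two independent 320 GB/s memory pools; neither GPU can reach the other's memory at that rate.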

The Radeon R9 295X2 indeed looks like the card which was pictured earlier this week, by members of the ChipHell tech community. It features an air+liquid hybrid cooling solution, much like the ROG ARES II by ASUS. The cooling solution is co-developed by AMD and Asetek. It features a couple of pump-blocks cooling the GPUs, which are plumbed with a common coolant channel running through a single 120 mm radiator+reservoir unit. A 120 mm fan is included. A centrally located fan on the card ventilates heatsinks that cool the VRM, memory, and the PCIe bridge chip.

The card draws power from two 8-pin PCIe power connectors, and appears to use a 12-phase VRM to condition power. The VRM appears to consist of CPL-made chokes, and DirectFETs by International Rectifier. Display outputs include four mini-DisplayPort 1.2, and a dual-link DVI (digital only). The total board power of the card is rated at 500W, well above the 375W that two 8-pin connectors (150W each) and the slot (75W) are specified to deliver, and so AMD is obviously over-drawing power from each of the two 8-pin power connectors. You may require PSUs with strong +12V rails driving them. Looking at these numbers, we'd recommend at least an 800W PSU for a single-card system, ideally with a single +12V rail design. The card is 30.7 cm long, and its coolant tubes shoot out from its top. AMD expects the R9 295X2 to be at least 60 percent faster than the R9 290X at 3DMark FireStrike (performance).
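To put the over-draw in numbers, here is a rough sketch of the power budget. The 75W slot and 150W 8-pin limits are the PCI-Express specification values; the even split between the two connectors and the slot running at its full 75W are our assumptions, since the slides do not say how the load is distributed.

```python
SLOT_W = 75.0         # PCIe x16 slot limit per specification
EIGHT_PIN_W = 150.0   # 8-pin PCIe power connector limit per specification
RAIL_V = 12.0         # nominal +12V rail


def spec_budget_w(eight_pin_count: int) -> float:
    """Power the slot plus N 8-pin connectors are specified to deliver."""
    return SLOT_W + eight_pin_count * EIGHT_PIN_W


def per_connector_load(board_power_w: float, eight_pin_count: int,
                       slot_draw_w: float = SLOT_W) -> tuple[float, float]:
    """Watts and amps per connector, ASSUMING the slot supplies slot_draw_w
    and the remainder is shared evenly between the connectors."""
    watts = (board_power_w - slot_draw_w) / eight_pin_count
    return watts, watts / RAIL_V


budget = spec_budget_w(2)
watts, amps = per_connector_load(500.0, 2)

print(f"spec budget for 8+8: {budget:.0f} W")
print(f"per 8-pin at 500 W board power: {watts:.1f} W ({amps:.1f} A)")
print(f"ratio to 150 W rating: {watts / EIGHT_PIN_W:.2f}x")
```

Under those assumptions each 8-pin connector carries a little over 40 percent more than its rating at stock settings, before any overclocking.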

Source:

VideoCardz

78 Comments on Radeon R9 295X2 Press Deck Leaked

Strange how you only express negative views in AMD threads. Hypocrite much?

I'm flattered though really. Never had a Hobbit pay so much attention to me.

With that said, I think the Titan Z was just Nvidia putting a shot across AMD's nose to get them to respond, and they did. It probably won't be long until we see Nvidia fire back with something.

Even if the board will clock to its max 1018MHz, I'd think that any overclocking will pull significantly more current than the PCI specification, so any prospective owner might want to check their PSU's power delivery per rail (real or virtual). I really couldn't see this card getting to stable 290X overclocks.

8+8 card can pull more than just 375W, as proved with 7990. Just make sure your PSU is single rail, or each 8 pin is on a separated healthy 30A rail.

By the way, 780ti is a 6+8 card, and it can pull a lot more than just 300W.

[Source]
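The connector figures quoted in the comments above can be checked with a short sketch. The per-connector limits are the PCI-Express specification values; the 30A rail is the figure the commenter suggests, and the helper names are purely illustrative.

```python
# Specified power limits in watts (PCI-Express specification values).
LIMITS_W = {"slot": 75, "6-pin": 75, "8-pin": 150}


def spec_limit_w(*connectors: str) -> int:
    """On-paper limit for a card: the slot plus its auxiliary connectors."""
    return LIMITS_W["slot"] + sum(LIMITS_W[c] for c in connectors)


def rail_capacity_w(amps: float, volts: float = 12.0) -> float:
    """Power a single +12V rail can supply at the given current rating."""
    return amps * volts


print("8+8 card:", spec_limit_w("8-pin", "8-pin"), "W")
print("6+8 card:", spec_limit_w("6-pin", "8-pin"), "W")
print("30 A rail:", rail_capacity_w(30), "W")
```

A 30A rail works out to 360W, more than double the 150W rating of the single 8-pin connector it would feed, which is why the advice is one healthy rail per connector.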

Is it too much to ask to have a civilised thread without everyone sharpening their pitchforks and getting all potty mouthed?

I think I preferred it when the worst thing said in one of these threads was "that card looks ugly". I'd almost welcome Jorge at this point (this is a filthy lie).

software to limit tdp so amd/nvidia could upsell youpowertune and the like many volt-modded 4850's way out of spec. The same game was played in reverse when nvidia's 500 line essentially was clocked to the balls end of a pci-e spec for their plugs and overclocking brought them substantially over. The fact of the matter is while you could say pci-e plugs are an evolution of the guidelines of the old 'molex' connector, which is all well and good, they are over-engineered by a factor of around 2-3. This card just seems to bring it down to around 2.

Very interesting! Thanks for this. So it's a factor of around 2 then, if not the card simply limited to that power draw (to stay in the 75+75w spec) and it's even higher.

Well then, I guess you could take 936 + at least 141w then. When you factor in the vrm rated at (taking his word for it) 1125w, it gives an idea of what the card was built to withstand (which is insane). It seems engineered for at least 2x spec, which sounds well within reason for anything anybody is realistically going to be able to do with it. I doubt many people have a power supply that could even pull that with a (probably overclocked) system under load, even with an ideal cooling solution.

Waiting for the good ol' XS/coolaler gentleman to hook one up to a bare-bones cherry-picked system and prove me wrong (while busting some records). That seems pretty much what it was built to do.

On a weird sidenote, their clocks kind of reveal something interesting inherent to the design. First, 1018 is probably where 5ghz runs out of bandwidth to feed the thing. Second, 1018 seems like where 28nm would run at 1.05v, as it's in tune with binning of past products (like 1.218 for the 1200mhz 7870 or 1.175 for the 1150mhz 7970). It's surely a common voltage/power scaling threshold on all processes, but in this case half-way between the .9v spec and 1.2v where 28nm scaling seems to typically end. Surely they aren't running them at 1.05v and using 1.35v 5ghz ram, but it's interesting that they *could* and probably conserve a bunch of power, if not even dropping down to .9v and slower memory speed. If I were them I would bring back the UBER AWSUM RAD TOOBULAR switch that toggled between 1.05/1.35v for said clocks and 1.2v/1.5v-1.55v (because 1.35v 5ghz ram is 7ghz ram binned from hynix/samsung at that spec). That would actually be quite useful for both the typical person that spends $1000 on a videocard (like we all do from time to time) and those that want the true most out of it.

They were more practical in their design. If you would take a look at the PCB, you would understand why they went with the dual 8 pin power connectors.

Because there isn't room for a third, and the card is long as it is.

What I would like to see is how ASUS would design their ARES III. They would probably use a wider PCB and triple 8-pin power plugs. Even so, I don't think there would be room for 8 GB per GPU.

That's why I would be personally interested in this card.

Yes, the Titan Z is viable for gaming, and is probably very good at it. This is not its primary purpose. Please research.

Before anybody says anything, I would never buy a Titan, this isn't "lol you must work for NVidia", this is common sense, because I actually watched the release event where its primary purpose was specifically outlined.

Cheap DPCompute servers. For the millionth time.

"The GTX Titan Z packs two Kepler-class GK110 GPUs with 12GB of memory and a whopping 5,760 CUDA cores, making it a "supercomputer you can fit under your desk," according to Nvidia CEO Jen-Hsun Huang"

Anybody who buys a Titan Z for gaming probably needs to rethink their life, and apply for the Darwin Award.

In other news, I heard this is an AMD thread regarding the 295X2?

But the bottleneck is the connector, not the conductor.

Oh my god.

www.chiphell.com/thread-1002731-2-1.html

AMD's Top Secret Mission

Nvidia should grow some and get a proper dual 780ti on the market, without all the fuss about DP compute and so, 6GB per GPU for less than 1399 USD and it would have a winner.

Likely, Nvidia could tune the board frequencies for performance once the 295X2 arrives...although it wouldn't surprise me for AMD to counter with a lower-cost air-cooled dual 290 (no-X) either.

Haven't owned a dual board since a GeForce 6600GT duallie (early adopter-itis), and I don't see any of these offerings convincing me to return to a dual card.

If all these SKUs come to pass, it seems like both camps are treading water until 20/16FF comes onstream, and the more boards they launch, the less likely it seems that we'll see an early appearance of the new process.