Tuesday, February 28th 2017

NVIDIA Announces DX12 Gameworks Support

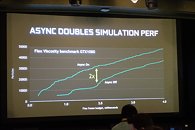

NVIDIA has announced DX12 support for its proprietary GameWorks SDK, including some new exclusive effects such as "Flex" and "Flow." Most interestingly, NVIDIA claims that simulation effects get a massive boost from Async Compute, nearly doubling performance on a GTX 1080 with those kinds of effects. Within DirectX, Async Compute is exclusive to DX12. The performance gains, in an area where NVIDIA is normally perceived as not doing so well, are indeed encouraging, even if only within its exclusive ecosystem. Whether GCN-powered cards will see similar gains when running GameWorks titles remains to be seen.

31 Comments on NVIDIA Announces DX12 Gameworks Support

On the other hand you have AMD technology like TressFX that works well on both AMD and Nvidia.

GameWorks is equivalent to that one time Intel "asked" software devs to compile code using a specially provided compiler that gimped AMD CPUs. The only difference is that Nvidia GameWorks is long-running, and Nvidia fanboys don't seem to care about the damage it is doing to the industry.

"Whether GCN powered cards will see similar gains when running GameWorks titles remains to be seen"

Let me answer that for you: no. I can say with 100% confidence that Nvidia has never let, and will never let, AMD get a boost from GameWorks.

The reason some GameWorks features impacted other vendors' hardware more than Nvidia's is that features like Hairworks, Furworks, Volumetric Lighting, etc. make heavy use of tessellation, which is something Nvidia GPUs excel at. HBAO+, for one example, has a lower performance impact on AMD's Fury X than it does on a 980 Ti.

WorksBroke) won't run at all on GCN. Especially NVIDIA and Intel. But NVIDIA, you know, has to sell you that bridge to nowhere. Maybe NVIDIA will do an about-face, because NVIDIA has to pony up to get any developers to use it the way they want them to, but I certainly won't be holding my breath for that. It's at least plausible because Direct3D 12 is a standard API, so it may use standard compute calls to operate on the GPU instead of CUDA-specific code. I mean, they are touting that Direct3D 12 did something right, aren't they? They never claimed a 200% increase in compute + graphics before, did they? Maybe that's incentive enough to let Intel and AMD accelerate it.

I hope some games (like Witcher 3) and game engines (UE3/UE4) get retroactively patched for GCN support.

If AMD had no power over what runs on their cards, Nvidia could easily check the GPU vendor and run whatever inefficient SSAO method they could come up with.

Luckily, your story is 100% wrong and ignores the entire purpose of a GPU driver.

The level of instant Nvidia hate is amusing.

As W1zzard has said, GW has been opened up to a degree, but even before that they'd released SDKs for their ecosystem.

AMD have played open source because of their position, not because they love everyone. I don't fully trust their partnership with Bethesda to fully optimise their games for AMD.

There has been shit all, nada, nothing in the way of new or even just better visuals in DX12.

Go get a console; you will love the performance and looks.

Either way, this just means we're moving closer and closer to DX12 replacing DX11 as the main DirectX version in use, which is also a good thing.