Monday, August 20th 2018

GALAX Confirms Specs of RTX 2080 and RTX 2080 Ti

GALAX spilled the beans on the specifications of two of NVIDIA's upcoming high-end graphics cards, as it's becoming increasingly clear that NVIDIA could launch the GeForce RTX 2080 and the GeForce RTX 2080 Ti simultaneously, to convince GeForce "Pascal" users to upgrade. The company's strategy appears to be to establish 40-100% performance gains over the previous generation, along with a handful of killer features (such as RTX, VirtualLink, etc.) to trigger the upgrade-itch.

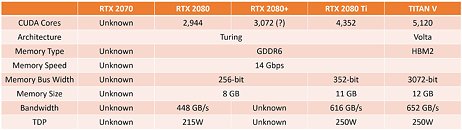

Leaked slides from GALAX confirm that the RTX 2080 will be based on the TU104-400 ASIC, while the RTX 2080 Ti is based on the TU102-300. The RTX 2080 will be endowed with 2,944 CUDA cores and a 256-bit wide GDDR6 memory interface, holding 8 GB of memory; while the RTX 2080 Ti packs 4,352 CUDA cores and a 352-bit GDDR6 memory bus, with 11 GB of memory. The memory data rate is the same on both, at 14 Gbps. The RTX 2080 has its TDP rated at 215 W, and draws power from a combination of 6-pin and 8-pin PCIe power connectors; while the RTX 2080 Ti pulls 250 W TDP, drawing power through a pair of 8-pin PCIe power connectors. You also get to spy GALAX' triple-fan non-reference cooling solution in the slides below.
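For a rough sense of what those bus widths mean at 14 Gbps, peak theoretical memory bandwidth can be estimated as bus width in bytes times the per-pin data rate. The slides do not state bandwidth figures, so the numbers below are derived from the leaked specs, and the function and variable names are only illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s.

    Assumes every pin of the bus transfers data_rate_gbps gigabits per second,
    so bandwidth = (bus width in bits / 8 bits per byte) * per-pin data rate.
    """
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, per-pin data rate in Gbps), taken from the leaked specs
cards = {
    "RTX 2080": (256, 14.0),
    "RTX 2080 Ti": (352, 14.0),
}

for name, (bus_width, data_rate) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(bus_width, data_rate):.0f} GB/s")
```

These are upper bounds on paper; real-world throughput depends on the workload and on the memory controller.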

29 Comments on GALAX Confirms Specs of RTX 2080 and RTX 2080 Ti

But yes, I've had Nvidia ever since my 6600GT, yet the 960 was one card I skipped without thinking twice. My 660Ti carried me all the way to my current 1060. They won't be cheap, because they have no competition. But pre-release prices are always inflated.

Lol, looking at these specs - I highly doubt that. No intention to switch from my 1080 so far. Especially with all the cards being shown as 3-slot so far. Neither my Define C nor my Node 202 case is even fit for hot GPUs. Upgrade after this gen? That's more likely.

I still run a build with a 6600 AGP 8x, and as far as the 900 card goes, I grabbed one because it was the last of the cards that offered me full support for all my needs, without having to buy new monitors or junk well-working software because it was not Win-10 only, etc.

This 900 card is plug and play with all I run and use. A 10 series or newer can't say or do that at all, and funny, you still pay as much or even more for it to limit you and your needs and do less (I guess they know fools and their money are soon parted). Ya, computer building has become a money-grab joke. I'm pretty well done with it.

In my case, I skipped the 760 because it was only like 10% faster than my 660Ti and then the 960 was 10% faster than the 760. That would have never allowed me to play with more AA, let alone at higher resolutions. Going from the 660Ti to the 1060, however, meant that a mostly maxed out Witcher 3 no longer looked like a slideshow ;)

But you know, it's like I buy a 500-buck card that does it all, like say a 900 or older series (XP, analog, etc.), and now they think I'm going to pay 500 bucks for a card that can't? They've got to be nuts thinking I'm spending cash on that deal. But then I guess you do get 20-way RGB lighting with the latest, and that's well worth the price in itself, right?

1070 replaced by 2080

1070ti replaced by 2080+

1080 replaced by 2080ti

Till recently it had to be Nvidia because AMD sucked on Linux. Now AMD is an option for Linux, but their current lineup sucks. So...Yes, you can totally get all that from a + :kookoo:

I had great luck with my mid-priced / mid-range cards. Then if one goes bad you're only out around $200, not $500+. I look at it like a curve across the card lines. Take my AMD 7850: I liked it, and it did a great job as far as a nice-looking display and just fine FPS, but the support was getting bad. Some games would not work or were no longer supported past 12.6, like I said above, and after I think 14.xx I got black screens and whatnot that I never got with the older drivers doing the same things. It's a shame it ended up that way. Not to say NVIDIA is so great, but at least everything I do works under it with one good solid driver (and I don't use their latest ones either; 355.82 on this 900 series today). AMD got to where I had to use this driver to do this and that driver to do that. That got old quick.