Tuesday, October 20th 2020

AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

AMD is preparing to launch its Radeon RX 6000 series of graphics cards, codenamed "Big Navi", and leaks about the upcoming cards keep arriving. Set to launch on October 28th, the Big Navi GPU is based on the Navi 21 revision, which comes in two variants. Thanks to his sources, Igor Wallossek of Igor's Lab has published some information regarding the upcoming graphics card release. More specifically, there are more details about the Total Graphics Power (TGP) of the cards and how it is used across the board (pun intended). To clarify, TDP (Thermal Design Power) is a measurement that applies only to the chip, or die, of the GPU and how much thermal headroom it has; it does not measure the power of the whole graphics card, as there are more heat-producing components on the board.

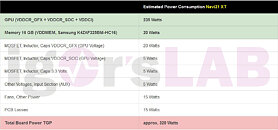

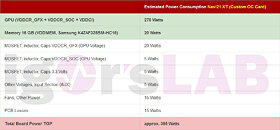

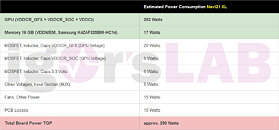

So the breakdown of the Navi 21 XT graphics card goes as follows: 235 Watts for the GPU alone, 20 Watts for Samsung's 16 Gbps GDDR6 memory, 35 Watts for voltage regulation (MOSFETs, inductors, capacitors), 15 Watts for fans and other components, and 15 Watts used up by the PCB and the losses found there. This brings the combined TGP to 320 Watts, showing just how much power is used by the non-GPU elements. For custom OC AIB cards, the TGP is boosted to 355 Watts, as the GPU alone uses 270 Watts. When it comes to the Navi 21 XL GPU variant, the cards based on it use 290 Watts of TGP, as the GPU sees a reduction to 203 Watts and the GDDR6 memory uses 17 Watts. The non-GPU components found on the board use the same amount of power.

When it comes to the selection of memory, AMD uses Samsung's 16 Gbps GDDR6 modules (K4ZAF325BM-HC16). The bundle AMD ships to its AIBs contains 16 GB of this memory paired with the GPU core; however, AIBs are free to use different memory if they want to, as long as it is a 16 Gbps module. You can see the breakdown of the TGP of each card for yourself in the tables.
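The power budget described above is simple addition, so it can be checked with a short sketch. The Python below is only an illustration: the dictionary layout and function names are made up for this example, the wattages are the ones quoted in the text, and the memory figure for the custom OC card is assumed to match the reference XT (20 Watts), since the text does not state it separately.

```python
# Component power figures in watts, as quoted in the article text.
# Names and structure here are illustrative, not taken from the source.
SHARED_BOARD_W = {
    "voltage_regulation": 35,  # MOSFETs, inductors, capacitors
    "fans_and_other": 15,
    "pcb_losses": 15,
}

CARDS = {
    "Navi 21 XT": {"gpu": 235, "memory": 20, "quoted_tgp": 320},
    # Assumption: OC cards use the same 20 W memory figure as the XT.
    "Navi 21 XT (custom OC)": {"gpu": 270, "memory": 20, "quoted_tgp": 355},
    "Navi 21 XL": {"gpu": 203, "memory": 17, "quoted_tgp": 290},
}


def total_graphics_power(gpu_w, memory_w, board=SHARED_BOARD_W):
    """Sum the GPU, memory, and shared board components into a TGP figure."""
    return gpu_w + memory_w + sum(board.values())


def summarize(cards=CARDS):
    """Return (name, summed TGP, quoted TGP, non-GPU watts) for each card."""
    rows = []
    for name, card in cards.items():
        tgp = total_graphics_power(card["gpu"], card["memory"])
        rows.append((name, tgp, card["quoted_tgp"], tgp - card["gpu"]))
    return rows


if __name__ == "__main__":
    for name, tgp, quoted, non_gpu in summarize():
        print(f"{name}: sum {tgp} W, quoted {quoted} W, non-GPU {non_gpu} W")
```

With the figures as quoted, the XT and custom OC sums match the stated 320 and 355 Watts. The XL components add up to 285 Watts, 5 Watts short of the quoted 290 Watts, so one of the XL values in the text presumably differs slightly from the source table.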

Sources:

Igor's Lab, via VideoCardz

153 Comments on AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

And, if the performance/watt numbers for this generation hold, we should get a decent upgrade in performance in the same 75W envelope.

Some people are grumpy with AMD for supposedly not competing with the 3080, while some thought 3070 - and now speculation that one of the higher end cards looks as though it's getting a bit more juice (for whatever competitive reason) - people seem grumpy with that too...

We could practically do that already but never did, if you think about it - without major investments and price hikes. Just drag out 14nm a while longer and make it bigger?

The reality is, we're seeing the cost of RT and 4K added to the pipeline. Efficiency gains don't translate to lower res due to engine or CPU constraints. We're moving into a new era, in that sense. It doesn't look good right now because we're used to a very efficient era in resolutions. Hardly anyone plays at 4K yet, but their GPUs slaughter 1080p and sometimes 1440p. Basically these are new GPUs waiting to solve new problems we don't really have.

Don't get me wrong, I think efficiency should be the paramount concern considering the impending ecological collapse and all, but I think because Nvidia opened the door for a complete disregard for efficiency this time around, I think AMD is following suit and going all out with clocks because they realize they don't have to care about efficiency.

That being said, I wouldn't be surprised if RDNA2 turns out to be extremely efficient once you downclock and undervolt it, much more so than Ampere could or can be.

People, unhappy with high TDP GPUs? Buy a mid-tier one. Really interested in efficiency? Buy the biggest die, undervolt, underclock.

But how about waiting to see some actual numbers (performance, consumption, prices) before getting the pitchforks out?

Who am I kidding, those pitchforks are always out...

You're assuming that based on Igor's assumptions about his leak and you see no flaw in your reasoning? :)

*PS: when 1 kernel is running, when multiple kernels are running this increases linearly, 4 workgroups increase idle time to ~18%.

Underclockers and undervolters will likely be running their cards at 2GHz and sacrificing ~15% of the potential performance to get Sub-200W total board powers.

I am looking forward to reviews but looking forward even more to see what the undervolting potential is. Nothing says "quiet computing" more than an overengineered cooling system for 350+ Watts that barely breaks a sweat at 200W.