Tuesday, October 20th 2020

AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

AMD is preparing to launch its Radeon RX 6000 series of graphics cards, codenamed "Big Navi", and more and more leaks about the upcoming cards keep appearing. Set to launch on October 28th, Big Navi is based on the Navi 21 GPU, which comes in two variants (XT and XL). Thanks to his sources, Igor Wallossek of Igor's Lab has published a handful of details about the upcoming release. More specifically, there are more details about the Total Graphics Power (TGP) of the cards and how it is used across the board (pun intended). To clarify, TDP (Thermal Design Power) applies only to the chip, or die, of the GPU and how much thermal headroom it has; it doesn't measure the power of the whole graphics card, as there are more heat-producing components on the board.

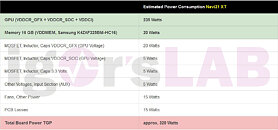

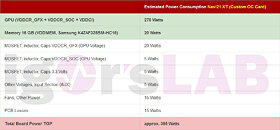

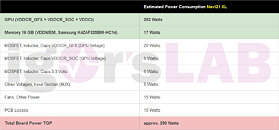

So the breakdown of the Navi 21 XT graphics card goes as follows: 235 W for the GPU alone, 20 W for Samsung's 16 Gbps GDDR6 memory, 35 W for voltage regulation (MOSFETs, inductors, caps), 15 W for fans and other components, and 15 W for the PCB and its losses. This brings the combined TGP to 320 W, showing just how much power (85 W) is used by the non-GPU elements. For custom OC AIB cards, the TGP is boosted to 355 W, as the GPU alone uses 270 W. The Navi 21 XL variant is rated at 290 W of TGP: the GPU drops to 203 W and the GDDR6 memory uses 17 W, while the remaining board components are said to draw roughly the same amount of power as on the XT.

When it comes to memory, AMD uses Samsung's 16 Gbps GDDR6 modules (K4ZAF325BM-HC16). The bundle AMD ships to its AIBs pairs the GPU core with 16 GB of this memory; however, AIBs are free to use different memory if they want, as long as it is a 16 Gbps module. You can check the TGP breakdown of each card in the tables for yourself.
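Taking the reported figures at face value, the board-power arithmetic can be checked with a few lines of Python. The dictionary keys and function names are illustrative labels chosen for this sketch; only the wattages come from the article.

```python
# Reported component figures (watts) for the Navi 21 XT reference board,
# as listed in the article. Keys are illustrative labels, not official names.
NAVI21_XT = {
    "gpu": 235,
    "gddr6_memory": 20,
    "voltage_regulation": 35,  # MOSFETs, inductors, capacitors
    "fans_and_other": 15,
    "pcb_losses": 15,
}

def board_power(components: dict[str, int]) -> int:
    """Sum every component to get the total board figure."""
    return sum(components.values())

def non_gpu_power(components: dict[str, int]) -> int:
    """Everything on the board except the GPU die itself."""
    return board_power(components) - components["gpu"]

xt_total = board_power(NAVI21_XT)
xt_other = non_gpu_power(NAVI21_XT)

# Custom OC AIB card: only the GPU figure changes (235 W -> 270 W).
oc_total = board_power({**NAVI21_XT, "gpu": 270})

# Navi 21 XL: the article gives a 290 W total, 203 W GPU and 17 W memory,
# so the remaining board components get whatever is left over.
xl_remaining = 290 - 203 - 17

print(f"XT total: {xt_total} W, non-GPU share: {xt_other} W")
print(f"OC AIB total: {oc_total} W")
print(f"XL leftover for VRM/fans/PCB: {xl_remaining} W")
```

The XT and OC totals match the quoted 320 W and 355 W. The XL figures leave 70 W for voltage regulation, fans and PCB versus 65 W on the XT, so "the same amount" is best read as approximate.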

Sources:

Igor's Lab, via VideoCardz


153 Comments on AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

I thought GPUs were made to stream data in and out, not do one job at a time.

The command processor and the scheduling logic keep the flow going.

The sALU processes scalar instructions (loops, branching, booleans), and the sGPRs primarily hold booleans, but also function pointers, the call stack, and things of that nature.

The vALUs process vector instructions, which include those "packed" instructions. To be more specific, there are also LDS, load/store, and DPP instructions that go to different units. But by and large, the majority of instructions on AMD GPUs are classified as either vector or scalar.
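To make the scalar/vector split concrete, here is a toy Python model of one wavefront. It is a hypothetical illustration, not AMD's ISA: a value that is uniform across the wavefront (here the loop counter) lives in a single "sGPR" and is updated once, while per-lane data lives in a "vGPR" list. A divergent branch is handled the way GCN/RDNA handle it in principle: every lane evaluates the condition, the result becomes an execution mask, and both sides of the branch run with the mask selecting which lanes are affected. The 32-lane width matches RDNA's wave32 mode; the function and variable names are made up for this sketch.

```python
WAVE_SIZE = 32  # RDNA wave32; GCN used 64-wide wavefronts

def run_wavefront(lane_values: list[int], iterations: int) -> list[int]:
    """Toy model: scalar work happens once per wave, vector work per lane."""
    assert len(lane_values) == WAVE_SIZE
    v_data = list(lane_values)  # "vGPR": one value per lane
    s_counter = 0               # "sGPR": one value for the whole wave

    while s_counter < iterations:  # uniform loop: a scalar-unit decision
        # Divergent condition: evaluated per lane, stored as an exec mask.
        exec_mask = [v % 2 == 0 for v in v_data]
        # "if" side, active lanes only: even values are halved.
        v_data = [v // 2 if active else v for v, active in zip(v_data, exec_mask)]
        # "else" side with the mask inverted: odd values become 3v + 1.
        v_data = [v if active else 3 * v + 1 for v, active in zip(v_data, exec_mask)]
        s_counter += 1  # scalar increment, once for the whole wave
    return v_data

print(run_wavefront(list(range(WAVE_SIZE)), 1)[:5])  # [0, 4, 1, 10, 2]
```

Note that both branch bodies execute for the whole wave; divergence costs time because masked-off lanes sit idle during the side that does not apply to them.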

You're right that the fixed-function pipeline (not shown in the above diagram), in particular rasterization and the ROPs, constitutes a significant portion of the modern GPU. But you can see that the command processor is very far away from the vALUs / sALUs inside the compute units.

AMD's command processors are poorly documented. I can't find anything that describes their operation very well. (Well... I could read the ROCm source code, but I'm not THAT curious...)

But from my understanding, the command processor simply launches wavefronts. That is, it sets up the initial sGPRs for a workgroup (the x, y, and z coordinates of the block), as well as VGPR0, VGPR1, and VGPR2 (the x, y, and z coordinates of the thread). Additional parameters go into sGPRs (shared between all threads). Then it issues a command for the compute unit to jump (or make a function call) to a location in memory. AMD command processors also have a significant amount of hardware scheduling logic for events and the ordering of wavefronts: priorities and the like.

But the shader has already been compiled into machine code by the OpenCL, Vulkan, or DirectX driver and loaded somewhere. The command processor only has to set up the parameters and issue a jump command to send a compute unit to that code (once all synchronization functions, such as OpenCL events, have confirmed that this particular wavefront is ready to run).
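The launch sequence described above can be mimicked in a few lines of Python. This is a hypothetical sketch of the idea only, since the real command processor is poorly documented: for each workgroup, the "command processor" writes the block coordinates and shared kernel arguments into per-workgroup scalar state, passes each thread its local x, y, z (the VGPR0..2 values), and then "jumps" to the already-compiled kernel, modeled here as a plain Python function. The names `launch`, `SGPRs`, and `fill_kernel` are invented for the illustration.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable

@dataclass
class SGPRs:
    """Scalar state shared by every thread in a workgroup (toy model)."""
    group_x: int
    group_y: int
    group_z: int
    args: dict  # extra kernel parameters, identical for all threads

# A "compiled kernel": receives the scalar state plus its own VGPR0..2.
Kernel = Callable[[SGPRs, int, int, int], None]

def launch(kernel: Kernel, grid: tuple[int, int, int],
           block: tuple[int, int, int], args: dict) -> None:
    """Toy command processor: set up registers, then 'jump' to the kernel."""
    for gx, gy, gz in product(*(range(n) for n in grid)):
        sgprs = SGPRs(gx, gy, gz, args)  # per-workgroup scalar setup
        for tx, ty, tz in product(*(range(n) for n in block)):
            # VGPR0, VGPR1, VGPR2 hold the thread's x, y, z within the block.
            kernel(sgprs, tx, ty, tz)   # the "jump" into shader code

def fill_kernel(s: SGPRs, v0: int, v1: int, v2: int) -> None:
    """Example kernel: each thread writes its global x index into a buffer."""
    global_x = s.group_x * s.args["block_x"] + v0
    s.args["out"][global_x] = global_x

out = [None] * 8
launch(fill_kernel, grid=(2, 1, 1), block=(4, 1, 1),
       args={"block_x": 4, "out": out})
print(out)  # [0, 1, 2, 3, 4, 5, 6, 7]
```

The sketch runs threads one after another; real hardware groups them into wavefronts and runs many at once, with the scheduling logic deciding when each wavefront is ready.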

RDNA2 is on an improved 7nm node compared with RDNA1 (the 7NP DUV, not the 7nm+ EUV) that, according to rumors, offers 10-15% higher density; combined with the improvements in the RDNA2 architecture, it is “said” to deliver +50% better perf/W.

If true, where exactly this will place the 6900 against Ampere remains to be seen.

I was expecting it... the 300~320W TBP. It couldn’t be anything else if it is to offer performance similar to the 3080. Fewer watts didn’t add up, and why shouldn’t AMD go up to the same wattage as Ampere? Again, just my thoughts.
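As a rough back-of-the-envelope check, performance can be written as efficiency times power. The sketch below assumes, purely for illustration, that the rumored +50% perf/W holds at any power level and that performance scales linearly with board power, which real GPUs do not do. The 220 W baseline is the 5700 XT average draw quoted in this thread; the function name is made up.

```python
def relative_performance(perf_per_watt_gain: float,
                         new_power_w: float, old_power_w: float) -> float:
    """Performance ratio = (perf/W ratio) x (power ratio).

    Assumes performance scales linearly with power, which is only a
    first-order approximation: real cards lose efficiency as clocks rise.
    """
    return perf_per_watt_gain * (new_power_w / old_power_w)

BASELINE_W = 220.0  # 5700 XT average draw, as quoted in the thread
PERF_PER_W = 1.5    # rumored RDNA2 improvement (+50%)

for board_w in (300.0, 320.0):
    ratio = relative_performance(PERF_PER_W, board_w, BASELINE_W)
    print(f"{board_w:.0f} W -> about {ratio:.2f}x a 5700 XT")
```

Under these simplifying assumptions a 300 to 320 W RDNA2 card lands at roughly twice a 5700 XT; where the real figure ends up depends on how far up its voltage/frequency curve the card is pushed.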

—————————————

Personally, I don’t care whether a GPU draws 350 or 400W. I used to have an R9 390X OC model with a 2.5-slot cooler and it was just fine. That card was rated at 375W TBP.

The 5700 XT I have now delivers more than twice the performance, with 240W peaks and 220W average power draw.

Every flagship GPU is set to run (when maxed out) beyond the efficient part of its curve, unless there is no competition.

Besides the power and performance sliders, AMD's drivers also offer a feature called “Chill”, where you can set a min/max FPS target. In most games, if I use this feature to cap FPS at 40/60 min/max, the card's average draw is less than 100W.

My monitor is 60Hz, and that is the target while moving. If you stop moving in-game, the FPS drops to 40. I can set it to 60/60 if I like.
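The Chill behavior described here can be modeled as a frame-rate target that switches between the two limits depending on whether the player is giving input. This is a hypothetical simplification, not AMD's driver logic: it simply picks the max target while there is input and the min target when there is none, then converts that target into a per-frame time budget. `ChillSettings`, `target_fps`, and `frame_budget_ms` are names invented for the sketch.

```python
from dataclasses import dataclass

@dataclass
class ChillSettings:
    min_fps: int  # target while idle (no input)
    max_fps: int  # target while the player is moving

def target_fps(settings: ChillSettings, input_active: bool) -> int:
    """Pick the frame-rate target from current input activity (simplified)."""
    return settings.max_fps if input_active else settings.min_fps

def frame_budget_ms(fps: int) -> float:
    """Time available per frame at a given frame-rate cap."""
    return 1000.0 / fps

chill = ChillSettings(min_fps=40, max_fps=60)
for moving in (True, False):
    fps = target_fps(chill, moving)
    print(f"moving={moving}: {fps} FPS, {frame_budget_ms(fps):.1f} ms per frame")
```

Setting 60/60 collapses the two targets into a single fixed cap, so the budget stays at about 16.7 ms whether or not you are moving.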

My monitor is a 13.5-year-old 1920x1200 16:10 panel, and I was planning to switch to an ultrawide 6 months ago, but the human malware changed that, along with other aspects of my (our) life(s).

There is no point in me complaining about the amount of power GPUs draw: buy a lower-tier model. And perf/W keeps improving. We just can’t always use flagship models as examples.

Unofficial ROCm support for Navi is beginning to appear in ROCm 3.7 (released in August 2020). But for over a year, compute fans were unable to use ROCm at all on Navi. To be fair, AMD never promised ROCm support on all of its cards. But it really takes the wind out of people's sails when they're unable to "play" with their cards. Even older cards like the RX 550 never really got ROCm support (only the RX 580 got official support).

For now, my recommendation for AMD GPU-compute fans is to read the documentation carefully before buying, and to wait for a Radeon Machine Intelligence card based on the same chip, like the MI25 (aka Vega 64), to come out before buying the consumer model. AMD ROCm is clearly aimed at their MI platform and not really at their consumer cards. The MI6 (aka RX 580) and MI8 (aka R9 Fury) have good support, but not necessarily other cards.

---------

ROCm suddenly getting support for Navi in 3.7 suggests that the new Navi 2x series might have an MI card in the works, and therefore might be compatible with ROCm.

There are ways to keep consumption down. You can limit FPS in some games, you can activate v-sync so you get 60 FPS and keep GPU load down, or you can download, for example, MSI Afterburner. It has a little slider called power target, with which you can limit the maximum power the card is allowed to use. As far as I know, the RTX 3080 can be limited all the way down to only 100 watts. Undervolting can also save you some watts: again, it seems the RTX 3080 can save up to 100 watts just by limiting the maximum GPU voltage, without too much performance loss. I have used the power target slider for years to set a suitable power consumption.
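Why a frame cap (or v-sync at 60 Hz) lowers GPU load can be shown with simple arithmetic: once a frame finishes before its time slot is up, the GPU sits idle for the remainder. The sketch assumes a hypothetical fixed render time per frame and ignores clock and voltage changes, which in practice save even more power; `gpu_busy_fraction` and `RENDER_MS` are made-up names.

```python
from typing import Optional

def gpu_busy_fraction(render_ms: float, fps_cap: Optional[float]) -> float:
    """Fraction of wall time the GPU spends rendering under an optional cap.

    render_ms: how long one frame takes to render (hypothetical, fixed).
    fps_cap:   frame-rate limit, or None for uncapped.
    """
    if fps_cap is None:
        return 1.0  # uncapped: the next frame starts as soon as one finishes
    slot_ms = 1000.0 / fps_cap
    # If rendering takes longer than the slot, the cap is never reached.
    return min(1.0, render_ms / slot_ms)

RENDER_MS = 8.0  # hypothetical: the card could do 125 FPS uncapped
for cap in (None, 60, 40):
    busy = gpu_busy_fraction(RENDER_MS, cap)
    print(f"cap={cap}: GPU busy {busy:.0%} of the time")
```

A power-target slider works from the other direction: instead of adding idle time, it caps the power the card may draw and lets clocks fall until it fits.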

I am not expecting to get an RDNA2-based card. But I do hope AMD can still provide a good amount of resistance to the RTX 3080, because, as we all know, competition is good for consumer pricing.

If you are shader-launch constrained, it isn't a big deal to have a `for (int i = 0; i < 16; i++) { ... }` statement wrapping your shader code. Just loop your shader 16 times before returning.

Yeah, I remember seeing the slide but I couldn't remember where to find it. Thanks for the reminder. You'd think something like that would be in the ISA documentation. Really, AMD needs to put out a new optimization guide that contains information like this (they haven't written one since the 7950 series).

slideplayer.com/slide/17173687/

There is also "AMD GPU Hardware Basics".
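Wrapping the shader body in a 16-iteration loop, as suggested earlier in this exchange, is a form of thread coarsening: each launched thread handles 16 work items, so about 16 times fewer threads (and wavefronts) need to be launched. The Python sketch below only checks the index arithmetic of that idea; `ITEMS_PER_THREAD`, `process`, `coarsened_launch`, and the strided indexing are illustrative choices, not code from the slides mentioned.

```python
ITEMS_PER_THREAD = 16  # the loop count from the comment above

def process(item: int) -> int:
    """Placeholder for the real per-item shader work."""
    return item * item

def coarsened_launch(n_items: int) -> list[int]:
    """Launch ceil(n/16) 'threads'; each loops 16 times over strided indices."""
    n_threads = -(-n_items // ITEMS_PER_THREAD)  # ceiling division
    out = [0] * n_items
    for tid in range(n_threads):              # one pass per launched thread
        for i in range(ITEMS_PER_THREAD):     # the wrapping for-loop
            # Strided indexing keeps neighbouring threads on neighbouring
            # items in each iteration, which tends to help memory coalescing.
            idx = i * n_threads + tid
            if idx < n_items:                 # guard the ragged tail
                out[idx] = process(idx)
    return out
```

Because `idx = i * n_threads + tid` with `tid < n_threads` is a unique decomposition, every item is processed exactly once, and the bounds check covers sizes that are not a multiple of 16.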

Basically, a hodge-podge of reasons why we cannot keep GPUs fully busy. Pretty funny stuff: an engineered list of excuses for why their hardware doesn't work.

Of course, there will be many people happy with the current situation as well. So, I can see and understand both sides of the argument.

Absolutely not. I bitched about Nvidia and their wattage vs. performance, and when this card comes out I will bitch about that one too.

This has never been and never will be Nvidia vs AMD. It is and always will be about the best bang for the buck.

Again, the power draw numbers are not official, so relax until we have actual numbers. But don't be surprised if they end up in the same range as Nvidia's, because it will take MORE POWER to run realistic frame rates at 4K, where the pixel count is really steep!
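The 4K point is easier to see as raw pixel counts per frame. Assuming, for simplicity, that shading work scales with the number of pixels (it only does so roughly), 4K means about four times the work of 1080p at the same frame rate. The 1920x1200 resolution mentioned earlier in the thread is included for comparison; the table of resolutions is just an illustrative selection.

```python
RESOLUTIONS = {
    "1920x1080": (1920, 1080),
    "1920x1200": (1920, 1200),
    "2560x1440": (2560, 1440),
    "3840x2160": (3840, 2160),
}

def pixels(width: int, height: int) -> int:
    """Pixels the GPU must produce for one frame."""
    return width * height

base = pixels(*RESOLUTIONS["1920x1080"])
for name, (w, h) in RESOLUTIONS.items():
    count = pixels(w, h)
    print(f"{name}: {count:,} pixels, {count / base:.2f}x 1080p, "
          f"{count * 60 / 1e6:.0f} Mpixels/s at 60 FPS")
```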

The same applies to a 105 W AM4 CPU and the rest of the system.

Unless you have everything running fully loaded non-stop, you won't hit the maximum power numbers you are using to make your argument. Current hardware is very good at quickly dropping into lower power states when needed, and pretty much everything out today is very good at idle power draw.
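The gap between a card's rated maximum and what it actually consumes is a time-weighted average. The profile below uses hypothetical placeholder numbers, not measurements; it only shows how a card rated for 320 W can average far less when it spends most of its time in lower power states. `PROFILE` and `average_power` are names made up for this sketch.

```python
# Hypothetical usage profile: (state, power in watts, fraction of time).
PROFILE = [
    ("idle / desktop", 15.0, 0.50),
    ("video playback", 40.0, 0.20),
    ("capped gaming", 150.0, 0.25),
    ("full load", 320.0, 0.05),
]

def average_power(profile: list[tuple[str, float, float]]) -> float:
    """Time-weighted mean power; the time fractions must sum to 1."""
    total_fraction = sum(frac for _, _, frac in profile)
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("time fractions must sum to 1")
    return sum(watts * frac for _, watts, frac in profile)

peak = max(watts for _, watts, _ in PROFILE)
print(f"peak: {peak:.0f} W, average: {average_power(PROFILE):.1f} W")
```

With these placeholder numbers the average comes out at 69 W against a 320 W peak; the real ratio depends entirely on how the card is used.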