Wednesday, May 19th 2021

Intel to Detail "Alder Lake" and "Sapphire Rapids" Microarchitectures at Hot Chips 33, This August

Intel will detail its 12th Gen Core "Alder Lake" client and "Sapphire Rapids" server CPU microarchitectures at the Hot Chips 33 conclave, this August. In fact, Intel's presentation leads the CPU sessions on the opening day of August 23. "Alder Lake" will be the session opener, followed by AMD's presentation of the already-launched "Zen 3," and IBM's 5 GHz Z processor powering its next-gen mainframes. A talk on Xeon "Sapphire Rapids" follows this. Hot Chips is predominantly an engineering conclave, where highly technical sessions are presented by engineers from major semiconductor firms; and so the sessions on "Alder Lake" and "Sapphire Rapids" are expected to be very juicy.

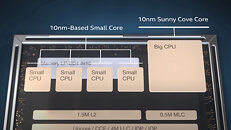

"Alder Lake" is Intel's attempt at changing the PC ecosystem by introducing hybrid CPU cores, a concept introduced to the x86 machine architecture with "Lakefield." The processor will also support next-generation I/O, such as DDR5 memory. The "Sapphire Rapids" server CPU microarchitecture will see an increase in CPU core counts, next-gen I/O such as PCI-Express 5.0, CXL 1.1, DDR5 memory, and more.

Source:

Hot Chips 33

"Alder Lake" is Intel's attempt at changing the PC ecosystem by introducing hybrid CPU cores, a concept introduced to the x86 machine architecture with "Lakefield." The processor will also support next-generation I/O, such as DDR5 memory. The "Sapphire Rapids" server CPU microarchitecture will see an increase in CPU core counts, next-gen I/O such as PCI-Express 5.0, CXL 1.1, DDR5 memory, and more.

14 Comments on Intel to Detail "Alder Lake" and "Sapphire Rapids" Microarchitectures at Hot Chips 33, This August

You should look up the latency of Alder Lake on DDR5. This is why they consider supporting DDR4 as well.

With the above split currently, each application will just use how much resources it needs and you'll really have no issues whatsoever unless you start getting bottlenecked by the actual CPU. But wouldn't big.LITTLE start stealing threads from other applications which might actually need those because it thinks it knows what's best for the user?

I don't see how an "intelligent" hardware scheduler is this gonna handle this decently anytime soon.

Then the big cores are used fro indeed your gaming or rendering etc.

Actually its probably so that the little cores by default dont do anything and that programmes etc are added via updates that then allow themselves to be ran on the little cores and with speculation that those little cores are actually pretty decent, that could end up being quite a few programmes.

The moment the CPU detects heavy loads coming in it will switch or assign the threads in this case to the bigger cores. Nothing new really.

Windows is infamous for messing up NUMA which is essentially "Big.Big", so I am not keeping my hopes up for M$.

On the few Lakefield systems I got my hands on, the default behavior when I ran Cinebench was to ignore the Ice Lake core and run it on the 4 Atom cores.

If I change the CPU affinity to force it to run on all cores, it sometimes does the oppsite, and just runs it on the single Ice Lake core.

One concern in latency sensitive situation; e.g. a game have one of its threads with low load but still synchronized, so other threads may have to wait for it causing stutter etc.

Another problem is the increased load on the OS scheduler in general, having to do shuffle threads around more, especially if they suddenly increase their load. This will probably cause stutter. I know the whole OS can come to a crawl if you overload the OS scheduler with many thousands of light threads, with a little load on each.This is completely untrue.

64-bit software has nothing to do with maximum memory size, and since people in 2021 still keep repeating this nonsense, the fact police have to step in once again.

When talking about 64-bit CPUs we are referring to the register width/data width of the CPU, contrary to the address width which determines directly addressable memory.

Let's take some historical examples;

Intel 8086: 16-bit CPU with 20-bit address width (1 MB)

Intel 80286: 16-bit CPU with 24-bit address width (16 MB)

MOS 6502 (used in Commodore 64, Apple 2, NES etc.): 8-bit CPU with 16-bit address width (64 kB)

It's just a coincidence that consumer systems at time when most people switched to 64-bit CPUs and software were just supporting 4 GB RAM, which has lead people to believe there is a relation between the two. At the time, 32-bit Xeons were supporting up to 64 GB in both Windows and Linux.

Regarding usage of 64-bit software, you will find very little 32-bit software running on a modern system.

While 64-bit software certainly makes addressing >4GB easier, the move to 64-bit was primarily motivated by computational intensive applications with got much greater performance. Recompiling anything but assembly code to 64-bit is trivial, and the only significant "cost" is a very marginal increase in memory usage (pointers are twice as large), but this disadvantage is small compared to the benefits of faster computation and easier access beyond 4GB.

And FYI, most applications and services running in both Windows and Linux today run 2 or more threads.The problems you describe about JS is real, but have nothing to do with hardware. JS is a bloated piece of crap both due to the horrible design of the ECMAScript standards and due to "everyone" writing extremely bloated things in JS.

As someone who has programmed for decades I look in horror how even a basic web page can consume a CPU using any of the common JS frameworks. :fear: