Friday, May 27th 2016

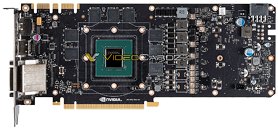

NVIDIA GeForce GTX 1070 Reference PCB Pictured

Here's the first picture of an NVIDIA reference-design PCB for the GeForce GTX 1070. The PCB (PG411) is similar to that of the GTX 1080 (PG413), except for two major differences: VRM and memory. The two PCBs are pictured below in that order. The GTX 1070 PCB features one fewer VRM phase than the GTX 1080. The other major difference is that it features larger GDDR5 memory chips, compared to the smaller GDDR5X memory chips found on the GTX 1080. These are 8 Gbps chips, and according to an older article, they run at the maximum clock speed their specification allows, at which the memory bandwidth works out to 256 GB/s. The GeForce GTX 1070 will be available by 10th June.
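For reference, the 256 GB/s figure can be reproduced with simple arithmetic. The sketch below assumes a 256-bit memory bus, which is not stated in the text above; the function name is purely illustrative.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    data_rate_gbps: per-pin data rate in Gbit/s (8 Gbps for these GDDR5 chips).
    bus_width_bits: total memory bus width in bits (256 is an assumption here).
    """
    # Each pin moves data_rate_gbps gigabits per second; divide by 8 for bytes.
    return data_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    print(memory_bandwidth_gb_s(8.0, 256))  # 256.0
```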

Source: VideoCardz

37 Comments on NVIDIA GeForce GTX 1070 Reference PCB Pictured

Just for knowledge, the schedulers have moved into the SMs; I believe this started with Fermi. So each SM is responsible for issuing its own math instructions as well as its own memory load/store requests. When an SM is disabled, so are the schedulers in it. There is no reserving of memory bandwidth for SMs that aren't active, because the schedulers that would be trying to use that bandwidth are disabled as well.

Furthermore, how you explained it is not how a scheduler works, even if the schedulers weren't inside the SMs. The scheduler receives memory load/store requests, and then it executes them in the order it feels is best. It doesn't make requests just sit there and wait because it hasn't heard from SM3 in a while and thinks it should reserve some time so SM3 can access the memory bus. If there are requests in the queue, it processes them as fast as it can.
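A toy sketch of that point, not a model of any real GPU: it compares a scheduler that serves whatever is queued against a hypothetical fixed-slot scheme that reserves a turn for every SM, including a disabled one. The function names, the one-request-per-cycle cost, and the four-SM layout are all assumptions made for illustration.

```python
from collections import deque


def work_conserving(requests):
    """Serve one pending request per cycle; never idle while work is queued."""
    queue = deque(requests)
    cycles = 0
    while queue:
        queue.popleft()
        cycles += 1
    return cycles


def fixed_slots(requests, num_sms):
    """Hypothetical scheme: cycle i is reserved for SM i % num_sms, used or not."""
    queues = {sm: deque() for sm in range(num_sms)}
    for sm, addr in requests:
        queues[sm].append(addr)
    cycles = 0
    while any(queues.values()):
        sm = cycles % num_sms
        if queues[sm]:
            queues[sm].popleft()  # the slot is used
        cycles += 1               # the slot passes either way
    return cycles


# SMs 0-2 each issue four requests; SM 3 is disabled and issues none.
requests = [(sm, addr) for addr in range(4) for sm in range(3)]

print(work_conserving(requests))  # 12
print(fixed_slots(requests, 4))   # 15
```

In the first case the bus is busy every cycle; only the made-up fixed-slot scheme loses cycles to the SM that never asks for anything.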

There are a few reasons for it: not blowing the interconnects of the PCB by pushing too much current through them, and making sure they don't get a shitload of dead cards returned because people OC'ed them extremely high.

AMD has them as well, inside their GPUs and CPUs. The CPUs have a BIOS setting that disables the overcurrent protection, allowing the CPU to draw even more power for higher clocks, but going past the OCP limit means you can fry your CPU with excessive current.

OC'ers just solder their own VRM onto their GPU.

I wonder if the missing VRM phase on that card can be 're-enabled' by just soldering the missing components onto it.

I'm not saying your observations are wrong, but it is just the reality of the current state of the market.

AMD are currently trying to flog a $1500 Radeon Pro Duo, after all. Prosumer or not, the card makes zero sense right now.

Anyway, I can't wait to revisit this conversation when the 1180 Golden Founder's Edition is $799.

Thank you.