Sunday, April 30th 2017

NVIDIA to Support 4K Netflix on GTX 10 Series Cards

Up to now, only users with Intel's latest Kaby Lake architecture processors could enjoy 4K Netflix, due to some strict DRM requirements. Now, NVIDIA is taking it upon itself to allow users with one of its GTX 10 series graphics cards (absent the 1050, at least for now) to enjoy some 4K Netflix and chillin'. You do have to jump through some hoops to get there, but none of them should pose a problem.

The requirements to enable Netflix UHD playback, as per NVIDIA, are so:

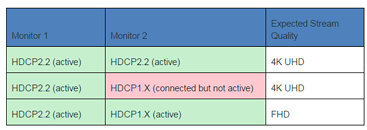

In case of a multi monitor configuration on a single GPU or multiple GPUs where GPUs are not linked together in SLI/LDA mode, 4K UHD streaming will happen only if all the active monitors are HDCP2.2 capable. If any of the active monitors is not HDCP2.2 capable, the quality will be downgraded to FHD.What do you think? Is this enough to tide you over to the green camp? Do you use Netflix on your PC?

Sources:

NVIDIA Customer Help Portal, Eteknix

The requirements to enable Netflix UHD playback, as per NVIDIA, are as follows:

- NVIDIA Driver version exclusively provided via Microsoft Windows Insider Program (currently 381.74).

  - No other GeForce driver will support this functionality at this time.

  - If you are not currently registered for WIP, follow this link for instructions to join: insider.windows.com/

- NVIDIA Pascal based GPU, GeForce GTX 1050 or greater with minimum 3GB memory

- HDCP 2.2 capable monitor(s). Please see the additional section below if you are using multiple monitors and/or multiple GPUs.

- Microsoft Edge browser or Netflix app from the Windows Store

- Approximately 25Mbps (or faster) internet connection.

In case of a multi monitor configuration on a single GPU or multiple GPUs where GPUs are not linked together in SLI/LDA mode, 4K UHD streaming will happen only if all the active monitors are HDCP2.2 capable. If any of the active monitors is not HDCP2.2 capable, the quality will be downgraded to FHD.

What do you think? Is this enough to tide you over to the green camp? Do you use Netflix on your PC?
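For readers who want to sanity-check their own setup, the requirements and the multi-monitor rule quoted above can be sketched as a small checklist in Python. This is only an illustration of the logic as NVIDIA words it, not an official tool: the `Monitor` and `Setup` types, their field names and the `unmet_requirements` function are all hypothetical, the 25 Mbps threshold is treated as a hard cutoff even though NVIDIA says "approximately", and the SLI/LDA-linked case is left out because the quoted text does not say how it behaves.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Monitor:
    name: str
    hdcp_2_2: bool       # True if the display is HDCP 2.2 capable
    active: bool = True  # only active monitors count, per NVIDIA's note


@dataclass
class Setup:
    # All field names here are illustrative assumptions, not NVIDIA terms.
    insider_driver: bool   # driver obtained via the Windows Insider Program
    pascal_gpu: bool       # GeForce GTX 10 series (Pascal) card
    gpu_memory_gb: float
    client: str            # "edge" or "netflix_app"
    bandwidth_mbps: float
    monitors: List[Monitor]


def unmet_requirements(setup: Setup) -> List[str]:
    """Return the requirements from the list above that the setup fails.

    An empty list means every listed requirement is met. Non-SLI
    configurations only; the linked-GPU case is not modelled.
    """
    problems = []
    if not setup.insider_driver:
        problems.append("driver is not the Windows Insider Program release")
    if not setup.pascal_gpu:
        problems.append("GPU is not Pascal based")
    if setup.gpu_memory_gb < 3:
        problems.append("GPU has less than 3GB of memory")
    if setup.client not in {"edge", "netflix_app"}:
        problems.append("client is not Microsoft Edge or the Netflix app")
    if setup.bandwidth_mbps < 25:
        problems.append("internet connection is slower than about 25Mbps")
    # Multi-monitor rule: every active monitor must be HDCP 2.2 capable.
    for monitor in setup.monitors:
        if monitor.active and not monitor.hdcp_2_2:
            problems.append(f"active monitor '{monitor.name}' lacks HDCP 2.2")
    return problems


if __name__ == "__main__":
    example = Setup(
        insider_driver=True,
        pascal_gpu=True,
        gpu_memory_gb=8,
        client="edge",
        bandwidth_mbps=50,
        monitors=[Monitor("4K TV", True), Monitor("old 1080p panel", False)],
    )
    for problem in unmet_requirements(example):
        print(problem)
```

In the example at the bottom, the second monitor is the only failing item, which is exactly the case where NVIDIA says playback drops to FHD. Disabling that monitor (setting `active=False`) clears the list.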

59 Comments on NVIDIA to Support 4K Netflix on GTX 10 Series Cards

And it's not just OpenGL. Your pics will have banding in them even with 30 bit display enabled in Adobe unless you have a Quadro.

For 10-bit support, you need the video card + OS + monitor to work hand in hand. This isn't new, it's been known for ages. Most likely test1test2 made an uninformed buy and is now venting over here.

Again, how exactly is Nvidia screwing us? If you do professional work, you buy a professional card. If you play games/watch Netflix, a GeForce is enough. Where's the foul play? How is Nvidia different from AMD? And once again, how is this related to the topic at hand?

Adobe only added support for 30-bit in 2015.

The limitations are on the TV side with HDMI, and not the GPU (HDMI can't do 4:4:4/RGB with 12-bit color, only 4:2:2 at 12-bit; DP can, but my TV doesn't have that).
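The arithmetic behind that last point can be sketched roughly as follows, assuming the commenter means 4K at 60 Hz over HDMI 2.0 (the comment states neither). The sketch uses the standard 594 MHz pixel clock for 3840x2160 at 60 Hz and HDMI 2.0's 600 MHz TMDS character rate ceiling. The function names are made up for illustration, and the model is deliberately simplified: 4:4:4/RGB scales the clock with bit depth, while 4:2:2 is carried at up to 12 bits in the same clock as 8-bit 4:4:4.

```python
# Assumed link and mode: HDMI 2.0 carrying 3840x2160 at 60 Hz.
HDMI_2_0_MAX_TMDS_MHZ = 600.0
PIXEL_CLOCK_4K60_MHZ = 594.0  # 4400 x 2250 total pixels x 60 Hz


def tmds_clock_mhz(pixel_clock_mhz: float, bits: int, chroma: str) -> float:
    """Approximate TMDS clock needed for a given bit depth and chroma format."""
    if chroma == "4:2:2":
        # HDMI packs 4:2:2 (up to 12 bits) into the 8-bit 4:4:4 clock rate.
        return pixel_clock_mhz
    if chroma == "4:4:4":
        # Deep color on 4:4:4/RGB raises the clock by bits / 8.
        return pixel_clock_mhz * bits / 8
    raise ValueError(f"unsupported chroma format: {chroma}")


def fits_hdmi_2_0(bits: int, chroma: str) -> bool:
    """True if the 4K60 mode fits under the HDMI 2.0 TMDS ceiling."""
    clock = tmds_clock_mhz(PIXEL_CLOCK_4K60_MHZ, bits, chroma)
    return clock <= HDMI_2_0_MAX_TMDS_MHZ


if __name__ == "__main__":
    for bits, chroma in [(8, "4:4:4"), (12, "4:4:4"), (12, "4:2:2")]:
        clock = tmds_clock_mhz(PIXEL_CLOCK_4K60_MHZ, bits, chroma)
        print(bits, chroma, clock, fits_hdmi_2_0(bits, chroma))
```

Under these assumptions, 12-bit 4:4:4 would need about 891 MHz, well past the 600 MHz limit, while 12-bit 4:2:2 stays at 594 MHz and fits.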