Sunday, December 3rd 2017

Onward to the Singularity: Google AI Develops Better Artificial Intelligences

The singularity concept isn't a simple one. Attached to it is not only the idea of an Artificial Intelligence capable of constant self-improvement, but also the idea that the invention and deployment of this kind of AI will trigger ever-accelerating technological growth - so much so that humanity will see itself changed forever. Now, really, some pieces of technology have already changed the fabric of society. We've seen this happen with the Internet, which bridged gaps in time and space and ushered humanity towards frankly inspiring times of growth and development. Even smartphones, due to their adoption rates and capabilities, have transformed human interaction and the ways we connect with each other, even sparking some smartphone-related psychological conditions. But all of those will definitely, definitely, pale in comparison to the changes that might follow the singularity.

The thing is, up to now, we've been shackled in our (still tremendous) growth by our own capabilities as a species: our world is built on layers upon layers of work by brilliant minds that have developed the framework of technologies our society is now interspersed with. But this means that as fast as development has been, it has still been somewhat slowed down by humanity's ability to evolve, and to develop. Each development has come with almost perfect understanding of what came before it: it's a cohesive whole, with each step being provable and verifiable through the scientific method, a veritable "standing atop the shoulders of giants". What happens, then, when we lose sight of the thought process behind developments: when the train of thought behind them is so exquisite that we can't really understand it? When we deploy technologies and programs that we don't really understand? Enter the singularity, an event we've stopped walking towards: it's more of a hurdle race now, and perhaps more worryingly, it's no longer fully controlled by humans.

To our forum-dwellers: this article is marked as an Editorial.

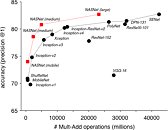

Google has been one of the companies at the forefront of AI development and research, much to the chagrin of AI-realists Elon Musk and Stephen Hawking, who have been extremely vocal on the dangers they believe unchecked development in this field could bring to humanity. One of Google's star AI projects is AutoML, announced by the company in May 2017. Its purpose: to develop other, smaller-scale "child" AIs, with AutoML acting as the controller neural network. And that it did: in smaller deployments (like CIFAR-10 and Penn Treebank), Google engineers found that AutoML could develop AIs that performed on par with custom designs by AI development experts. The next step was to look at how AutoML's designs would fare on larger datasets. For that purpose, Google told AutoML to develop an AI specifically geared for image recognition of objects - people, cars, traffic lights, kites, backpacks - in live video. Google engineers named this AutoML brainchild NASNet, and it brought about better results than human-engineered image recognition software.

According to the researchers, NASNet was 82.7% accurate at predicting images - 1.2% better than any previously published results based on human-developed systems. NASNet is also 4% more efficient than the best published results, with a 43.1% mean Average Precision (mAP). Additionally, a less computationally demanding version of NASNet outperformed the best similarly-sized models for mobile platforms by 3.1%. This means that an AI-designed system actually outperformed every published human-developed one in these tests. Now, luckily, AutoML isn't self-aware. But this particular AI has been increasingly put to work in improving its own code.

AIs are some of the most interesting developments in recent years, and have been the actors of countless stories of humanity being removed from the role of creators to that of mere resources. While doomsday scenarios may be too far removed from the realm of possibility as of yet, they tend to increase in probability the more effort is put towards the development of AIs. There are some research groups that are focused on the ethical boundaries of developed AIs, such as Google's own DeepMind, and the Future of Life Institute, which counts Stephen Hawking, Elon Musk, and Nick Bostrom among the members of its scientific advisory board, alongside other high-profile players in the AI space. The "Partnership on AI to benefit people and society" is another one of these groups worth mentioning, as is the Institute of Electrical and Electronics Engineers (IEEE), which has already proposed a set of ethical rules to be followed by AI.

Having these monumental developments occurring so fast in the AI field is certainly inspiring when it comes to humanity's ingenuity; however, there must exist some security measures around this. For one, I myself ponder on how fast these AI-fueled developments can go, and should go, in the face of human scientists finding it increasingly difficult to keep up with these developments, and what they entail. What happens when human engineers see that AI-developed code is better than theirs, but they don't really understand it? Should it be deployed? What happens after it's been integrated with our systems? It would certainly be hard for human scientists to revert some changes, and fix some problems, in lines of code they didn't fully understand in the first place, wouldn't it?

And what to say regarding an acceleration of progress fueled by AIs - so fast and great that the changes it brings about in humanity are too fast for us to be able to adapt to them? What happens when the fabric of society is so plied with changes and developments that we can't really internalize these, and adapt to how society should work? There have to be ethical and deployment boundaries, and progress will have to be kept in check - progress for progress's sake would simply be self-destructive if the slower part of society - humans themselves - doesn't know how to adapt, and isn't given time to. Even for most of us enthusiasts, how our CPUs and graphics cards work is already just a set of vague ideas and incomplete schematics in our minds. What to say of systems and designs that were conceived and designed by machines and bits of code - would we really understand them? I'd like to cite Arthur C. Clarke's third law here: "Any sufficiently advanced technology is indistinguishable from magic." Aren't AI-created AIs already blurring that line, and can we trust ourselves to understand everything that entails?

This article isn't meant to be a doomsday-scenario planner, or even a negative piece on AI. These are some of the most interesting times - and developments - that most of us have seen, with steps taken here having the chance of being some of the most far-reaching ones in our history - and future - as a species. The way from Homo Sapiens to Homo Deus is rife with dangers, though; debate and conscious thought of what these scenarios might entail can only better prepare us for what developments may occur. Follow the source links for various articles and takes on this issue - it really is a world out there.

Sources:

DeepMind, Futurism, The Guardian, The Ethics of Artificial Intelligence pdf - Nick Bostrom, Future of Life Institute, Google Blog: AutoML, IEEE @ Tech Republic

39 Comments on Onward to the Singularity: Google AI Develops Better Artificial Intelligences

It is true, it is a rat race now. I hope the USA wins this race. It is the biggest one of them all. I live in the USA, so I am a little biased. lol

I don't think AI is scary at all. AI is still creating AI within the confines of its original intent (it's not just randomly writing code for a video game out of nowhere), and therein lies something we need to define: what does it mean to be a self-aware entity?

As of today, the level of "AI" is still far from what is mentioned and explained; it's all still "man"-controlled to a certain degree.

All AIs have to do is sit back and wait.

The point is, like how air particles form a hurricane, the physical scaling of these weather phenomena transcends that of the size of air particles. It is my opinion that general intelligence is an emergent property of neuronal connections, so that when we can replicate the hardware to a similar complexity, intelligence should arise.

I don't think we've sorted out the mind body problem, but I do think emergence is a strong component of it.

"In philosophy, systems theory, science, and art, emergence is a phenomenon whereby larger entities arise through interactions among smaller or simpler entities such that the larger entities exhibit properties the smaller/simpler entities do not exhibit."

If intelligence is an emergent phenomenon then the only thing left to do is to analyze the neurons and then study the resulting systems, therefore neuroscience is where the answer would be found. I don't know if you realize it but you're arguing against your own opinion.

If we apply what you quoted to your statement - neuronal interactions produce higher order structures like intelligence. Therefore, the study of neuron structures and communications is within the field of neuroscience, as it is currently. As such, neuronal interactions and the higher structures are subjects of another field that is one order of level higher. Similar to chemistry and biology, or physics and chemistry. In my example, you can't study cyclone-genesis on the basis of particle physics, or chemistry on the basis of physics, or biology at the level of chemistry, with exceptions of course.

If you start to muck around with the current definitions of the fields, i.e., neuroscience stretches to incorporate psychology and psychiatry, then of course neuroscience will incorporate all those things you describe. I subscribe to a more rigid definition of neuroscience where it is the field that studies neuronal structures, neurons and their communications.

As for arguing against my own opinion - emergence works the way you quoted, but the study of its effects does not work the way you argued. The fact that we have dynamical complexity and the entire field of chaos and emergence is a good example. For a man-made example, look at Wolfram's work on cellular automata and how their chaotic behavior arises from simple rules that are predictive in the short term, while the higher order patterns are not studied within the domain of cellular automata.
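To make the cellular automaton point concrete, here is a minimal Python sketch of an elementary cellular automaton. It assumes Rule 30 (one of Wolfram's well-known rules) on a small wrap-around row of cells; the function names `step`, `run` and `render`, and the grid sizes, are illustrative choices and not taken from Wolfram's own work.

```python
def step(cells, rule=30):
    """Apply one update of an elementary cellular automaton.

    Each cell's next state depends only on itself and its two
    neighbours (the row wraps around at the edges).
    """
    n = len(cells)
    out = []
    for i in range(n):
        left = cells[(i - 1) % n]
        center = cells[i]
        right = cells[(i + 1) % n]
        # The three neighbour bits form a number 0..7 that selects
        # one bit of the rule number as the new cell state.
        index = (left << 2) | (center << 1) | right
        out.append((rule >> index) & 1)
    return out


def run(width=31, steps=15, rule=30):
    """Start from a single live cell and return every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


def render(rows):
    """Draw live cells as '#' and dead cells as '.'."""
    return "\n".join("".join("#" if c else "." for c in row) for row in rows)


if __name__ == "__main__":
    print(render(run()))
```

The rule itself is an eight-entry lookup, so any single step is trivially predictable; the irregular triangle pattern that builds up over many rows is the kind of higher order behavior that has to be studied as a whole.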

"it just gets easier to study the whole instead of all the little parts" I want to point out that this is not just "easier" but "possible". It is actually impossible to study the individual parts when the problem is one of n-body interaction in any system. These kinds of problems scale exponentially in computation time with additions of more individual parts, which means that when confined within the originating field and level, complex multi-body interactions are not even possible to be studied. We can't simulate a society even if we understood every last bit of biology. A good example would be multi-body collisions or even a game of Go. This began even with Newton, who wondered about the stability of the planet orbits and was never able to answer this question from within Newtonian physics. This issue was eventually studied (and continue to be studied) from a higher level of dynamical systems and chaos. We can simulate fluid dynamics using particles, too, but that behavior and its associated fluid dynamical laws occur at a higher level, not at the particle level. We are simulating neurons now, which then produces cognitive behavior.

Back to the topic at hand, "no matter how many neurons you have or how complex their connections are the network can't move because it contains no movable parts" is just not true. Those neurons are interacting, and those complex interactions yield thoughts, which take on a "life of their own". An analogy would be that optical fibers communicate information, but the world's worth of communication patterns are better studied outside of photonics. Another one is that although the field of semiconductor physics brought us modern transistors, the originating field is useless for studying the IPC behavior of, say, Intel CPUs. That task is better left to a field of higher complexity (in terms of behavior, not workload) - engineering. It is a similar situation with traffic flow: it is made of cars, but congestion on a city-wide scale is of a higher complexity than just the dynamics of two cars. Those higher order structures, "vortices" to borrow a term from fluid dynamics, have behavioral laws that are one order of complexity higher than the originating order. Turbulence, for example, is a continuum concept and has no equivalent in particle-level physics. Law and order, for another example, is a social construct that has no equivalent at the originating, biological level. That's the idea of emergence of higher-order structures and their associated, new laws. The neurons don't have to move, but they build a network on which higher order processes, which we call thoughts, do move and take on lives of their own. That's my best guess at how massive networks of simulated neurons may one day give way to true machine intelligence, through emergence.

Those are good old algorithms from the 1970's reworked and applied on today's massively parallel compute architectures, and that's great, neural networks learn faster, detect faster and are correct more often.

The real starting point of AI is in the next step, detecting relations between detected objects - spatial, kinetic, physics in general (and emotional if humans/animals involved) and from that resolving a context, using memory of previous visual-relational contexts.

Here lies a trap: how much will we intermingle our classic procedural approach with neural networks for all these tasks?

Interesting stuff.

"there is still not a clue as to how these elements spark intelligence".

There is a missing Piece we are yet to discover. And that is the Spiritform of the human.

A bit more on this. Every animal has a Spiritform located in the central nervous system (in the human, it is in the Superior Colliculus). What differentiates HUMANS from animals is that humans have a Spiritform through which the human is aware of itself. This is the very definition of humanity, which will be established later on, once the human Spiritform is detected, inspected, researched, etc. A robot, or AI in this case, can never be aware of itself like humans are, since it lacks a Spiritform. The only exception to this will of course be an artificial construct so complex that it allows a Spiritform to reside within its structures, like the Spiritform resides in the structures of our body. But that is future music altogether.

By this definition alone, any biological entity aware of itself can be classified as a human, even if it has a different shape, size, and construction and is adapted to different planetary conditions (temperature, pressure, gravity, etc). This way, for example, all the species in Star Trek (Cardassians, Klingons, Bajorans, etc) are humans.

By contrast, animals, although they have a Spiritform, are not aware of themselves like humans are.

When an animal dies, or when a human dies, the Spiritform exits the body, and an animal Spiritform cannot enter a human body the next time, and vice versa.

I must underline that this is a totally new domain of science that we don't even know exists, because we don't yet have the technological means to detect the fine spiritual energies and the Spiritform in living beings.

This whole new science domain is the missing piece that we lack understanding of, the one that tells us what the human mind is, what the human psyche is, where thoughts come from, what enacts intelligence, etc.

AI scientists should start to familiarize themselves with these new concepts, although accurate info regarding this is extremely rare and most is bullshit; there is one and only one individual who provides accurate information regarding this new area of science. I estimate it will take at least 100 years before humanity familiarizes itself with this new domain of scientific discovery.

en.wikipedia.org/wiki/Billy_Meier

www.ufofacts.me.uk/index.php/billy-meier/b1/who-is-billy-meier

More info on the Spirit here

www.ufofacts.me.uk/index.php/creaton/investigation/immortal-spirit

One might not grasp it by reading this post, but this new domain of science, SpiritScience, has implications for cosmology, physics, planetary science, the age of the universe, temporal/quantum mechanics, and whatnot.

The problem is that, for now, the "Spirit" term is cast in a magical light by the "Church and Co. aka Religion", which are nothing but a bunch of conmen, thieves, liars, murderers, and obstructors of truth.

But Science and Truth will prevail some day. Mark my word for it.

AI is so far off from the singularity, imho, that I will be dead before it happens, and so will most of those who work on it.

To me, AI can and will be made to seem intellectual, but just as Google lets you down via rubbish voice recognition now and again, AI will always occasionally hit a wall of wtf and not know the correct way to proceed.

I am very much of the Trekkie mindset, where we will be able to converse and reason with AIs, but they don't get to be Captain and will be ignored if they disagree with the bossman, and they wouldn't then throw an AI strop and teleport the captain off the ship, as I might do if you pinned my mind into a ship.