> As much as I'd love to be proven wrong, I don't think there will be a consumer-segment Volta card with HBM2, only expensive Tesla or Quadro. NVIDIA will pull through using GDDR5X or GDDR6 for GeForce.

Same thought.

That's why I wonder. Some rumors say Volta will use HBM, others say GDDR. If Vega had used GDDR, maybe the price wouldn't be this high, even with all the mining craziness.

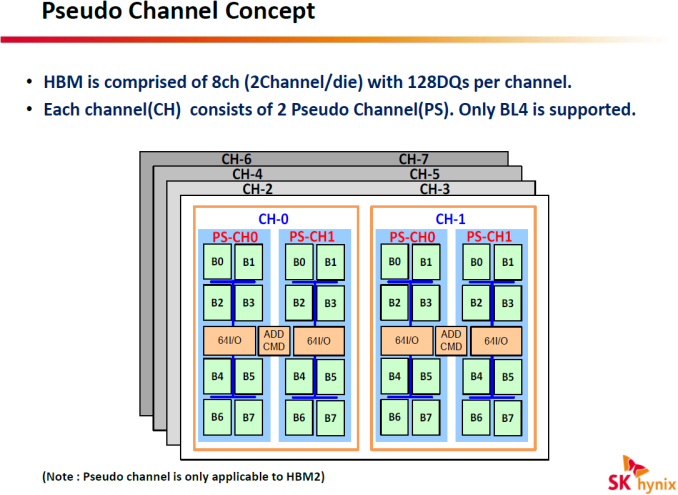

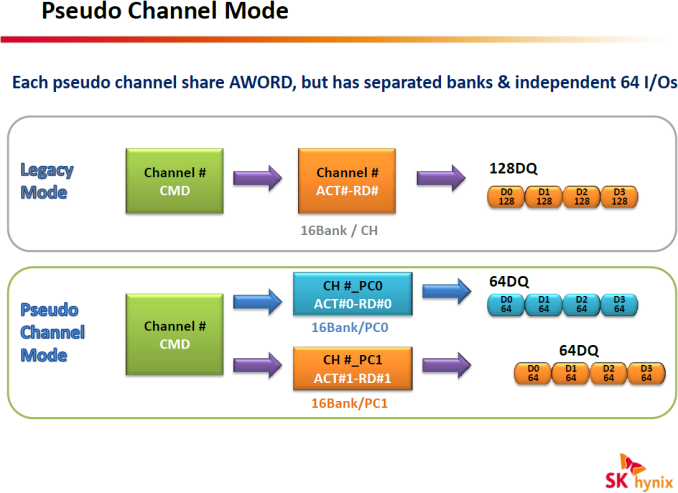

Actually, what was AMD's purpose with HBM?