| System Name | VENTURI |
|---|---|
| Processor | 2x AMD Epyc 9684X (192 cores/384 threads) |
| Motherboard | Gigabyte MZ73-LM0 dual-socket motherboard |
| Cooling | Air (Noctua), heatsinks, silent/low noise |
| Memory | 1.5 TB 2 LRDIMM ECC REG |
| Video Card(s) | 2x RTX 4090 FE |
| Storage | RAID 0 Micron 9300 Max (15.4TB each / 77TB array, overprovisioned to 64TB) & 8TB OS NVMe |
| Display(s) | Asus ProArt PA32UCG-K |
| Case | Modified Thermaltake P3 |
| Audio Device(s) | Harman Kardon speakers / Apple |
| Power Supply | 2050w 2050r |
| Mouse | Mad Catz Pro X |
| Keyboard | Keychron Q6 Pro |
| Software | MS 2022/2025 Data Center Server, Ubuntu |
| Benchmark Scores | GravityMark (high score) |
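The overprovisioning in the storage row works out to roughly 17% of raw capacity held back as spare area. A minimal sketch of that arithmetic, assuming five drives (inferred from 77TB / 15.4TB, not stated outright); the function names are hypothetical:

```python
# Sketch: spare area left when a RAID 0 array is overprovisioned.
# Assumption: 5 member drives, inferred from 77TB / 15.4TB in the spec table.

def raid0_capacity_tb(drive_count: int, drive_tb: float) -> float:
    """RAID 0 stripes without parity or mirroring, so capacity simply adds up."""
    return drive_count * drive_tb

def overprovision_pct(raw_tb: float, allocated_tb: float) -> float:
    """Percentage of raw capacity left unallocated as spare area."""
    return (raw_tb - allocated_tb) / raw_tb * 100

raw = raid0_capacity_tb(5, 15.4)
spare = overprovision_pct(raw, 64)
print(f"raw {raw:.1f} TB, spare {spare:.1f}%")
```

Leaving unallocated space like this gives the SSD controllers extra room for wear leveling and garbage collection.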

The specs:

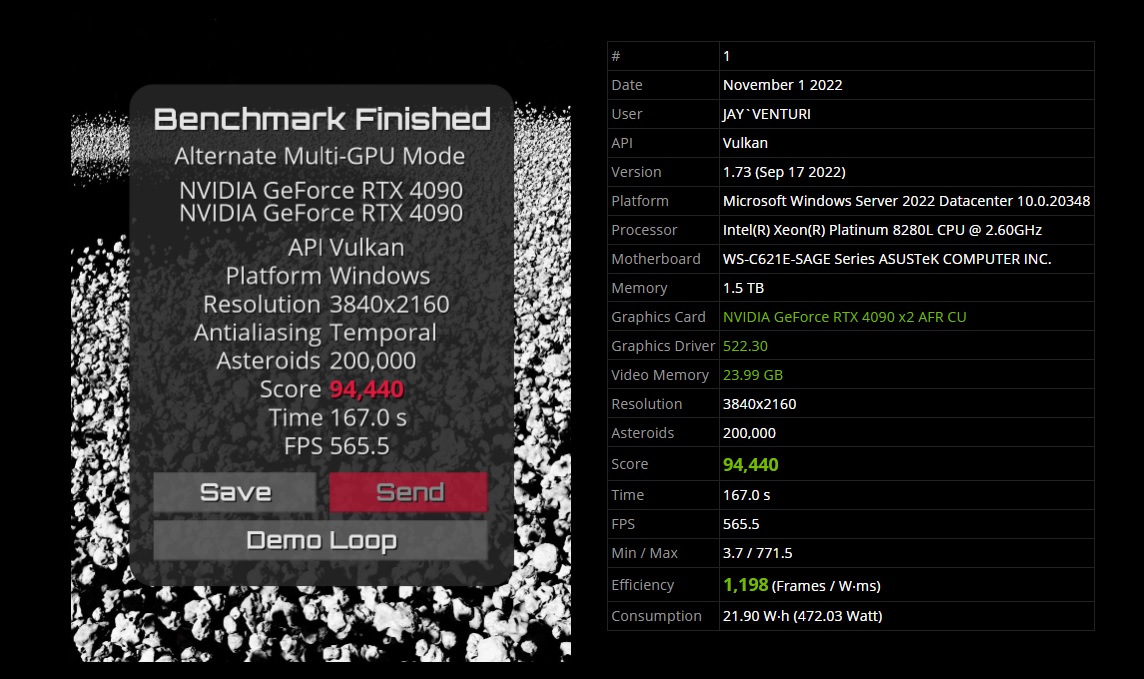

2x 4090 RTX Founders Edition

2x Xeon 8280L (56 cores/112 threads), Asus C621 Sage dual-socket motherboard

1.5TB RAM, DDR4 ECC LRDIMMs

1600W digital power supply

(Data drive) 4x Micron 9300 Max in VROC RAID 0 (12.8TB each / 51.2TB array), VROC Premium key

(OS drive) Sabrent Rocket 4 Plus (8TB); (backup) 4x Samsung 860 Pro, 4TB each (16TB array)

Asus PA32UCG-K monitor, TT SFF case, MS Data Center 2022 & Ubuntu

The build:

CPUs run at about 41C under load, and RAM hovers around 55C under load.

NVMe drives run at 28C idle and 49C at the end of a full drive copy.

I'm using 1.5TB of RAM (LRDIMMs).

Upgraded to 2x Intel Xeon 8280L processors; the L models support a higher maximum memory capacity.

Dual 4090 RTX FE

The motherboard is revision 2.1x

BIOS 9904 with Resizable BAR

C drive is a Sabrent Rocket 4 Plus 8TB (TLC)

D drive is 4x Micron 9300 Max, 12.8TB each (51.2TB volume), on the U.2 connectors using the VROC Premium key
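RAID 0 is why four 12.8TB drives show up as one 51.2TB D: volume and why it writes so fast: data is cut into fixed-size strips that rotate round-robin across the member drives. A minimal Python sketch of that mapping, assuming a hypothetical 128 KiB strip size (the post does not say what strip size the VROC array uses) and ignoring any on-disk metadata VROC keeps:

```python
# Sketch of RAID 0 address mapping: strips rotate round-robin across drives.
# STRIP_SIZE is a hypothetical value for illustration only.

DRIVES = 4                 # 4x Micron 9300 Max in the D: array
DRIVE_TB = 12.8            # capacity of each member drive
STRIP_SIZE = 128 * 1024    # hypothetical strip size in bytes

def locate(logical_offset: int) -> tuple[int, int]:
    """Map a logical byte offset on the array to (drive index, byte offset on that drive)."""
    strip_index, within_strip = divmod(logical_offset, STRIP_SIZE)
    drive = strip_index % DRIVES
    # Each full pass of strips across all drives advances every drive by one strip.
    drive_offset = (strip_index // DRIVES) * STRIP_SIZE + within_strip
    return drive, drive_offset

# No parity or mirroring, so usable capacity is the plain sum of the members.
print(f"array capacity: {DRIVES * DRIVE_TB:.1f} TB")  # array capacity: 51.2 TB

# Consecutive strips land on different drives, so large transfers hit all four at once.
for strip in range(5):
    print(strip, locate(strip * STRIP_SIZE))
```

The flip side is that RAID 0 keeps no redundant copy, so losing any one member drive loses the whole volume, which is why separate backups matter with an array like this.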

I have a built-in backup (hidden in the case) of 4x Samsung 860 Pro 4TB, as well as a network backup to a NAS in the house.

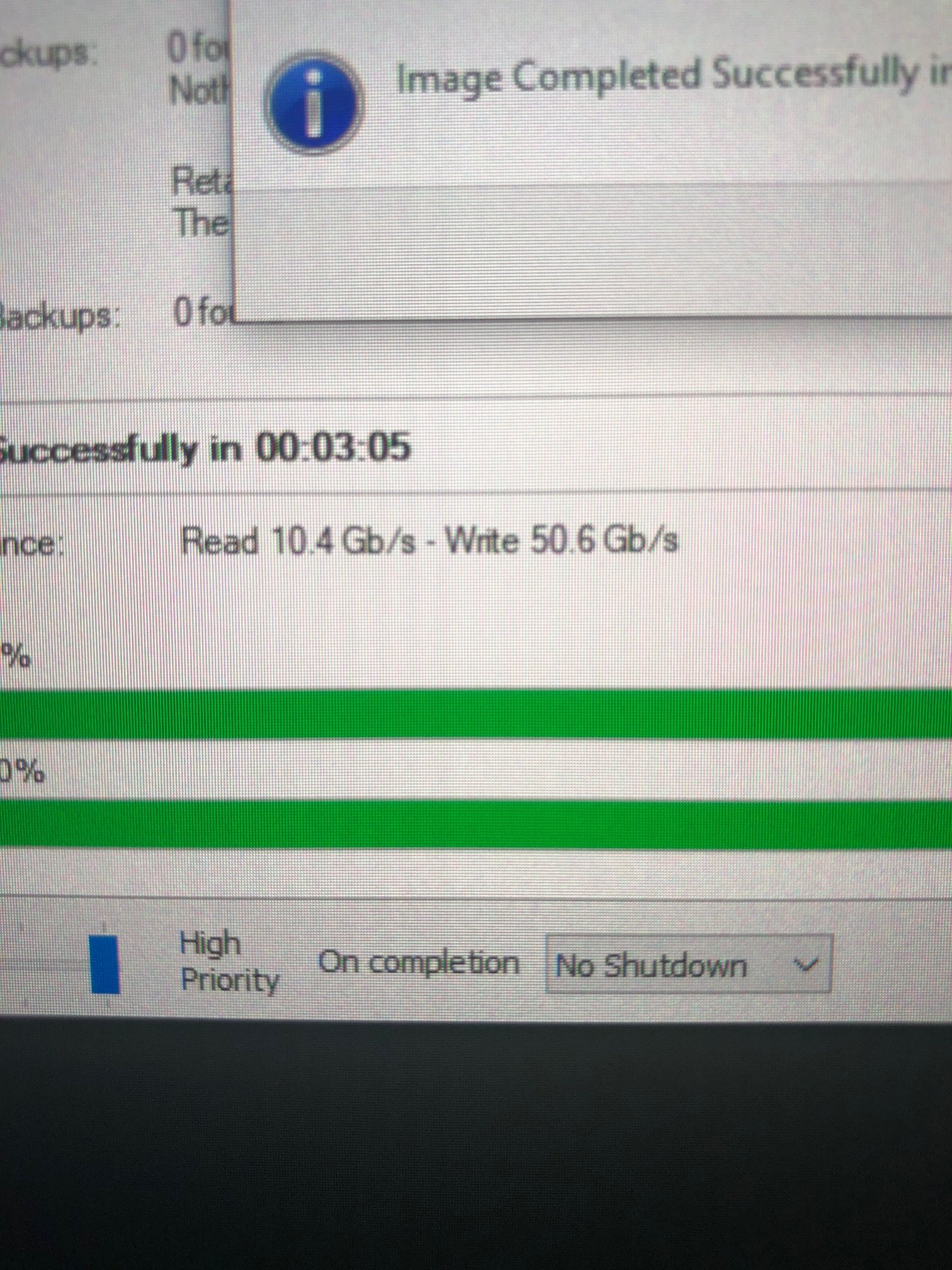

The VROC Premium key gives direct RAID control and paths; please see the pics for the WRITE speed of the internal array.

Running MS Data Center 2022 and Ubuntu as the operating systems.

Making it all work took a complete redesign: it looks similar to what I had before, but a lot of the base design changed.

The dual 4090 FE RTXs sit several centimeters higher above the board than cards normally rest. This required changing all the mounting points and supports, plus a custom bracket to hold the cards stable.

Raising the cards made room to connect the four Micron 9300 NVMe drives in RAID to the board's four U.2 ports.

The C drive now also has a large pure-copper heatsink; under load it only reaches 40C, and it idles at 26C.

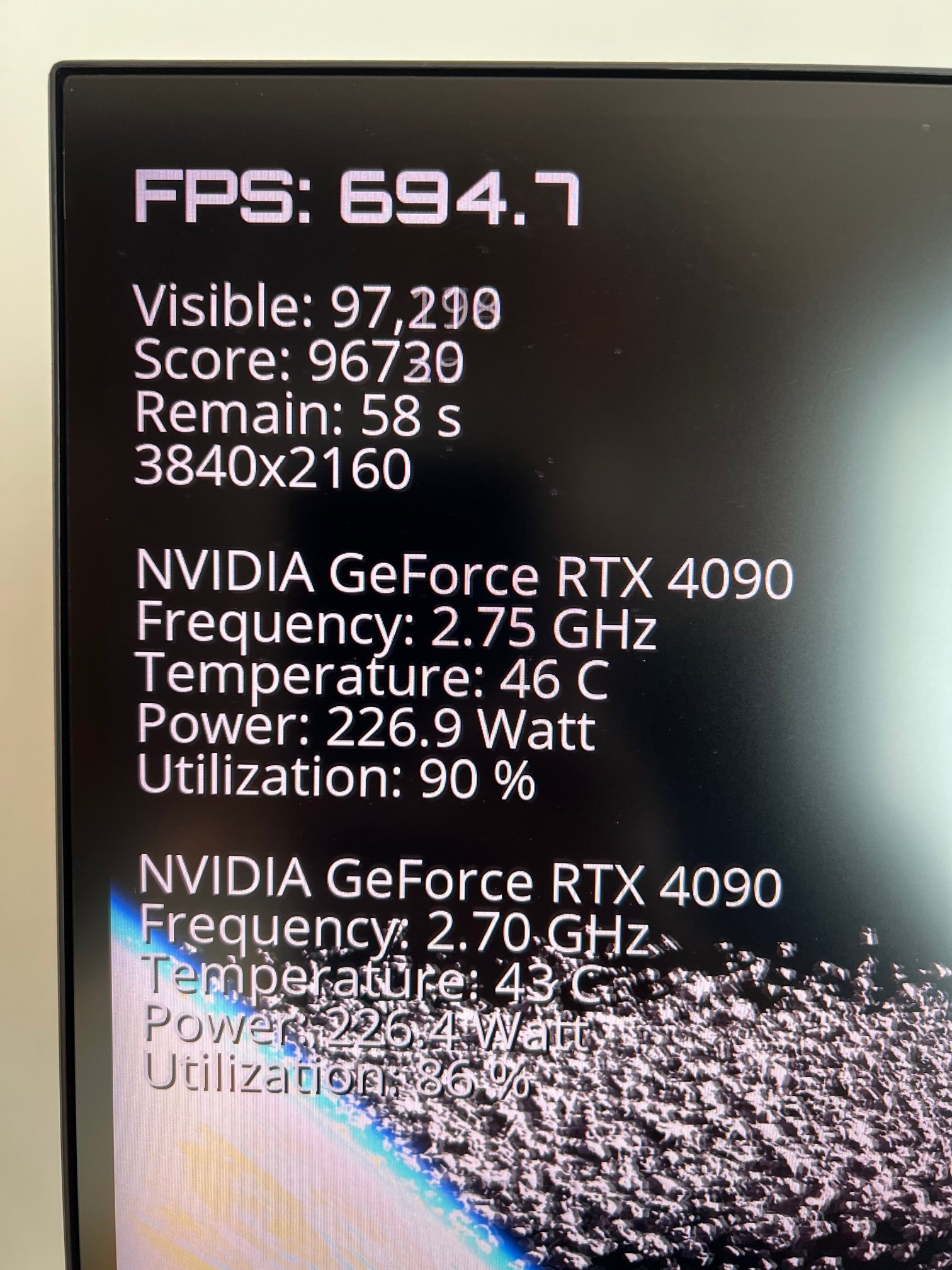

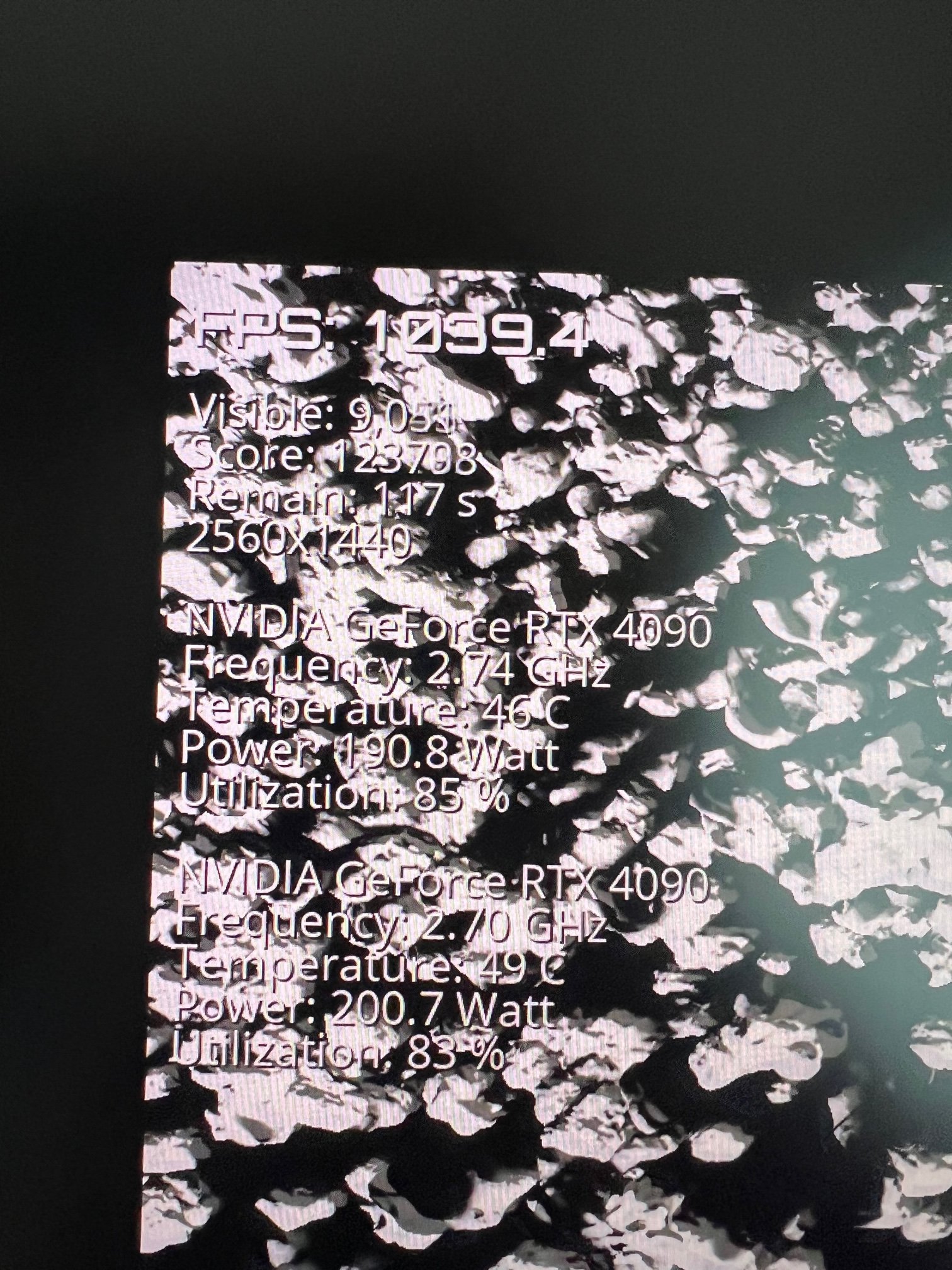

The 4090 FE RTXs also idle at about 29C. Under load they haven't gone past 51C (so far); most gameplay hovers around 41-47C, and DL/ML applications may push the cards to around 51C.

The temperature drop comes from the additional 3.3 cm air channel between the video cards and the motherboard. Temperatures also dropped for the CPUs, RAM, and chipset.

Machine runs very quietly.

Most fans (including the GPUs') turn off at stable temps; the motherboard's auto settings control the other fans. CPUs idle at about 23C (73F) in a 21C (69-70F) house. Under load they may reach as high as 44C, but not often.
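The Fahrenheit figures in parentheses follow from F = C × 9/5 + 32. A quick Python check using the readings from the paragraph above; the helper name is illustrative:

```python
def c_to_f(celsius: float) -> float:
    """Convert Celsius to Fahrenheit: F = C * 9/5 + 32."""
    return celsius * 9 / 5 + 32

# Readings quoted in the post: CPU idle, room temperature, CPU peak under load.
for label, c in [("CPU idle", 23), ("room", 21), ("CPU load peak", 44)]:
    print(f"{label}: {c} C = {c_to_f(c):.1f} F")
```

This gives about 73.4F for the 23C idle and about 69.8F for the 21C room, matching the rounded values in the post.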

To accommodate the four 12.8TB Micron 9300 NVMe drives, I installed two internal cooling fans to give quiet airflow over them (see pics). This keeps the 51.2TB array at around 39-41C under load and 33C at idle. The fans are quiet and invisible from the outside, but I will also include a picture of that design.

There is a picture of a Macrium backup report showing 50.6 Gb/s when READING the C drive (Sabrent Rocket 4 Plus) to WRITE the backup to the NVMe RAID (4x Micron 9300).
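Drive spec sheets usually quote throughput in MB/s or GB/s, so it can help to convert the Macrium figure to bytes. A minimal sketch, assuming Macrium's "Gb/s" means gigabits per second (8 bits per byte); the function name is hypothetical:

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbit_per_s / 8

# 50.6 Gb/s from the Macrium backup report
print(f"{gbit_to_gbyte(50.6):.3f} GB/s")
```

Under that assumption the backup ran at about 6.3 GB/s.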

The PC runs very quietly and cool: on average, components under load sit at roughly body temperature (it feels organic when described that way).

I hope this answers the core questions. Here are benchmark results showing some of its capability; it's still first on the leaderboard:

https://gravitymark.tellusim.com/leaderboard/

and

https://gravitymark.tellusim.com/leaderboard/?size=4k
well done bud.

Mine, however... I think kangaroos and buffalo would feel quite at home if they were in my room.