What kind of noobs write articles here at TPU? What an epic failure of an article btarunr wrote... it just makes him look ridiculous. ROFLMAO ¬¬

AMD reaffirms HDR abilities, HDMI 2.0 is the limitation

Read more:

http://www.tweaktown.com/news/55012/amd-reaffirms-hdr-abilities-hdmi-2-limitation/index.html

Just as I was lying down to hopefully fall asleep after a massive 18-hour work day, I read a story over at TechPowerUp, sourced from German tech site Heise.de, claiming that AMD Radeon graphics cards were limited in their HDR abilities... well, clickbait can be bad sometimes, and we now know the truth.

The original story can be read here. It claimed that Radeon graphics cards were reducing the color depth to 8 bits per channel (16.7 million colors, or "32-bit" color) when the display was connected over HDMI 2.0 rather than DisplayPort 1.2, something that piqued my interest.

10 bits per channel (1.07 billion colors) is a far more desirable target for HDR TVs, but the original article made it seem like this was a limitation of AMD, rather than of HDMI 2.0 itself. Citing Heise.de, TPU reported that AMD GPUs "reduce output sampling from the desired Full YCbCr 4:4:4 color scanning to 4:2:2 or 4:2:0 (color sub-sampling / chroma sub-sampling) when the display is connected over HDMI 2.0. The publication also suspects that the limitation is prevalent on all AMD 'Polaris' GPUs, including the ones that drive game consoles such as the PS4 Pro."
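For readers who want the arithmetic behind those figures, here is a minimal sketch. It assumes a plain three-component (Y, Cb, Cr) model: the color count is 2 raised to three times the bits per channel, and chroma subsampling keeps one luma sample per pixel while thinning out the two chroma samples. The function names are made up for illustration, and the averages count raw sample bits only, not how any particular cable or link packs them.

```python
# Fraction of full-resolution chroma kept by each subsampling scheme
# (applies to each of the two chroma components, Cb and Cr).
CHROMA_FRACTION = {"4:4:4": 1.0, "4:2:2": 0.5, "4:2:0": 0.25}


def color_count(bits_per_channel):
    """Distinct colors representable with three channels of this depth."""
    return 2 ** (3 * bits_per_channel)


def average_bits_per_pixel(subsampling, bits_per_channel):
    """Average raw sample bits per pixel: one luma sample plus two
    chroma samples scaled down by the subsampling scheme."""
    return bits_per_channel * (1 + 2 * CHROMA_FRACTION[subsampling])


if __name__ == "__main__":
    print(f"8 bpc:  {color_count(8):,} colors")
    print(f"10 bpc: {color_count(10):,} colors")
    for scheme in CHROMA_FRACTION:
        print(scheme, "at 10 bpc:", average_bits_per_pixel(scheme, 10), "bits/pixel")
```

This is why 4:2:2 and 4:2:0 are attractive on a bandwidth-starved link: at 10 bits per channel they need roughly two thirds and one half of the data of full 4:4:4.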

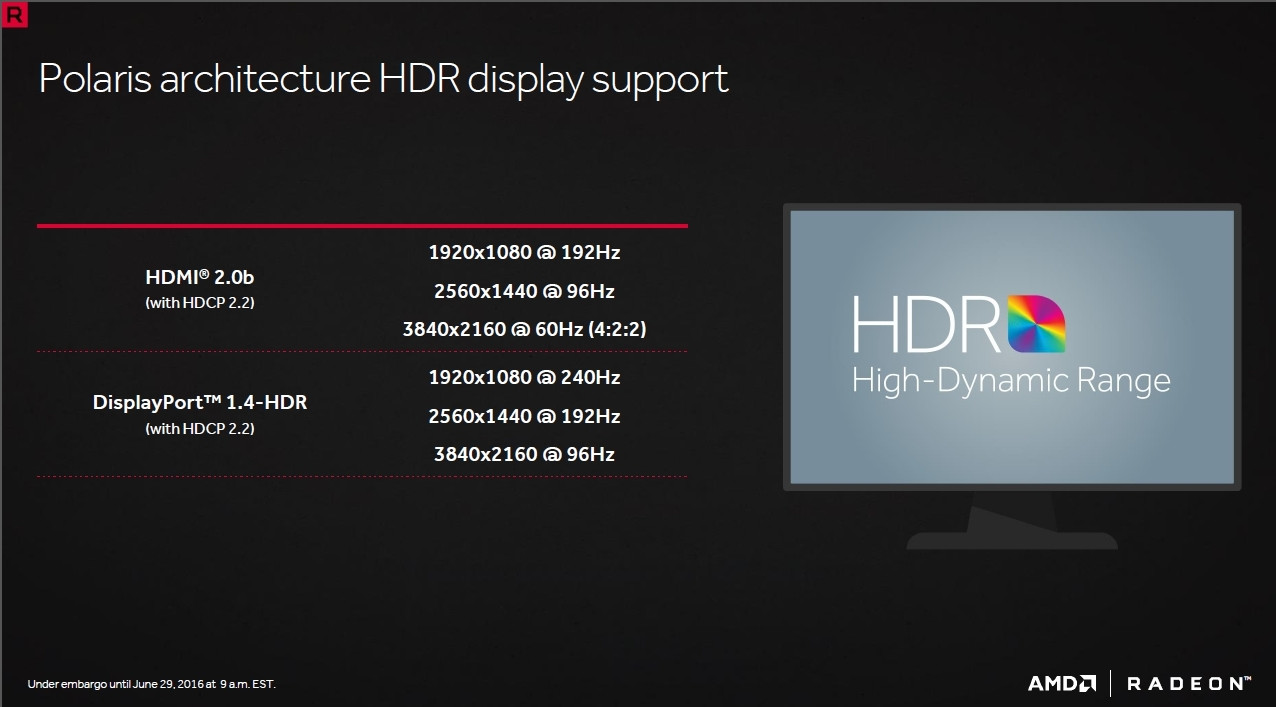

I reached out to AMD for clarification and spoke with Antal Tungler, Senior Manager of Global Technology Marketing, who said that this was a limitation of HDMI bandwidth. Tungler said "we have no issues whatsoever doing 4:4:4 10b/c HDR 4K60Hz over DisplayPort, as it's offering more bandwidth".

Tungler added: "4:4:4 10b/c @4K60Hz is not possible through HDMI, but possible and we do support over DisplayPort. Now, over HDMI we do 4:2:2 12b 4K60Hz which Dolby Vision TVs accept, and we do 4:4:4 8b 4K60Hz, which TVs also can accept as an input. So we support all modes TVs accept. In fact you can switch between these modes in our Settings".
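Tungler's point can be checked with some back-of-the-envelope math. The sketch below is not AMD's driver logic; it rests on a few stated assumptions: HDMI 2.0 at 18 Gbit/s raw and DisplayPort 1.2 (HBR2) at 21.6 Gbit/s raw, both using 8b/10b encoding so only 80% carries pixel data; HDMI using the standard CTA-861 4K60 timing (4400 × 2250 total pixels, a 594 MHz pixel clock); DisplayPort assumed to use a reduced-blanking timing (4000 × 2222 total); and 4:2:2 12-bit counted as 24 bits per pixel, the container HDMI uses for it. Audio and other overhead are ignored, and the dictionary and function names are hypothetical.

```python
# Usable payload after 8b/10b encoding, in Gbit/s (raw rate * 8/10).
LINK_PAYLOAD_GBPS = {
    "HDMI 2.0": 18.0 * 8 / 10,  # 3 TMDS channels * 6 Gbit/s raw
    "DP 1.2": 21.6 * 8 / 10,    # 4 HBR2 lanes * 5.4 Gbit/s raw
}

# Total pixels per frame including blanking (assumed timings).
FRAME_TOTALS = {
    "HDMI 2.0": (4400, 2250),  # CTA-861 3840x2160 timing, 594 MHz at 60 Hz
    "DP 1.2": (4000, 2222),    # assumed reduced-blanking timing
}

# Bits per pixel on the wire for the modes Tungler mentions.
MODES = {
    "4:4:4 8b": 24,
    "4:4:4 10b": 30,
    "4:2:2 12b": 24,  # HDMI packs 4:2:2 (up to 12 bits) into 24 bits
}


def required_gbps(link, bits_per_pixel, refresh_hz=60):
    """Data rate the link must carry for 3840x2160 at refresh_hz."""
    h_total, v_total = FRAME_TOTALS[link]
    return h_total * v_total * refresh_hz * bits_per_pixel / 1e9


def fits(link, mode):
    """True if the mode's data rate fits in the link's usable payload."""
    return required_gbps(link, MODES[mode]) <= LINK_PAYLOAD_GBPS[link]


if __name__ == "__main__":
    for link, payload in LINK_PAYLOAD_GBPS.items():
        for mode, bpp in MODES.items():
            need = required_gbps(link, bpp)
            verdict = "fits" if fits(link, mode) else "too much"
            print(f"{link:8} {mode:10} needs {need:5.2f} of {payload:5.2f} Gbit/s: {verdict}")
```

Under these assumptions, 4:4:4 10-bit needs about 17.8 Gbit/s over HDMI against roughly 14.4 Gbit/s of usable bandwidth, while 4:4:4 8-bit and 4:2:2 12-bit both come in at about 14.3 Gbit/s, which lines up with the modes AMD says it offers over HDMI.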

So, that's settled. This isn't a limitation of AMD Radeon graphics cards or the APU inside the PS4 Pro, but a limitation of bandwidth in the HDMI 2.0 standard. DP 1.2 has no trouble driving HDR at the proper 4:4:4 10b/c at 4K60, and AMD supports it all. If you want the best experience, be careful when buying your TV or display: HDMI 2.0 is a limitation right now.

Clickbait articles aren't good, and they tarnish the reputation of people in their path. TPU ran the story without fact-checking it, and while I'm not personally calling out Heise.de or TPU, it would be nice not to see sensationalist headlines about something that has already been explained in detail (the limitations of HDMI 2.0 and the superiority of DisplayPort).

DisplayPort offers more bandwidth, and will be driving 4K120 in 2017, as well as 1080p and 1440p at 240Hz. AMD is on the bleeding edge of that, so don't fall for the negative hype around this story. See our original story on the Radeon Technologies Group event in Sonoma, CA last year, which discusses DP 1.3 supporting 5K60, 4K120, and 1080p/1440p at 240Hz.
