- Joined: Feb 22, 2009
- Messages: 786 (0.13/day)

| Processor | Ryzen 7 5700X3D |
|---|---|
| Motherboard | Asrock B550 PG Velocita |
| Cooling | Thermalright Silver Arrow 130 |
| Memory | G.Skill 4000 MHz DDR4 32 GB |
| Video Card(s) | XFX Radeon RX 7800XT 16 GB |
| Storage | Plextor PX-512M9PEGN 512 GB |
| Display(s) | 1920x1200; 100 Hz |
| Case | Fractal Design North XL |
| Audio Device(s) | SSL2 |
| Software | Windows 10 Pro 22H2 |
| Benchmark Scores | I've got a shitload of them in 15 years of TPU membership |

Greetings all!

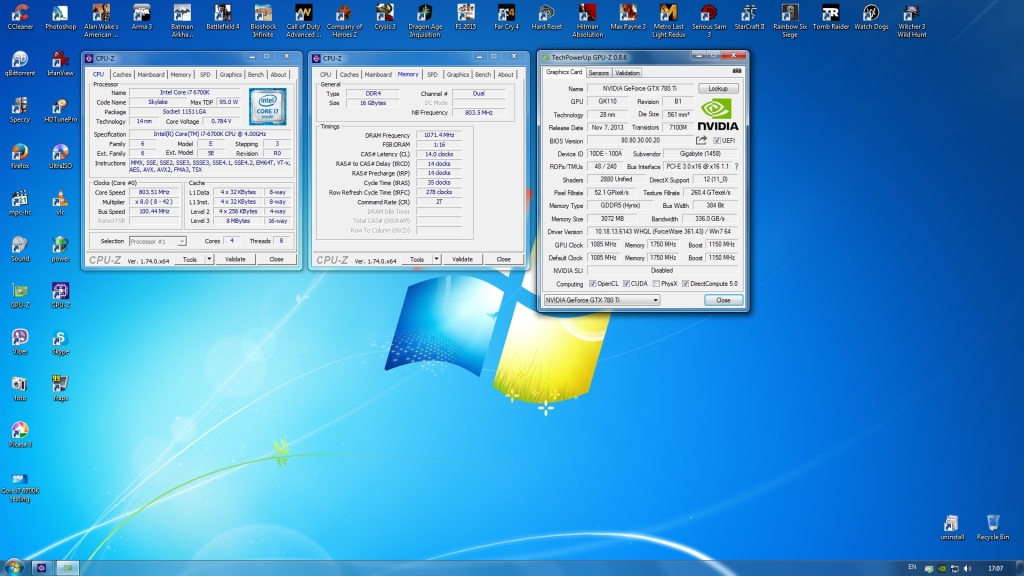

According to my previous benchmark, hyper-threading (HT) on the Intel Ivy Bridge Core i7 offers no performance benefit in gaming. On the contrary, turning HT on slightly hurt performance on the Core i7 3770 compared to running on its 4 physical cores alone. This time I've put the Intel Skylake Core i7 6700K up against the wall for "execution". This test cannot be compared to any of the earlier tests on my profile, due to newer NVIDIA drivers, updated game versions, a different PC, some different game settings and some different testing methods.

I've tested 21 games at 1920x1080 with all graphical settings at maximum and turned on, with one exception: no anti-aliasing was used. Some NVIDIA-exclusive features in games like Far Cry 4 and Witcher 3 were turned off. Physics effects were turned on, except for NVIDIA-exclusive PhysX effects, which were turned off. In the results below, "i7 mode" means HT on (4 cores, 8 threads) and "i5 mode" means HT off (4 physical cores, 4 threads).

Some games have their own built-in benchmarks; for the rest I used custom 15-second Fraps benchmark runs.
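Fraps can optionally log per-frame times during a benchmark run. As a minimal sketch of how minimum, average and maximum FPS can be derived from such a log, the Python below assumes a list of cumulative frame timestamps in milliseconds (an assumption about the log layout) and takes min/max over complete one-second buckets. Fraps's own min/max calculation may differ, and `fps_summary` is a hypothetical helper, not part of Fraps.

```python
from collections import Counter


def fps_summary(timestamps_ms):
    """Return (min_fps, avg_fps, max_fps) for one benchmark run.

    timestamps_ms: cumulative time of each frame in milliseconds
    (assumed log layout; adjust if your log stores per-frame deltas).
    """
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two frames")
    start = timestamps_ms[0]
    duration_s = (timestamps_ms[-1] - start) / 1000.0
    if duration_s < 1.0:
        raise ValueError("run shorter than one second")
    # Average FPS: frames after the first one, divided by elapsed seconds.
    avg_fps = (len(timestamps_ms) - 1) / duration_s
    # Count how many frames fall into each one-second bucket.
    buckets = Counter(int((t - start) // 1000) for t in timestamps_ms)
    # Only complete seconds are used; the trailing partial second is dropped.
    per_second = [buckets[s] for s in range(int(duration_s))]
    return min(per_second), avg_fps, max(per_second)


# Example: 15 seconds at a steady 20 ms per frame (synthetic data).
steady_run = [i * 20 for i in range(751)]
print(fps_summary(steady_run))  # (50, 50.0, 50)
```

Because the minimum comes from the single worst second while the average is spread over the whole 15-second run, a short stutter shows up far more in the minimum frame rate than in the average.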

TEST SETUP

Intel Core i7 6700K (4.0 to 4.2 GHz)

Asus Maximus 8 Ranger

Kingston HyperX Fury 2x8 GB DDR4 2133 MHz CL14

Patriot Pyro 120 GB SATA III Windows drive

WD Red 2 TB SATA III game drive

Gigabyte GeForce GTX 780 Ti GHz Edition 3 GB

Windows 7 Pro 64-bit

NVIDIA ForceWare 361.43

For those who prefer a video presentation:

Let's begin.

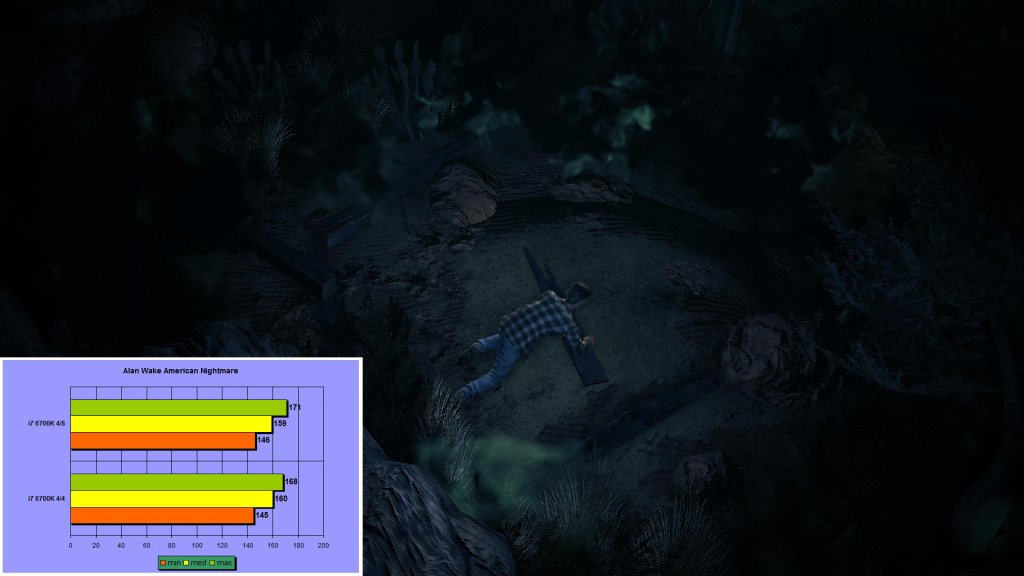

Alan Wake American Nightmare

There is no difference between i7 mode and i5 mode.

Arma 3

There is no difference between i7 mode and i5 mode in the most demanding FPS game I've ever tested.

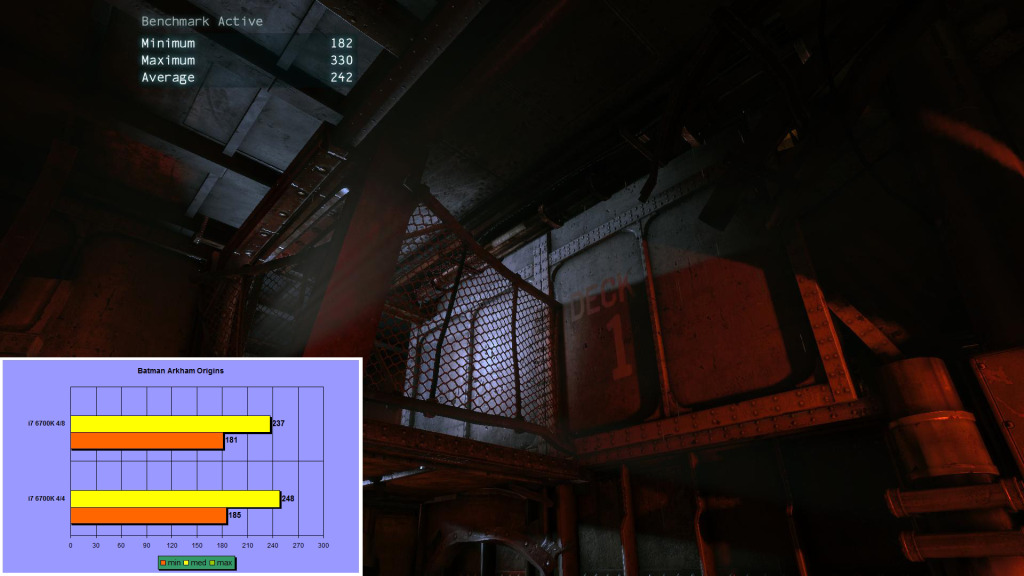

Batman Arkham Origins

I've removed the maximum FPS bar, since it was above 300 FPS and therefore irrelevant.

Battlefield 4

There is almost no difference between i7 mode and i5 mode.

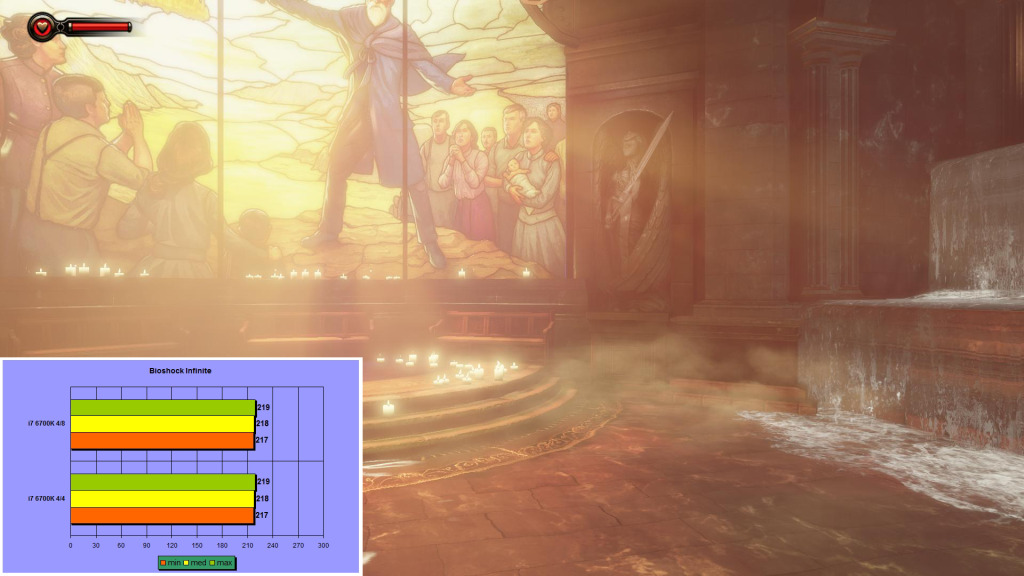

Bioshock Infinite

There is no difference between i7 mode and i5 mode.

Call of Duty Advanced Warfare

HT slightly decreases performance.

Company of Heroes 2

HT clearly hurts performance in this very demanding real-time strategy game.

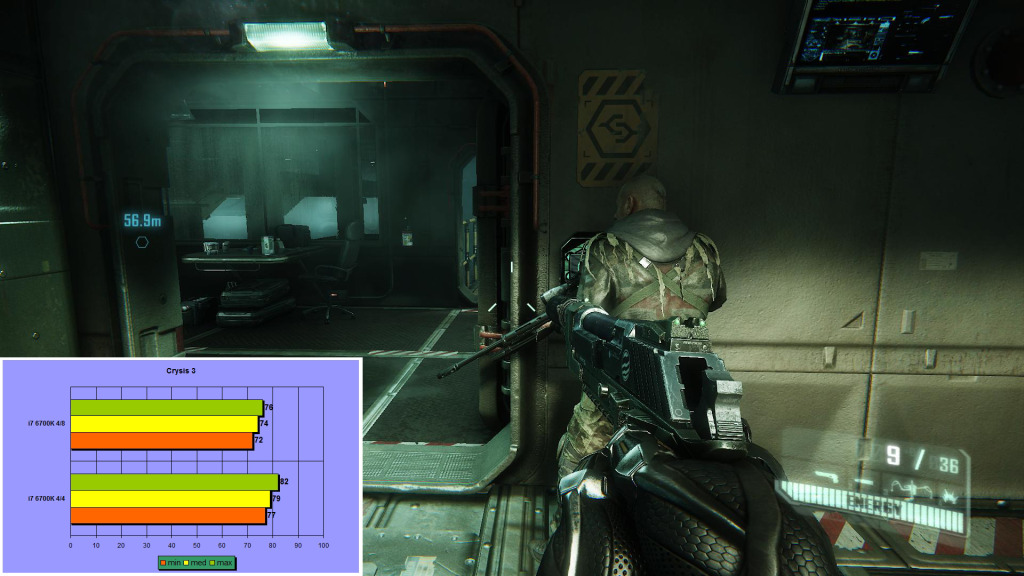

Crysis 3

There is a notable performance drop with HT on.

Dragon Age Inquisition

HT hurts the minimum frame rate, while the average and maximum remain the same.

F1 2015

HT slightly decreases performance.

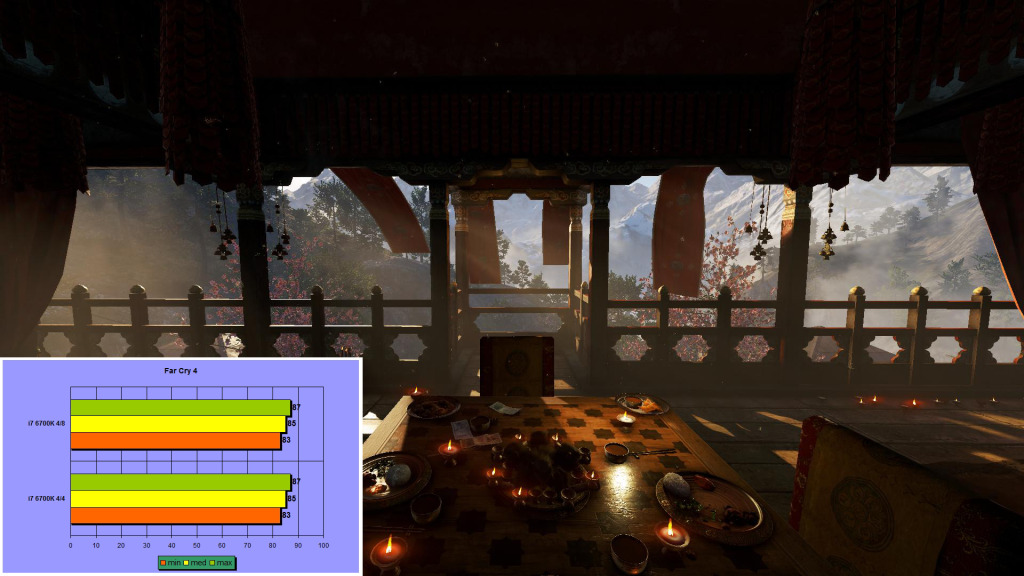

Far Cry 4

There is no difference between i7 mode and i5 mode.

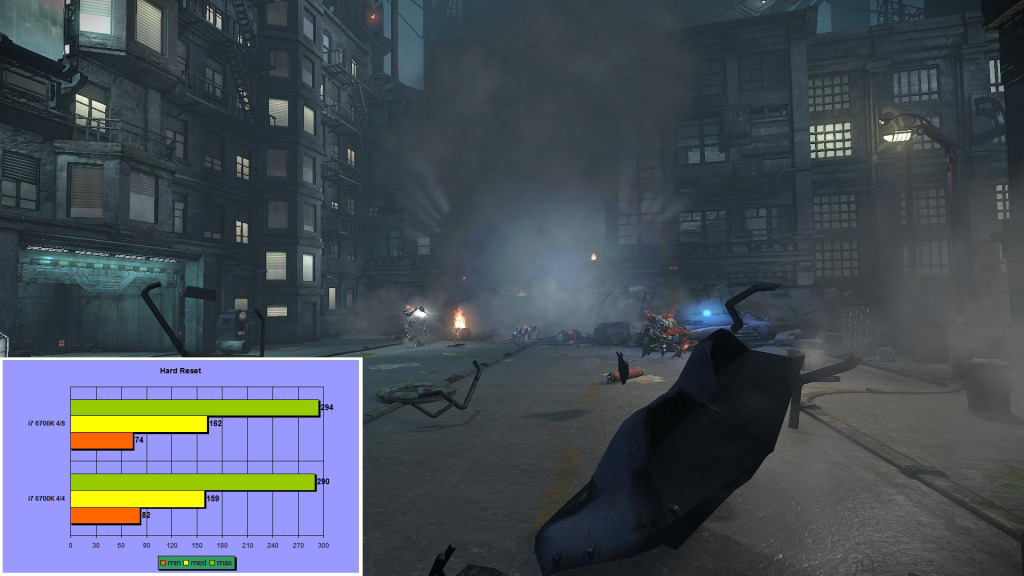

Hard Reset

Once again, HT hurts the minimum frame rate, while the average and maximum remain comparable.

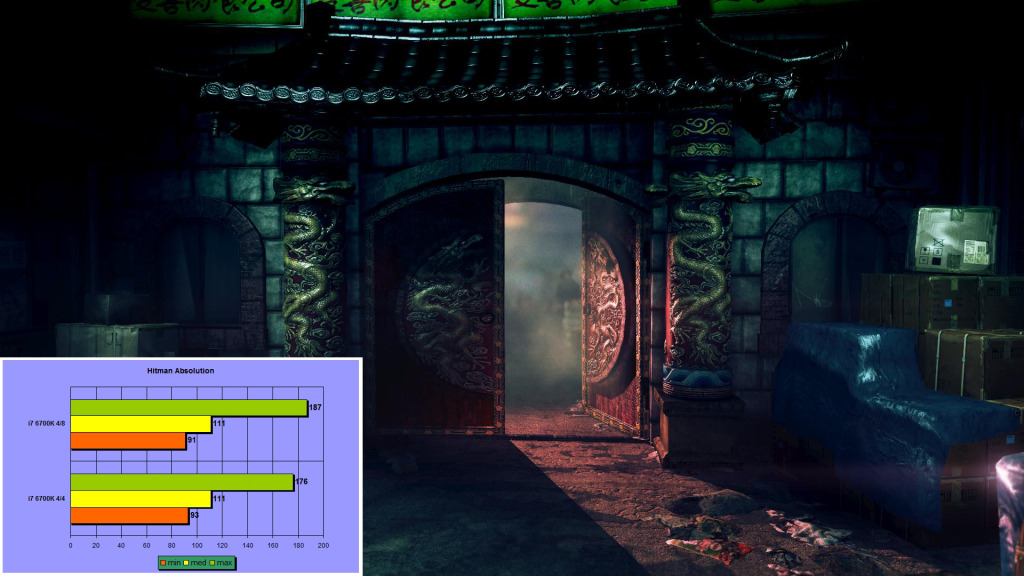

Hitman Absolution

In this game HT improves only the maximum frame rate; the same pattern was observed with the Core i7 3770.

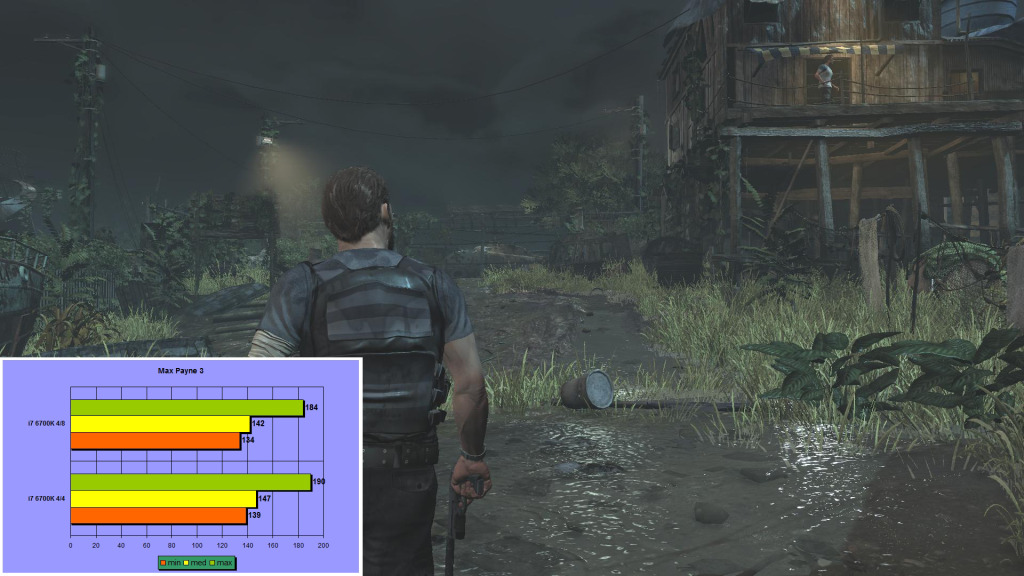

Max Payne 3

HT slightly decreases performance.

Metro Last Light Redux

Once again, HT hurts the minimum frame rate the most in this demanding game; the same pattern was observed with the Core i7 3770.

Rainbow Six Siege

There is a very small decrease in performance with HT on.

Serious Sam 3

HT decreases performance slightly.

Starcraft 2 Legacy of the Void

HT decreases performance slightly but consistently.

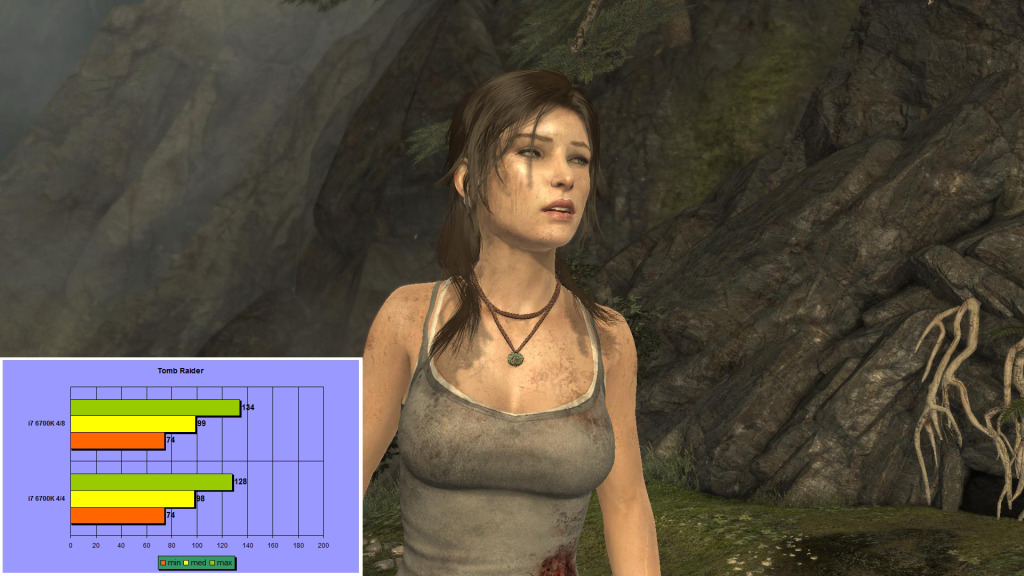

Tomb Raider

As in Hitman Absolution, HT slightly increases the maximum frame rate.

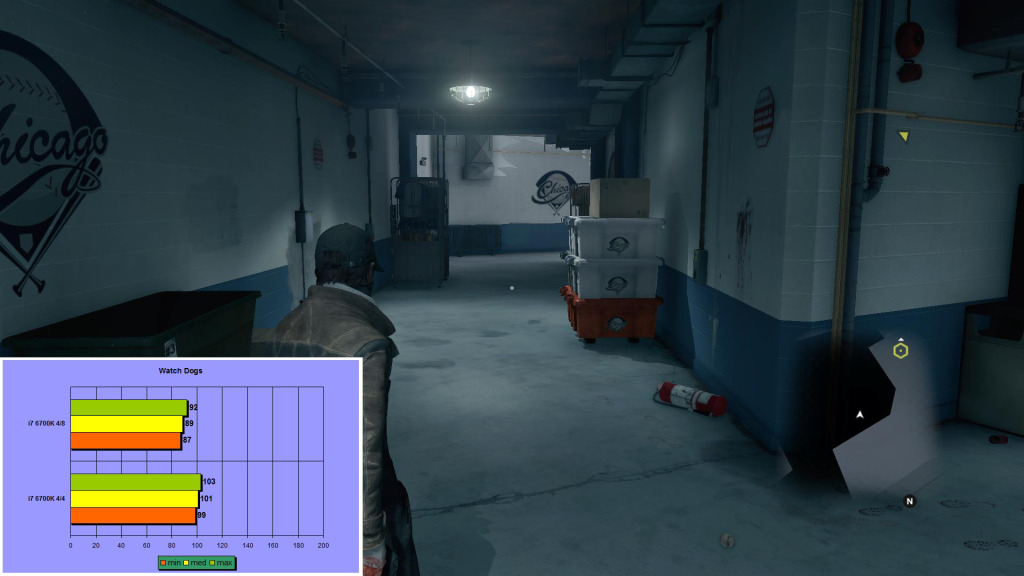

Watch Dogs

The performance drop with HT in this game is just too big to justify a Core i7 over a Core i5.

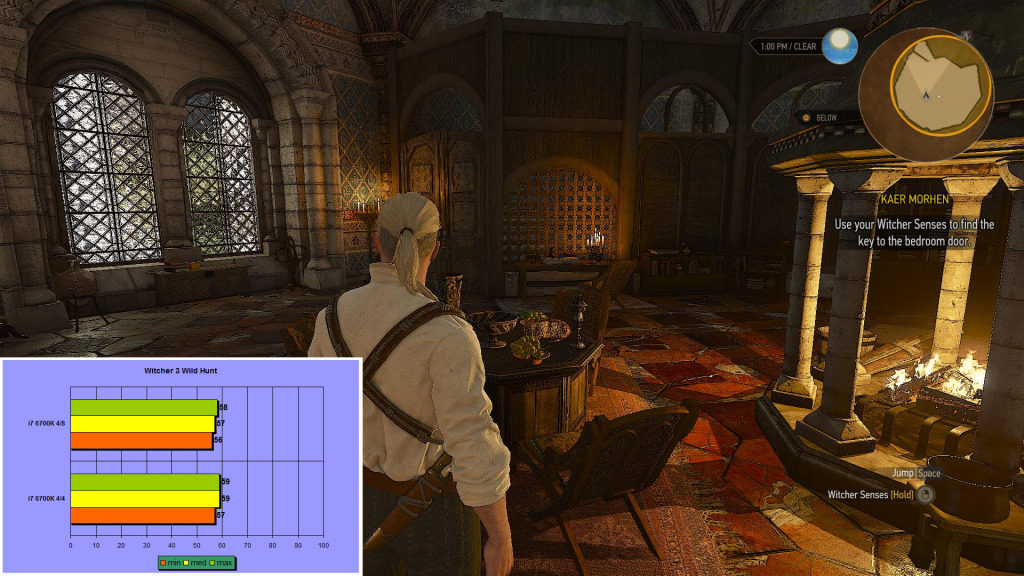

Witcher 3 Wild Hunt

Let's call it a draw.

-------------------------------------------------------------------

I ran these benchmarks 5 times in a row, and the results are as real as you can get.
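With several runs per configuration, one quick way to check whether a gap between i7 mode (HT on) and i5 mode (HT off) is bigger than run-to-run noise is to compare the difference in means against the spread of the runs. The sketch below uses Python's standard `statistics` module; `compare_ht` is a hypothetical helper, and its threshold (the gap must exceed the sum of the two sample standard deviations) is an arbitrary rule of thumb assumed for illustration, not the method used in this test.

```python
import statistics


def compare_ht(ht_on_runs, ht_off_runs):
    """Compare repeated FPS results with HT on ("i7 mode") and off ("i5 mode").

    Returns (percent_change, exceeds_noise). A negative percent_change means
    HT on was slower. exceeds_noise is True when the gap between the means is
    larger than the sum of the two sample standard deviations, an arbitrary
    rule of thumb assumed here, not a formal significance test.
    """
    on_mean = statistics.mean(ht_on_runs)
    off_mean = statistics.mean(ht_off_runs)
    noise = statistics.stdev(ht_on_runs) + statistics.stdev(ht_off_runs)
    percent_change = (on_mean - off_mean) / off_mean * 100.0
    exceeds_noise = abs(on_mean - off_mean) > noise
    return percent_change, exceeds_noise


# Hypothetical numbers for illustration only, not results from this test.
print(compare_ht([90, 91, 89, 90, 90], [100, 99, 101, 100, 100]))
```

The percent change is relative to HT off, so a value of -10 means HT on was 10 % slower. With only five runs per mode this is a rough screen rather than proof.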

CONCLUSIONS

Ever since I got my first Nehalem Core i7 920, I've noticed no performance improvement in games with hyper-threading turned on. I "cementified" these observations by testing my Core i7 3770, and now I've done the same with a Core i7 6700K at my friend's place. This pattern has held for 6 years now... HT is not worthless in games, however: it delivers awesome performance on Core i3 processors, just not on Core i7. HT might also significantly improve online game performance, but that is not my domain.

1. Intel Core i7 HT offers no improvement in single-player games.

2. Intel Core i7 HT slightly hurts gaming performance in most of the tested single-player games.

I wish HT would improve gaming performance, but it actually hurts it!!! It's a big disappointment, and my friend made a mistake by replacing his Core i5 3570K with a Core i7 6700K, because all he does is game.
