GeForce NOW Gains NVIDIA DLSS 2.0 Support In Latest Update

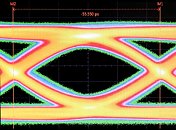

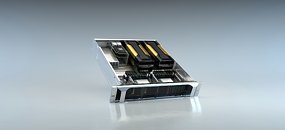

NVIDIA's game streaming service GeForce NOW has gained support for NVIDIA Deep Learning Super Sampling (DLSS) 2.0 in its latest update. DLSS 2.0 uses the Tensor Cores in RTX-series graphics cards to render games at a lower resolution and then reconstructs sharp, higher-resolution images with a custom AI model. Bringing DLSS 2.0 to GeForce NOW should let the service improve graphics quality on its existing server hardware while delivering smoother, stutter-free gameplay. NVIDIA announced that Control is the first game on the platform to support DLSS 2.0, and that additional games, such as MechWarrior 5: Mercenaries and Deliver Us The Moon, will support the feature in the future.