AMD Announces Ambitious Goal to Increase Energy Efficiency of Processors Running AI Training and High Performance Computing Applications 30x by 2025

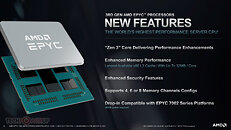

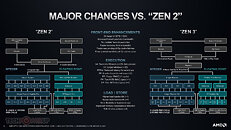

AMD today announced a goal to deliver a 30x increase in energy efficiency for AMD EPYC CPUs and AMD Instinct accelerators in Artificial Intelligence (AI) training and High Performance Computing (HPC) applications running on accelerated compute nodes by 2025.¹ Accomplishing this ambitious goal will require AMD to increase the energy efficiency of a compute node at a rate that is more than 2.5x faster than the aggregate industry-wide improvement made during the last five years.

Accelerated compute nodes are the most powerful and advanced computing systems in the world, used for scientific research and large-scale supercomputer simulations. They provide the computing capability scientists use to achieve breakthroughs across many fields, including materials science, climate prediction, genomics, drug discovery and alternative energy. Accelerated nodes are also integral to training the AI neural networks currently used for speech recognition, language translation and expert recommendation systems, with further promising uses expected over the coming decade. The 30x goal would save billions of kilowatt-hours of electricity in 2025, reducing the energy these systems require to complete a single calculation by 97% over five years.
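As a rough check on these figures: a 30x gain in energy efficiency means each calculation needs 1/30 of the baseline energy, a reduction of about 96.7%, which rounds to the 97% cited. Spread evenly over five years, the same goal implies that efficiency roughly doubles every year. The sketch below assumes that efficiency is measured as computations per unit of energy and that the gain compounds at a constant yearly rate. Neither assumption comes from the announcement, and the function names are illustrative only.

```python
# Hedged sketch: arithmetic implied by a 30x energy-efficiency goal over five years.
# Assumptions (not from the announcement): efficiency = computations per unit of
# energy, and the gain compounds at a constant yearly rate from baseline to 2025.

GOAL_FACTOR = 30.0  # target efficiency gain named in the announcement
YEARS = 5           # five-year window to 2025 (assumed even compounding)


def annual_factor(total_factor: float, years: int) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def energy_reduction(total_factor: float) -> float:
    """Fractional drop in energy per calculation when efficiency rises by total_factor."""
    return 1.0 - 1.0 / total_factor


if __name__ == "__main__":
    yearly = annual_factor(GOAL_FACTOR, YEARS)
    reduction = energy_reduction(GOAL_FACTOR)
    print(f"Implied yearly efficiency gain: {yearly:.2f}x")        # about 1.97x
    print(f"Energy per calculation reduced by {reduction:.1%}")   # about 96.7%
```

Treating efficiency as a multiplier makes the energy saving follow directly: if work per joule rises 30-fold, energy per unit of work falls to 1/30, regardless of how the gain is split across years.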