GPU Test System Update March 2021

Introduction

TechPowerUp is one of the most highly cited graphics card review sources on the web, and we strive to keep our testing methods, game selection, and, most importantly, test bench up to date. Today, I am pleased to announce our new March 2021 VGA test system, which brings several firsts for TechPowerUp. This is our first graphics card test bed powered by an AMD CPU: the Ryzen 7 5800X 8-core processor based on the "Zen 3" architecture. The new test setup fully supports the PCI-Express 4.0 x16 bus interface to maximize the performance of the latest generation of graphics cards from both NVIDIA and AMD. The platform also enables Resizable BAR, a PCI-SIG feature that lets the processor address the entire video memory as a single block instead of through a 256 MB window, which can potentially improve performance.

A new test system means re-testing every single graphics card in our performance graphs from scratch. It also lets us retire some of the older graphics cards and game tests to make room for newer cards and games, refresh our OS and testing tools, update games to their latest versions, and explore new game settings, such as real-time raytracing, and newer APIs.

A VGA rebench is a monumental task for TechPowerUp. This time, I'm testing 26 graphics cards in 22 games at 3 resolutions: 66 game tests per card, or 1,716 benchmark runs in total. In addition, we have doubled our raytracing testing from two to four titles. We also made some changes to our power consumption testing, which is now more detailed and in-depth than ever.

In this article, I'll share some thoughts on what was changed and why, while giving you a first look at the performance numbers obtained on the new test system.

Hardware

Below are the hardware specifications of the new March 2021 VGA test system.| Test System - VGA 2021.1 | |

|---|---|

| Processor: | AMD Ryzen 7 5800X @ 4.8 GHz (Zen 3, 32 MB Cache) |

| Motherboard: | MSI B550-A Pro BIOS 7C56vA5 / AGESA 1.2.0.0 |

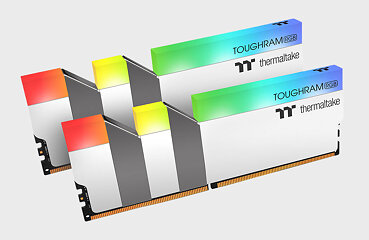

| Memory: | Thermaltake TOUGHRAM, 16 GB DDR4 @ 4000 MHz 19-23-23-42 1T Infinity Fabric @ 2000 MHz (1:1) |

| Cooling: | Corsair iCue H100i RGB Pro XT 240 mm AIO |

| Storage: | Crucial MX500 2 TB SSD |

| Power Supply: | Seasonic Prime Ultra Titanium 850 W |

| Case: | darkFlash DLX22 |

| Operating System: | Windows 10 Professional 64-bit Version 20H2 (October 2020 Update) |

| Drivers: | AMD: 21.2.3 Beta NVIDIA: 461.72 WHQL |

The AMD Ryzen 7 5800X has emerged as the fastest processor we can recommend to gamers, at any resolution. We could have gone with the 12-core Ryzen 9 5900X or even maxed out this platform with the 16-core 5950X, but neither would be faster at gaming, and both are significantly more expensive. AMD certainly wants to sell you the more expensive (overpriced?) CPU, but the Ryzen 7 5800X is actually the fastest option because all eight of its cores sit on a single CCD. Our goal with GPU test systems over the past decade has consistently been to use the fastest mainstream-desktop processor. Over the years, this meant a $300-something Core i7 K-series LGA115x chip, later replaced by the $500 i9-9900K. The 5900X doesn't sell for anywhere close to that mark, and we'd rather not use an overpriced processor just because we can. You'll also notice that we skipped the 10-core "Comet Lake" Core i9-10900K and stayed with the older i9-9900K, because the gaming performance gains were negligible, especially considering the large overclock on the i9-9900K. The two additional cores do squat in nearly all gaming situations, and that, besides pricing, is the second reason we decided against the Ryzen 9 5900X.

We continue using our trusted Thermaltake TOUGHRAM 16 GB dual-channel memory kit, which has served us well for many years. 32 GB isn't anywhere close to needed for gaming, so I didn't want to suggest otherwise, especially to less experienced readers checking out the test system. We're running the most desirable memory configuration for Zen 3, which reduces latencies inside the processor: Infinity Fabric at 2000 MHz and memory at DDR4-4000, in 1:1 sync with the Infinity Fabric clock. Timings are a standard CL19 configuration that's easily found on affordable memory modules; spending extra on super-tight timings is usually overkill and not worth it for the added performance.
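To make the 1:1 relationship concrete: DDR memory transfers data twice per clock, so DDR4-4000 runs a 2000 MHz memory clock, which matches the 2000 MHz Infinity Fabric clock. A small Python sketch of that arithmetic, plus the common first-word latency estimate (CL cycles divided by the memory clock). The DDR4-3200 CL16 comparison is a hypothetical reference point, not part of our test system:

```python
def memory_clock_mhz(data_rate_mts: int) -> float:
    """DDR transfers twice per clock, so the memory clock is half the data rate."""
    return data_rate_mts / 2

def is_one_to_one(data_rate_mts: int, fclk_mhz: float) -> bool:
    """True when the Infinity Fabric clock equals the memory clock (1:1 mode)."""
    return memory_clock_mhz(data_rate_mts) == fclk_mhz

def cas_latency_ns(cl: int, data_rate_mts: int) -> float:
    """CAS latency in nanoseconds: CL clock cycles at the memory clock."""
    return cl * 1000 / memory_clock_mhz(data_rate_mts)

print(memory_clock_mhz(4000))      # 2000.0
print(is_one_to_one(4000, 2000))   # True
print(cas_latency_ns(19, 4000))    # 9.5  (our DDR4-4000 CL19 kit)
print(cas_latency_ns(16, 3200))    # 10.0 (hypothetical DDR4-3200 CL16 kit)
```

By this estimate, CL19 at DDR4-4000 is no slower in absolute terms than a tighter-looking CL16 at DDR4-3200, which is one reason tight timings rarely justify the extra cost.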

The MSI B550-A PRO was an easy choice for a motherboard. We wanted a cost-effective board for the Ryzen 7 5800X and don't care at all about RGB or other bling. The board handles the CPU and memory settings we wanted for this test bed, and the VRM barely gets warm. It also doesn't come with any PCIe gymnastics: the PCI-Express 4.0 x16 slot is wired straight to the CPU without any lane switches along the way. The slot is metal-reinforced and looks like it can take quite some abuse over time. I admittedly swap cards hundreds of times each year, probably even 1,000+ times, yet it has never been an issue; the insertion force just gets a bit softer, which I actually find nice.

Software and Games

- Windows 10 was updated to 20H2
- The AMD graphics driver used for all testing is now 21.2.3 Beta
- All NVIDIA cards use 461.72 WHQL
- All existing games have been updated to their latest available version
- Games removed:
  - Anno 1800: old, not that popular, CPU limited
  - Assassin's Creed Odyssey: old, DX11, replaced by Assassin's Creed Valhalla
  - Hitman 2: old, replaced by Hitman 3
  - Project Cars 3: not very popular, DX11
  - Star Wars: Jedi Fallen Order: EA's horrible Denuvo DRM makes hardware changes a major pain; DX11 only; Unreal Engine 4, of which we have several other titles
  - Strange Brigade: old, not popular at all
- Games added:
  - Assassin's Creed Valhalla
  - Cyberpunk 2077
  - Hitman 3
  - Star Wars Squadrons
  - Watch Dogs: Legion

The full list of games now consists of Assassin's Creed Valhalla, Battlefield V, Borderlands 3, Civilization VI, Control, Cyberpunk 2077, Death Stranding, Detroit Become Human, Devil May Cry 5, Divinity Original Sin 2, DOOM Eternal, F1 2020, Far Cry 5, Gears 5, Hitman 3, Metro Exodus, Red Dead Redemption 2, Sekiro, Shadow of the Tomb Raider, Star Wars Squadrons, The Witcher 3, and Watch Dogs: Legion.

Raytracing

We previously tested raytracing using Metro Exodus and Control. For this round of retesting, I added Cyberpunk 2077 and Watch Dogs: Legion. While Cyberpunk 2077 does not support raytracing on AMD cards, I still felt it's one of the most important titles to test raytracing with. Godfall and DIRT 5 support raytracing, too, but neither has had enough commercial success to warrant inclusion in the test suite.

Power Consumption Testing

The power consumption testing changes have been live for a couple of reviews already, but I still wanted to detail them a bit more in this article. After our first Big Navi reviews, I realized that something was odd about the power consumption testing method I had been using for years without issue. The Radeon RX 6800 XT seemed SO much more energy efficient than NVIDIA's RTX 3080. It definitely is more efficient, thanks to the 7 nm process and AMD's monumental architectural improvements, but the lead just didn't look right. After further investigation, I realized that the RX 6800 XT was CPU bottlenecked in Metro: Last Light even at the higher resolutions, whereas the NVIDIA card ran without a bottleneck. This of course meant NVIDIA's card consumed more power in this test, because it could run faster.

The problem here is that I used the power consumption numbers from Metro for the "Performance per Watt" results under the assumption that the test loaded the card to the max. The underlying reason for the discrepancy is AMD's higher DirectX 11 overhead, which only manifested itself enough to make a difference once AMD actually had cards able to compete in the high-end segment.

While our previous physical measurement setup was better than what most other reviewers use, I always wanted something with a higher sampling rate, better data recording, and a more flexible analysis pipeline. Previously, we recorded at 12 samples per second, but could only store minimum, maximum, and average. Starting and stopping the measurement process was a manual operation, too.

The new data acquisition system also uses professional lab equipment and collects data at 40 samples per second, which is four times faster than even NVIDIA's PCAT. Every single data point is recorded digitally and stashed away for analysis. Just like before, all our graphics card power measurements are "card only", not "whole system" or "GPU chip only" (the number displayed in the AMD Radeon Settings control panel).

Having all data recorded means we can finally chart power consumption over time, which makes for a nice overview. Below is an example data set for the RTX 3080.

The "Performance per Watt" chart has been simplified to "Energy Efficiency" and is now based on the actual power and FPS achieved during our "Gaming" power consumption testing run (Cyberpunk 2077 at 1440p, see below).
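Because every sample is now recorded, efficiency can be derived directly from the trace. A minimal Python sketch, assuming a list of card-only power samples taken at 40 samples per second and the average FPS from the same run; the function names are hypothetical and the numbers below are made up for illustration, not measurements. Note that FPS per watt is the same quantity as frames rendered per joule:

```python
from statistics import fmean

SAMPLE_RATE_HZ = 40  # assumed: 40 samples per second, as described for the new setup

def average_power_w(samples_w: list[float]) -> float:
    """Mean card-only power over the recorded run."""
    return fmean(samples_w)

def energy_j(samples_w: list[float], sample_rate_hz: int = SAMPLE_RATE_HZ) -> float:
    """Approximate energy: each sample stands for 1/sample_rate_hz seconds of draw."""
    return sum(samples_w) / sample_rate_hz

def energy_efficiency(avg_fps: float, samples_w: list[float]) -> float:
    """FPS per watt, equivalent to frames rendered per joule."""
    return avg_fps / average_power_w(samples_w)

# Made-up example values, for illustration only.
trace = [300.0, 310.0, 320.0, 310.0]
print(average_power_w(trace))          # 310.0
print(energy_j(trace))                 # 31.0
print(energy_efficiency(62.0, trace))  # 0.2
```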

The individual power tests have also been refined:

- "Idle" testing now measures at 1440p instead of 1080p, following the increasing adoption of high-res monitors.

- "Multi-monitor" now uses 2560x1440 over DP + 1920x1080 over HDMI, to test how well power management works with mixed resolutions over mixed outputs.

- "Video Playback" records power usage during a 4K 30 FPS video encoded with H.264 AVC at a 64 Mbps bitrate, similar enough to most streaming services. I considered using something like madVR to further improve video quality, but decided against it because it's too niche.

- "Gaming" power consumption now uses Cyberpunk 2077 at 1440p with Ultra settings, which definitely won't be CPU bottlenecked. Raytracing is off, and we made sure to warm up the card properly before taking data. This is very important for all GPU benchmarking: in the first seconds, you will get unrealistic boost clocks, and the lower temperature has the silicon operating at higher efficiency, which skews the power consumption numbers.

- "Maximum" uses Furmark at 1080p, which pushes all cards into their power limiter, another important data point.

- As a bonus, though I wasn't sure how useful it would be, I added another run of Cyberpunk at 1080p, capped to 60 FPS, to simulate a "V-Sync" usage scenario. Running with V-Sync not only removes tearing, but also reduces the power consumption of the graphics card, which is perfect for slower single-player titles where you don't need the highest FPS and would rather conserve some energy and have less heat dumped into your room. To clarify, we're technically running a 60 FPS soft cap, so that weaker cards that can't reach 60 FPS (GTX 1650S and GTX 1660) aren't locked to V-Sync's 60/30/20 FPS steps, but instead run as fast as they can.

- Last but not least, we added a "Spikes" measurement, which reports the highest 20 ms spike recorded in the whole test sequence. This spike usually appears at the start of Furmark, before the card's power-limiting circuitry can react to the new conditions. On the RX 6900 XT, I measured well above 600 W, which can trigger the protection circuitry of certain power supplies and cause the machine to suddenly turn off. This happened to me several times with a PSU other than the Seasonic, so it's not a theoretical concern.
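The difference between a soft cap and V-Sync for slower cards can be illustrated with a simplified model. The Python sketch below assumes classic double-buffered V-Sync on a 60 Hz display with a constant frame time: each finished frame waits for the next refresh, so the frame interval rounds up to a whole number of refresh intervals. Real games with triple buffering or varying frame times behave less neatly, and the input frame rates are illustrative, not our measurements:

```python
import math

def vsync_fps(uncapped_fps: float, refresh_hz: float = 60.0) -> float:
    """Simplified double-buffered V-Sync: frame interval rounds up to whole refreshes."""
    if uncapped_fps <= 0:
        raise ValueError("uncapped_fps must be positive")
    refreshes_per_frame = math.ceil(refresh_hz / uncapped_fps)
    return refresh_hz / refreshes_per_frame

def soft_cap_fps(uncapped_fps: float, cap: float = 60.0) -> float:
    """A frame-rate limiter only clips fast cards; slower cards run as fast as they can."""
    return min(uncapped_fps, cap)

for fps in (144.0, 55.0, 25.0):
    print(fps, vsync_fps(fps), soft_cap_fps(fps))
# 144.0 60.0 60.0
# 55.0 30.0 55.0
# 25.0 20.0 25.0
```

In this model, a card that manages 55 FPS uncapped would drop to 30 FPS with V-Sync but keep its 55 FPS under the soft cap, which is why the soft cap gives a fairer picture for the weaker cards.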

Radeon VII Fail

Since we're running with Resizable BAR enabled, we also have to boot in UEFI mode instead of CSM. When it was time to retest the Radeon VII, I got no POST, and it seemed the card was dead. Since there's plenty of drama around Radeon VII cards suddenly dying, I had already started looking for a replacement, but wanted to give it another chance in a different machine, where it worked perfectly fine. WTF? After some googling, I found our article detailing the lack of UEFI support on the Radeon VII. So that was the problem: the card simply didn't have the BIOS update AMD released after our article. Well, FML, the page with the BIOS update no longer exists on AMD's website.

Really? Someone on their web team decided to just delete the pages containing an important fix to get the product working, for a product that's not even two years old? (Launched Feb 7, 2019; page removed no later than Nov 8, 2020.)

Luckily, I found the updated BIOS in our VGA BIOS collection, and the card is working perfectly now.

Performance results are on the next page. If you have more questions, please do let us know in the comments section of this article.
