How is Intel Beating AMD Zen 3 Ryzen in Gaming?

Introduction

If you read our AMD Ryzen 5000 Series "Zen 3" reviews last week, you have probably wondered about our gaming performance scores; so did we. While application performance showed the expected impressive gains, in gaming the new Ryzens were only neck and neck with Intel. AMD's marketing materials, on the other hand, show a double-digit FPS lead, which most other reviewers corroborate, too. This lack of a clear win prompted many of you to send feedback. Thanks, I always appreciate learning how people feel. Some emails weren't so nice, but still, thanks for voicing your opinion.

As promised, I spent the last few days digging into these performance numbers and found something worth reporting.

The Investigation

To research this more efficiently and rule out random effects, I built a simple game in Unreal Engine 4. Similar to my apples-to-apples heatsink testing for graphics cards, this custom load lets me adjust each frame's render complexity dynamically without affecting any other parameters. The game renders the same frame every time, and there is no movement of any sort, so the load stays constant over time. That matters because we're looking for small differences here.

Let me explain the charts below. The vertical y-axis shows the achieved frames per second; higher is better. There is no FPS cap and V-Sync is off, so the system renders as fast as it can. Unreal Engine is set to render with DirectX 12. The horizontal x-axis shows a loading factor in percent. This scale is somewhat arbitrary; think of it like this: toward the left, each frame is easier to render, so FPS is higher; toward the right, each frame is more complex, which puts more load on the GPU. The idea is to shift the CPU-GPU bottleneck to see at which point it occurs and what happens.

Above, you see results for the GeForce RTX 2080 Ti used in our processor reviews. Note how AMD posts MUCH higher FPS than Intel on the left, around 20% more. This is the IPC advantage of the Zen 3 microarchitecture, and it is very impressive. In this CPU-limited scenario, AMD is without doubt the clear winner. Strictly speaking, even the left-most point is not "100%" CPU limited, because the GPU is still doing a tiny bit of work; the correct interpretation would be "almost completely CPU limited."

As we move to the right on the chart, FPS goes down, which is expected since the GPU gets busier and busier. Interestingly, the curves drop in almost the same way on Intel and AMD, despite their vastly different FPS levels. Only above 40% does the gap between the two platforms start to shrink.

At 80%, AMD and Intel deliver nearly the same FPS; we're becoming more and more GPU limited. Just like "CPU limited" is not a true/false value, "GPU limited" is a range as well. As GPU load increases, the CPU has less work to do because there are fewer frames to prepare. For graphics-related game logic, there is no reason to update state more than once per frame, because updates can only be displayed once per frame. This lowers the CPU usage of the render thread. Other parts of the game logic, like AI, physics, and networking, run at their own tick rates, usually a constant rate independent of FPS, so their CPU load stays constant throughout this whole test.
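The split described above, with render-related updates happening once per frame while AI, physics, and networking tick at a fixed rate, is the classic fixed-timestep game loop. Below is a minimal, engine-agnostic sketch, assuming a hypothetical 60 Hz simulation rate; the function name `run_loop` and the tick rate are illustrative and do not reflect how Unreal Engine 4 or any specific game schedules its work.

```python
from fractions import Fraction


def run_loop(fps: int, seconds: int = 1, tick_rate_hz: int = 60) -> tuple[int, int]:
    """Simulate a fixed-timestep game loop (hypothetical, engine-agnostic).

    Returns (render_updates, simulation_ticks) for `seconds` of wall time
    rendered at a constant `fps`. Fractions keep the timing exact.
    """
    frame_time = Fraction(1, fps)
    tick_time = Fraction(1, tick_rate_hz)  # assumed fixed simulation step
    accumulator = Fraction(0)
    render_updates = 0
    simulation_ticks = 0
    for _ in range(fps * seconds):  # number of frames shown in the window
        accumulator += frame_time
        # AI/physics/networking: consume elapsed time in fixed-size steps,
        # so their tick count depends on elapsed time, not on FPS.
        while accumulator >= tick_time:
            accumulator -= tick_time
            simulation_ticks += 1
        # Render-related state: updated exactly once per displayed frame.
        render_updates += 1
    return render_updates, simulation_ticks


if __name__ == "__main__":
    for fps in (300, 144, 45):
        renders, ticks = run_loop(fps)
        print(f"{fps:3d} FPS -> {renders} render updates, {ticks} simulation ticks")
```

Running it at 300, 144, and 45 FPS yields 300, 144, and 45 render updates respectively, but always 60 simulation ticks. In this sketch, only the per-frame share of CPU work shrinks as FPS falls.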

As GPU load increases further, a small window appears between 80% and 120% where the Intel "Comet Lake" platform does indeed run at higher FPS than Zen 3. I marked it with a red arrow. This is quite surprising, but the data is too consistent for it to be a random event. One theory I have is that AMD's aggressive idle-state logic shuts down some cores because they are no longer fully loaded. Zen 3 can do that very rapidly and without OS interaction (for power management), and it spins them back up just as quickly, although not instantly. I'll dig into this some more.

Once we move past 140% in the chart above, the FPS rates are pretty much identical. Is anyone surprised? The GPU is limiting FPS here, which is typically called a "GPU bottleneck." Having now thought about this for a while, isn't it kind of obvious? Did anyone expect Zen 3 to magically increase GPU rendering performance?
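To make the bottleneck argument concrete, here is a toy model. It is an assumption for illustration, not a description of the test scene: each frame takes as long as the slower of the CPU and GPU work, and GPU time grows linearly with the loading factor. The frame times and the `gpu_ms_per_pct` value are hypothetical numbers chosen so that CPU "A" is 20% faster than CPU "B" when fully CPU limited.

```python
# Toy CPU/GPU bottleneck model (illustrative assumption, not the article's
# methodology): a frame takes as long as the slower of the two processors.

def fps(cpu_ms: float, gpu_ms_per_pct: float, load_pct: float) -> float:
    """Frames per second when GPU time scales linearly with the load factor."""
    gpu_ms = gpu_ms_per_pct * load_pct
    return 1000.0 / max(cpu_ms, gpu_ms)


if __name__ == "__main__":
    # Hypothetical frame times: CPU "A" needs 2.0 ms per frame, CPU "B" 2.4 ms.
    # Neither value is measured data.
    gpu_ms_per_pct = 0.02  # hypothetical GPU cost per percent of load
    for load in (10, 50, 100, 110, 150, 200):
        a = fps(2.0, gpu_ms_per_pct, load)
        b = fps(2.4, gpu_ms_per_pct, load)
        print(f"load {load:3d}%: A {a:6.1f} FPS, B {b:6.1f} FPS, "
              f"lead {100 * (a / b - 1):5.1f}%")
```

In this model, the faster CPU keeps its full 20% lead until GPU time exceeds its own frame time; the lead then shrinks, and both CPUs converge once the GPU is slower than either. Real curves bend more gradually, and a simple `max()` model cannot explain the window where Intel pulls ahead.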

Other reviews confirm this last data point; you just have to look for it in their data. Many reviewers have focused on the CPU-limited scenario, using light MOBA-style games that don't fully utilize the graphics card. This is a perfectly valid test to highlight CPU differences, of course. However, a lot of gamers play highly demanding AAA games, which means their GPU load is much higher and the GPU is the limiting factor for FPS. If the graphics card sits idle, even partially, money has been wasted on a card that's too fast for the rest of the system. This is why I believe our 1440p and 4K testing at ultra settings is relevant, even though the differences between processors are minimal in those scenarios.

Memory Speeds?

Next, I checked the effect of memory speed. Instead of DDR4-3200 CL14, I used DDR4-3800 CL16 for this test, with the Infinity Fabric running 1:1 with the memory clock (1900 MHz). This is the best-case scenario for AMD on my sample: my Ryzen 9 5900X cannot run the Infinity Fabric at 2000 MHz, which DDR4-4000 at 1:1 would require.
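The memory numbers above follow from simple arithmetic. DDR memory transfers data on both clock edges, so the memory clock is half the data rate, and at a 1:1 ratio the Infinity Fabric clock must match it. A minimal sketch follows; the helper names are hypothetical, and CAS latency in nanoseconds is only one component of total memory latency.

```python
def memory_clock_mhz(data_rate_mts: int) -> float:
    """DDR transfers on both clock edges, so the clock is half the data rate."""
    return data_rate_mts / 2


def cas_latency_ns(data_rate_mts: int, cl: int) -> float:
    """CL cycles at the memory clock: CL * (1000 / MHz) = CL * 2000 / data rate."""
    return cl * 2000 / data_rate_mts


if __name__ == "__main__":
    for rate, cl in ((3200, 14), (3800, 16)):
        mclk = memory_clock_mhz(rate)
        # At 1:1, the Infinity Fabric clock (FCLK) equals the memory clock (MCLK).
        print(f"DDR4-{rate} CL{cl}: MCLK = FCLK = {mclk:.0f} MHz, "
              f"CAS latency = {cas_latency_ns(rate, cl):.2f} ns")
    print(f"DDR4-4000 at 1:1 needs FCLK = {memory_clock_mhz(4000):.0f} MHz")
```

For the two kits, this works out to 8.75 ns for DDR4-3200 CL14 and about 8.42 ns for DDR4-3800 CL16. The faster kit therefore raises bandwidth and the fabric clock without giving up CAS latency.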

Note how the curve has the same shape as with the slower memory. There are only small differences; the biggest takeaway is that the area where Intel is faster than AMD is still there, but much less pronounced. Interestingly, it sits in exactly the same range: 80% to 120%.

What About a Slower GPU?

Next, I wanted to check what happens with a weaker graphics card. For that, I used a GeForce RTX 2060 Founders Edition. By the way, throughout all these tests, every graphics card is properly warmed up before any data is recorded. At low temperatures, the various boost mechanisms run the card at a higher frequency than you can expect during prolonged gaming sessions, so I always wait for temperatures to reach a steady state to keep this from affecting the data.
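The warm-up rule can be expressed as a simple steady-state check. A minimal sketch, assuming temperature is sampled at a fixed interval and that "steady" means the most recent readings stay within a small band; the five-sample window and 1 °C tolerance are hypothetical, not the criteria used for these tests.

```python
from collections import deque
from typing import Iterable, Optional


def first_steady_index(readings: Iterable[float], window: int = 5,
                       tolerance_c: float = 1.0) -> Optional[int]:
    """Return the index at which the last `window` readings span <= tolerance_c.

    Returns None if the readings never settle. `window` and `tolerance_c` are
    hypothetical defaults chosen for illustration.
    """
    recent: deque = deque(maxlen=window)  # sliding window of the latest readings
    for i, temp_c in enumerate(readings):
        recent.append(temp_c)
        if len(recent) == window and max(recent) - min(recent) <= tolerance_c:
            return i  # warmed up: data recording could start from here
    return None
```

In practice, one would feed it periodic GPU temperature readings and only start recording benchmark data once it returns an index.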

Here, we see yet again the same general shape of the curve, as expected. When highly CPU limited, AMD posts huge gains over Intel, up to 25%, which is even more than on the RTX 2080 Ti configuration. The Intel hump is still there, between 50% and 90%; it has moved a little to the left. This is because the RTX 2060 offers lower base performance than the RTX 2080 Ti, so the workload becomes GPU bound sooner. It's also worth mentioning that Intel's lead is larger in the small stretch where it's ahead. Do note that we're still running memory at DDR4-3800 CL16.
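The explanation for the shift can be written out under a simple assumption, introduced here for illustration: a frame takes as long as the slower of CPU and GPU work, and GPU time grows linearly with the loading factor $L$ at $g$ milliseconds per percent. The load at which the GPU becomes the bottleneck is then:

$$
t_\text{frame}(L) = \max\bigl(t_\text{CPU},\; g\,L\bigr), \qquad L^{*} = \frac{t_\text{CPU}}{g}
$$

A slower card such as the RTX 2060 has a larger $g$, so $L^{*}$ drops and the transition region, where the Intel hump sits, moves to the left. The model only locates the transition; it does not explain why Intel leads there.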

Faster GPU?

Next up, we have a GeForce RTX 3090, which uses the Ampere architecture, whereas the previous GeForce 20 cards used Turing.

Wow! What's happening here? The Intel performance advantage is completely gone. On the left side of the chart, AMD's lead is the same 20% we saw on the 2080 Ti. The lead shrinks here, too, as the load becomes GPU limited, but at no point in the chart does Intel achieve higher FPS than AMD.

I have no idea why this is happening, but it is consistent with what others have found: many reviewers tested with Ampere and saw a clear lead for AMD in most gaming scenarios.

Ampere Effect?

NVIDIA released the GeForce RTX 3070 not long ago, and its performance is nearly identical to that of the GeForce RTX 2080 Ti. This makes it a great candidate for this testing: its GPU performance is so close to the 2080 Ti's that a difference in raw GPU speed can't explain a change in the results.

Here, too! Not even a hint of Intel beating AMD. Actually, the AMD system seems to hold a bigger lead than with the RTX 3090, even when heavily GPU limited.

Game Benchmarks

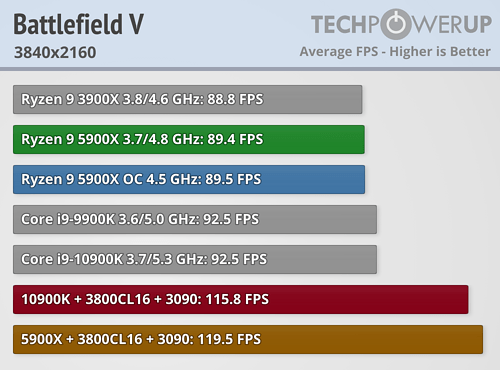

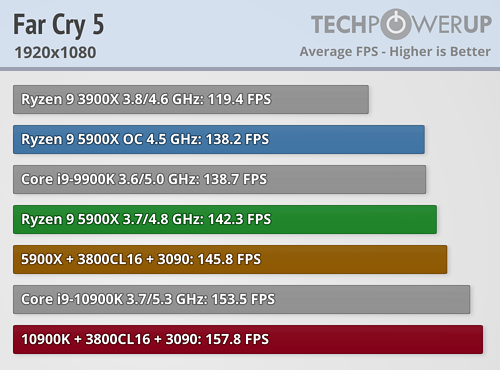

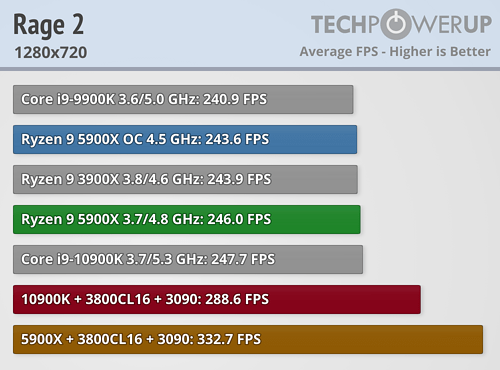

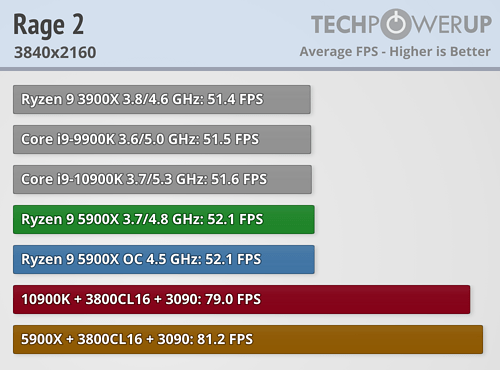

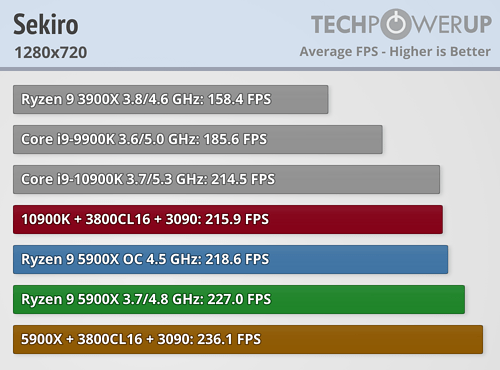

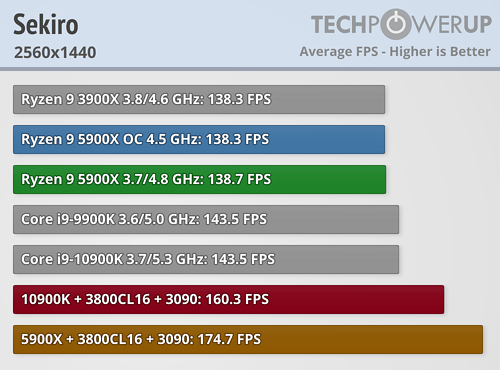

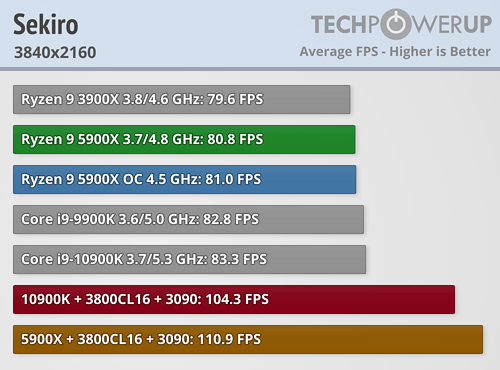

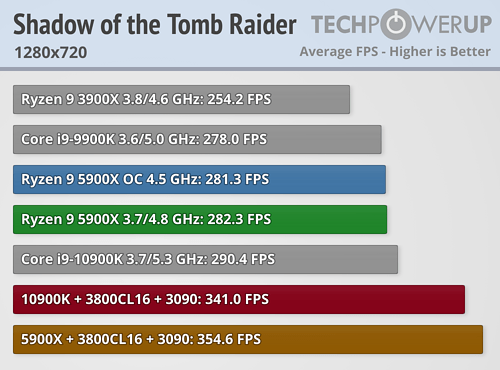

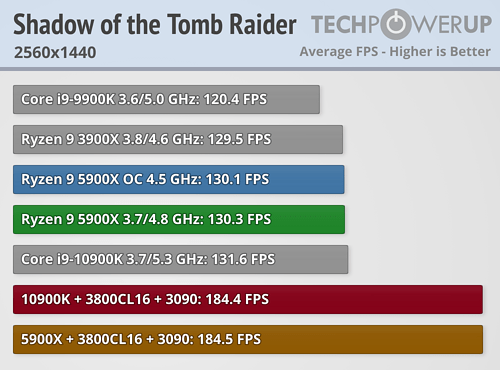

With these new insights, I configured our CPU test platform with DDR4-3800 memory and installed a GeForce RTX 3090. I then ran through the whole game list of our CPU test bench, with surprising results. There are many charts, so I'll describe the highlights to show you what to look for.

First of all, one of the most important discoveries is that 720p with the RTX 2080 Ti isn't completely CPU limited in many titles. If it were, we would see only minimal FPS differences between the 2080 Ti and the 3090. That only happens in Sekiro and, to a lesser degree, in Wolfenstein, Far Cry, and a few others; overall, 720p is not as CPU limited as we expected.
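The reasoning above amounts to a simple check: if swapping in a much faster graphics card barely raises FPS, the scene was already CPU limited. A minimal sketch; the function names, the 5% threshold, and the example FPS values below are hypothetical, not our measurements.

```python
def gpu_upgrade_gain_pct(fps_slower_gpu: float, fps_faster_gpu: float) -> float:
    """Percentage FPS gain from moving to the faster graphics card."""
    return (fps_faster_gpu / fps_slower_gpu - 1.0) * 100.0


def looks_cpu_limited(fps_slower_gpu: float, fps_faster_gpu: float,
                      threshold_pct: float = 5.0) -> bool:
    """Hypothetical rule: a gain below `threshold_pct` suggests a CPU bottleneck."""
    return gpu_upgrade_gain_pct(fps_slower_gpu, fps_faster_gpu) < threshold_pct
```

For example, going from 200 to 204 FPS (a 2% gain) would count as CPU limited, while going from 200 to 250 FPS (25%) would not. Because "CPU limited" is a range rather than a true/false value, any fixed threshold is a simplification.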

Another takeaway is that at higher resolutions, where the load is more GPU bound, the difference between AMD and Intel is almost negligible. Good examples are Wolfenstein and Witcher at 4K, and Tomb Raider at 1080p and above; other titles show a similar pattern, mostly at 4K.

The last and most important result is the highlight of Zen 3: AMD beats Intel in gaming performance, at least with fast DDR4-3800 CL16 memory and an Ampere graphics card.

Conclusion

After a lot of testing, even I can confirm what other reviewers reported during the Zen 3 launch: AMD has beaten Intel in gaming performance, but only in a best-case scenario, with fast memory and the latest graphics architecture. That is, of course, a reasonable requirement. People building a new next-gen machine or upgrading to a new processor will probably buy a new graphics card, too. Memory speed has been an open debate on Ryzen since the first generation, so I hope people know better than to pair their shiny new Zen 3 CPU with a single-channel DDR4-2400 memory kit just because that's what fits their budget or because they thought they found a good deal on memory.

On the other hand, AMD's wonderful AM4 platform makes upgrades very easy thanks to its wide compatibility with previous generations. This makes it likely that gamers will upgrade their processor independently of the rest of their hardware, possibly replacing just the CPU while keeping slower memory and an older graphics card. In these cases, the gains will still be substantial, especially in application performance, but don't expect a guaranteed win over the Core i9-10900K. But does beating Intel even matter in this scenario? You're still gaining a lot for a relatively small investment. Any performance difference vs. Intel's top CPU will be impossible to spot even in a blind side-by-side test, and you've saved a lot of money in the process, so don't worry about that last one percent!

I could also imagine some gamers hoping that plopping in a Zen 3 processor will magically give them more FPS in their AAA games. That might be true where a very strong GPU is paired with light titles like CS:GO or MOBA games. But in that case, you have effectively wasted money on the graphics card, since a weaker GPU would have achieved nearly the same performance at a much lower cost. In scenarios we typically call "GPU limited," though they are more accurately "highly or mostly GPU limited," you will see much smaller gains that vary wildly between titles depending on how much of a bottleneck the GPU is. Don't expect more than a few percent.

I'm also relieved that I found out what was "wrong" with my Zen 3 gaming performance results: nothing. I simply tested in a scenario that's not the most favorable for AMD. I have used these exact same settings, methodology, games, etc., for quite a while, in over 30 CPU reviews of both AMD and Intel processors, so claiming that I deliberately cherry-picked to make AMD look bad is far-fetched. I'm more than happy that we now have a situation where both players have to keep innovating to stay competitive. This is the best scenario for us consumers: companies fighting neck and neck means better products at better prices.

Are the other reviews wrong, then? Nope, they are perfectly fine. Especially in the context of a CPU review, it makes perfect sense to test in a more CPU-limited scenario and skip resolutions like 1440p and 4K, or to use lower settings to put more stress on the CPU. If everyone tested the same thing, results would be identical, and you wouldn't need to look at multiple sources for your buying decision. And if companies ever managed to influence how we conduct our reviews, they would have won, because they could hide what you should know about a product before you spend your hard-earned cash on it.

For my future processor reviews, I'll definitely upgrade to Ampere, or RDNA 2 if that turns out to be the more popular choice on the market. I'm also thinking about raising memory speed a bit; DDR4-3600 seems like a good balance between cost and performance. I doubt I'll pick DDR4-3800 or 4000 just because AMD runs faster with it, so I guess I'll still get flak from the AMD fanboys. I also have many ideas for new tests in the realms of applications, gaming, and beyond. I expect this new CPU test bench to be ready in early 2021, and I have a lot of processors to retest, so stay tuned. Zen 3 for VGA reviews? I'm tempted and considering it, but I haven't decided yet.

I'm definitely not finished with gaming performance on Zen 3. My next steps will be to investigate why there is a significant performance difference between AMD and Intel in certain cases, and how to overcome it. I believe Zen 3 can beat Intel in these cases, too, with small tweaks or fixes.

If you have ideas, suggestions, or requests, please let me know in the comments.
