AMD Ryzen 7 8700G Loves Memory Overclocking, which Vastly Favors its iGPU Performance

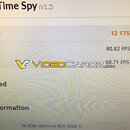

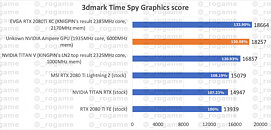

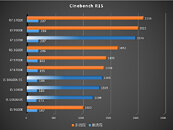

Entry-level discrete GPUs are in trouble: the first reviews of the AMD Ryzen 7 8700G desktop APU show that its iGPU can beat the discrete GeForce GTX 1650, which means it should also beat the Radeon RX 6500 XT, a card with comparable performance. Based on the 4 nm "Hawk Point" monolithic silicon, the 8700G packs the powerful Radeon 780M iGPU based on the latest RDNA3 graphics architecture, with 12 compute units, worth 768 stream processors, 48 TMUs, and an impressive 32 ROPs, plus full support for the DirectX 12 Ultimate API requirements, including ray tracing. A review posted on Bilibili by a Chinese tech publication showed that an overclocked 8700G can beat a discrete GTX 1650 in 3DMark Time Spy.

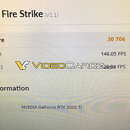

It's important to note here that both the iGPU engine clock and the APU's memory frequency were raised for this result. The reviewer set the iGPU engine clock to 3400 MHz, up from its 2900 MHz reference speed. It turns out that, much like its predecessor, the 5700G "Cezanne," the new 8700G "Hawk Point" features a more advanced memory controller than its chiplet-based counterpart (in this case the Ryzen 7000 "Raphael"). The reviewer achieved a DDR5-8400 memory overclock. The combination of the two yielded a 17% increase in the Time Spy score over stock speeds, which is how the chip manages to beat the discrete GTX 1650 (a card that performs comparably to the RX 6500 XT at 1080p).
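
To put the overclock figures in perspective, here is a rough back-of-the-envelope sketch in Python. It works out the relative iGPU clock increase from the two quoted clock speeds, and the theoretical peak memory bandwidth at DDR5-8400. The 128-bit (dual-channel) memory bus width is an assumption on our part and is not stated in the review, and the helper names `percent_uplift` and `peak_bandwidth_gbs` are purely illustrative.

```python
def percent_uplift(stock: float, overclocked: float) -> float:
    """Relative increase of `overclocked` over `stock`, in percent."""
    return (overclocked - stock) / stock * 100.0


def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int = 128) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    bus_width_bits defaults to 128, an assumed dual-channel configuration.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Clock speeds quoted in the review: 2900 MHz reference, 3400 MHz overclocked.
clock_uplift = percent_uplift(2900, 3400)

# DDR5-8400 means 8400 mega-transfers per second.
bandwidth = peak_bandwidth_gbs(8400)

print(f"iGPU engine clock uplift: {clock_uplift:.1f}%")
print(f"DDR5-8400 theoretical peak bandwidth: {bandwidth:.1f} GB/s")
```

Under these assumptions, the engine clock alone rises by roughly 17%, and the memory subsystem tops out at about 134 GB/s in theory. Real-world throughput will be lower, and the sketch says nothing about how much of the Time Spy gain comes from each of the two overclocks.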
