Friday, March 26th 2010

NVIDIA Preparing First Fermi-Derivative Performance GPU, GF104

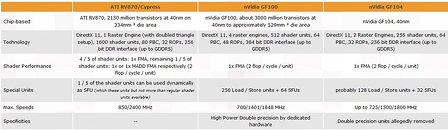

With the launch of the GeForce GTX 400 series enthusiast-grade graphics cards, based on the GF100 GPU, just a stone's throw away, NVIDIA may already be working on a new performance GPU to succeed G92 and its various derivatives, according to 3DCenter.org, a German tech portal. Codenamed GF104, the new GPU targets price/performance sweet spots the way G92 did in its day with the GeForce 8800 GT and 8800 GTS-512, which delivered near high-end performance at "unbelievable" price points. The GF104 is a derivative of the Fermi architecture and uses a physically scaled-down version of the GF100 design. It is said to pack 256 CUDA cores, 32 ROPs, 32 TMUs, and a 256-bit wide GDDR5 memory interface. The resulting more compact die could reach high clock speeds, much as G92 did compared to the G80.

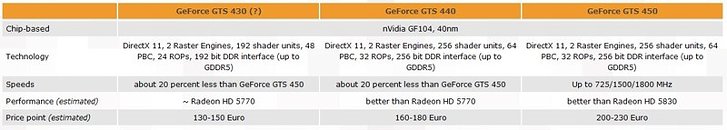

GF104 is believed to form three SKUs that fill the performance-through-mainstream market segments: the GeForce GTS 450 (the fastest), the GeForce GTS 440, and the GeForce GTS 430 (likely name). Of these, the GTS 450 enables all of GF104's features and specifications, with a core speed of well over 700 MHz, 1500 MHz shader, and 1800 MHz memory. This part could be priced at around the 240 EUR mark and target the performance level of the ATI Radeon HD 5830. A notch lower, the GTS 440 also has all of GF104's hardware enabled, but runs at around 20% lower clock speeds and is priced between 160 and 180 EUR. At the bottom is the so-called GTS 430, which could disable some of the GPU's components, leaving 192 CUDA cores and a 192-bit GDDR5 memory interface, priced under $150. The lower two SKUs are intended to compete with the Radeon HD 5700 series. The source says that the new SKUs could be out this summer.
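For a rough sense of what "around 20% lower clock speeds" could mean for the GTS 440, the rumored GTS 450 figures can be scaled down as in the sketch below. It rests on assumptions not stated by the source: the reduction is taken as exactly 20%, it is applied uniformly to the core, shader, and memory clocks, and the core clock uses 700 MHz as a floor, since the source only says "well over 700 MHz". The names `GTS_450_CLOCKS_MHZ` and `scale_clocks` are purely illustrative.

```python
# Rumored GTS 450 clocks from the article, in MHz. The core figure is a floor
# ("well over 700 MHz"), so the derived core value is a lower bound as well.
GTS_450_CLOCKS_MHZ = {"core": 700, "shader": 1500, "memory": 1800}

# "Around 20% lower" is treated as exactly 20% here (an assumption), and is
# applied to every clock domain alike (also an assumption).
REDUCTION = 0.20


def scale_clocks(clocks, reduction):
    """Return a new dict with each clock reduced by the given fraction."""
    return {domain: round(mhz * (1 - reduction)) for domain, mhz in clocks.items()}


gts_440_estimate = scale_clocks(GTS_450_CLOCKS_MHZ, REDUCTION)

for domain, mhz in gts_440_estimate.items():
    print(f"{domain}: ~{mhz} MHz")
```

Under those assumptions the GTS 440 would land at roughly 560 MHz or more on the core, about 1200 MHz shader, and about 1440 MHz memory. These are back-of-the-envelope numbers derived from a rumor, not specifications.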

Source:

3DCenter.org

36 Comments on NVIDIA Preparing First Fermi-Derivative Performance GPU, GF104

it's really lame, i want GTX 480 benched right now!!!!!:cry:

I'm not saying I'm happy about it, but it would be a great money spinner for nVidia

Or maybe they'll just postpone the main product indefinitely and move straight to the derivatives

woops read wrong it says 32

They're gonna have to do better than that to compete.

Credit where credit is due :toast:

Read wrong on ur comment and on the specs, was sure i read 128 bit, confused me :S

Still, these will be expensive, and yet all of them slower than the 5850, at the same production price...

No 64 bit float like the big bro's.

What is there to them ?

50% or maybe less, I dunno how much space the 2x float takes, but I reckon it's roughly around 270-330 mm².

ATI has 334 mm² for the 5870.

GTX 285 has ~484 mm².

Estimate suggests it's a fairly high production cost for the performance.

The question remains: is the GPGPU stuff that Nvidia touts the big deal, or won't it be much use for us?

Surely, they HAVE to do something, cause they are getting, well, smashed between AMD and Intel, both making on-die NB, SB, GPU.

Making Nvidia chipsets with IGP obsolete!

Nvidia chipsets all in all.

That's huge income. Imagine, only high-end laptops would have Nvidia, that's just about 20%? Ouch...

Nvidia's GPGPU (Tesla) really is a great income source!

Will it affect us customers? Design cost for two separate designs (archs) really isn't worth it either.

Really no hard facts on the die size, just estimates, but, well, at least 260 mm², unless Nvidia has a magic way to shrink the size of a CUDA core.