Friday, June 17th 2011

AMD Charts Path for Future of its GPU Architecture

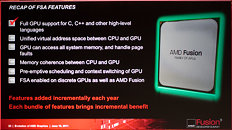

The future of AMD's GPU architecture looks more open: freed from a fixed-function, DirectX-driven evolution model, it gives the GPU a much larger role in the PC's central processing than merely accelerating GPGPU applications. At the Fusion Developer Summit, AMD detailed its future GPU architecture, revealing that its GPUs will gain full support for C, C++, and other high-level languages. Integrated with Fusion APUs, these new number-crunching components will be called "scalar co-processors".

Scalar co-processors will combine elements of MIMD (multiple-instruction multiple-data), SIMD (single-instruction multiple-data), and SMT (simultaneous multithreading). AMD will ditch the VLIW (very long instruction word) model that has been in use for several of its past GPU architectures. While AMD's GPU development will no longer be pegged to that of DirectX, the company doesn't believe that APIs such as DirectX and OpenGL will be discarded. Game developers can continue to develop for these APIs, and C++ support is aimed more at general-purpose compute applications. That does, however, create a window for game developers to venture out of the API-based development model (specifically DirectX). With its next Fusion processors, the GPU and CPU components will make use of a truly common memory address space. Among other things, this should eliminate the "glitching" players sometimes experience when games load textures as they go over the crest of a hill.

Source: TechReport

114 Comments on AMD Charts Path for Future of its GPU Architecture

AMD got into the graphics side, dusted off ATI and got them back in contention, all along wanting to achieve this. It just takes time, and the research is bearing fruit.

The best part for us is that AMD appears to be sticking to an open specification, and that will really make more developers want in.

They talk directly to developers and have had a forum running for years where people can communicate about it.

Go on the AMD developer forums to see : ]

i know that the GPU is way faster than the CPU,

so does this mean that GPU will replace the CPU in common tasks also??

VLIW5 -> VLIW4 -> ACE or CU

www.realworldtech.com/forums/index.cfm?action=detail&id=120431&threadid=120411&roomid=2

^wait for the article dar

2. In 2 years you will see this GPU in the Z-series APU (the tablet APU).

In the cloud future, yes, the CPU will only need to issue commands.

AMD GPUs have been GPGPU compatible since the high-end GPUs could do DP.

This architecture just allows a bigger jump (ahead of Kepler).

Nvidia was very late; some late 200 series can do DX10.1, but not very well.

The reason they are changing is not because of the GPGPU issue; it's more about the scaling issue.

Theoretical -> Realistic

Performance didn't scale correctly

It's all over the place. Well, scaling is a GPGPU issue, but this architecture will at least allow for better scaling ^

I got me a new math co-processor!

One will not replace the other; they will merge, and instructions will be executed on the hardware best suited for the job.

These days cloud computing is starting to make some noise, and it makes sense: average guys/gals are not interested in FLOPS performance, they just want to listen to their music, check Facebook and play some fun games. What I'm saying is that in the future we'll only need a big touch screen with a mediocre ARM processor to play Crysis V. The processing, you know, GPGPU, the heat and stuff, will be somewhere in China, and we'll be given just what we need, the final product, through a huge broadband connection. If you've seen the movie Wall-E, think about living in that spaceship Axiom, it'd be something like that... creepy, eh?

I'll get excited about this when it is actually being implemented by devs in products I can use.

The APU

with

Enhanced Bulldozer + Graphic Core Next

Will be perfect unison

and with

2013

FX+AMD Radeon 9900 series

Next-Gen Bulldozer + Next-Gen Graphic Core Next

and DDR4+PCI-e 3.0 will equal MAXIMUM POWUH!!!

:rockout::rockout::rockout: :rockout::rockout::rockout: :rockout::rockout::rockout:

Only one thing, but it does point to things to come I think.

if there was some killer application for gpu computing wouldn't nvidia/cuda have found it by now?

Instead they announce it, brag about it and then we can all forget about it as it'll never happen.

They should invest those resources into more productive things instead of wasting them on such useless stuff.

The only thing they pulled off properly is MLAA, which uses shaders to process the screen and anti-alias it. It functions great in pretty much 99.9% of games and is what they promised, and I hope they won't remove it like they did with most of their features (Temporal AA, TruForm, SmartShaders etc). Sure, some technologies got redundant, like TruForm, but others just died because AMD didn't bother to support them. SmartShaders were a good example. HDRish was awesome, giving old games a fake HDR effect which looked pretty good. But it worked only in OpenGL and someone else had to make it. AMD never added anything useful for D3D, which is what most games use. So what's the point!?!?! They should really get their stuff together, stop wasting time and resources on useless stuff, and start making cool features that can last. Like, again, MLAA.

You can on the other hand run CUDA on old G80. NV has definitely been pushing GPGPU harder.

On the other, other hand however I can't say that GPGPU affects me whatsoever. I think AMD is mostly after that Tesla market and Photoshop filters. I won't be surprised if this architecture is less efficient for graphics. I sense a definite divergence from just making beefier graphics accelerators. NV's chips have proven with their size that GPGPU features don't really mesh with graphics speed.