Monday, November 12th 2012

AMD Introduces the FirePro S10000 Server Graphics Card

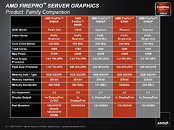

AMD today launched the AMD FirePro S10000, the industry's most powerful server graphics card, designed for high-performance computing (HPC) workloads and graphics-intensive applications.

The AMD FirePro S10000 is the first professional-grade card to exceed one teraFLOPS (TFLOPS) of double-precision floating-point performance, helping to ensure optimal efficiency for HPC calculations. It is also the first ultra-high-end card to deliver an unprecedented 5.91 TFLOPS of peak single-precision and 1.48 TFLOPS of peak double-precision floating-point performance. This performance ensures the fastest possible data processing speeds for professionals working with large amounts of information. In addition to HPC, the FirePro S10000 is also ideal for virtual desktop infrastructure (VDI) and workstation graphics deployments.

"The demands placed on servers by compute- and graphics-intensive workloads continue to grow exponentially as professionals work with larger data sets to design and engineer new products and services," said David Cummings, senior director and general manager, Professional Graphics, AMD. "The AMD FirePro S10000, equipped with our Graphics Core Next Architecture, enables server graphics to play a dual role in providing both compute and graphics horsepower simultaneously. This is executed without compromising performance for users while helping reduce the total cost of ownership for IT managers."

Equipped with AMD's next-generation Graphics Core Next Architecture, the FirePro S10000 brings high-performance computing and visualization to a variety of disciplines such as finance, oil exploration, aeronautics, automotive design and engineering, geophysics, life sciences, medicine and defense. With dual GPUs at work, professionals can experience high-throughput, low-latency transfers, allowing for quick computation of complex calculations requiring high accuracy.

Responding to IT Manager Needs

With two powerful GPUs in one dual-slot card, the FirePro S10000 enables high GPU density in the data center for VDI and helps increase overall processing performance. This makes it ideal for IT managers considering GPUs to sustain compute and facilitate graphics-intensive workloads. Two on-board GPUs can help IT managers reap significant cost savings by eliminating the need to purchase two separate ultra-high-end graphics cards, and can help reduce total cost of ownership (TCO) through lower power and cooling expenses.

Key Features of AMD FirePro S10000 Server Graphics

● Compute Performance: The AMD FirePro S10000 is the most powerful dual-GPU server graphics card ever created, delivering up to 1.3 times the peak single-precision and up to 7.8 times the peak double-precision floating-point performance of the competition's comparable dual-GPU product. It also boasts an unprecedented 1.48 TFLOPS of peak double-precision floating-point performance;

● Increased Performance-Per-Watt: The AMD FirePro S10000 delivers the highest peak double-precision performance-per-watt (3.94 gigaFLOPS per watt), up to 4.7 times more than the competition's comparable dual-GPU product;

● High Memory Bandwidth: Equipped with a 6GB GDDR5 frame buffer and a 384-bit interface, the AMD FirePro S10000 delivers up to 1.5 times the memory bandwidth of the comparable competing dual-GPU solution;

● DirectGMA Support: This feature removes CPU bandwidth and latency bottlenecks, optimizing communication between both GPUs. It also enables peer-to-peer (P2P) data transfers between devices on the bus and the GPU, completely bypassing any need to traverse the host's main memory, utilize the CPU, or incur additional redundant transfers over PCI Express. The result is high-throughput, low-latency transfers that allow for quick computation of complex calculations requiring high accuracy;

● OpenCL Support: OpenCL has become the compute programming language of choice among developers looking to take full advantage of the combined parallel processing capabilities of the FirePro S10000. This has accelerated computer-aided design (CAD), computer-aided engineering (CAE), and media and entertainment (M&E) software, changing the way professionals work thanks to performance and functionality improvements.
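The competitive ratios in the list above can be sanity-checked with simple division. This is a minimal sketch that assumes the "comparable dual-GPU product" is Nvidia's Tesla K10, using the peak figures quoted for it in the comment thread (4.58 TFLOPS SP, 0.19 TFLOPS DP) and a 225W board power, against the S10000's 5.91/1.48 TFLOPS at 375W. The dictionaries and function name are illustrative, not anything published by AMD.

```python
# Peak-throughput figures quoted in this thread (TFLOPS) and board power (W).
# Assumption: the "comparable dual-GPU product" is Nvidia's Tesla K10.
S10000 = {"sp": 5.91, "dp": 1.48, "watts": 375}
K10 = {"sp": 4.58, "dp": 0.19, "watts": 225}


def gflops_per_watt(card: dict, precision: str) -> float:
    """Peak GFLOPS for the given precision divided by rated board power."""
    return card[precision] * 1000 / card["watts"]


# Raw throughput ratios (S10000 relative to K10).
sp_ratio = S10000["sp"] / K10["sp"]
dp_ratio = S10000["dp"] / K10["dp"]

# Double-precision efficiency and its ratio.
dp_eff = gflops_per_watt(S10000, "dp")
dp_eff_ratio = dp_eff / gflops_per_watt(K10, "dp")

print(f"SP ratio:           {sp_ratio:.1f}x")
print(f"DP ratio:           {dp_ratio:.1f}x")
print(f"S10000 DP GFLOPS/W: {dp_eff:.2f}")
print(f"DP perf/W ratio:    {dp_eff_ratio:.1f}x")
```

This prints ratios of 1.3x, 7.8x and 4.7x, in line with the bullets. The efficiency comes out at 3.95 rather than 3.94 GFLOPS per watt because the rounded 1.48 TFLOPS figure is used; AMD's number presumably derives from an unrounded double-precision peak.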

Please visit AMD at SC12, booth #2019, to see the AMD FirePro S10000 power the latest in graphics technology.

69 Comments on AMD Introduces the FirePro S10000 Server Graphics Card

S10000: (SP) 5.91 TFLOPS, (DP) 1.48 TFLOPS

K10: (SP) 4.58 TFLOPS, (DP) 0.19 TFLOPS

But that's just my guess; only time will tell what exactly the chip is meant for ;)

What would you expect Nvidia to do with the full 15-SMX GPUs and other GPUs that are likely to fall outside of the server power budget? It would seem you either fuse off perfectly good blocks on 15-SMX parts, or throw away high-leakage GPUs that could be used in a 250W+ consumer card, and gain some PR into the bargain. Looking at Nvidia's past record, I'm pretty certain which course of action they would likely take.

My NV 4600 (8800GTX) can max out CS:GO and play anything out there currently. These cards still need to have the ability to run DirectX applications just as well as OpenGL.

If the S10000 isn't specced for 375W use:

1. Why does AMD specify 375W board power for a part which has no capacity for boost/overclocking? And

2. The "S" series are all passively cooled with the exception of the S10000, which obviously requires three fans. Why would that be?

For AMD, TDP means the maximum power that can be delivered to the graphics board.

But for Nvidia, TDP means the power limited by their power limiter.

Take a look at the HD 6970, which has a 250W TDP, vs. the GTX 580's 244W TDP.

How on earth does the 6970 have higher power consumption than the beastly 580???

Then look at the 'real' power consumption tested by Wizz.

Even the next-gen cards, the 7870 (175W TDP) vs. the 660 (130W TDP), follow this trend.

So a direct TDP comparison between the two companies doesn't make any sense at all.

PS: The 6990 also has a 375W TDP.

It's just denial some people are in.

Secondly, the Fermi cards' TDPs are blatantly fudged by Nvidia for PR purposes. I wouldn't argue that the Fermi cards' TDPs aren't based on wishful thinking, but the issue here is Tahiti (S10000) and Kepler (K20).

Your analogy would be HD 7970 (250W board power) vs. GTX 680 (195W TDP), although that isn't an apples-to-apples comparison either, since Tesla lacks the boost facility. Closer would be a non-boost Kepler vs. a non-boost Southern Islands part. But since W1zz hasn't tested any stock cards, maybe check out another site...

GTX 650 Ti (110W TDP): 191W system load (173.6% of TDP)

HD 7850 (130W board power): 216W system load (166.2% of TDP)

Not a huge difference.

True. And its maximum power draw is 404 watts.
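The percentages above are just measured draw divided by the rated figure. A small sketch, assuming the 191W and 216W readings are whole-system loads as the comment states (so they include the CPU and the rest of the platform, not just the graphics card); the dictionary layout and helper name are hypothetical.

```python
# System-load readings quoted in the comment (watts).
# These are whole-system figures, so the percentage is not a
# card-only measurement.
readings = {
    "GTX 650 Ti": {"rated": 110, "system_load": 191},  # rated = TDP
    "HD 7850": {"rated": 130, "system_load": 216},  # rated = board power
}


def percent_of_rating(rated_w: float, measured_w: float) -> float:
    """Measured draw expressed as a percentage of the rated figure."""
    return 100.0 * measured_w / rated_w


for card, r in readings.items():
    pct = percent_of_rating(r["rated"], r["system_load"])
    print(f"{card}: {pct:.1f}% of rated power")
```

Running it reproduces the 173.6% and 166.2% figures quoted above.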

TH put the single-GPU FirePro W9000 through its paces earlier (the S9000 is a passive version of the same card). The card is basically an HD 7970 non-GHz Edition with 6GB VRAM and 225W board power. Under GPGPU loads the card drew 275W.

Look at Wizz's FurMark test of the GTX 680

and compare it to the gaming test.

Do you see a difference between the FurMark and gaming tests of the 680 compared to the 7970???

If a power limiter affected stated performance you'd have an argument, but as the case stands, you are making excuses, not a valid point. And just for the record, the gaming charts don't have a direct bearing on server/WS/HPC parts. As I mentioned before, you can't get a true apples-to-apples comparison between gaming and pro parts; all they can do is provide an inkling of the efficiency of the GPU. If you want to use a gaming-environment argument, why don't you take it to a gaming card thread, because it is nonsensical to apply it to co-processors.

Because volt modding is (of course) the first requirement for server co-processors [/sarcasm]

Take your bs to a gaming thread.

But that's also why they manage a huge score in most GPGPU applications. And compare the power efficiency per FLOP, even if it draws a few extra watts....

www.tomshardware.com/charts/2012-vga-gpgpu/15-GPGPU-Luxmark,2971.html

www.tomshardware.com/charts/2012-vga-gpgpu/14-GPGPU-Bitmining,2970.htmlb

How old is that PSU in there? Swap it for a new one with the correct connections.

Fix yo links.

True enough that Tahiti/GCN is optimized for GPGPU, but then GK104 is just the opposite... and if the S10000 were a desktop gaming card I certainly wouldn't disagree with the premise. But if server GPGPU is the point of the discussion (and it should be for this thread), shouldn't the comparison be between server parts? It seems a little pointless making a case for the S10000 using desktop cards running at higher clocks with desktop drivers, while comparing them to deliberately compute-hobbled Nvidia counterparts.

Wouldn't a more apropos comparison be gained by testing server parts to server parts?

(BTW: The W/S9000 is a Tahiti part (3.23 TFlop); the Quadro 6000 is a Fermi GF100 part (1.03 TFlop) based on the GTX 470.)

| Card | Board power | Single precision | SP GFlop/watt | Double precision | DP GFlop/watt |
|---|---|---|---|---|---|
| W/S9000 | 225W | 3.23 TFlop | 14.36 | 0.81 TFlop | 3.58 |
| S10000 | 375W | 5.91 TFlop | 15.76 | 1.48 TFlop | 3.95 |
| K10 | 225W | 4.58 TFlop | 20.36 | 0.19 TFlop | Negligible |
| K20 | 225W | 3.52 TFlop | 15.64 | 1.17 TFlop | 5.20 |
| K20X | 235W | 3.95 TFlop | 16.81 | 1.31 TFlop | 5.57 |
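The per-watt figures can be recomputed as peak GFLOPS divided by board power. This sketch takes the board powers and TFLOP figures from the comparison above, with the K10's single-precision peak taken as 4.58 TFLOPS, as quoted earlier in the thread; the names are illustrative. Because the inputs are rounded TFLOP values, the last digit can shift (the W/S9000's DP figure comes out at 3.60 rather than 3.58), and the K10's "negligible" DP efficiency works out to about 0.84 GFLOPS per watt.

```python
# Board power (W), peak single- and double-precision (TFLOPS) per card.
# Figures as quoted in this thread; K10 SP taken as 4.58 TFLOPS.
CARDS = {
    "W/S9000": (225, 3.23, 0.81),
    "S10000": (375, 5.91, 1.48),
    "K10": (225, 4.58, 0.19),
    "K20": (225, 3.52, 1.17),
    "K20X": (235, 3.95, 1.31),
}


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Convert a peak TFLOPS figure to GFLOPS per watt of board power."""
    return tflops * 1000 / watts


for name, (watts, sp, dp) in CARDS.items():
    sp_eff = gflops_per_watt(sp, watts)
    dp_eff = gflops_per_watt(dp, watts)
    print(f"{name:<8} SP {sp_eff:6.2f}  DP {dp_eff:5.2f} GFLOPS/W")
```

Note that these are peak-rate ratios only; they say nothing about sustained throughput on a real workload.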

And, for all the hoo-hah regarding the S10000 powering the SANAM system to number two in the Green500 list, the placement still relies more on the asymmetric setup of the computer: 420 S10000s vs. 4800 Xeon E5-2650s.

Servers and HPC racks in general are built around a 225W-per-board specification. See HP, for example, and this from AnandTech. That is why pro co-processors are invariably rated at 225 watts. Check the specifications for the top-tier FireStream, FirePro, Quadro and Tesla cards: all the top SKUs are geared for a 225W power envelope.

Funny how much faster the Radeon is.

Most other people would realize that 225W input power means a 225W heat-dissipation requirement, as well as a power requirement.

Wow! Tahiti's faster than a GTX 470 in LightWave, EnSight, SolidWorks, bitmining, LuxMark and CAPS viewer. Colour me surprised. I am truly shocked and stunned!

Isn't it more surprising that the latest-generation AMD GPU isn't overly convincing against a two-generations-old Nvidia GTX 470 in AutoCAD 2013, Maya 2013 and Siemens freeform modelling?

BTW

You missed out the Maya benches in the same review...

and you missed out the Catia benches in the same review...

and you missed out the Pro/ENGINEER benches in the same review...

and you missed out the Siemens Visualization benches in the same test...

Then of course you've got AMD's forte, OpenCL, which is also a very mixed bunch. AMD is strong in image processing, but the video benches and general benchmarks are pretty much a wash. Shouldn't Tahiti be putting up better numbers against a GTX 470 than this?

Not to worry though, I bet the GK104- and GK110-based Tesla and Quadro will be shit at everything, and if they aren't, you can just look at the pages you like, just like the TH review.

(Better not look at the HotHardware review)

Can you link me to the 225W specification? I've never seen it. It'd be unfortunate if you were implying that 225W is the limit, and kind of hilarious.

Linking to the SL390s G7 and implying that's the standard is baffling. The "(up to 225 watt)" refers to a configuration where the available PSU options only provide (2) 6-pin connectors per slot, not the 225W per board you're implying :laugh:

PCIe Gen 2 = 75W

(2) 6-pin = 150W (75W each)

Total = 225W

Not a Server Specification :laugh:
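The 225W total above is just the slot allowance plus the auxiliary connectors. A minimal sketch, using the 75W slot and 75W-per-6-pin figures from the comment and the standard 150W rating for an 8-pin PCIe auxiliary connector (that last figure is not stated in the thread); the constant and function names are hypothetical. The 2 x 8-pin case corresponds to the S10000's 375W board power discussed in the thread.

```python
# Nominal power allowances (watts).
SLOT_W = 75        # x16 slot, per the comment above
SIX_PIN_W = 75     # per 6-pin auxiliary connector, per the comment above
EIGHT_PIN_W = 150  # per 8-pin auxiliary connector (standard rating, assumed here)


def board_power_budget(six_pin: int = 0, eight_pin: int = 0) -> int:
    """Maximum nominal board power for a slot plus auxiliary connectors."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


configs = {
    "2 x 6-pin (SL390s G7 option)": (2, 0),
    "6-pin + 8-pin": (1, 1),
    "2 x 8-pin (S10000)": (0, 2),
}
for label, (six, eight) in configs.items():
    print(f"{label}: {board_power_budget(six, eight)} W")
```

This prints 225 W, 300 W and 375 W for the three configurations, which is the gap the later comments about re-cabling racks for 2 x 8-pin are arguing over.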

So, yet another instance where Xzibit's reading skills don't reach the mark.

Just to reiterate: most racks are pretty standardized, which is why most vendors limit themselves to a 225W add-in board. The other thing to consider is upgrades of previous-generation systems: swapping a 225W board for another 225W board is relatively painless, while a swap-out for a higher-TDP board may require more extensive work.

Of course, if I actually said anything like that... but I didn't. 225W is a general standard that server vendors have adopted. Don't believe me? Check Cisco, HP, Dell, Penguin or any other server manufacturer and see how many are 225W per add-in board and how many are, say, 300W PCI-SIG.

Of course I don't expect you to actually do this, since it would require you to:

1. Be able to parse the information correctly, and

2. Actually spend some time doing research, and

3. You'd give up as soon as you saw the number of vendors' models specced for 225W boards.

Given that you can't even work out basic information about a company or its ownership, I'm not confident you'll fare any better with a company's product line, so I'm not expecting anything but some worthless trolling.

Yep. GF100 has since been superseded by GF110, which in turn has been superseded by GK104/110.

And both companies do not measure TDP in the same way.

That is my point.

Hope you understand.

If you have links I'd like to see them, though. Remember...

;)

You think an SC cluster or data centre has ATX PSUs??

Maybe you should watch this and point out where the PSUs are, or maybe tell these guys they're doing it wrong.

Which is already what I've said... and much earlier than you did, so why the bleating? Oh, I know why... you just need to troll.

Nothing at all, except possibly changing the cooling and power cabling, and no, I don't mean just the individual 6- and 8-pin PCI-E connectors. I mean the main power conduits from the cabinets to the power source. Then, of course, if a cabinet is being refitted for the S10000, you would have to re-cable all 42 racks in a cabinet for 2 x 8-pin instead of the nominal 6-pin + 8-pin, at four cables per rack multiplied by the number of boards per rack, as well as the main power conduits... and then you'd have to upgrade the cooling system, which for most big iron is water cooling and refrigeration.