Thursday, May 28th 2020

Benchmarks Surface for AMD Ryzen 4700G, 4400G and 4200G Renoir APUs

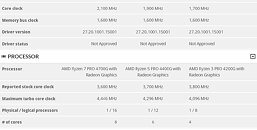

Renowned leaker APISAK has dug up 3DMark benchmarks for AMD's upcoming Ryzen 4700G, 4400G and 4200G Renoir APUs. These are actually for the PRO versions of the APUs, but these tend to be directly comparable with AMD's non-PRO offerings, so we can look at them to get an idea of where the 4000G series' performance lies. AMD's 4000G series will be increasing core counts almost across the board - the midrange 4400G now sports 6 cores and 12 threads, which is more than the previous-generation Ryzen 5 3400G offered (4 cores / 8 threads), while the top-of-the-line 4700G doubles the 3400G's core count to 8 cores and 16 threads.

This increase in CPU cores has, of course, meant a reduction in the chip area dedicated to the integrated Vega GPU - compute units have been reduced from the 3400G's 11 to 8 on the Ryzen 7 4700G and 7 on the 4400G, while the 4200G makes do with just 6 Vega compute units. Clocks have been raised substantially across the board to compensate for the CU reduction, though - the aim is to achieve similar GPU performance using a smaller amount of semiconductor real estate.

The 4700G's 8 Vega CUs clocked at 2.1 GHz, as reported by the benchmark suite, achieve 4,301 points in the graphics score and 23,392 points in the CPU (physics) score, which are respectively 6.65% and 22.4% higher than the 4400G's 4,033 and 19,113 points (achieved with 7 Vega CUs clocked at a slower 1.9 GHz and with two fewer cores). The 4700G scores 20% and a whopping 70.6% higher than the 4200G in the graphics and physics tests respectively - which makes sense, considering the slower-clocked 6 Vega CUs (1.7 GHz) and 4-core / 8-thread configuration of the latter. AMD's 4000G series keeps the same 65 W TDP despite the higher number of CPU cores and higher-clocked Vega cores, but the company will also have a Ryzen 4000GE series which achieves a 35 W TDP, albeit at the cost of reduced CPU clocks (and likely GPU clocks as well).
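For readers who want to check the percentages, here is a minimal sketch that recomputes the 4700G's lead over the 4400G from the scores quoted above. The helper name `percent_uplift` and the dictionary layout are purely illustrative; only the four scores come from the leak.

```python
def percent_uplift(new: float, old: float) -> float:
    """Return how much higher `new` is than `old`, in percent."""
    return (new / old - 1.0) * 100.0


# 3DMark scores as quoted in the article (PRO variants of the APUs).
scores = {
    "4700G": {"graphics": 4301, "cpu": 23392},
    "4400G": {"graphics": 4033, "cpu": 19113},
}

for test in ("graphics", "cpu"):
    uplift = percent_uplift(scores["4700G"][test], scores["4400G"][test])
    print(f"{test}: 4700G is {uplift:.2f}% ahead of the 4400G")
```

The 4200G's absolute scores are not quoted in the text, so the sketch leaves that comparison out.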

_rogame, another well-known leaker, found two comparable system configurations running the Ryzen 4200G and 3200G, where the 4200G delivered 57% higher CPU performance, but 7% less GPU performance.

Sources:

via Videocardz, APISAK @ Twitter, _rogame @ Twitter

37 Comments on Benchmarks Surface for AMD Ryzen 4700G, 4400G and 4200G Renoir APUs

It's true that bandwidth increases with DDR4-3200, but the new SKUs also have double the cores. And dual-channel DDR4-3200 is just 50 GB/s, where a Radeon RX 560 or GeForce GTX 1050 has about 112 GB/s of dedicated bandwidth. So not only does an APU struggle to get half the bandwidth, it also has to share it with a 6- or 8-core CPU.

They reduced the CU count because they knew they could increase the frequency and get approximately the same performance, which is limited by the available bandwidth anyway.

We will see larger APUs when memory bandwidth increases. But unless they use on-chip memory, I doubt we will see a very large chip. Also, the market for large APUs outside consoles is very niche and probably not worth the investment.

LPDDR4X at 4266 MT/s costs a fortune. Laptops are shipping with DDR4-3200 instead, and that is way less than 78%.

On desktop, you could buy a high-speed kit, but you're better off spending the extra cost on a dedicated GPU, and you will always get more performance.

There are rare scenarios where you would want a very small form factor, so a dedicated GPU might not be possible, but these are rare and you don't build a lineup for special cases.

At least not for now.

If you're talking about the 4700U and below that are more commonly seen with DDR4-3200, then those are only 7CU variants. They've lost 30% of their CUs and gained 50% bandwidth by going from DDR4-2400 to DDR4-3200.

I expect the 4700U to be the common choice for midrange laptops at the same price as the previous 3700U. 30% lower CU count and 50% more bandwidth means that memory bottlenecks aren't the issue they were with Raven Ridge and Pinnacle Ridge.

Also, bandwidth is shared with the CPU, and the 3700U is a 4-core / 8-thread CPU where the 4700U is an 8-core / 8-thread CPU. Each CPU core makes its own memory requests, putting more pressure on the memory.

Also, dual-channel LPDDR4X-4266 would give you about 70 GB/s. It's getting there, but it's still far from what an RX 560 or a GTX 1050 has (around 110 GB/s, as I said). And since we are still sharing it with the CPU, we are still far from the bandwidth of a dedicated GPU.
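The bandwidth figures thrown around in this thread can be roughly reproduced with the usual peak-bandwidth formula (transfer rate times bus width). A minimal sketch, assuming a 128-bit total memory bus for the dual-channel DDR4 and LPDDR4X setups, and 128-bit GDDR5 at 7000 MT/s for the RX 560 / GTX 1050 class of cards; these are theoretical peaks, not measured numbers, and the function and dictionary names are illustrative only.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int = 128) -> float:
    """Theoretical peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Transfer rates in MT/s; every entry assumes a 128-bit total bus (see lead-in).
configs = {
    "DDR4-2400 dual channel": 2400,
    "DDR4-3200 dual channel": 3200,
    "LPDDR4X-4266": 4266,
    "GDDR5 7 Gbps (RX 560 / GTX 1050 class)": 7000,
}

for name, rate in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s")
```

On an APU this peak is shared between the CPU cores and the GPU, which is the point the comment above is making.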

The extra cores on Renoir won't make a difference to the CPU's utilisation of the memory bandwidth either; an application will use the bandwidth it needs no matter how many cores it's running on.

Renoir has 50-78% more bandwidth than previous APUs, depending on which RAM is used.

The CPU will use less memory bandwidth than Zen and Zen+ APUs because Zen2 has better prediction and twice the L3 cache, requiring fewer calls to main memory.

Put those two things together and Renoir's effective bandwidth gains are anywhere from 55% to 100% better.

You can't seem to admit that memory bandwidth has improved significantly enough to make a difference no matter how much I spell it out for you. Why is that?

The L3 cache on Zen 2 is indeed larger than on previous CPUs, but it is still fairly small relative to many applications' data sets. Also, it's a victim cache (it contains data evicted from the L2 cache).

The prefetchers aren't there to save bandwidth. They are there to lower latency. The data still needs to be moved around. They don't make the data magically appear in the cache. The main purpose of a cache is not to save on bandwidth. It's to save on latency. This is where Zen 2 gets the most benefit from its cache vs Zen 1. But Zen 2 still benefits greatly from more memory bandwidth.

It's true that the overall bandwidth of the platform has increased (it could be better if LPDDR4X weren't so expensive and were more available) but the actual usable bandwidth used by the APU.

Something you can think about: let's say what you say is true and CPUs do not really need that much bandwidth. Then why do CPU manufacturers even bother with two DDR4 channels? The cost of putting another channel on a motherboard and in a socket is significant. If they could get away without it, they would.

But I agree that if an APU had 4 memory channels and still the same number of cores as a desktop midrange part (8 cores / 16 threads), it could definitely use a bit more silicon real estate. I could say that if it was cost-effective on PC to do so, they could even add a GPU chiplet in the package.

The key there is cost. APUs aren't designed to cost a fortune.

I got 2 TR 3970Xs. Bought them not long ago and that's how I find them useful in a way.

The 2000 U-series needs around 22-23W to avoid throttling the Vega CUs in typical gaming/benchmarks. Thankfully both will boost at 25W for short periods, and during boost the 2700U is almost 15% quicker than the 2500U on the first run of benchmarks. That's still not the gain that 25% extra CUs should give, but we're still limited by DDR4-2400, so you have to expect imperfect scaling.

Edit:

I just found this video -

I can only imagine how great a Renoir Vega10 would have been for ultrabook gaming....

Looks to me like there is a bottleneck somewhere :confused:

I wonder where it is