Tuesday, September 15th 2020

Intel DG2 Discrete Xe Graphics Block Diagram Surfaces

New details have leaked on Intel's upcoming DG2 graphics accelerator, which could help shed some light on what to expect from Intel's foray into discrete graphics. For one, a product listing shows an Intel DG2 graphics accelerator paired with 8 GB of GDDR6 memory and a Tiger Lake-H CPU (45 W version with 8 cores, which should carry only 32 EUs in integrated graphics hardware). The 8 GB of GDDR6 is interesting, as it points towards a 256-bit memory bus - one that is expected to be paired with the 512 EU version of Intel's DG2 (remember that a 384 EU version is also expected, but that one carries only 6 GB of GDDR6, which most likely means a 192-bit bus).

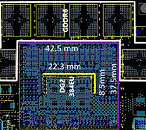

Videocardz says it has received an image of the block diagram for Intel's DG2, pointing towards a 189 mm² die area for the 384 EU version. Judging by component density, this particular diagram may refer to an MXM design, commonly employed as a discrete notebook solution. Six GDDR6 chips are seen in the diagram, pointing towards a memory capacity of 6 GB and the aforementioned 192-bit bus. Other specs that have turned up in the meantime point towards a USB-C interface being available for the DG2, which could indicate either a Thunderbolt 4-supporting design or something like VirtualLink.
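The link between chip count, capacity and bus width can be checked with a few lines of arithmetic. This is only a rough sketch: it relies on each GDDR6 chip exposing a 32-bit interface, and it assumes 1 GB (8 Gb) chips with one chip per 32-bit slice of the bus, which is what the 6 GB and 8 GB figures imply but which Intel has not confirmed. The function name `memory_config` is made up for illustration.

```python
# Each GDDR6 chip exposes a 32-bit interface (two 16-bit channels).
GDDR6_CHIP_BUS_BITS = 32

# Assumption: 1 GB (8 Gb) chips, as implied by 6 chips -> 6 GB in the diagram.
ASSUMED_CHIP_CAPACITY_GB = 1


def memory_config(chip_count, chip_capacity_gb=ASSUMED_CHIP_CAPACITY_GB):
    """Return total capacity and bus width for a given number of GDDR6 chips.

    Assumes one chip per 32-bit slice of the bus (no clamshell mode).
    """
    return {
        "capacity_gb": chip_count * chip_capacity_gb,
        "bus_width_bits": chip_count * GDDR6_CHIP_BUS_BITS,
    }


if __name__ == "__main__":
    # Chip counts for the two rumored variants (8 chips is inferred from 8 GB).
    for label, chips in (("384 EU", 6), ("512 EU", 8)):
        cfg = memory_config(chips)
        print(f"{label}: {cfg['capacity_gb']} GB, {cfg['bus_width_bits']}-bit bus")
```

If 2 GB chips were used instead, the same capacity would need only half as many chips and half the bus width, so the 256-bit and 192-bit figures remain an inference rather than a confirmed spec.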

Sources:

Uniko's Hardware @ Twitter, Komachi @ Twitter, via Videocardz

31 Comments on Intel DG2 Discrete Xe Graphics Block Diagram Surfaces

Maybe the first generation will have some minor drawbacks compared to Nvidia, BUT I believe they will be fixed by the second generation.

Most important is that there will be hard competition in the low and medium GPU segment off the bat and high end segment down the road.

I believe Intel will price aggressively for at least 2 or 3 generations to get a good market share and secure their future GPU business.

ALSO Thunderbolt 4 built in could be a game changer since this would make it really simple to make cheap GFX upgrades for all laptops with Thunderbolt 4.

The external GFX upgrade market for laptops is pretty much untapped, and current solutions are way too expensive. Intel has a BIG chance to dominate this market before the competition has time to react.

RTG = Raja Technology Group

AMD's having a hard time matching them with an inferior node & you're saying the potato GPU maker will get to basically 2080Ti levels right from the get go o_O

I think people seem to forget that AMD is many times smaller than Nvidia, and yet everyone expects them to compete on every front. Maybe I'm an optimist, but I think the fact that AMD is so much smaller, with a much smaller R&D budget, yet still able to compete with Nvidia in the most important and profitable segments AND straight up embarrass Intel is seriously impressive... We're talking about a single, small company (compared to Intel and Nvidia) beating one and hanging with the other... I think that's impressive, and I am certainly thankful for it, otherwise I'd probably have had to pay $1000 for 8 cores from Intel instead of $290 for my 2700X almost two years ago.

While it's nice to see another competitor entering the dGPU market, it would have been much better for consumers if it were a wholly new entity and not Intel... We need NEW competitors in old markets, not OLD competitors in new markets.

Raja is smoke and mirrors, which is why he got the boot from AMD.

To suddenly go (consumer-friendly) cheap with their first jump into GPUs... I doubt it.

I suspect Intel's big push will be pairing Intel laptops with an Intel dGPU and offering cost incentives to OEMs to do so. Second to that I suspect those dGPUs will land in the desktop add-in market at a price competitive in the entry-level to medium performance segment.

Intel is no stranger to graphics and I suspect their products will be a great option.

The sad truth is the higher end binned chips from AMD just haven't measured up for a few years now. Navi was a pleasant surprise, hopefully Navi2 can build on top of that.

I expect nothing different this time (if it actually materializes, of which I'm very doubtful).

And of course they're a huge company, they're not going to go bankrupt and shut down or anything. Whoever suggested that? IBM is a huge profitable company too, but they have been completely irrelevant to the home market for several decades now. They used to make home PCs, you know. If not only AMD but Nvidia too held an x86 license, I honestly think even Intel going bankrupt might have been a possibility (however slight), but that's another parallel universe.