Wednesday, July 21st 2021

NVIDIA Multi-Chip-Module Hopper GPU Rumored To Tape Out Soon

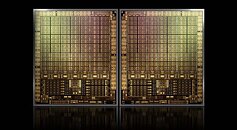

Hopper is an upcoming compute architecture from NVIDIA and will be the company's first to feature a Multi-Chip-Module (MCM) design, similar to Intel's Xe-HPC and AMD's upcoming CDNA2. The Hopper architecture has been teased for over two years, and a recent leak suggests it is nearing completion and will tape out soon. This compute GPU will likely be manufactured on TSMC's 5 nm node and could feature two dies, each with 288 Streaming Multiprocessors (SMs), which could theoretically provide a three-fold performance improvement over the Ampere-based NVIDIA A100. The first product to feature the GPU is expected to be the NVIDIA H100 data center accelerator, which will succeed the A100 and could launch in mid-2022.

Sources:

@3DCenter_org, VideoCardz

35 Comments on NVIDIA Multi-Chip-Module Hopper GPU Rumored To Tape Out Soon

Even if it isn't perfect, I'm sure MCM will be better than the multi-GPU pairing solutions of the past, unless these companies are plain incompetent and learned nothing of value from those attempts.

All in all, I think going this route is a no-brainer from a scalability standpoint. The real issue is cost, because MCM is a more complicated process if you're not already doing it at scale. AMD has a leg up here because they're already doing it and are much further along than just putting multiple dies on a package. The I/O die paired with simpler chiplets was a game changer, to be honest, and I think we'll see that kind of design drive future high-performance GPUs.

All in all, I think NVIDIA is about where Intel is on the MCM path. They have multiple dies per package, but they're still connecting the dies directly to each other, which doesn't scale as well as AMD's approach. It works okay for two dies, four dies is complicated and costly (never mind the latency penalty of talking to a die you don't have a direct connection to), and anything beyond that is inefficient. With an I/O die, however, we've seen how many chiplets AMD can cram onto a single package. Smaller dies are also easier to produce consistently, with better yields.
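The two scaling arguments in that comment can be put into rough numbers. Below is a minimal Python sketch, assuming an idealized full mesh for direct die-to-die links versus a star topology where every chiplet connects only to a central I/O die, plus the textbook Poisson yield model. The defect density and die areas are made-up values for illustration, not real NVIDIA, AMD, or TSMC data.

```python
import math


def full_mesh_links(n_dies: int) -> int:
    """Point-to-point links needed so every die connects directly to every other die."""
    return n_dies * (n_dies - 1) // 2


def hub_links(n_dies: int) -> int:
    """Links needed when each chiplet connects only to a central I/O die (star topology)."""
    return n_dies


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Simple Poisson yield model: fraction of dies with zero defects, Y = exp(-D * A)."""
    return math.exp(-defects_per_mm2 * area_mm2)


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(f"{n} dies: full mesh = {full_mesh_links(n)} links, hub = {hub_links(n)} links")

    # Hypothetical defect density for illustration only: 0.1 defects/cm^2 = 0.001 defects/mm^2.
    d0 = 0.001
    print(f"one 800 mm^2 die:  {poisson_yield(800, d0):.1%} yield")  # exp(-0.8), about 44.9%
    print(f"one 200 mm^2 die:  {poisson_yield(200, d0):.1%} yield")  # exp(-0.2), about 81.9%
```

Mesh links grow quadratically (1, 6, 28 for 2, 4, 8 dies) while the hub grows linearly, although at two dies a single direct link is actually cheaper than going through a hub. On the yield side, a defect scraps only the small chiplet it lands on, so far more of the wafer ends up usable. Real fabs use more refined models (e.g. negative binomial), so treat this as intuition rather than a cost estimate.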

This is a slight tangent, but what I would like to see is AMD produce a processor that mixes CPU and GPU chiplets on one package. We could finally see some really interesting APUs out of AMD if they did.

www.techpowerup.com/gpu-specs/radeon-r9-295x2.c2523

I fail to see any performance to be gained from it if the underlying hardware adds up to the same amount anyway. I suppose it might provide scenarios where you can do twice the work in a single clock cycle off the CPU/GPU? If that's the angle you were going for, then maybe; I honestly don't know. I still think the potential power savings of just two APU chiplets would make more sense overall. What that alone would bring AMD in the mobile market is significant and hard to overlook.

I think one of the significant things DX12 brought about to help with mGPU was pooled memory. Just wait until pooled memory, DirectStorage, and Infinity Cache are combined with mGPU; then things will start to heat up.