- Joined: Mar 28, 2018
- Messages: 1,892 (0.72/day)
- Location: Arizona

| System Name | Space Heater MKIV |
|---|---|
| Processor | AMD Ryzen 7 5800X |
| Motherboard | ASRock B550 Taichi |
| Cooling | Noctua NH-U14S, 3x Noctua NF-A14s |
| Memory | 2x32GB Teamgroup T-Force Vulcan Z DDR4-3600 C18 1.35V |
| Video Card(s) | PowerColor RX 6800 XT Red Devil (2150MHz, 240W PL) |
| Storage | 2TB WD SN850X, 4x1TB Crucial MX500 (striped array), LG WH16NS40 BD-RE |
| Display(s) | Dell S3422DWG (34" 3440x1440 144Hz) |
| Case | Phanteks Enthoo Pro M |
| Audio Device(s) | Edifier R1700BT, Samson SR850 |
| Power Supply | Corsair RM850x, CyberPower CST135XLU |
| Mouse | Logitech MX Master 3 |
| Keyboard | Glorious GMMK 2 96% |
| Software | Windows 10 LTSC 2021, Linux Mint |

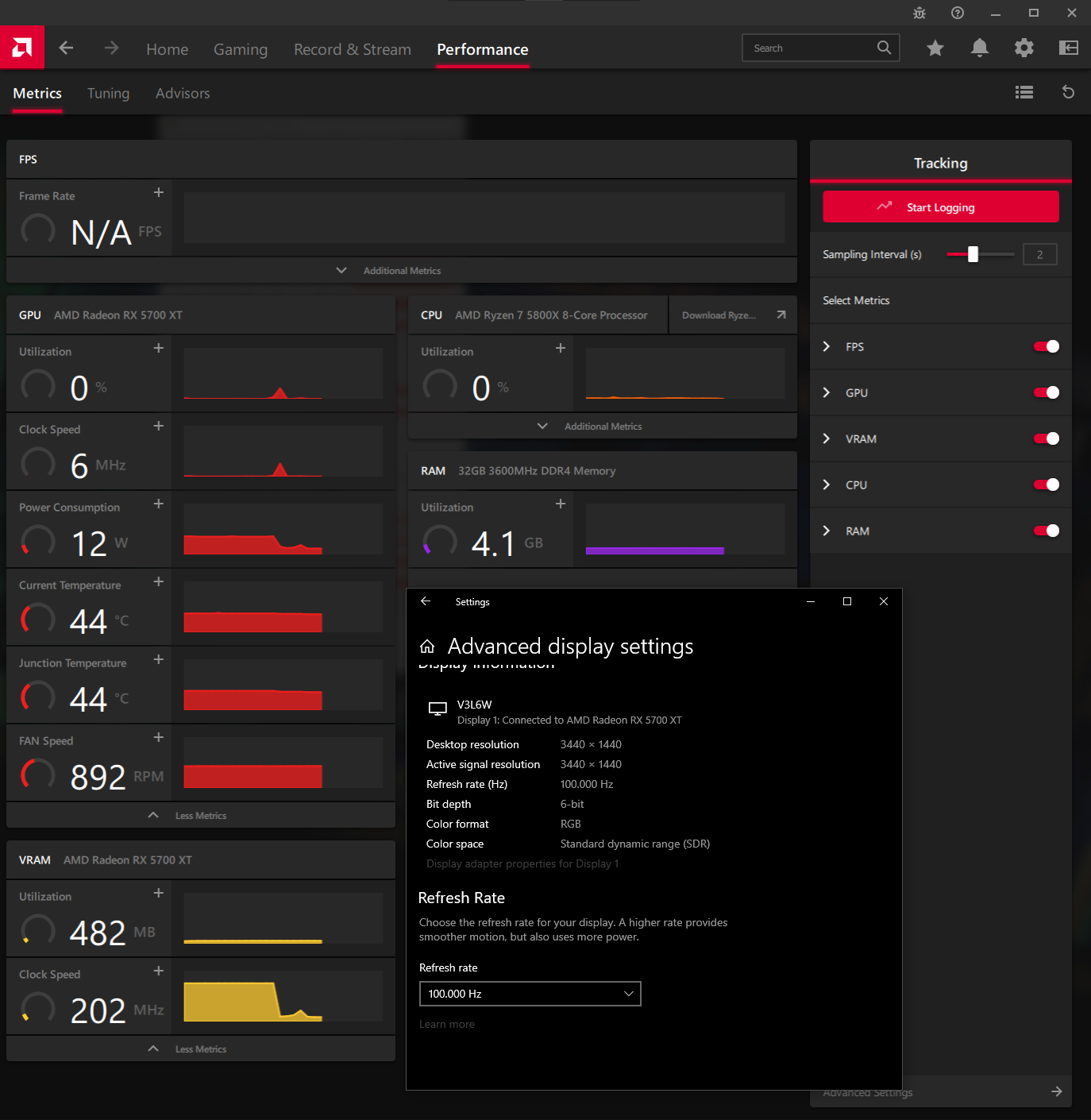

Did some testing.

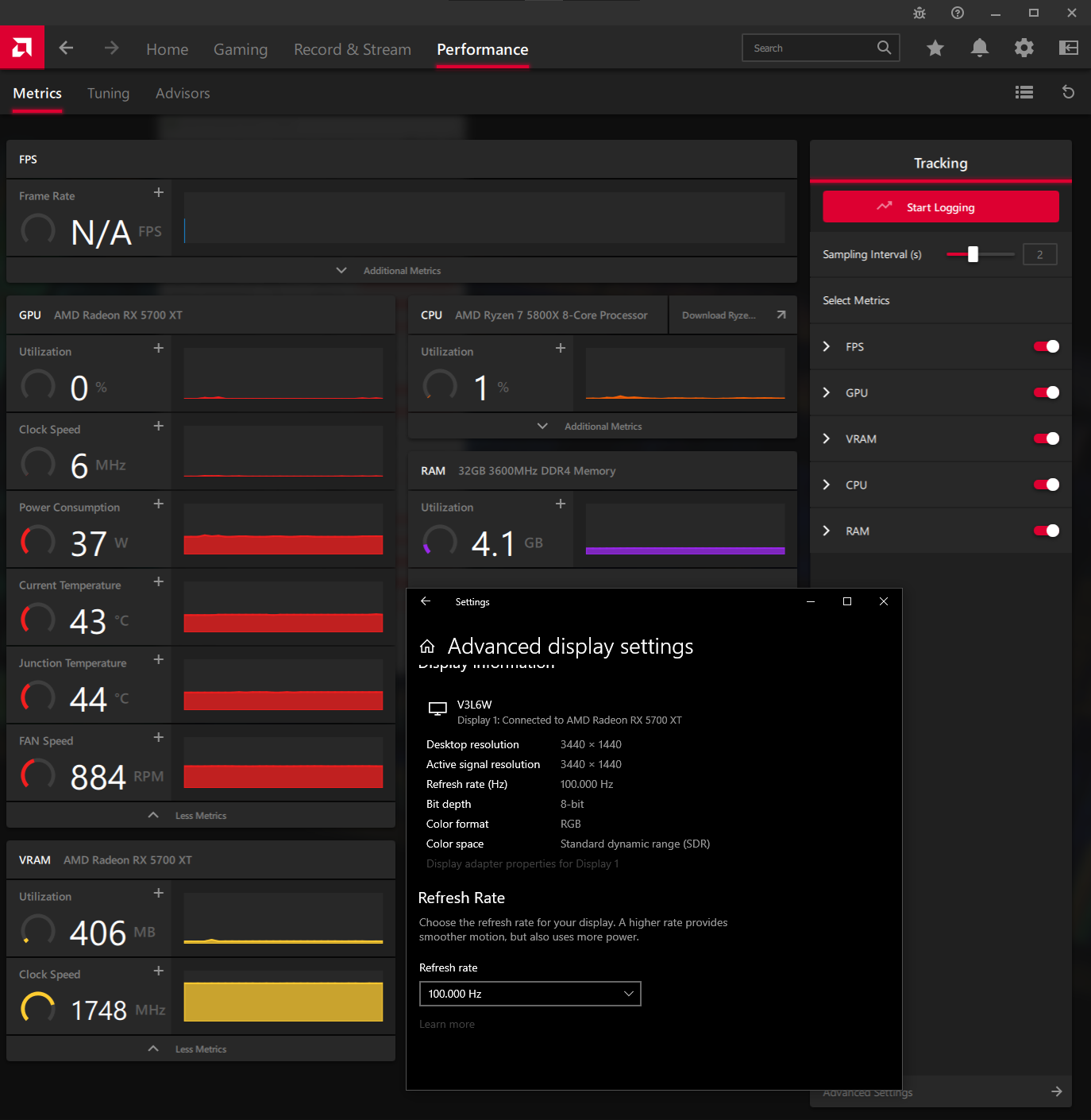

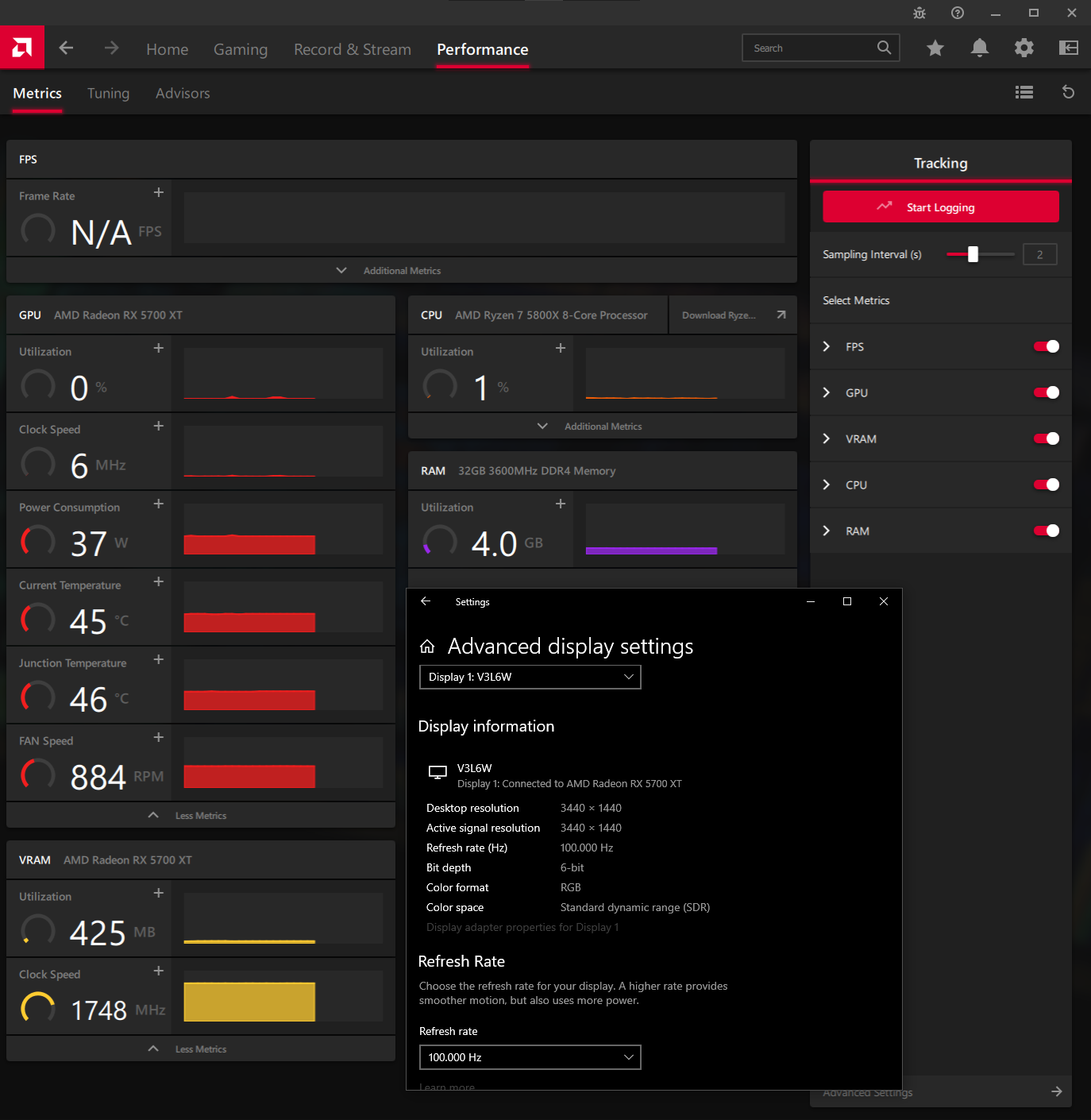

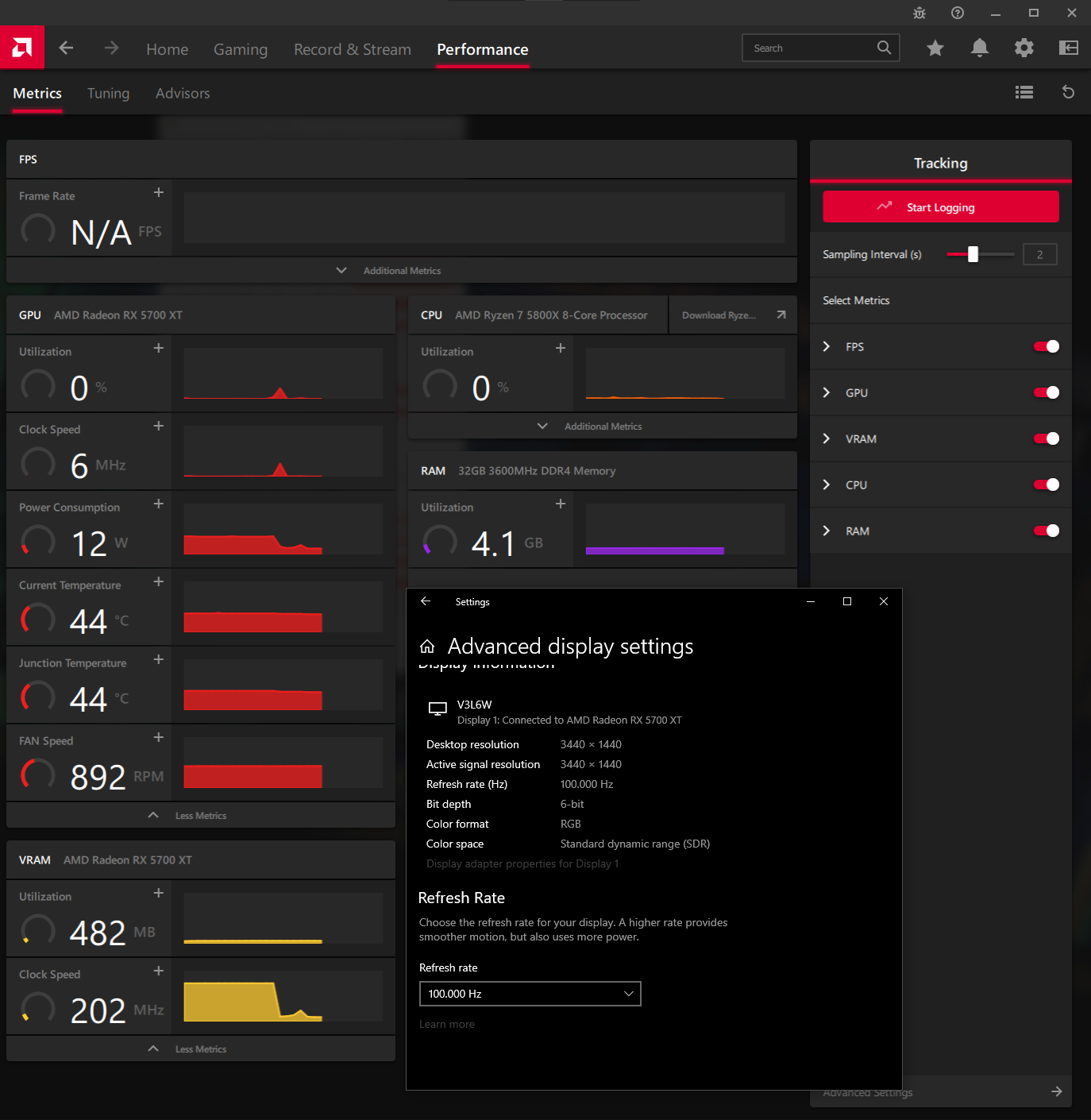

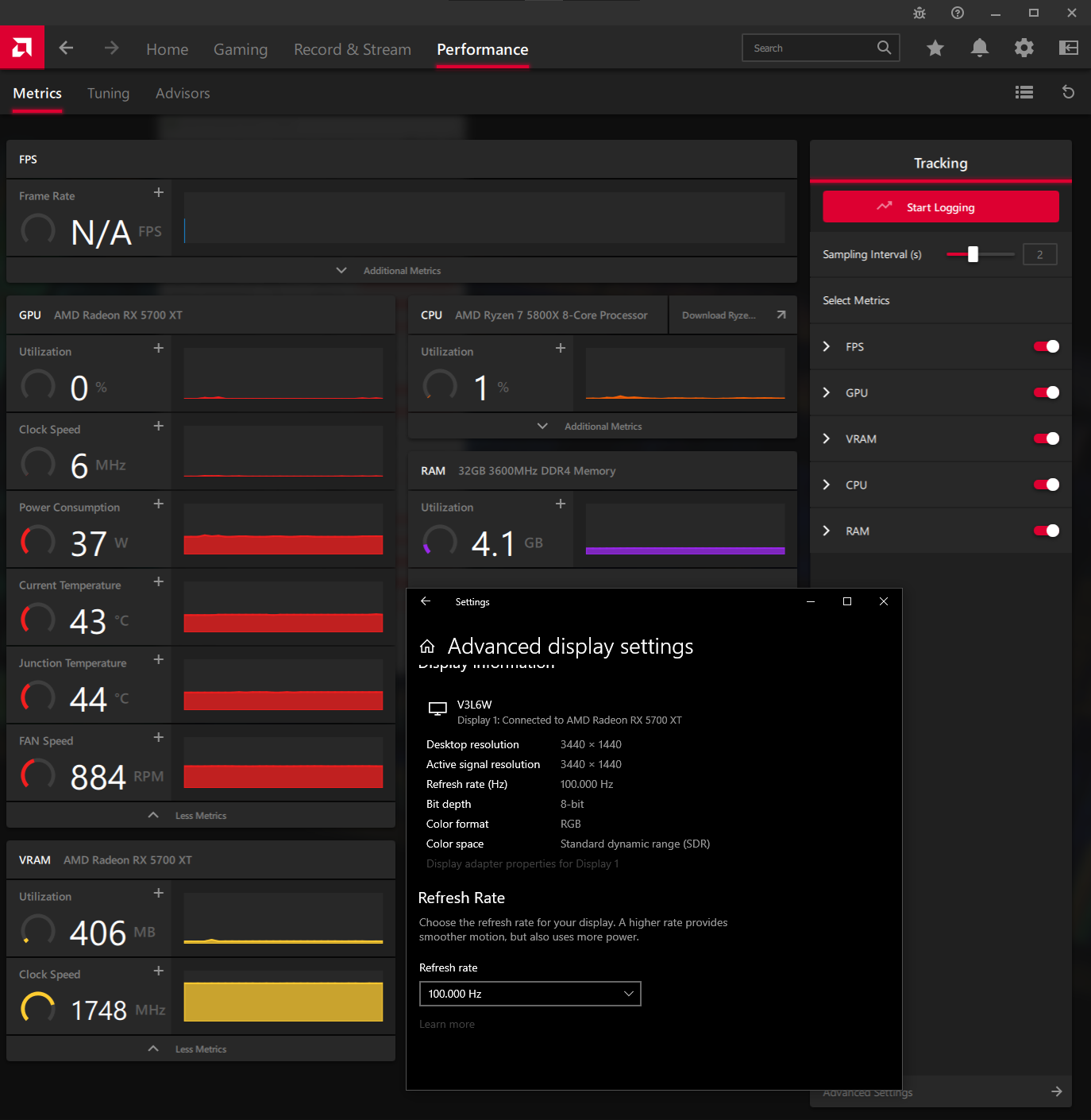

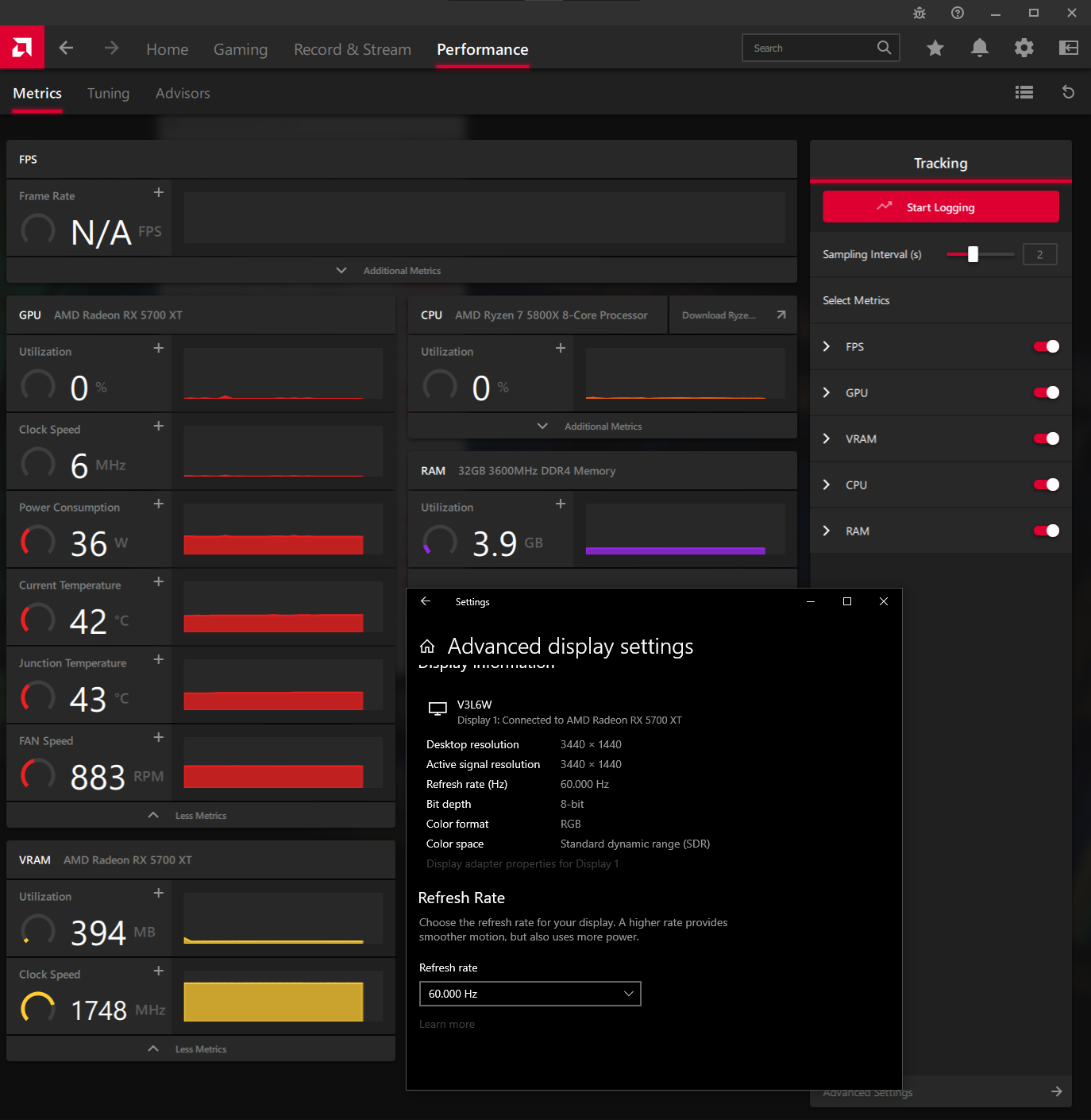

Changing only the color depth from 8bpp to 6bpp made no difference to VRAM clocks at 3440x1440, at either 60Hz or 100Hz.

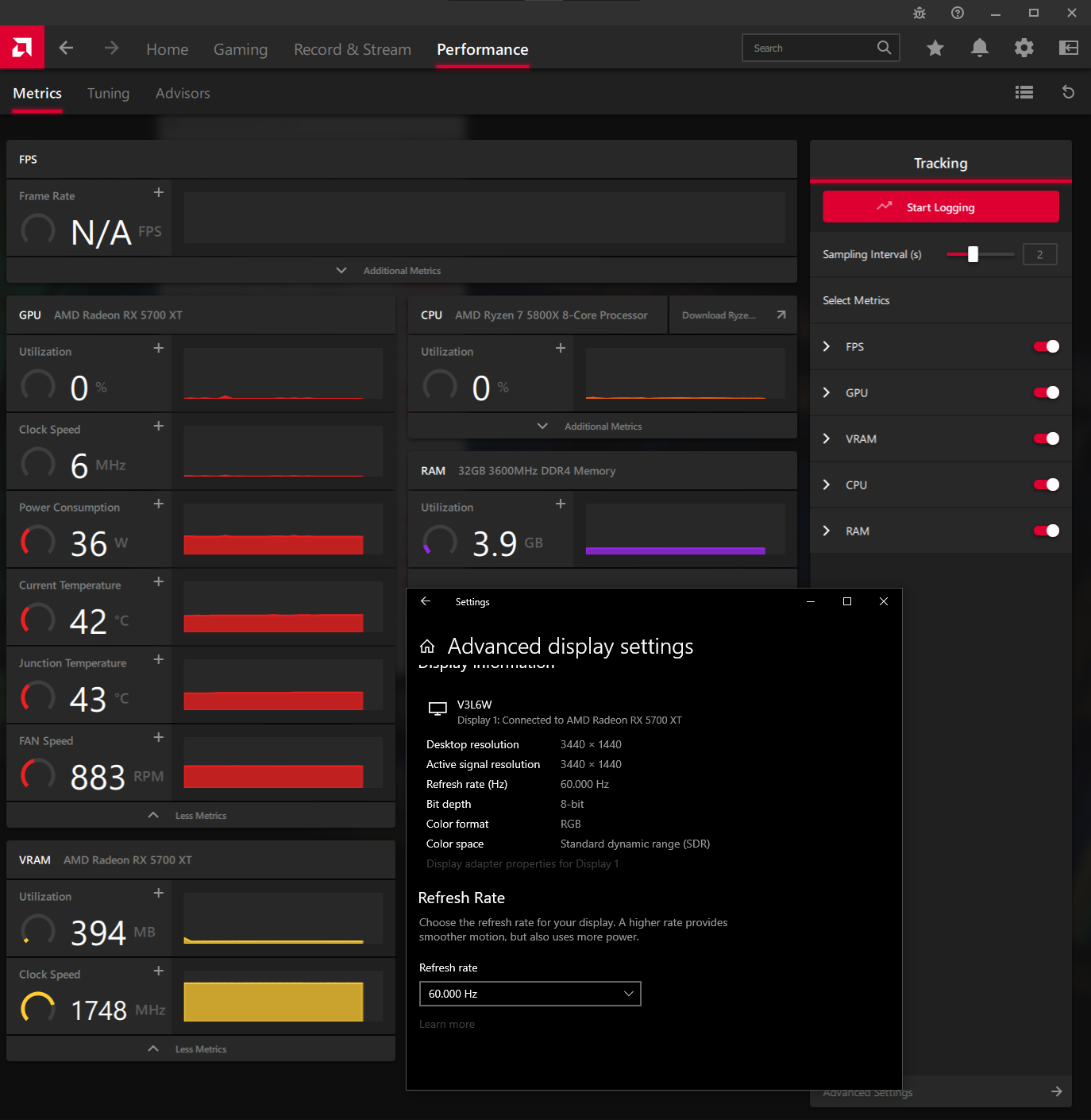

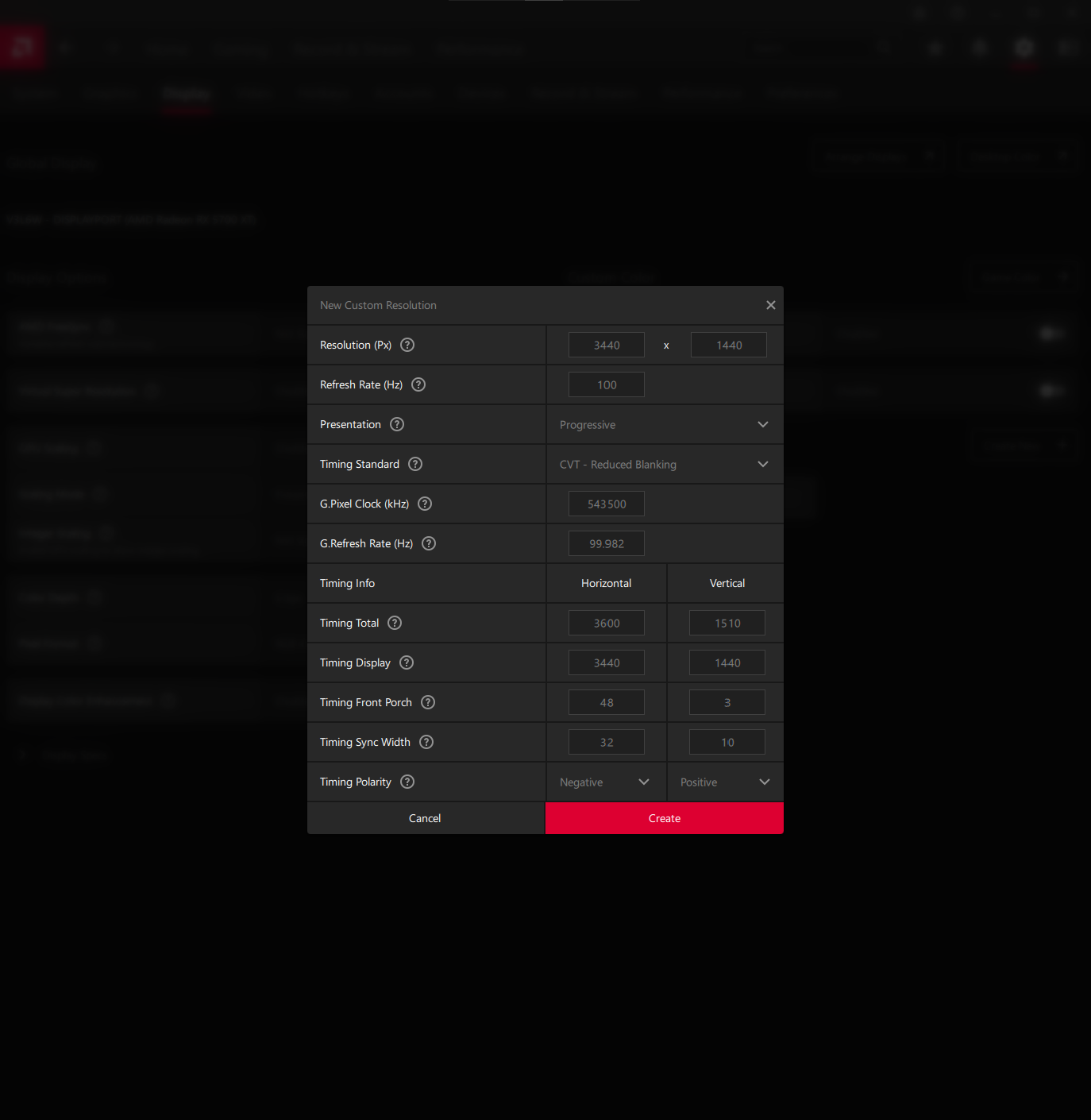

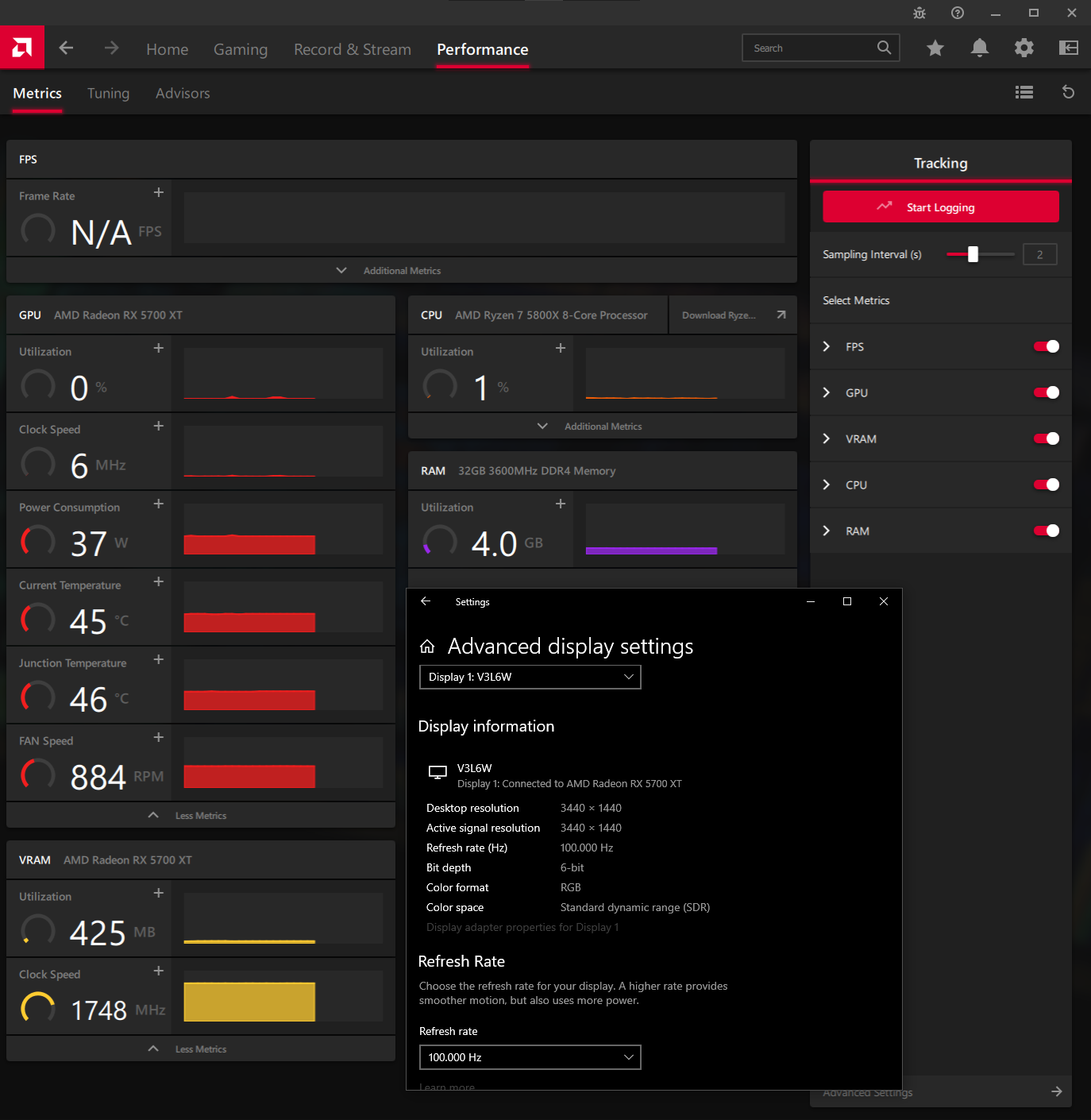

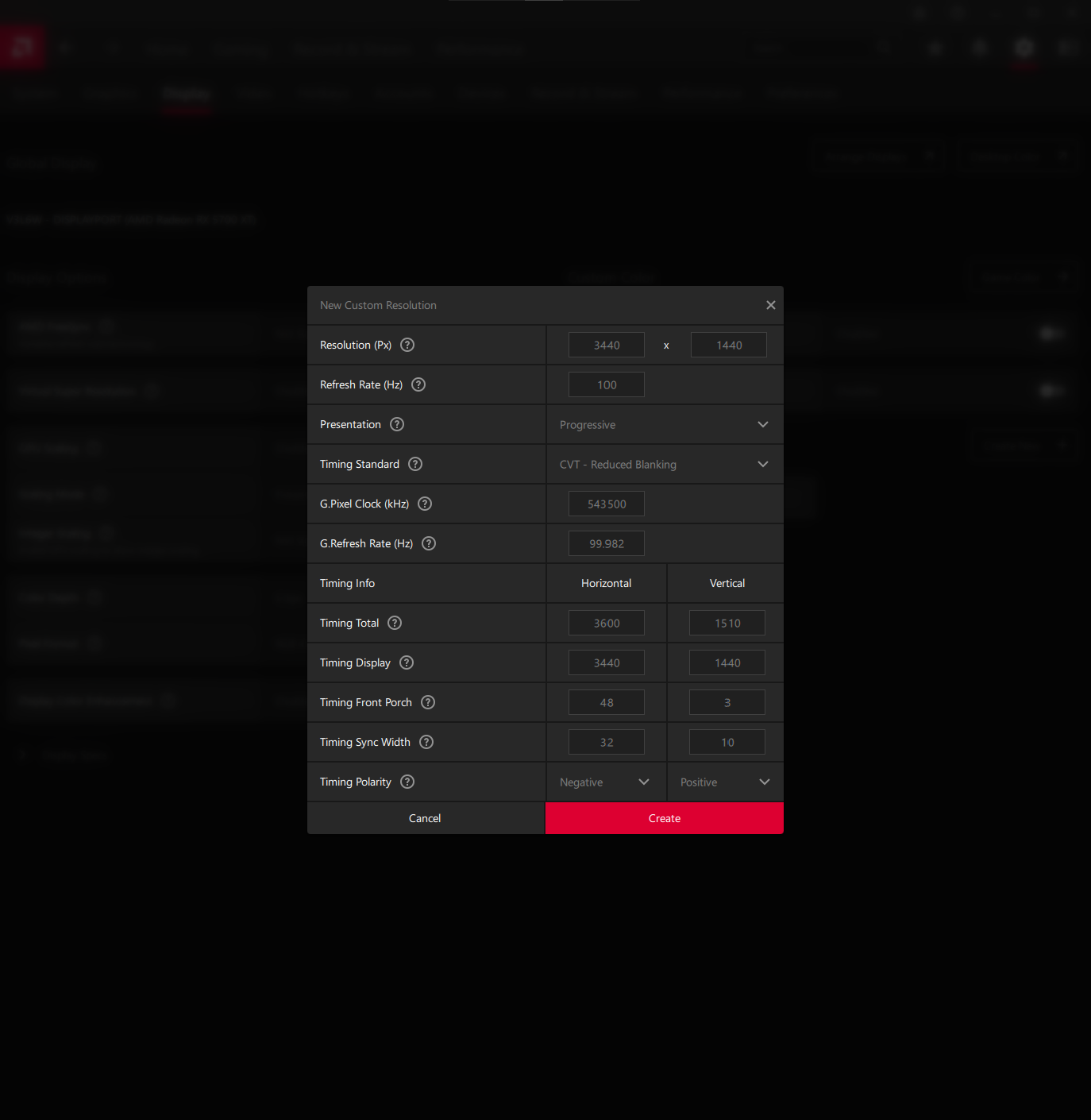

Only with a custom resolution (100Hz, CVT-RB reduced-blanking timings) does my VRAM downclock. However, that locks me to 6bpp.
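For reference, here's a rough sketch of what a CVT-RB custom resolution works out to, assuming the driver follows the VESA CVT v1 reduced-blanking formula (fixed 160-pixel horizontal blank, at least 460 µs of vertical blank, 0.25 MHz clock steps, no margins). The constants, `v_sync=10` for a non-standard aspect ratio, and the function names are my assumptions, not values read out of Radeon Software. It also prints the uncompressed data rate at 8 and 6 bits per color channel (what I've been calling 8bpp/6bpp). Color depth scales the data rate but leaves the pixel clock and blanking untouched.

```python
import math

# Assumed VESA CVT v1 reduced-blanking (CVT-RB) constants.
RB_H_BLANK = 160           # fixed horizontal blanking, pixels
RB_MIN_V_BLANK_US = 460.0  # minimum vertical blanking time, microseconds
RB_V_FPORCH = 3            # vertical front porch, lines
RB_MIN_V_BPORCH = 6        # minimum vertical back porch, lines
CLOCK_STEP_MHZ = 0.25      # pixel clock granularity, MHz


def cvt_rb_timing(h_active, v_active, refresh_hz, v_sync=10):
    """Return (h_total, v_total, pixel_clock_mhz) for progressive CVT-RB, no margins.

    v_sync=10 lines is the CVT value for aspect ratios outside its standard
    list (assumed here for 3440x1440).
    """
    # Estimate the line period from the time left after the minimum vertical blank.
    h_period_est_us = (1e6 / refresh_hz - RB_MIN_V_BLANK_US) / v_active
    # Enough blanking lines to cover the minimum vertical blank time...
    vbi_lines = math.floor(RB_MIN_V_BLANK_US / h_period_est_us) + 1
    # ...but never fewer than front porch + sync + minimum back porch.
    vbi_lines = max(vbi_lines, RB_V_FPORCH + v_sync + RB_MIN_V_BPORCH)
    h_total = h_active + RB_H_BLANK
    v_total = v_active + vbi_lines
    # Round the pixel clock down to the nearest clock step.
    raw_mhz = refresh_hz * h_total * v_total / 1e6
    pixel_clock_mhz = CLOCK_STEP_MHZ * math.floor(raw_mhz / CLOCK_STEP_MHZ)
    return h_total, v_total, pixel_clock_mhz


def vblank_us(h_total, v_total, v_active, pixel_clock_mhz):
    """Duration of the vertical blanking interval in microseconds."""
    return (v_total - v_active) * h_total / pixel_clock_mhz


def data_rate_gbps(pixel_clock_mhz, bits_per_channel):
    """Uncompressed RGB pixel data rate, ignoring link encoding overhead."""
    return pixel_clock_mhz * 3 * bits_per_channel / 1000


if __name__ == "__main__":
    h_total, v_total, clk = cvt_rb_timing(3440, 1440, 100)
    print(f"total {h_total}x{v_total}, pixel clock {clk} MHz")
    print(f"vertical blank {vblank_us(h_total, v_total, 1440, clk):.1f} us")
    for bpc in (8, 6):
        print(f"{bpc} bits/channel: {data_rate_gbps(clk, bpc):.2f} Gbit/s")
```

Under those assumptions, 3440x1440 at 100Hz comes out to a 3600x1510 total with a 543.5 MHz pixel clock, or roughly 464 µs of vertical blanking. That's about 13.04 Gbit/s of pixel data at 8 bits per channel versus 9.78 Gbit/s at 6.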

After doing the comparisons, I found that dithering at 6bpp is especially noticeable on darker and grayscale images; dark grays show a lot of visible dithering.
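I don't know how the driver actually dithers (spatially, temporally, or both), so this is only a generic illustration: 2x2 ordered (Bayer) dithering from an 8-bit level down to 6 bits. An 8-bit gray that falls between two 6-bit steps comes out as a fixed pattern alternating between the two nearest levels. That is the kind of pattern that can show up across flat dark grays. The Bayer matrix choice and the function names are assumptions for illustration.

```python
import math

# 2x2 Bayer threshold matrix (an assumed, generic choice).
BAYER_2X2 = [[0, 2],
             [3, 1]]


def dither_8_to_6(value8, x, y):
    """Quantize an 8-bit level (0-255) to a 6-bit level (0-63) at pixel (x, y)."""
    scaled = value8 * 63 / 255          # position on the 6-bit scale
    base = math.floor(scaled)           # nearest 6-bit level below
    frac = scaled - base                # how far toward the next level
    threshold = (BAYER_2X2[y % 2][x % 2] + 0.5) / 4
    # Round up only at pixels whose threshold is below the fractional part.
    return min(base + (1 if frac > threshold else 0), 63)


def dither_patch(value8, size=4):
    """Dither a flat size x size patch of one 8-bit gray level."""
    return [[dither_8_to_6(value8, x, y) for x in range(size)]
            for y in range(size)]


if __name__ == "__main__":
    for gray in (34, 85):
        print(gray, dither_patch(gray))
```

A flat 8-bit gray of 34 lands at 8.4 on the 6-bit scale, so it becomes a checkerboard of levels 8 and 9. By contrast, 85 maps exactly to level 21 and needs no dithering at all.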

I used Radeon Software for the stats since I'll likely be filing a bug report.

Please let me know how severely flawed my testing methodology is.
