
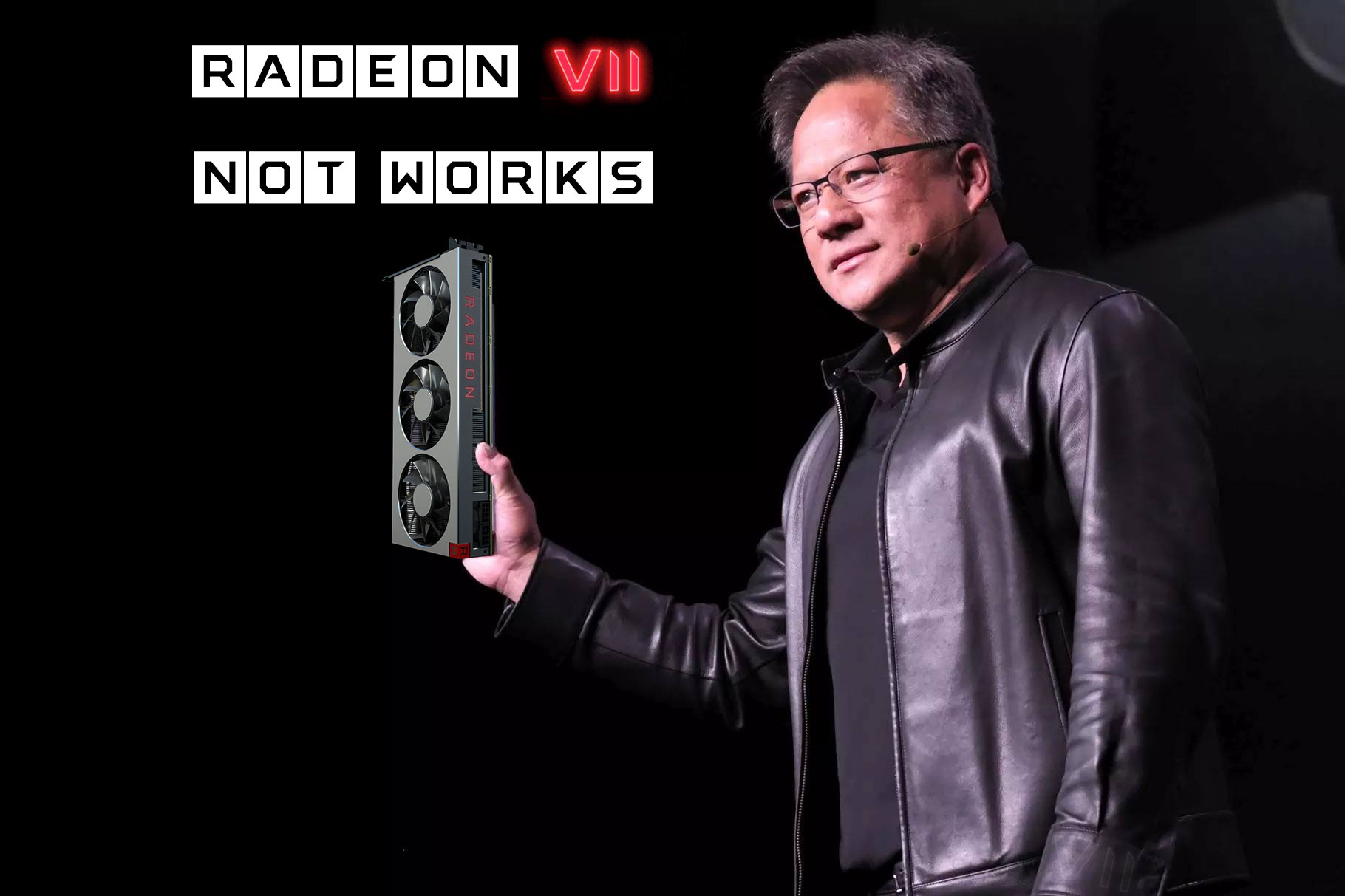

This is a highly undignified response, and it clearly means he's worried.

No doubt, with worse performance per watt than Pascal despite being built on 7nm tech, he is wondering when the competition will turn up.