NVIDIA Announces Financial Results for Fourth Quarter and Fiscal 2017

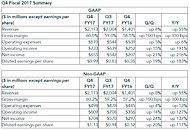

NVIDIA (NASDAQ: NVDA) today reported revenue for the fourth quarter ended January 29, 2017, of $2.17 billion, up 55 percent from $1.40 billion a year earlier, and up 8 percent from $2.00 billion in the previous quarter. GAAP earnings per diluted share for the quarter were $0.99, up 183 percent from $0.35 a year ago and up 19 percent from $0.83 in the previous quarter. Non-GAAP earnings per diluted share were $1.13, up 117 percent from $0.52 a year earlier and up 20 percent from $0.94 in the previous quarter.

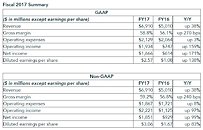

For fiscal 2017, revenue reached a record $6.91 billion, up 38 percent from $5.01 billion a year earlier. GAAP earnings per diluted share were $2.57, up 138 percent from $1.08 a year earlier. Non-GAAP earnings per diluted share were $3.06, up 83 percent from $1.67 a year earlier.

"We had a great finish to a record year, with continued strong growth across all our businesses," said Jen-Hsun Huang, founder and chief executive officer of NVIDIA. "Our GPU computing platform is enjoying rapid adoption in artificial intelligence, cloud computing, gaming, and autonomous vehicles."