Saturday, November 13th 2010

Disable GeForce GTX 580 Power Throttling using GPU-Z

NVIDIA shook the high-end PC hardware industry earlier this month with the surprise launch of its GeForce GTX 580 graphics card, which extended NVIDIA's existing lead in single-GPU performance. It also delivered notable performance-per-Watt improvements over the previous generation. The reference design board, however, uses clock speed throttling logic that reduces clock speeds when an extremely demanding 3D application such as Furmark or OCCT is run. While this is a novel way to protect components and save consumers from potentially permanent hardware damage, it is a gripe for expert users, enthusiasts and overclockers who know what they're doing.

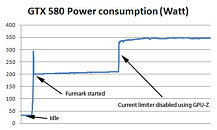

GPU-Z developer and our boss W1zzard has devised a way to make disabling this protection accessible to everyone (who knows what he's dealing with): a nifty new feature for GPU-Z, our popular GPU diagnostics and monitoring utility, that can disable the speed throttling mechanism. It takes the form of a new command-line argument, "/GTX580OCP". Start the GPU-Z executable with that argument (within Windows, from Command Prompt or a shortcut), and it will disable the clock speed throttling mechanism. For example: "X:\gpuz.exe /GTX580OCP". The mechanism stays disabled for the remainder of the session, so you can close GPU-Z afterwards. It will be enabled again on the next boot.

As an obligatory caution, be sure you know what you're doing. TechPowerUp is not responsible for any damage caused to your hardware by disabling that mechanism. Running the graphics card outside of its power specifications may result in damage to the card or motherboard.

We have a test build of GPU-Z (which otherwise carries the exact same feature set as GPU-Z 0.4.8). We also ran a power consumption test on our GeForce GTX 580 card demonstrating how disabling that logic affects power consumption.
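For anyone who would rather script the launch than type it into Command Prompt, here is a minimal Python sketch. Only the "/GTX580OCP" argument and the placeholder path "X:\gpuz.exe" come from the article; the function names are hypothetical, and the sketch assumes the test build is started on Windows with whatever privileges GPU-Z normally needs. The same caution applies: it disables a protection mechanism.

```python
import subprocess

# Flag name taken from the article; everything else here is illustrative.
THROTTLE_OFF_FLAG = "/GTX580OCP"


def build_gpuz_command(exe_path):
    """Return the argument list for starting GPU-Z with the OCP flag."""
    return [exe_path, THROTTLE_OFF_FLAG]


def launch_gpuz(exe_path):
    """Start GPU-Z without waiting for it to exit (intended for Windows)."""
    # Popen returns immediately, so the script does not block on the GUI.
    return subprocess.Popen(build_gpuz_command(exe_path))


if __name__ == "__main__":
    # "X:\gpuz.exe" is the placeholder path used in the article.
    print(" ".join(build_gpuz_command(r"X:\gpuz.exe")))
```

Running the file as-is only prints the command line; call `launch_gpuz` with the real path to the test build to actually start it.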

DOWNLOAD: TechPowerUp GPU-Z GTX 580 OCP Test Build

116 Comments on Disable GeForce GTX 580 Power Throttling using GPU-Z

I was amazed when reviews pointed to lower power consumption and about 10%~20% more in some cases (against the GTX 480)... well, now we all know that's not true!

That is what can happen if you overload the PCI-e slots. Now that was an extreme case of course, but once you start pulling more than 75w through the PCI-E connector things can get hairy pretty quickly.

Is the slot/card not designed to stop it from sending more than 75 watts through it?

But my point is that wouldn't the card limit its power draw to stay within that limit and pull the rest from its power connectors? That would prevent any damage to the mobo and stay PCI-E standards compliant. I don't know if it would, which is why I'm throwing the question out to the community.

However, if you assume pretty even load across all the connectors, 1/4 from the PCI-E slot, 1/4 from the PCI-E 6-pin, and 1/2 from the PCI-E 8-pin, then once the power consumption goes over 300w, the extra will be divided between all the connectors supplying power. I don't believe the power circuits on video cards are smart enough to load certain connectors more than others once the power consumption goes over a certain level.
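To put numbers on that assumption, here is a rough Python sketch. It takes the usual per-connector limits (75 W for the slot, 75 W for a 6-pin, 150 W for an 8-pin) and assumes, as the comment above does, that the card splits its draw in proportion to those limits. That proportional split is an assumption for illustration, not a measured property of the GTX 580, and the function names are made up.

```python
# Rated limits per power source, in watts.
LIMITS_W = {"slot": 75, "6-pin": 75, "8-pin": 150}


def proportional_draw(total_w):
    """Split total board power across sources in proportion to their limits."""
    budget = sum(LIMITS_W.values())  # 300 W for slot + 6-pin + 8-pin
    return {name: total_w * limit / budget for name, limit in LIMITS_W.items()}


def overloaded(total_w):
    """Return how many watts each source is over its limit (if any)."""
    draw = proportional_draw(total_w)
    return {
        name: draw[name] - LIMITS_W[name]
        for name in draw
        if draw[name] > LIMITS_W[name]
    }


if __name__ == "__main__":
    for total in (300, 350):
        print(total, proportional_draw(total), overloaded(total))
```

Under this model a 300 W load sits exactly at every limit, while a 350 W load puts 87.5 W through the slot, which is 12.5 W over what it is rated for.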

W1zz, you wanna give us the definitive answer on this one?

I suspect the tech specs published to manufacturers didn't account for the unusual power consumption under Furmark etc. This wouldn't have been an accident, but rather a deliberate choice to keep costs down on power circuits and cooling.

Do you buy the GTX 580 to play games or to run Furmark?

Took you a long time to reply, but no matter. Since I asked, W1zzard has stated that the card really does react to Furmark and OCCT and, as such, what I asked is now irrelevant.

The temperature of the GTX 580 when playing is very cool and that is what interests me.

Thanks,

PCI-E 1.1 can only give 75W; 2.0 can give more than 75, 150 if I recall right...

Will it work on the HD5870 as well?

75W + 2x75W from 2x 6-pins makes 225W. 8-pins are used if power drain is larger than 225W.

A PCI-E with 150W from slot could get to 350+ with the extra 8-pins, which is not the case.

putting what i said in simpler terms:

Drawing more than 75W from the slot won't magically turn the slot off, or anything else like that... if the card has no internal mechanism to deal with the power draw, the wiring feeding the slot will just start to overheat, and bad things can happen.

www.pcisig.com/developers/main/training_materials/get_document?doc_id=b590ba08170074a537626a7a601aa04b52bc3fec

Page 38 is the important one, establishing how much power can be drawn from where; the slot is still limited to 75w.

I'm sure that if a 600W power budget (with appropriate cooling) was available, some excellent performance gains could be achieved, like double or more performance and extra rendering features.