Monday, March 16th 2015

NVIDIA GeForce GTX TITAN-X Specs Revealed

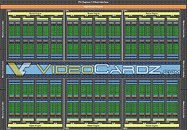

NVIDIA's GeForce GTX TITAN-X, unveiled last week at GDC 2015, is shaping up to be a beast, at least on paper. According to a leaked architecture block diagram of the GM200 silicon, the GTX TITAN-X appears to max out every component available on the 28 nm GM200 chip on which it is based. While keeping the same basic component hierarchy as the GM204, the GM200 (and thus the GTX TITAN-X) features six graphics processing clusters (GPCs), holding a total of 3,072 CUDA cores based on the "Maxwell" architecture.
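To see how six clusters add up to 3,072 cores, here is a small arithmetic sketch. It assumes the Maxwell layout used in GM204 carries over unchanged: 4 SMM units per GPC and 128 CUDA cores per SMM. Neither figure is stated in the leak itself, so treat them as assumptions. The helper `cuda_cores` is hypothetical, written only for illustration.

```python
def cuda_cores(gpcs: int, smms_per_gpc: int = 4, cores_per_smm: int = 128) -> int:
    """Total CUDA cores for a Maxwell-style GPU.

    Assumes (not from the leak) 4 SMMs per GPC and 128 CUDA cores per SMM,
    as in the GM204 hierarchy the article says GM200 shares.
    """
    return gpcs * smms_per_gpc * cores_per_smm


print(cuda_cores(4))  # GM204 (4 GPCs): 2048
print(cuda_cores(6))  # GM200 (6 GPCs): 3072
```

Under these assumptions, GM200 is simply GM204's hierarchy scaled from four clusters to six.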

With "Maxwell" GPUs, the TMU count is derived as CUDA core count / 16, giving a count of 192 TMUs. Other specs include 96 ROPs and a 384-bit wide GDDR5 memory interface holding 12 GB of memory, made up of 24x 4 Gb memory chips. The core is reportedly clocked at 1002 MHz, with a GPU Boost frequency of 1089 MHz. The memory is clocked at 7012 MHz (GDDR5-effective), yielding a memory bandwidth of 336 GB/s. NVIDIA will use a lossless texture-compression technology to improve bandwidth utilization. The chip's TDP is rated at 250W. The card draws power from a combination of 6-pin and 8-pin PCIe power connectors. Display outputs include three DisplayPort 1.2, one HDMI 2.0, and one dual-link DVI.
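The derived figures above follow from simple arithmetic. The sketch below reproduces them from the reported inputs: 3,072 cores, 24 chips of 4 Gb each, a 384-bit bus and 7012 MT/s effective GDDR5. The function names are hypothetical and serve only to illustrate the calculation.

```python
def tmu_count(cuda_cores: int) -> int:
    # Maxwell rule of thumb from the article: TMUs = CUDA cores / 16
    return cuda_cores // 16


def memory_capacity_gb(chips: int, gbit_per_chip: int) -> float:
    # Total gigabits across all chips, divided by 8 bits per byte
    return chips * gbit_per_chip / 8


def memory_bandwidth_gbs(bus_width_bits: int, effective_mts: float) -> float:
    # Bytes moved per transfer (bus width / 8) times transfers per second,
    # with MT/s converted to GB/s by dividing by 1000
    return bus_width_bits / 8 * effective_mts / 1000


print(tmu_count(3072))                             # 192
print(memory_capacity_gb(24, 4))                   # 12.0
print(f"{memory_bandwidth_gbs(384, 7012):.1f} GB/s")  # 336.6 GB/s
```

The exact bandwidth comes out to about 336.6 GB/s. The quoted 336 GB/s is that value rounded down.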

Source: VideoCardz


55 Comments on NVIDIA GeForce GTX TITAN-X Specs Revealed

GeForce GTX TITAN Z

GeForce® GTX™ TITAN Z is a gaming monster, the fastest graphics card we’ve built to power the most extreme PC gaming rigs on the planet. Stacked with 5760 cores and 12 GB of memory, this dual GPU gives you the power to drive even the most insane multi-monitor displays and 4K hyper PC machines.

www.geforce.com/hardware/desktop-gpus/geforce-gtx-titan-z

8GB, 375W TDP, 1050MHz clock, 1.28TB/s of bandwidth..... There really isn't going to be a contest unless it's a ton more expensive (Titan-like) and/or that extra TDP (or AMD cards in general) really bothers someone. I could see stock performance being damn close to the typical overclocked performance of such a cut-down GM200 in some cases. IOW, it's going to out-Titan Titan in that form. While it may or may not clock as high, it has a more efficient (in both size and type) memory buffer. On top of whatever engine clocks the extra TDP buys, bandwidth will add performance. Going from 4->8GB (or, more importantly, from 640GB/s->1.28TB/s) should add around 16% just by itself...

Crazy pants. I really never thought we'd see a true 'battle of the titans'. I hope they give it a different name than '390x 8GB.'

edit: Just realized the slide says '1TB is up ahead', and the '2x1GB' diagram probably means the dual-link likely won't increase bandwidth... probably still 'only' 640GB/s. It should still compete well with both 'GM200 Gaming' and Titan... I think they'll end up being really close (assuming such a GM200 does around 1400 and Fiji can do 1200+ like many 28nm chips [perhaps partially thanks to Carrizo-style voltage/clock voodoo vs. something like Hawaii]), plus or minus how clocks turn out (for said GM200 part as well as the 300W and 375W Fiji flavors). 8GB is still nice for CrossFire/4K, and the extra power pretty much guarantees its ability to stack up well against Titan.

(I think it's time to get some sleep so my reading comprehension improves beyond skimming the actual important information of said slides and relying on reporters' boldface spec tables).

I still personally want to see this out in public. We are going to have a war on our hands this round, and the question is: which choice is going to lead to victory?

Here, you can share mine. I am sure the real show will begin soon enough!

Nvidia doesn't want someone hacking the drivers and enabling it (at least as a proof of concept) on their cards to prove that G-Sync is a scam. That's why even the 900 series doesn't have DisplayPort 1.2a.

I don't know how long they can keep the charade going, though.

There is no more I can ask for.

Oh well, I'm just waiting for W1zz's review of this card, it goes live at midnight I assume? Pretty sure he'll also have an SLI review as well.

Can't wait to try these cards myself :toast:

If the prices are quite similar, is there a problem?

Look, I'm not here to start an argument. I've been blessed enough to be able to afford the best cards from both camps, but I understand most people only have one choice, and just like when you buy a car, you feel the need to justify your investment in a particular brand.

What I don't get is how people can be so passionate, blindly defending certain brands when obviously all big corporations are out to grab your hard-earned money...

Oh well, at least it's entertaining :toast:

Over 800 for a 4K 27"? Ain't gonna happen lol

32" for that price is more reasonable. And I assume they're all TN panels. Definitely forget it.

The cheapest 1080p G-Sync on this retailer is £330. Unfortunately no 1080p Free-Sync to compare. Another retailer has a 1440p 28" Acer G-Sync at £510 (£80 more than their equiv Free-Sync).

Either way, I have little interest in G/Free sync for now as I may go AMD or Nvidia next round so I don't want a redundant monitor choice.

EDIT: NPU has news - looks like the 390X is $700-1000. Sony Xperia won't be happy. www.nextpowerup.com/news/19232/amd-r9-390x-vs-r9-290x-benchmark-scores-and-peformance-leaked-r9-390x-is-60-faster-than-r9-290x.html


You guys get shafted on component pricing it seems. Not like the Aussies, though lol

Well, the new leaks on the 390X are cool indeed. If it's 60% faster than the 290X, and Titan is 50% faster than the 980, tally it against this:

And you'll get a heat. If an 8GB 390X is $1000 and a 12GB Titan is $1000 and both pull 250 watts, this will be a fucking awesome round of cards :rockout:

No one has called them out on DP specs, though.
