Tuesday, August 23rd 2016

Samsung Bets on GDDR6 for 2018 Rollout

Even as its fellow Korean DRAM maker SK Hynix is pushing for HBM3 to bring 2 TB/s memory bandwidths to graphics cards, Samsung is betting on relatively inexpensive standards that succeed existing ones. The company hopes GDDR6, the memory standard that succeeds GDDR5X, will arrive by 2018.

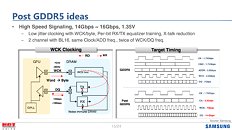

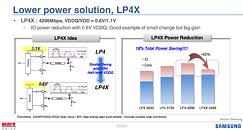

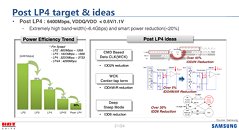

GDDR6 will serve up per-pin data rates of up to 16 Gbps, up from the 10 Gbps currently offered by GDDR5X. This should enable memory bandwidths of 512 GB/s over a 256-bit wide memory interface, and 768 GB/s over 384-bit. The biggest innovation with GDDR6 that sets it apart from GDDR5X is LP4X, a method with which the memory controller can more responsively keep voltages proportionate to clocks, reducing power draw by up to 20% over the previous standard.
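The bandwidth figures follow from simple arithmetic: per-pin data rate times bus width, divided by 8 bits per byte. The sketch below is only an illustration of that calculation; the function name `peak_bandwidth_gb_s` is made up for this example, and the results are theoretical peaks rather than measured throughput.

```python
def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    data_rate_gbps: per-pin data rate in gigabits per second
    bus_width_bits: width of the memory interface in bits
    """
    # Each pin moves data_rate_gbps gigabits per second; 8 bits make a byte.
    return data_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    # Data rates quoted in the article: 10 Gbps (GDDR5X) and 16 Gbps (GDDR6).
    for label, rate in (("GDDR5X", 10), ("GDDR6", 16)):
        for width in (256, 384):
            bandwidth = peak_bandwidth_gb_s(rate, width)
            print(f"{label} at {rate} Gbps, {width}-bit: {bandwidth:.0f} GB/s")
```

By the same formula, GDDR5X at 10 Gbps works out to 320 GB/s over 256-bit and 480 GB/s over 384-bit.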

Source:

ComputerBase.de

57 Comments on Samsung Bets on GDDR6 for 2018 Rollout

Cards. Need. More. Bandwidth. NOW. Look at the pathetic gains received from overclocking the 1080's core by upwards of 20%, and the massive gains that come from overclocking just the memory on the RX 480.

Do people just cry their fanboy opinions without actually stopping and thinking? Never mind, the answer to this is obvious - it's the internet after all.

So yea, every business cares about profits, but they also have to care about the customer(s). I care about both.

With that said, I'm waiting for HBM6 before I jump.

If they can get that performance outta GDDR6, I can see Nvidia skipping HBM for that as it would remove 1 possible step of creating a waste.

HBM 2 isn't limited to 4GB, but it's still in limited supply and costs more, so NV only used it in their pro computing products... HBM 2 is supposed to be in better supply by the end of this year or early next year, that's why both NV and AMD postponed its use until next year.

nVidia seems to be able to develop 3 different chips in parallel, and with serious architectural changes from project to project.

AMD is limited to rolling out in one segment at a time and is doing rather small changes to existing architecture.

Volta is expected in 2017 and it might be to Pascal what Maxwell was to Kepler.

Meanwhile AMD is merely competitive in low range, but even that might evaporate in 2017.

We might end up with AMD not being able to compete in any segment in 2017, and if so, it will be game over.

Except it DOES use HBM2 with GP100 chip.

And if you were wondering about something else, namely:

Q: Why did AMD bother with HBM in Fury?

A: Because, being an underdog in a rather desperate position, they need to gamble on new tech.

Q: Why do nVidia cards normally need less bandwidth than AMD cards?

A: Compression on nVidia cards is said to be more effective (although AMD should be closing the gap with Polaris). Architectural differences (yeah, a vague statement, I know) and more effective use of cache might also play a role.

I guess you are comparing 450$ chip (1070) to 330$ chip (970), makes a lot of sense.

I doubt the "not found on Maxwell" part.

Yeah, "companies make money" = "all companies get as low as nVidia, after all, they also make money", very logical statement.

Bump was about 20%, you claimed twice that.

AIB 980Ti's are more than competitive vs 1070.

GTX 1070 over GTX 970 gain

at 1440p 38% faster

at 4K 40% faster

www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1070/24.html

GTX 1080 over GTX 980 gain

at 1440p 40% faster

at 4K 41% faster

www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1080/26.html

Pascal Titan X over Maxwell Titan X gain

at 1440p 40% faster

at 4K 42% faster

www.techpowerup.com/reviews/NVIDIA/Titan_X_Pascal/24.html

Comparing 450 Euro 1070 to 330 Euro 970 is ridiculous.

Same goes for the 1080, which is even more expensive than the 980Ti was.

Oh, and here is a site where they know how %'s work, hover at will:

www.computerbase.de/2016-08/msi-geforce-gtx-1060-oder-sapphire-radeon-rx-480/2/#diagramm-performancerating-1920-1080

AIB 1070 is 23-24% faster than 980 (1440p/1080p)

AIB 1080 is 19-20% faster than 980Ti (1440p/1080p), stock is only 10% faster

Oh wait, we do have comparably priced products, 980 vs 1070, 980Ti vs 1080.

But let's compare 1070 vs 970 and 1080 vs 980, to get bogus higher "improvement" numbers, shall we.