Thursday, August 22nd 2019

AMD CEO Lisa Su: "CrossFire Isn't a Significant Focus"

AMD CEO Lisa Su answered some questions from the attending press at the Hot Chips conference. One of these regarded AMD's stance on CrossFire and whether or not it remains a focus for the company. CrossFire was once the poster child for a scalable consumer graphics future, with AMD even going as far as enabling mixed-GPU support (with debatable merits). Lisa Su came out and said what we have all been seeing happen in the background: "To be honest, the software is going faster than the hardware, I would say that CrossFire isn't a significant focus".

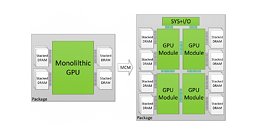

There isn't anything really new here; we've all seen the consumer GPU trends as of late, with CrossFire barely deserving of mention (and the NVIDIA camp treats its SLI technology the same way, having cut it from all but the higher-tier graphics cards). Support seems to be enabled as more of an afterthought than a "focus", and that's just the way things are. It seems that the old practice of buying a lower-tier GPU at launch and then adding a second graphics processor further down the line to leapfrog the performance of higher-end single-GPU solutions is going the way of the proverbial dodo - at least until an MCM (Multi-Chip-Module) approach sees the light of day, paired with a hardware syncing solution that does away with the software side of things. A true, integrated, software-blind multi-GPU solution composed of two or more smaller dies, rather than a single monolithic one, seems to be the way to go. We'll see.

Source: TweakTown


88 Comments on AMD CEO Lisa Su: "CrossFire Isn't a Significant Focus"

In fact, it shouldn't be that difficult to have socketed GPUs, too. Perhaps, given the insanely-high cost of high-end GPUs these days, it's time to start demanding more instead of passively accepting the disposable GPU model. If a GPU board has a strong VRM system and is well-made, why replace that instead of upgrading the chip?

Personally, I'd like to see GPUs move to the motherboard and the ATX standard be replaced with a modern one that's efficient to cool. A vapor chamber that can cool both the CPU and the GPU could be nice (and the chipset — 40mm fans in 2019, really?). A unified VRM design would be a lot more efficient.

It's amusing that people balk at buying a $1000 motherboard but seem not to notice the strangeness of disposing of a $1200 GPU rather than being able to upgrade it.

And I'm with ya on multi gpu in general - you know, it's never been a technology that's for folks on a budget or looking for perfect scaling. We know we're not getting 100% scaling, it just comes down to wanting a certain level of image quality or framerates, etc. and knowing we can't get it with a single GPU.

I know not everyone is a fan and some folks have reported bad experiences, but IMO, as someone who has run the technology for years and years, I've had a great experience with it. Sure hope more developers make use of mGPU.

Here you have a 35-game sample

www.techspot.com/review/1701-geforce-rtx-2080/page2.html,

www.techspot.com/article/1702-geforce-rtx-2080-mega-benchmark/,

from Techspot (known as HardwareUnboxed on YouTube, which is a reference when it comes to GPU reviews). Across those 35 games at 4K, the 2080 Ti averages 92 fps, with 73.31 fps for the 1% lows; furthermore, there are only 4 games out of 35 where the 2080 Ti doesn't hit 60 fps (and it is still above 50 fps). When you compare against the review you provided, there are plenty of weird results, such as GTA 5: GpuCheck 59 fps vs Techspot 122 fps!

Keep in mind this is a stock 2080 Ti; with a memory OC (more important at 4K) you are guaranteed to hit over 60 fps in all those games... so yeah, come again and tell me about those unplayable games. Sure, there might be some poorly optimized games here and there, but other than that you have to be out of your mind to believe that a 2080 Ti can't drive 4K/60!

www.techpowerup.com/review/nvidia-geforce-gtx-460-sli/25.html

So, a reeeaallly expensive GPU that has zero competition in the market... Sounds like a recipe for a bargain, not the situation one is in when there is a monopoly that raises prices artificially.

(I've told people before that it's in Nvidia's interest to get rid of multi-GPU as long as AMD isn't competing at the high end. Since AMD is competing against the PC gaming platform by peddling console hardware it also doesn't have as much incentive to compete at the high end. Letting Nvidia increase prices helps it to peddle its midrange hardware at a price premium, undercutting Nvidia's higher premium. But, why not cheerlead for the situation where we can have any color we want as long as it's black?)

Sell a kidney to play at 4K or stick with 1440. Who needs dual GPU these days? Most everyone's got two kidneys.

(Given AMD's success with Zen 2 chiplets, I would expect that the future is going to be multi-GPU, only the extra GPU chips will be chiplets.)

It's OK, I get it, bashing Nvidia for no reason will never get old for some people! I've been playing in 4K since 2014. I started with an R9 290, then moved to R9 290 CF, then to a single GTX 970 (because of heat and noise, and because one of my 290s died on me), then to a GTX 1060, and recently to a GTX 1080 Ti, which can handle anything I throw at it at 4K/60. All the GPUs I named prior to the 1080 Ti were perfectly able to play in 4K. Obviously you sometimes had to lower some settings depending on the game and the GPU, but with very minimal impact on visual quality (in most games there is barely any difference between Ultra and High). Nowadays GPUs like the GTX 1080 can be found dirt cheap and are perfectly able to handle 4K, assuming you are not one of those "ultra everything" elitists and are smart enough to optimise your game settings to make the most of your hardware!

So to answer your question: no, people don't need to sell a kidney to play at 4K, they just need to buy a brain! I don't know who needs dual GPU these days, but what I do know is that if you value silence, thermals and power consumption, there is absolutely no need to go dual GPU for 4K gaming, especially nowadays. That's a reasonable expectation and a much more elegant solution (in terms of architecture / noise / thermals) than dual discrete GPU systems!